Lawyers and High School Students Cut Corners

March 6, 2025

Cost-cutting lawyers beware: using AI in your practice may make it tough to buy a new BMW this quarter. TechSpot reports, "Lawyer Faces $15,000 Fine for Using Fake AI-Generated Cases in Court Filing." Writer Rob Thubron tells us:

"When representing HooserVac LLC in a lawsuit over its retirement fund in October 2024, Indiana attorney Rafael Ramirez included case citations in three separate briefs. The court could not locate these cases as they had been fabricated by ChatGPT."

Yes, ChatGPT completely invented precedents to support Ramirez’ case. Unsurprisingly, the court took issue with this:

"In December, US Magistrate Judge for the Southern District of Indiana Mark J. Dinsmore ordered Ramirez to appear in court and show cause as to why he shouldn’t be sanctioned for the errors. ‘Transposing numbers in a citation, getting the date wrong, or misspelling a party’s name is an error,’ the judge wrote. ‘Citing to a case that simply does not exist is something else altogether. Mr Ramirez offers no hint of an explanation for how a case citation made up out of whole cloth ended up in his brief. The most obvious explanation is that Mr Ramirez used an AI-generative tool to aid in drafting his brief and failed to check the citations therein before filing it.’ Ramirez admitted that he used generative AI, but insisted he did not realize the cases weren’t real as he was unaware that AI could generate fictitious cases and citations."

Unaware? Perhaps he had not heard about the similar case in 2023. Then again, maybe he had. Ramirez told the court he had tried to verify the cases were real—by asking ChatGPT itself (which replied in the affirmative). But that query falls woefully short of the due diligence required by Federal Rule of Civil Procedure 11, Thubron notes. As the judge who ultimately did sanction the firm observed, Ramirez would have noticed the cases were fiction had his attempt to verify them ventured beyond the ChatGPT UI.

For his negligence, Ramirez may face disciplinary action beyond the $15,000 in fines. We are told he continues to use AI tools, but has taken courses on their responsible use in the practice of law. Perhaps he should have done that before building a case on a chatbot’s hallucinations.

Cynthia Murrell, March 6, 2025

Sergey Says: Work Like It Was 1975 at McKinsey or Booz, Allen

March 6, 2025

Yep, another dinobaby original.

Sergey Brin, invigorated with his work at the Google on smart software, has provided some management and work life tips to today’s job hunters and aspiring Alphabet employees. In “In Leaked Memo to Google’s AI Workers, Sergey Brin Says 60 hours a Week Is the Sweet Spot and Doing the Bare Minimum Can Demoralize Peers,” Mr. Brin offers his view of sage management and career advice. (I do want to point out that the write up does not reference the work ethic and other related interactions of the Google Glass marketing team. My view of this facet of Mr. Brin’s contributions suggests that it is tough to put in 60 hours a week while an employee is ensconced in the Stanford Medical psychiatric ward. But that’s water under the bridge, so let’s move on to the current wisdom.)

The write up reports:

Sergey Brin believes Google can win the race to artificial general intelligence and outlined his ideas for how to do that—including a workweek that’s 50% longer than the standard 40 hours.

Presumably working harder will allow Google to avoid cheese mishaps related to pizza and Super Bowl advertising. Harder working Googlers will allow the company to avoid the missteps which have allowed unenlightened regulators in the European Union and the US to find the company exercising behavior which is not in the best interest of the service’s “users.”

The write up says:

“A number of folks work less than 60 hours and a small number put in the bare minimum to get by,” Brin wrote on Wednesday. “This last group is not only unproductive but also can be highly demoralizing to everyone else.”

I wonder if a consistent, documented method for reviewing the work of employees would allow management to offer training, counseling, or incentives to get the mavericks back in the herd.

The protests, the allegations of erratic punitive actions like firing people who use words like “stochastic”, and the fact that the 60-hour information comes from a leaked memo — each of these incidents suggests that the management of the Google may have some work to do. You know, that old nosce teipsum stuff.

The Fortune write up winds down with this statement:

Last year, he acknowledged that he “kind of came out of retirement just because the trajectory of AI is so exciting.” That also coincided with some high-profile gaffes in Gemini’s AI, including an image generator that produced racially diverse Na#is. [Editor note: Member of a German affiliation group in the 1930s and 1940s. I have to avoid the Google stop words list.]

And the cheese, the Google Glass marketing tours, and so much more.

Stephen E Arnold, March 6, 2025

Shocker! Students Use AI and Engage in Sex, Drugs, and Rock and Roll

March 5, 2025

The work of a real, live dinobaby. Sorry, no smart software involved. Whuff, whuff. That’s the sound of my swishing dino tail. Whuff.

I read “Surge in UK University Students Using AI to Complete Work.” The write up says:

The number of UK undergraduate students using artificial intelligence to help them complete their studies has surged over the past 12 months, raising questions about how universities assess their work. More than nine out of 10 students are now using AI in some form, compared with two-thirds a year ago…

I understand the need to create “real” news; however, the information did not surprise me. But the weird orange newspaper tosses in this observation:

Experts warned that the sheer speed of take-up of AI among undergraduates required universities to rapidly develop policies to give students clarity on acceptable uses of the technology.

As a purely practical matter, information has crossed my desk about professors cranking out papers, whether for peer review or for the ever-popular gray literature consumers, that are not reproducible, contain data which have been shaped like a kindergartener’s clay animal, and link to pals who engage in citation boosting.

Plus, students who use Microsoft have a tough time escaping the often inept outputs of the Redmond crowd. A Google user is no longer certain what information is created by a semi reputable human or a cheese-crazed Google system. Emails write themselves. Message systems suggest emojis. Agentic AIs take care of mum’s and pop’s questions about life at the uni.

The topper for me was the inclusion in the cited article of this statement:

it was almost unheard of to see such rapid changes in student behavior…

Did this fellow miss drinking, drugs, staying up late, and sex on campus? How fast did those innovations take to sweep through the student body?

I liked the note of optimism at the end of the write up. Check this:

Janice Kay, a director of a higher education consulting firm: “There is little evidence here that AI tools are being misused to cheat and play the system. [But] there are quite a lot of signs that will pose serious challenges for learners, teachers and institutions and these will need to be addressed as higher education transforms,” she added.

That’s encouraging. The academic research crowd does one thing, and I am to assume that students will do everything the old-fashioned way. When you figure out how to remove smart software from online systems and local installations of smart helpers, let me know. Fix up AI usage and then turn one’s attention to changing student behavior in the drinking, sex, and drug departments too.

Good luck.

Stephen E Arnold, March 5, 2025

Mathematics Is Going to Be Quite Effective, Citizen

March 5, 2025

This blog post is the work of a real-live dinobaby. No smart software involved.

The future of AI is becoming more clear: Get enough people doing something, gather data, and predict what humans will do. What if an individual does not want to go with the behavior of the aggregate? The answer is obvious, “Too bad.”

How do I know that a handful of organizations will use their AI in this manner? I read “Spanish Running of the Bulls’ Festival Reveals Crowd Movements Can Be Predictable, Above a Certain Density.” If the data in the report are close to the pin, AI will be used to predict and then those predictions can be shaped by weaponized information flows. I got a glimpse of how this number stuff works when I worked at Halliburton Nuclear with Dr. Jim Terwilliger. He and a fellow named Julian Steyn were only too happy to explain that the mathematics used for figuring out certain nuclear processes would work for other applications as well. I won’t bore you with comments about the Monte Carlo method or the even older Bayesian statistics procedures. But if it made certain nuclear functions manageable, the approach was okay mostly.

Let’s look at what the Phys.org write up says about bovines:

Denis Bartolo and colleagues tracked the crowds of an estimated 5,000 people over four instances of the San Fermín festival in Pamplona, Spain, using cameras placed in two observation spots in the plaza, which is 50 meters long and 20 meters wide. Through their footage and a mathematical model—where people are so packed that crowds can be treated as a continuum, like a fluid—the authors found that the density of the crowds changed from two people per square meter in the hour before the festival began to six people per square meter during the event. They also found that the crowds could reach a maximum density of 9 people per square meter. When this upper threshold density was met, the authors observed pockets of several hundred people spontaneously behaving like one fluid that oscillated in a predictable time interval of 18 seconds with no external stimuli (such as pushing).

I think that’s an important point. But here’s the comment that presages how AI data will be used to control human behavior. Remember. This is emergent behavior similar to the hoo-hah cranked out by the Santa Fe Institute crowd:

The authors note that these findings could offer insights into how to anticipate the behavior of large crowds in confined spaces.

Once probabilities allow one to “anticipate”, it follows that flows of information can be used to take or cause action. Personally I am going to make a note in my calendar and check in one year to see how my observation turns out. In the meantime, I will try to keep an eye on the Sundars, Zucks, and their ilk for signals about their actions and their intent, which is definitely concerned with individuals like me. Right?
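For readers who want to sanity-check the numbers in the Phys.org passage, here is a minimal Python sketch. It uses only figures from the quote: the 50 by 20 meter plaza, the 5,000-person estimate, the densities of two, six, and nine people per square meter, and the 18-second interval. The function names are my own, and treating density as uniform across the whole plaza is an assumption the write up does not make.

```python
# Back-of-the-envelope check of the crowd figures quoted from Phys.org.
# Every number comes from the quoted passage; the function names are
# illustrative and are not taken from the study.

PLAZA_LENGTH_M = 50
PLAZA_WIDTH_M = 20
ESTIMATED_CROWD = 5000
OSCILLATION_PERIOD_S = 18


def plaza_area_m2(length_m=PLAZA_LENGTH_M, width_m=PLAZA_WIDTH_M):
    """Area of a rectangular plaza in square meters."""
    return length_m * width_m


def headcount(density_per_m2, area_m2):
    """People present if the given density held uniformly over the area (an assumption)."""
    return density_per_m2 * area_m2


def average_density(people, area_m2):
    """Plaza-wide average density in people per square meter."""
    return people / area_m2


if __name__ == "__main__":
    area = plaza_area_m2()
    print(f"Plaza area: {area} square meters")

    for label, density in [("hour before", 2), ("during event", 6), ("reported maximum", 9)]:
        people = headcount(density, area)
        print(f"{label}: {density} per square meter -> {people} people if uniform")

    avg = average_density(ESTIMATED_CROWD, area)
    print(f"Average density for {ESTIMATED_CROWD} people: {avg} per square meter")

    # An 18-second period expressed as a frequency in cycles per second.
    print(f"Oscillation frequency: {1 / OSCILLATION_PERIOD_S:.4f} Hz")
```

At six people per square meter, a uniformly packed plaza would hold 6,000 people, which is more than the 5,000 estimate. The reported densities presumably describe the packed parts of the crowd rather than a plaza-wide average. That reading is mine, not the authors’.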

Stephen E Arnold, March 5, 2025

LinkedIn: An Ego Buster and Dating App. Who Knew?

March 5, 2025

Yep, another dinobaby original.

Okay, GenZ, you are having a traumatic moment. I mean your mobile phone works. You have social media. You have the Dark Web, Telegram, and smart software. Oh, you find that living with your parents a bit of a downer. I understand. And the lack of having a role as a decider in an important company chews on your id, ego, and superego simultaneously. Not good.

I learned something when I read “GenZ Is Suffering from LinkedIn envy — And It’s Crushing Their Chill: My Reactions Are So Intense.” I noted this statement in the “real” news write up:

…at a time when unemployed people are finding it harder to find new work, LinkedIn has become the “unrivaled behemoth of digital inadequacy,” journalist Lotte Brundle wrote for The UK Times.

I want to refer Ms. Brundle to the US Bureau of Labor Statistics report that says AI and other factors are not hampering the job market in the US. Is it time to apply for a green card?

The write up adds:

Brundle also likened the platform to a dating site where people compare themselves to others, adding that she has used the platform to “see what exes and past nemeses are up to” — and some of her friends have even been “chatted up” on it.

There are a couple of easy fixes. First, hire someone on Fiverr to be “you” on LinkedIn. If something important appears, that individual will alert you so you can say, “Do this.” Second, do not log into LinkedIn.

What happens if you embrace the Microsoft product? Here’s a partial answer:

“I deleted my account because every time I go on it I feel absolutely terrible about myself,” the confessional said. “It might just be me and comparing myself too much to others but does anyone else find people on there to be completely cringe and egotistical lol?! I don’t even have a bad job but I think LinkedIn has just become an egocentric breeding zone like every other social media platform.”

Okay. LinkedIn public relations and marketing messages cause a person to feel bad about oneself. I am not sure I understand.

Suck it up, buttercup, or learn to use agentic AI, which can send you personalized emails every hour telling you that you are not terrible. Give that a try if ignoring LinkedIn is not possible.

Stephen E Arnold, March 5, 2025

We Have to Spread More Google Cheese

March 4, 2025

A Super Bowl ad is a big deal for companies that shell out for those pricey spots. So it is a big embarrassment when one goes awry. The BBC reports, “Google Remakes Super Bowl Ad After AI Cheese Gaffe.” Google was trying to show how smart Gemini is. Instead, the ad went out with a stupid mistake. Writers Graham Fraser and Tom Singleton tell us:

“The commercial – which was supposed to showcase Gemini’s abilities – was created to be broadcast during the Super Bowl. It showed the tool helping a cheesemonger in Wisconsin write a product description by informing him Gouda accounts for ‘50 to 60 percent of global cheese consumption.’ However, a blogger pointed out on X that the stat was ‘unequivocally false’ as the Dutch cheese was nowhere near that popular.”

In fact, cheddar and mozzarella vie for the world’s favorite cheese. Gouda is not even a contender. Though the company did remake the ad, one top Googler at first defended Gemini with some dubious logic. We learn:

“Replying to him, Google executive Jerry Dischler insisted this was not a ‘hallucination’ – where AI systems invent untrue information – blaming the websites Gemini had scraped the information from instead. ‘Gemini is grounded in the Web – and users can always check the results and references,’ he wrote. ‘In this case, multiple sites across the web include the 50-60% stat.’”

Sure, users can double check an AI’s work. But apparently not even Google itself can be bothered. Was the company so overconfident it did not use a human copyeditor? Or do those not exist anymore? Wrong information is wrong information, whether technically a hallucination or not. Spitting out data from unreliable sources is just as bad as making stuff up. Google still has not perfected the wildly imperfect Gemini, it seems.

Cynthia Murrell, February 28, 2025

Big Thoughts On How AI Will Affect The Job Market

March 4, 2025

Every time there is an advancement in technology, humans are fearful they won’t make an income. While some jobs disappeared, others emerged and humans adapted to the changes. We’ll continue to adapt as AI becomes more integral in society. How will we handle the changes?

Anthropic, a big player in the AI field, launched The Anthropic Economic Index to understand AI’s effects on labor markets and the economy. Anthropic claims it’s gathering “first-of-its-kind” data from anonymized Claude.ai conversations. This data demonstrates how AI is incorporated into the economy. The organization is also building an open source dataset for researchers to use and build on their findings. Anthropic surmises that this data will help develop policy on employment and productivity.

Anthropic reported on their findings in their first paper:

• “Today, usage is concentrated in software development and technical writing tasks. Over one-third of occupations (roughly 36%) see AI use in at least a quarter of their associated tasks, while approximately 4% of occupations use it across three-quarters of their associated tasks.

• AI use leans more toward augmentation (57%), where AI collaborates with and enhances human capabilities, compared to automation (43%), where AI directly performs tasks.

• AI use is more prevalent for tasks associated with mid-to-high wage occupations like computer programmers and data scientists, but is lower for both the lowest- and highest-paid roles. This likely reflects both the limits of current AI capabilities, as well as practical barriers to using the technology.”

The Register put the Anthropic report in layman’s terms in the article, “Only 4 Percent Of Jobs Rely Heavily On AI, With Peak Use In Mid-Wage Roles.” They share that only 4% of jobs rely heavily on AI for their work. These jobs use AI for 75% of their tasks. Overall only 36% of jobs use AI for at least 25% of their tasks. Most of these jobs are in software engineering, media industries, and educational/library fields. Physical jobs use AI less. Anthropic also found that 57% of AI use augments human tasks and 43% automates them.

These numbers make sense based on AI’s advancements and limitations. It’s also common sense that mid-tier wage roles will be affected and not physical or highly skilled labor. The top tier will surf on money; the water molecules are not so lucky.

Whitney Grace, March 4, 2025

Azure Insights: A Useful and Amusing Resource

March 4, 2025

This blog post is the work of a real live dinobaby. At age 80, I will be heading to the big natural history museum in the sky. Until then, creative people surprise and delight me.

I read some of the posts in a service named “Daily Azure Sh$t.” You can find the content on Mastodon.social at this link. Reading through the litany of issues, glitches, and goofs had me in stitches. If you work with Microsoft Azure, you might not be reading the Mastodon stomps with a chortle. You might be a little worried.

The post states:

This account is obviously not affiliated with Microsoft.

My hunch is that like other Microsoft-skeptical blogs, some of the Softies’ legal eagles will take flight. Upon determining the individual responsible for the humorous summary of technical antics, the individual may find that knocking off the service is one of the better ideas a professional might have. But until then, check out the newsy items.

As interesting are the comments on Hacker News. You will find these at this link.

For your delectation and elucidation, here are some of the comments from Hacker News:

- Osigurdson said: “Businesses are theoretically all about money but end up being driven by pride half the time.”

- Amarant said: “Azure was just utterly unable to deliver on anything they promised, thus the write-off on my part.”

- Abrookewood said: “Years ago, we migrated off Rackspace to Azure, but the database latency was diabolical. In the end, we got better performance by pointing the Azure web servers to the old database that was still in Rackspace than we did trying to use the database that was supposedly in the same data center.”

You may have a sense of humor different from mine. Enjoy either the laughing or the weeping.

Stephen E Arnold, March 9, 2025

The Big Cull: Goodbye, Type A People Who Make the Government Chug Along

March 3, 2025

The work of a real, live dinobaby. Sorry, no smart software involved. Whuff, whuff. That’s the sound of my swishing dino tail. Whuff.

I used to work at a couple of big time consulting firms in Washington, DC. Both were populated with the Googlers of that time. The blue chip consulting firm boasted a wider range of experts than the nuclear consulting outfit. There were some lessons I learned beginning with my first day on the job in the early 1970s. Here are three:

- Most of the Federal government operates because of big time consulting firms which do “work” and show up for meetings with government professionals

- Government professionals manage big time consulting firms’ projects with much of the work day associated with these projects and assorted fire drills related to non consulting firm work

- Government workers support, provide input, and take credit or avoid blame for work involving big time consulting firms. These individuals are involved in undertaking tasks not assigned to consulting firms and doing the necessary administrative and support work for big time consulting firm projects.

A big time consulting professional has learned that her $2.5 billion project has been cancelled. The contract workers are now coming toward her, and they are a bit agitated because they have been terminated. Thanks, OpenAI. Too bad about your being “out of GPUs.” Planning is sometimes helpful.

There were some other things I learned in 1972, but these three insights appear to have had sticking power. Whenever I interacted with the US federal government, I kept the rules in mind and followed them for a number of not-too-important projects.

This brings me to the article in what is now called Nextgov FCW. I think “FCW” means or meant Federal Computer Week. The story which I received from a colleague who pays a heck of a lot more attention to the federal government than I do caught my attention.

[Note: This article’s link was sometimes working and sometimes not working. If you 404, you will have to do some old fashioned research.] “Trump Administration Asks Agencies to Cull Consultants” says:

The acting head of the General Services Administration, Stephen Ehikian, asked “agency senior procurement executive[s]” to review their consulting contracts with the 10 companies the administration deemed the highest paid using procurement data — Deloitte, Accenture Federal Services, Booz Allen Hamilton, General Dynamics, Leidos, Guidehouse, Hill Mission Technologies Corp., Science Applications International Corporation, CGI Federal and International Business Machines Corporation — in a memo dated Feb. 26 obtained by Nextgov/FCW. Those 10 companies “are set to receive over $65 billion in fees in 2025 and future years,” Ehikian wrote. “This needs to, and must, change,” he added in bold.

Mr. Ehikian’s GSA biography states:

Stephen Ehikian currently serves as Acting Administrator and Deputy Administrator of the General Services Administration. Stephen is a serial entrepreneur in the software industry who has successfully built and sold two companies focused on sales and customer service to Salesforce (Airkit.ai in 2023 and RelateIQ in 2014). He most recently served as Vice President of AI Products and has a strong record of identifying next-generation technology. He is committed to accelerating the adoption of technology throughout government, driving maximum efficiency in government procurement for the benefit of all taxpayers, and will be working closely with the DOGE team to do so. Stephen graduated from Yale University with a bachelor’s degree in Mechanical Engineering and Economics and earned an MBA from Stanford University.

The firms identified in the passage from Nextgov would have viewed a person with Mr. Ehikian’s credentials as a potential candidate for a job. In the 1970s, an individual with prior business experience and an MBA would have been added as an associate and assigned to project teams. He would have attended one of the big time consulting firms’ “charm schools.” The idea at the firm which employed me was that each big time consulting firm had a certain way of presenting information, codes of conduct, rules of engagement with prospects and clients, and even the way to dress.

Today I am not sure what a managing partner would assign a person like Mr. Ehikian to undertake. My initial thought is that I am a dinobaby and don’t have a clue about how one of the big time firms in the passage listing companies with multi billions of US government contracts operates. I don’t think too much would change because at the firm where I labored for a number of years much of the methodology was nailed down by 1920 and persisted for 50 years when I arrived. Now 50 years from the date of my arrival, I would bet dollars to donuts that the procedures, the systems, and the methods were quite similar. If a procedure works, why change it dramatically? Incremental improvements will get the contract signed. The big time consulting firms have a culture and acculturation is important to these firms’ success.

The cited Nextgov article reports:

The notice comes alongside a new executive order directing agencies to build centralized tech to record all payments issued through contracts and grants, along with justification for those payments. Agency leaders were also told to review all grants and contracts within 30 days and terminate or modify them to reduce spending under that executive order.

This project to “build centralized technology to record all payments issued through contracts and grants” is exactly the type of work that some of the big time consulting firms identified can do. I know that some government entities have the expertise to create this type of system. However, given the time windows, the different departments and cross departmental activities, and the accounting and database hoops that must be navigated, the order to “build centralized technology to record all payments” is a very big job. (That’s why big time consulting firms exist. The US federal government has not developed the pools of expensive and specialized talent to do some big jobs.) I have worked on not-too-important jobs, and I found that “just do it” was easier said than done.

Several observations:

- I am delighted that I am no longer working at either of the big time consulting firms which used to employ me. At age 80, I don’t have the stamina to participate in the intense, contentious, what are we going to do meetings that are going to ruin many consulting firms’ weekends.

- I am not sure what will happen when the consulting firms’ employees and contractors just stop work. Typically, when there is no billing, people are terminated. Bang. Yes, just like that. Maybe today’s work world is a kinder and gentler place, but I am not sure about that.

- The impact on citizens and other firms dependent on the big time consulting firms’ projects is likely to chug along with not much visible change. Then just like the banking outages today (February 28, 2025) in the UK, systems and services will begin to exhibit issues. Some may just outright fail without the ministrations of consulting firm personnel.

- Figuring out which project is mission critical and which is not may be more difficult than replacing a broken MacBook Pro at the Apple Store in the old Carnegie Library Building on K Street. Decisions like these were typical of the projects that big time consulting firms were set up to handle with aplomb. A mistake may take months to surface. If several pop up in one week, excitement will ensue. That thinking for the future is what big time consulting firms do as part of their work. Pulling a plug on an overheating iron in a DC hotel is easy. Pulling a plug on a consulting firm is different for many reasons.

Net net: The next few months will be interesting. I have my eye on the big time consulting firms. I am also watching how the IRS and Social Security System computer infrastructure works. I want to know but no longer will be able to get the information about the management of devices in the arsenal not too far from a famous New Jersey golf course. I wonder about the support of certain military equipment outside the US. I am doing a lot of wondering.

That is fine for me. I am a dinobaby. For others in the big time consulting game and the US government professionals who are involved with these service firms’ contracts, life is a bit more interesting.

Stephen E Arnold, March 3, 2025

Dear New York Times, Your Online System Does Not Work

March 3, 2025

The work of a real, live dinobaby. Sorry, no smart software involved. Whuff, whuff. That’s the sound of my swishing dino tail. Whuff.

I gave up on the print edition of the New York Times because the delivery was terrible. I did not buy the online version because I could get individual articles via the local library. I received a somewhat desperate email last week. The message was, “Subscribe for $4 per month for two years.” I thought, “Yeah, okay. How bad could it be?”

Let me tell you it was bad, very bad.

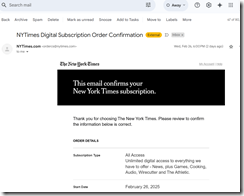

I signed up, spit out my credit card and received this in my email:

The subscription was confirmed on February 26, 2025. I tried to log in on the 27th. The system said, “Click here to receive an access code.” I did. In fact I did the click for the code three times. No code on the 27th.

Today is the 28th. I tried again. I entered my email and saw the click here for the access code. No code. I clicked four times. No code sent.

Dispirited, I called the customer service number. I spoke to two people. Both professionals told me they were sending the codes to my email. No codes arrived.

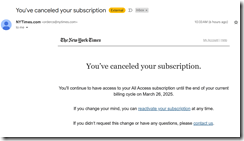

Guess what? I gave up and cancelled my subscription. I learned that I had to pay $4 for the privilege of being told my email was not working.

That was baloney. How do I know? Look at this screenshot:

The estimable newspaper was able to send me a notice that I cancelled.

How screwed up is the New York Times’ customer service? Answer: A lot. Two different support professionals told me I was not logged into my email. Therefore, I was not receiving the codes.

How screwed up are the computer systems at the New York Times? Answer: A lot, no, a whole lot.

I don’t think anyone at the New York Times knows about this issue. I don’t think anyone cares. I wonder how many people like me tried to buy a subscription and found that cancellation was the only viable option to escape automated billing for a service the buyer could not access.

Is this intentional cyber fraud? Probably not. I think it is indicative of poor management, cost cutting, and information technology that is just good enough. By the way, how can you send to my email a confirmation and a cancellation and NOT send me the access code? Answer: Ineptitude in action.

Well, hasta la vista.

Stephen E Arnold, March 3, 2025