Modern Life: Advertising Is the Future

July 23, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

What’s the future? I think most science fiction authors missed the memo from the future. Forget rocket ships, aliens, and light sabers. Think advertising. How do I know that ads will be the dominant feature of messaging? I read “French AI Startup Launches First LLM Built Exclusively for Advertising Copy.”

Advertising professionals consult the book about trust and ethical behavior. Both are baffled at the concepts. Thanks, MSFT Copilot. You are an expert in trust and ethical behavior, right?

Yep, advertising arrives with smart manipulation, psycho-metric manipulative content, and shaped data. The write up explains:

French startup AdCreative.ai has launched a new large language model built exclusively for advertising. Named AdLLM Spark, the system was built to craft ad text with high conversion rates on every major advertising platform. AdCreative.ai said the LLM combines two unique features: instant text generation and accurate performance prediction.

Let’s assume those French wizards have successfully applied probabilistic text generation to probabilistic behavior manipulation. Every message can be crafted by smart software to work. If an output does not work, just fiddle around until you hit the highest performing payload for the doom scrolling human.

The first part of the evolution of smart software pivoted on the training data. Forget that privacy hogging, copyright ignoring approach. Advertising copy is there to be used and recycled. The write up says:

The training data encompasses every text generated by AdCreative.ai for its 2,000,000 users. It includes information from eight leading advertising platforms: Facebook, Instagram, Google, YouTube, LinkedIn, Microsoft, Pinterest, and TikTok.

The second component involved tuning the large language model. I love the way “manipulation” and “move to action” become a dataset and metrics. If it works, that method will emerge from the analytic process. Do that, and clicks will result. Well, that’s the theory. But it is much easier to understand than making smart software ethical.

Does the system work? The write up offers this “proof”:

AdCreative.ai tested the impact on 10,000 real ad texts. According to the company, the system predicted their performance with over 90% accuracy. That’s 60% higher than ChatGPT and at least 70% higher than every other model on the market, the startup said.

Just for fun, let’s assume that the AdCreative system works and performs as “advertised.”

- No message can be accepted at face value. Every message from any source can be weaponized.

- Content about any topic — and I mean any — must be viewed as shaped and massaged to produce a result. Did you really want to buy that Chiquita banana?

- Automating this type of content production begs for a system to identify something hot on a TikTok-type service, extract the words and phrases, and match those words, with a bit of semantic expansion, to whatever someone wants to pitch, promote, make happen, and what not. The magic is that the volume of such messages is limited only by one’s machine resources.

Net net: The future of smart software is not solving problems for lawyers or finding a fix for Aunt Milli’s fatigue. The future is advertising, and AdCreative.ai is making the future more clear. Great work!

Stephen E Arnold, July 17, 2024

Bots Have Invaded The World…On The Internet

July 23, 2024

Robots…er…bots have taken over the world…at least the Internet…parts of it. The news from Techspot is shocking, but when you think about it, it really isn’t: “Almost Half Of All Web Traffic Is Bots, And They Are Mostly Malicious In Nature.” Akamai is one of the largest content delivery and cloud services providers in the world. It recently released a report stating that 42% of web traffic is from bots and that 65% of those bots are malicious.
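A quick back-of-the-envelope check puts those two figures together. The minimal Python sketch below assumes the 65% applies to bot traffic, not to all traffic; the variable names are illustrative and do not come from the Akamai report.

```python
# Figures as cited in the write up; names are illustrative assumptions.
bot_share_of_traffic = 0.42      # share of all web traffic that is bots
malicious_share_of_bots = 0.65   # share of bot traffic that is malicious

# Share of ALL web traffic that is malicious bots.
malicious_share_of_traffic = bot_share_of_traffic * malicious_share_of_bots

# Remaining bot traffic (not flagged as malicious).
other_bot_share_of_traffic = bot_share_of_traffic - malicious_share_of_traffic

print(f"Malicious bots: {malicious_share_of_traffic:.1%} of all web traffic")
print(f"Other bots: {other_bot_share_of_traffic:.1%} of all web traffic")
```

Under that reading, a bit more than a quarter of all web traffic would be malicious bots.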

Akamai said that most of the bots are scraper bots designed to gather data. Scraper bots collect content from Web sites. Some of them are used to form AI data sets while others are designed to steal information to be used in hacks, scams, and other bad acts. Commerce Web sites are negatively affected the most, because scraper bots steal photos, prices, descriptions, and more. Bad actors then make fake Web sites imitating the real McCoy. They make money from ads by ranking on Google and stealing traffic.

Bots are nasty little buggers, even the most benign:

“Even non-malicious scraping bots can degrade a website’s performance, impact search engine metrics, and increase computing and hosting costs.

Companies now face increasingly sophisticated bots that use AI algorithms, headless browser technology, and other advanced solutions. These new threats require novel, more complex mitigation approaches beyond traditional methods. A robust firewall is now only the beginning of the numerous security measures needed by website owners today.”

Akamai should have dedicated part of their study to investigate the Dark Web. How many bots or law enforcement officials are visiting that shrinking part of the Net?

Whitney Grace, July 23, 2024

Thinking about AI Doom: Cheerful, Right?

July 22, 2024

This essay is the work of a dumb humanoid. No smart software required.

I am not much of a philosopher psychologist academic type. I am a dinobaby, and I have lived through a number of revolutions. I am not going to list the “next big things” that have roiled the world since I blundered into existence. I am changing my mind. I have memories of crouching in the hall at Oxon Hill Grade School in Maryland. We were practicing for the atomic bomb attack on Washington, DC. I think I was in the second grade. Exciting.

The AI powered robot wants the future experts in hermeneutics to be more accepting of the technology. Looks like the robot is failing big time. Thanks, MSFT Copilot. Got those fixes deployed to the airlines yet?

Now another “atomic bomb” is doing the James Bond countdown: 009, 008, and then James cuts the wire at 007. The world was saved for another James Bond sequel. Wow, that was close.

I just read “Not Yet Panicking about AI? You Should Be – There’s Little Time Left to Rein It In.” The essay seems to be a trifle dark. Here’s a snippet I circled:

With technological means, we have accomplished what hermeneutics has long dreamed of: we have made language itself speak.

Thanks to Dr. Francis Chivers, one of my teachers at Duquesne University, I actually know a little bit about hermeneutics. May I share?

Hermeneutics is the theory and methodology of interpretation of words and writings. One should consider content in its historical, cultural, and linguistic context. The idea is to figure out the underlying messages, intentions, and implications of texts by doing academic gymnastics.

Now the killer statement:

Jacques Lacan was right; language is dark and obscene in its depths.

I presume you know well the work of Jacques Lacan. But if you have forgotten, the canny psychologist got himself kicked out of the International Psychoanalytic Association (no mean feat as I recall) for his ideas about desire. Think Freud on steroids.

The write up uses these everyday references to make the point:

If our governments summon the collective will, they are very strong. Something can still be done to rein in AI’s powers and protect life as we know it. But probably not for much longer.

Okay. AI is going to screw up the world. I think I have heard that assertion when my father told me about the computer lecture he attended at an accounting refresher class. That fear he manifested because he thought he would lose his job to a machine attracted me to the dark unknown of zeros and ones.

How did that turn out? He kept his job. I think mankind has muddled through the computer revolution, the space revolution, the wonder drug revolution, the automation revolution, yada yada.

News flash: The AI revolution was around long before the whiz kids at Google disclosed Transformers. I think the author of this somewhat fearful write up is similar to my father, who projected onto computerized accounting his fear that he would be harmed by punched cards.

Take a deep breath. The sun will come up tomorrow morning. People who know about hermeneutics and Jacques Lacan will be able to ponder the nature of text and behavior. In short, worry less. Be less AI-phobic. The technology is here, and it is not going away, getting under the thumb of any one government including China’s, or causing eternal darkness. Sorry to disappoint you.

Stephen E Arnold, July 22, 2024

A Windows Expert Realizes Suddenly Last Outage Is a Rerun

July 22, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I love poohbahs. One quite interesting online outlet I consult occasionally continues to be quite enthusiastic for all things Microsoft. I spotted a write up about the Crowdstrike matter and its unfortunate downstream consequences for a handful of really tolerant people using its cyber security software. The absolute gem of a write up which arrested my attention was “As the World Suffers a Global IT Apocalypse, What’s More Worrying is How Easy It Is for This to Happen.” The article discloses a certain blind spot among a few Windows cheerleaders. (I thought the Apple fan core was the top of the marketing mountain. I was wrong again, a common problem for a dinobaby like me.)

Is the blue screen plague like the sinking of the Swedish flagship Vasa? Thanks, OpenAI. Good enough.

The subtitle is even more striking. Here it is:

Nefarious actors might not be to blame this time, but it should serve as a warning to us all how fragile our technology is.

Who knew? Perhaps those affected by the flood of notable cyber breaches. Norton Hospital, SolarWinds, the US government, et al are examples which come to mind.

To what does the word “nefarious” refer? Perhaps it is one of those massive, evil, 24×7 gangs of cyber thugs which work to find the very, very few flaws in Microsoft software? Could it be cyber security professionals who think about security only when something bad (note this) like a global outage occurs and the flaws in their procedures or code allow people to spend the night in airports or have their surgeries postponed?

The article states:

When you purchase through links on our site, we may earn an affiliate commission. Here’s how it works.

I find it interesting that the money-raising information appears before the stunning insights in the article.

The article reveals this pivotal item of information:

It’s an unprecedented situation around the globe, with banks, healthcare, airlines, TV stations, all affected by it. While Crowdstrike has confirmed this isn’t the result of any type of hack, it’s still incredibly alarming. One piece of software has crippled large parts of industry all across the planet. That’s what worries me.

Ah, a useful moment of recognizing the real world. Quite a leap for those who find certain companies a source of calm and professionalism. I am definitely glad Windows Central, the publisher of this essay, is worried about concentration of technology and the downstream dependencies. Worry only when a cyber problem takes down banks, emergency call services, and other technologically-dependent outfits.

But here’s the moment of insight for the Windows Central outfit. I can hear “Eureka!” echoing in the workspace of this intrepid collection of poohbahs:

This time we’re ‘lucky’ in the sense it wasn’t some bad actors causing deliberate chaos.

Then the write up offers this stunning insight after decades of “good enough” software:

This stuff is all too easy. Bad actors can target a single source and cripple millions of computers, many of which are essential.

Holy Toledo. I am stunned with the brilliance of the observations in the article. I do have several thoughts from my humble office in rural Kentucky:

- A Windows cheerleading outfit is sort of admitting that technology concentration where “good enough” is excellence creates a global risk. I mean who knew? The Apple and Linux systems running Crowdstrike’s estimable software were not affected. Is this a Windows thing, this global collapse?

- Awareness of prior security and programming flaws simply did not exist for the author of the essay. I can understand why Windows Central found the Windows folding phone and the first generation of Windows on Arm PCs absolutely outstanding.

- Computer science students in a number of countries learn online and at school how to look for similar configuration vulnerabilities in software and exploit them. The objective is to steal, cripple, or start up a cyber security company and make oodles of money. Incidents like this global outage are a road map for some folks, good and not so good.

My take away from this write up is that those who only worry when a global problem arises from what seems to be US-managed technology have not been paying attention. Online security is the big 17th century Swedish flagship Vasa (Wasa). Upon launch, the marine architect and assorted influential government types watched that puppy sink.

But the problem with the most recent and quite spectacular cyber security goof is that it happened to Microsoft and not to Apple or Linux systems. Perhaps there is a lesson in this fascinating example of modern cyber practices?

Stephen E Arnold, July 22, 2024

The Logic of Good Enough: GitHub

July 22, 2024

What happens when a big company takes over a good thing? Here is one possible answer. Microsoft acquired GitHub in 2018. Now, “‘GitHub’ Is Starting to Feel Like Legacy Software,” according to Misty De Méo at The Future Is Now blog. And by “legacy,” she means outdated and malfunctioning. Paint us unsurprised.

De Méo describes being unable to use a GitHub feature she had relied on for years: the blame tool. She shares her process of tracking down what went wrong. Development geeks can see the write-up for details. The point is, in De Méo’s expert opinion, those now in charge made a glaring mistake. She observes:

“The corporate branding, the new ‘AI-powered developer platform’ slogan, makes it clear that what I think of as ‘GitHub’—the traditional website, what are to me the core features—simply isn’t Microsoft’s priority at this point in time. I know many talented people at GitHub who care, but the company’s priorities just don’t seem to value what I value about the service. This isn’t an anti-AI statement so much as a recognition that the tool I still need to use every day is past its prime. Copilot isn’t navigating the website for me, replacing my need to use the website as it exists today. I’ve had tools hit this phase of decline and turn it around, but I’m not optimistic. It’s still plenty usable now, and probably will be for some years to come, but I’ll want to know what other options I have now rather than when things get worse than this.”

The post concludes with a plea for fellow developers to send De Méo any suggestions for GitHub alternatives and, in particular, a good local blame tool. Let us just hope any viable alternatives do not also get snapped up by big tech firms anytime soon.

Cynthia Murrell, July 23, 2024

Anarchist Content Links: Zines Live

July 19, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

One of my team called my attention to “Library. It’s Going Down Reading Library.” I know I am not clued into the lingo of anarchists. As a result, the Library … Library rhetoric just put me on a slow blinking yellow alert or, emulating the linguistic style of It’s Going Down, “Alert. It’s Slow Blinking Alert.”

Syntactical musings behind me, the list includes links to publications focused on fostering even more chaos than one encounters at a Costco or a Southwest Airlines boarding gate. The content of these publications is thought provoking to some and others may be reassured that tearing down may be more interesting than building up.

The publications are grouped in categories. Let me list a handful:

- Antifascism

- Anti-Politics

- Anti-Prison, Anti-Police, and Counter-Insurgency.

Personally I would have presented antifascism as anti-fascism to be consistent with the other antis, but that’s what anarchy suggests, doesn’t it?

When one clicks on a category, the viewer is presented with a curated list of “going down” related content. Here’s a listing of what’s on offer for the topic AI has made great again, Automation:

Livewire: Against Automation, Against UBI, Against Capital

If one wants more “controversial” content, one can visit these links:

Each of these has the “zine” vibe and provides useful information. I noted the lingo and the names of the authors. It is often helpful to have an entity with which one can associate certain interesting topics.

My take on these modest collections: Some folks are quite agitated and want to make life more challenging than an 80-year-old dinobaby finds it. (But zines remind me of weird newsprint or photocopied booklets in the 1970s or so.) If the content of these publications is accurate, we have not “zine” anything yet.

Stephen E Arnold, July 19, 2024

Students, Rejoice. AI Text Is Tough to Detect

July 19, 2024

While the robot apocalypse is still a long way in the future, AI algorithms are already changing the dynamics of work, school, and the arts. It’s an unfortunate consequence of advancing technology and a line in the sand needs to be drawn and upheld about appropriate uses of AI. A real world example was published in the Plos One Journal: “A Real-World Test Of Artificial Intelligence Infiltration Of A University Examinations System: A ‘Turing Test’ Case Study.”

Students are always searching for ways to cheat the education system. ChatGPT and other generative text AI algorithms are the ultimate cheating tool. Schools and universities don’t have systems in place to verify that student work isn’t artificially generated. Students still need to learn essential knowledge and practice core skills, but the ways they are assessed are under threat.

The creators of the study researched a question we’ve all been asking: Can AI pass as a real human student? While the younger set aren’t the sharpest pencils, it’s still hard to replicate human behavior. Or is it?

“We report a rigorous, blind study in which we injected 100% AI written submissions into the examinations system in five undergraduate modules, across all years of study, for a BSc degree in Psychology at a reputable UK university. We found that 94% of our AI submissions were undetected. The grades awarded to our AI submissions were on average half a grade boundary higher than that achieved by real students. Across modules there was an 83.4% chance that the AI submissions on a module would outperform a random selection of the same number of real student submissions.”

The AI exams and assignments received better grades than those written by real humans. Computers have consistently outperformed humans in what they’re programmed to do: performing calculations, playing chess, and doing repetitive tasks. Student work, such as writing essays, taking exams, and unfortunate busy work, is repetitive and monotonous. It’s easily replicated by AI and it’s not surprising the algorithms perform better. It’s what they’re programmed to do.

The problem isn’t that AI exists. The problem is that there aren’t processes in place to verify student work, and humans will cave to temptation via the easy route.

Whitney Grace, July 19, 2024

Soft Fraud: A Helpful List

July 18, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

For several years I have included references to what I call “soft fraud” in my lectures. I like to toss in examples of outfits getting cute with fine print, expiration dates for offers, and weasels on eBay asserting that the US Post Office issued a bad tracking number. I capture the example, jot down the vendor’s name, and tuck it away. The term “soft fraud” refers to an intentional practice designed to extract money or an action from a user. The user typically assumes that the soft fraud pitch is legitimate. It’s not. Soft fraud is a bit like a con man selling an honest card game in Manhattan. Nope. Crooked by design (the phrase is a variant of the publisher of this listing).

I spotted a write up called “Catalog of Dark Patterns.” The Hall of Shame.design online site has done a good job of providing a handy taxonomy of soft fraud tactics. Here are three of the categories:

- Privacy Zuckering

- Roach motel

- Trick questions

The Dark Patterns write up then populates each of the 10 categories with some examples. If the examples presented are not sufficient, a “View All” button allows the person interested in soft fraud to obtain a bit more color.

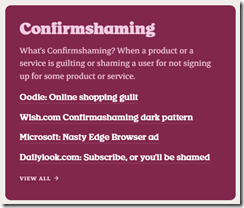

Here’s an example of the category “Confirmshaming”:

My suggestion to the Hall of Shame team is to move away from “too cute” categories. The naming might be clever, but a person searching for examples of soft fraud might not know the phrase “Privacy Zuckering.” Yes, I know that I have been guilty of writing phrases like “zucked up,” but I am not creating a useful list. I am a dinobaby having a great time at 80 years of age.

Net net: Anyone interested in soft fraud will find this a useful compilation. Hopefully practitioners of soft fraud will be shunned. Maybe a couple of these outfits would be subject to some regulatory scrutiny? Hopefully.

Stephen E Arnold, July 18, 2024

Looking for the Next Big Thing? The Truth Revealed

July 18, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Big means money, big money. I read “Twenty Five Years of Warehouse-Scale Computing,” authored by Googlers who definitely are into “big.” The write up is history from the point of view of engineers who built a giant online advertising and surveillance system. In today’s world, when a data topic is raised, it is big data. Everything is Texas-sized. Big is good.

This write up is a quasi-scholarly, scientific-type of sales pitch for the wonders of the Google. That’s okay. It is a literary form comparable to an epic poem or a jazzy H.L. Mencken essay from the days when people read magazines and newspapers. Let’s take a quick look at the main point of the article and then consider its implications.

I think this passage captures the zeitgeist of the Google on July 13, 2024:

From a team-culture point of view, over twenty five years of WSC design, we have learnt a few important lessons. One of them is that it is far more important to focus on “what does it mean to land” a new product or technology; after all, it was the Apollo 11 landing, not the launch, that mattered. Product launches are well understood by teams, and it’s easy to celebrate them. But a launch doesn’t by itself create success. However, landings aren’t always self-evident and require explicit definitions of success — happier users, delighted customers and partners, more efficient and robust systems – and may take longer to converge. While picking such landing metrics may not be easy, forcing that decision to be made early is essential to success; the landing is the “why” of the project.

A proud infrastructure plumber knows that his innovations allow the home owner to collect rent from AirBnB rentals. Thanks, MSFT Copilot. Interesting image because I did not specify gender or ethnicity. Does my plumber look like this? Nope.

The 13 page paper includes numerous statements which may resonate with different readers as more important. But I like this passage because it makes the point about Google’s failures. There is no reference to smart software, but for me it is tough to read any Google prose and not think in terms of Code Red, the crazy flops of Google’s AI implementations, and the protestations of Googlers about quantum supremacy or some other projection of inner insecurity the company’s geniuses concoct. Don’t you want to have an implant that makes Google’s knowledge of “facts” part of your being? America’s founding fathers were not diverse, but Google has different ideas about reality.

This passage directly addresses failure. A failure is a prelude to a soft landing or a perfect landing. The only problem with this mindset is that Google has managed one perfect landing: Its derivative online advertising business. The chatter about scale is a camouflage tarp pulled over the mad scramble to find a way to allow advertisers to pay Google money. The “invention” was forced upon those at Google who wanted those ad dollars. The engineers did many things to keep the money flowing. The “landing” is the fact that the regulators turned a blind eye to Google’s business practices and the wild and crazy engineering “fixes” worked well enough to allow more “fixes.” Somehow the mad scramble in the 25 years of “history” continues to work.

Until it doesn’t.

The case in point is Google’s response to the Microsoft OpenAI marketing play. Google’s ability to scale has not delivered. What delivers at Google is ad sales. The “scale” capabilities work quite well for advertising. How does the scale work for AI? Based on the results I have observed, the AI pullbacks suggest some issues exist.

What’s this mean? Scale and the cloud do not solve every problem or provide a slam dunk solution to a new challenge.

The write up offers a different view:

On one hand, computing demand is poised to explode, driven by growth in cloud computing and AI. On the other hand, technology scaling slowdown poses continued challenges to scale costs and energy-efficiency.

Google sees running out of chip innovations, power, cooling, and other parts of the scale story as an opportunity. Sure it is. Google’s future looks bright. Advertising has been and will be a good business. The scale thing? Plumbing. Let’s not forget what matters at Google: selling ads and renting infrastructure to people who no longer have on-site computing resources. Google is hoping to be the AirBnB of computation. And sell ads on Tubi and other ad-supported streaming services.

Stephen E Arnold, July 18, 2024

What? Cloud Computing Costs Cut from Business Budgets

July 18, 2024

Many companies offload their data storage and computing needs to third parties aka cloud computing providers. Leveraging cloud computing was viewed as a great way to lower technology budgets, but with rising inflation and costs that perspective is changing. The BBC wrote an article about the changes in the cloud: “Are Rainy Days Ahead For Cloud Computing?”

Companies are reevaluating their investments in cloud computing, because the price tags are too high. The cloud was advertised as cheaper, easier, and faster. Businesses aren’t seeing any productivity gains. Larger companies are considering dumping the cloud and rerouting their funds to self-hosting again. Cloud computing still has its benefits, especially for smaller companies who can’t invest in their technology infrastructure. Security is another concern:

“‘A key factor in our decision was that we have highly proprietary R&D data and code that must remain strictly secure,’ says Markus Schaal, managing director at the German firm. ‘If our investments in features, patches, and games were leaked, it would be an advantage to our competitors. While the public cloud offers security features, we ultimately determined we needed outright control over our sensitive intellectual property. As our AI-assisted modelling tools advanced, we also required significantly more processing power that the cloud could not meet within budget.’”

He adds:

“We encountered occasional performance issues during heavy usage periods and limited customization options through the cloud interface. Transitioning to a privately-owned infrastructure gave us full control over hardware purchasing, software installation, and networking optimized for our workloads.”

Cloud computing has seen its golden era, but it’s not disappearing. It’s still a useful computing tool, but it won’t be the main infrastructure for companies that want to lower costs, stay within budget, and secure their software.

Whitney Grace, July 18, 2024