Telegram Notes: Manny, Snoop, and Millions in Minutes

December 24, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


In the mass of information my team and I gathered for my new study “The Telegram Labyrinth,” we saw several references to what may be an interesting intersection of Manuel (Manny) Stotz, a hookah company in the Middle East, Snoop Dogg (the musical luminary), and Telegram.

At some point in Mr. Stotz’ financial career, he acquired an interest in a company doing business as Advanced Inhalation Rituals or AIR. This firm owned or had an interest in a hookah manufacturer doing business as Al Fakher. By chance, Mr. Stotz interacted with Mr. Snoop Dogg. As the two professionals discussed modern business, Mr. Stotz suggested that Mr. Snoop Dogg check out Telegram.

Thanks, Venice.ai. I needed smoke coming out of the passenger side window, but smoke exiting through the roof is about right for smart software.

Telegram allowed Telegram Messenger users to create non-fungible tokens. Mr. Snoop Dogg thought this was a very interesting idea. In July 2025, Mr. Snoop Dogg allegedly launched a digital collectibles drop on Telegram.

I found the anecdotal Manny Stotz information in social media and crypto centric online services suggestive but not particularly convincing and rarely verifiable.

One assertion did catch my attention. The Snoop Dogg NFT allegedly generated US$12 million in 30 minutes. Is the number in “Snoop Dogg Rakes in $12M in 30 Minutes with Telegram NFT Drop” on the money? I have zero clue. I don’t even know if the release of the NFT or drop took place. Let’s go to the write up:

Snoop Dogg is back in the web3 spotlight, this time partnering with Telegram to launch the messaging app’s first celebrity digital collectibles drop. According to Telegram CEO Pavel Durov, the launch generated $12 million in sales, with nearly 1 million items sold out in just 30 minutes. While the items aren’t minted yet, users purchased the collectibles internally on Telegram, with minting on The Open Network (TON) scheduled to go live later this month [July 2025].

Is this important? It depends on one’s point of view. As an 81-year-old dinobaby, I find the comments online about this alleged NFT for a popular musician not too surprising. I have several other dinobaby observations to offer, of course:

- Mr. Stotz allegedly owns shares in a company (possibly 50 percent or more of the outfit) that does business in the UAE and other countries where hookahs are popular. That’s AIR.

- Mr. Stotz worked for a short time as a senior manager at the TON Foundation. That’s an organization allegedly 100 percent separate from Telegram. That’s the totally independent, Swiss-registered TON Foundation, not to be confused with the other TON Foundation in Abu Dhabi. (I wonder why there are two Telegram-linked foundations. Maybe someone will look into that? Perhaps these are legal conventions or something akin to Trojan horses? This dinobaby does not know.)

- By happenstance, Mr. Snoop Dogg learned about Telegram NFTs at the same time Mr. Stotz was immersed in activities related to the Foundation and its new NASDAQ-listed property, TON Strategy Company. The NFT spun up and then, allegedly, moved forward.

- Does a regulatory entity monitor and levy tax on the sale of NFTs within Telegram? I mean, Mr. Snoop Dogg resides in America. Mr. Stotz allegedly resides in London. The TON Foundation, which “runs” the TON blockchain, is in the United Arab Emirates, and Mr. Pavel Durov is an Airbnb type of entrepreneur. This question of paying taxes is probably above my pay grade, which is US$0.00.

One simple question I have is, “Does Mr. Snoop Dogg have an Al Fakher hookah?”

This is an example of one semi-interesting activity in which Mr. Stotz, his companies (Koenigsweg Holdings Ltd and its limited liability unit Kingsway Capital), and the Telegram / TON Foundation interactions cross borders, business types, and cultural boundaries. Crypto seems to be a magnetic agent.

As Mr. Snoop Dogg sang in 1994:

“With so much drama in the LBC, it’s kinda hard being Snoop D-O-double-G.” (“Gin and Juice,” 1994)

For those familiar with NFT but not LBC, the “LBC” refers to Long Beach, California. There is much mystery surrounding many words and actions in Telegram-related activities.

PS. My team and I are starting an information service called “Telegram Notes.” We have a URL. Some of the items will be posted to LinkedIn and to the cyber crime groups that allowed me to join. We are not sure what other outlets will accept these Telegram-related essays. It’s kinda hard being a double DINO-B-A-BEEE.

Stephen E Arnold, December 24, 2025

All I Want for Xmas Is Crypto: Outstanding Idea GenZ

December 24, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


I wish I knew an actual GenZ person. I would love to ask, “What do you want for Christmas?” Because I am a dinobaby, I expect an answer like cash, a sweater, a new laptop, or a job. Nope, wrong.

According to the most authoritative source of real “news” to which I have access, the answer is crypto. “45% of Gen Z Wants This Present for Christmas—Here’s What Belongs on Your Gift List” explains:

[A] Visa survey found that 45% of Gen Z respondents in the United States would be excited to receive cryptocurrency as their holiday gift. (That’s way more than Americans overall, which was only 28%.)

Two geezers try to figure out what their grandchildren want for Xmas. Thanks, Qwen. Good enough.

Why? Here’s the answer from Jonathan Rose, CEO of BlockTrust IRA, a cryptocurrency-based individual retirement account (IRA) platform:

“Gen Z had a global pandemic and watched inflation eat away at the power of the dollar by around 20%. Younger people instinctively know that $100 today will buy them significantly less next Christmas. Asking for an asset that has a fixed supply, such as bitcoin, is not considered gambling to them—it is a logical decision…. We say that bull markets make you money, but bear markets get you rich. Gen Z wants to accumulate an asset that they believe will define the future of finance, at an affordable price. A crypto gift is a clear bet that the current slump is temporary while the digital economy is permanent.”

I like that line “a logical decision.”

The world of crypto is an interesting one.

Reader’s Digest explains to a dinobaby how to obtain crypto. Here’s the explanation for a dinobaby like me:

One easy way to gift crypto is by using a major exchange or crypto-friendly trading app like Robinhood, Kraken or Crypto.com. Kraken’s app, for example, works almost like Venmo for digital assets. You buy a cryptocurrency—such as bitcoin—and send it to someone using a simple pay link. The recipient gets a text message, taps the link, verifies their account, and the crypto appears in their wallet. It’s a straightforward option for beginners.

What will those GenZ folks do with their funds? Gig tripping. No, I don’t know what that means.

Several observations:

- I liked getting practical gifts, and I like giving practical gifts. Crypto is not practical. It is, in my opinion, ideal for money laundering, not buying sweaters.

- GenZ does have an uncertain future. Not only do those basic skill scores fail to make someone like me eager to spend time with “units” from this cohort, but I am also not sure I know how to speak to a GenZ entity. Is that why so many of these young people prefer talking to chatbots? Do dinobabies make them uncomfortable?

- When Reader’s Digest explains how to buy crypto, the good old days of a homey anecdote and a summary of an article from a magazine with a reading level above the sixth grade are officially over.

Net net: I am glad I am old.

Stephen E Arnold, December 24, 2025

Way More Goofs for Waymo: Power Failure? Humans at Fault!

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


I found the stories about Google’s smart Waymo self-driving vehicles in the recent San Francisco power failure amusing. Dangerous, yes. A hoot? Absolutely. As Google’s smart software wizards remind us on a PR cadence that would make Colgate toothpaste envious, Google is the big dog of smart software. ChatGPT, a loser. Grok, a crazy loser. Chinese open source LLMs. Losers all.

State of the art artificial intelligence makes San Francisco residents celebrate true exceptionalism. Thanks, Venice.ai. Good enough.

I read “Waymo Robotaxis Stop in the Streets during San Francisco Power Outage.” Okay. Google’s Waymo can’t go. Are they electric vehicles suddenly deprived of power? Nope. The smart software did not operate in a way that thrilled riders and motorists when most of San Francisco lost power. The BBC says a very Googley expert said:

"While the Waymo Driver is designed to treat non-functional signals as four-way stops, the sheer scale of the outage led to instances where vehicles remained stationary longer than usual to confirm the state of the affected intersections," a Waymo spokesperson said in a statement provided to the BBC. That "contributed to traffic friction during the height of the congestion," they added.

What other minor issues do the Googley Waymos offer? I noticed the omission of the phrase “out of an abundance of caution.” It is probably out there in some Google quote.

Several observations:

- Google’s wizards will talk about this unlikely event and figure out how to have its cars respond when traffic lights go on the fritz. Will Google be able to fix the problem? Sure. Soon.

- What caused the problem? From Google’s point of view, it was the person responsible for the power failure. Google had nothing to do with that because Google’s Gemini was not autonomously operating the San Francisco power generation system. Someone get on that, please.

- How many years of testing and how many safe miles (except, of course, for the bodega cat) will have to pass before Google’s Waymo does what normal humans would do? Pull over. Or head to a cul de sac in Cow Hollow.

Net net: Google and its estimable wizards can overlook some details. Leadership will take action.

As Elon Musk allegedly said:

"Tesla Robotaxis were unaffected by the SF power outage," Musk posted on X, along with a repost of video showing Waymo vehicles stopped at an intersection with down traffic lights as a line of cars honk and attempt to go around them. Musk also reposted a video purportedly showing a Tesla self-driving car navigating an intersection with non-functioning traffic lights.

Does this mean that Grok is better than Gemini?

Stephen E Arnold, December 23, 2025

France Arrested Pavel. The UK Signals Signal: What Might Follow?

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


As a dinobaby, I play no part in the machinations of those in the encrypted messaging arena. On one side are those who argue that encryption helps preserve a human “right” to privacy. On the other side are those who say, “Money laundering, kiddie pix, drugs, and terrorism threaten everything.” You will have to pick your side. That decision will dictate how you interpret the allegedly actual factual information in “Creating Apps Like Signal or WhatsApp Could Be Hostile Activity, Claims UK Watchdog.”

An American technology company leader looks at a UK prison and asks the obvious question. The officers escort the tech titan to the new cell. Thanks, Venice.ai. Close enough for a single horseshoe.

“Hostile activity” suggests bad things will happen if a certain behavior persists. These include:

- Fines

- Prohibitions on an online service in a country (this is popular in Iran among other nation states)

- Potential legal hassles (a Heathrow holding cell is probably the Ritz compared to HMP Woodhill)

The write up reports:

Developers of apps that use end-to-end encryption to protect private communications could be considered hostile actors in the UK.

That is the stark warning from Jonathan Hall KC, the government’s Independent Reviewer of State Threats Legislation and Independent Reviewer of Terrorism Legislation

I interpret this as a helpful summary of a UK government brief titled State Threats Legislation in 2024. The timing of Mr. Hall’s observation may “signal” an overt action. That step may not be on the scale of the French arrest of a Russian with a French passport, but it will definitely create a bit of a stir in the American encrypted messaging sector. Believe it or not, the UK is not thrilled with some organizations’ reluctance to provide information relevant to certain UK legal matters.

In my experience, applying the standard “oh, we didn’t get the email” or “we’ll get back to you, thanks” is unlikely to work for certain UK government entities. Although unfailingly polite, there are some individuals who learned quite particular skills in specialized training. The approach, like the French action, can cause surprise among the individuals identified as problematic.

With certain international tensions rising, the UK may seize an opportunity to apply both PR and legal pressure to overcome what may be seen as impolite and ill-advised behavior by certain American companies in the end-to-end encrypted messaging business.

The article “Creating Apps Like Signal” points out:

In his independent review of the Counter-Terrorism and Border Security Act and the newly implemented National Security Act, Hall KC highlights the incredibly broad scope of powers granted to authorities.

The article adds:

While the report’s strong wording may come as a shock, it doesn’t exist in a vacuum. Encrypted apps are increasingly in the crosshairs of UK lawmakers, with several pieces of legislation targeting the technology. Most notably, Apple was served with a technical capability notice under the Investigatory Powers Act (IPA) demanding it weaken the encryption protecting iCloud data. That legal standoff led the tech giant to disable its Advanced Data Protection instead of creating a backdoor.

What will the US companies do? I learned from the write up:

With the battle lines drawn, we can expect a challenging year ahead for services like Signal and WhatsApp. Both companies have previously pledged to leave the UK market rather than compromise their users’ privacy and security.

My hunch is that more European countries may look at France’s action and the “signals” emanating from the UK and conclude, “We too can take steps to deal with the American companies.”

Stephen E Arnold, December 23, 2025

AI Training: The Great Unknown

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


Deloitte used to be an accounting firm. Then the company decided it could do so much more. Normal people ask accountants for their opinions. Deloitte, like many other service firms, decided it could just become a general management consulting firm, an information technology company, a conference and event company, and also do the books.

A professional training program for business professionals at a blue chip consulting firm. One person speaks up, but the others keep their thoughts to themselves. How many are updating their LinkedIn profile? How many are wondering if AI will put them out of a job? How many don’t care because the incentives emphasize selling and upselling engagements? Thanks, Venice.ai. Good enough but you are AI and that’s a mark of excellence for some today.

I read an article that suggests a firm like Deloitte is not able to do much of the self-assessment and introspection required to make informed decisions. The result is that surprises become part of some firms’ standard operating procedure.

This insight appears in “Deloitte’s CTO on a Stunning AI Transformation Stat: Companies Are Spending 93% on Tech and Only 7% on People.” This headline suggests that Deloitte itself is making this error. [Note: This is a wonky link from my feed system. If it disappears, good luck.]

The write up in Fortune Magazine said:

According to Bill Briggs, Deloitte’s chief technology officer, as we move from AI experimentation to impact/value at scale, that fear is driving a lopsided investment strategy where companies are pouring 93% of their AI budget into technology and only 7% into the people expected to use it.

The question that popped into my mind was, “How much money is Deloitte spending relative to smart software on training its staff in AI?” Perhaps the not-so-surprising MBA type “fact” reflects what some Deloitte professionals realize is happening at the esteemed “we can do it in any business discipline” consulting firm?

The explanation is that “the culture, workflow, and training” of a blue chip consulting firm is not extensive. Now, with AI finding its way from word processing to looking up a fact, educating employees about AI is given lip service, but is “training” possible? Remember, please, that some consulting firms want those over 55 to depart to retirement. However, can highly paid experts whose core competencies are being friendly and wordsmithing learn how, when, and when not to rely on smart software? Do these “best of the best” from MBA programs have the ability to learn, or are these people situational thinkers; that is, people whose skill is to be spontaneously helpful, to connect the dots, and to reframe what a client tells them so it appears sage-like?

The Deloitte expert says:

“This incrementalism is a hard trap to get out of.”

Is Deloitte out of this incrementalism?

The Deloitte expert (apparently not asked the question by the Fortune reporter) says:

As organizations move from “carbon-based” to “silicon-based” employees (meaning a shift from humans to semiconductor chips, or robots), they must establish the equivalent of an HR process for agents, robots, and advanced AI, and complex questions about liability and performance management. This is going to be hard, because it involves complex questions. He brought up the hypothetical of a human creating an agent, and that agent creating five more generations of agents. If wrongdoing occurs from the fifth generation, whose fault is that? “What’s a disciplinary action? You’re gonna put your line robot…in a timeout and force them to do 10 hours of mandatory compliance training?”

I want to point out that blue chip consulting is a soft skill business. The vaunted analytics and other parade float decorations come from Excel, third parties, or recent hires doing the equivalent of college research.

Fortune points to Deloitte and says:

The consequences of ignoring the human side of the equation are already visible in the workforce. According to Deloitte’s TrustID report, released in the third quarter, despite increasing access to GenAI in the workplace, overall usage has actually decreased by 15%. Furthermore, a “shadow AI” problem is emerging: 43% of workers with access to GenAI admit to noncompliance, bypassing employer policies to use unapproved tools. This aligns with previous Fortune reporting on the scourge of shadow AI, as surveys show that workers at up to 90% of companies are using AI tools while hiding that usage from their IT departments. Workers say these unauthorized tools are “easier to access” and “better and more accurate” than the approved corporate solutions. This disconnect has led to a collapse in confidence, with corporate worker trust in GenAI declining by 38% between May and July 2025. The data supports this need for a human-centric approach. Workers who received hands-on AI training and workshops reported 144% higher trust in their employer’s AI than those who did not.

Let’s get back to the question: Is Deloitte training its employees in AI so the “information” sticks and then finds its way into engagements? This passage seems to suggest that the answer is, “No for Deloitte. No for its clients. And no for most organizations.” Judge for yourself:

For Briggs [the Deloitte wizard], the message to the C-suite is clear: The technology is ready, but unless leaders shift their focus to the human and cultural transformation, they risk being left with expensive technology that no one trusts enough to use.

My take is that the blue chip consulting firms are:

- Trying to make AI good enough so headcount and other cost savings like health care can be reduced

- Selling AI consulting to their clients before knowing what will and won’t work in a context different from the consulting firms’

- Developing an understanding that AI cannot do what humans can do; that is, build relationships and sell engagements.

Sort of a pickle.

Stephen E Arnold, December 23, 2025

How to Get a Job in the Age of AI?

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


Two interesting employment related articles appeared in my newsfeeds this morning. Let’s take a quick look at each. I will try to add some humor to these write ups. Some may find them downright gloomy.

The first is “An OpenAI Exec Identifies 3 Jobs on the Cusp of Being Automated.” I want to point out that the OpenAI wizard’s own job seems to be secure from his point of view. The write up points out:

Olivier Godement, the head of product for business products at the ChatGPT maker, shared why he thinks a trio of jobs — in life sciences, customer service, and computer engineering — is on the cusp of automation.

Let’s think about each of these broad categories. I am not sure what life sciences means in OpenAI world. The term is like a giant umbrella. Customer service makes some sense. Companies have been trying for years to ignore, terminate, and prevent any money-sucking operation related to answering customers’ questions and complaints. No matter how lousy an AI model is, my hunch is that it will be slapped into a customer service role even if it is arguably worse than trying to understand the accent of a person who speaks English as a second or third language.

Young members of “leadership” realize that the AI system used to replace lower-level workers has taken their jobs. Selling crafts on Etsy.com is a career option. Plus, there is politics and maybe Epstein, Epstein, Epstein related careers for some. Thanks, Qwen, you just output a good enough image but you are free at this time (December 13, 2025).

Now we come to computer engineering. I assume the OpenAI person will position himself as an AI adept, which fits under the umbrella of computer engineering. My hunch is that the reference is to coders who do grunt work. The only problem is that the large language model approach to pumping out software can be problematic in some situations. That’s why the OpenAI person is probably not worrying about his job. An informed human has to be involved in the process of producing machine-generated code. LLMs do make errors. If the software is autogenerated for one of those newfangled portable nuclear reactors designed to power football field sized data centers, someone will want to have a human check that software. Traditional or next generation nuclear reactors can create some excitement if the software makes errors. Do you want a thorium reactor next to your domicile? What about one run entirely by smart software?

What’s amusing about this write up is that the OpenAI person seems blissfully unaware of the precarious financial situation that Sam AI-Man has created. When and if OpenAI experiences a financial hiccup, will those involved in business products keep their jobs? Olivier might want to consider that eventuality. Some investors are thinking about their options for Sam AI-Man related activities.

The second write up is the type I absolutely get a visceral thrill writing about. A person with a connection (probably accidental or tenuous) lets me trot out my favorite trope (Epstein, Epstein, Epstein) as a way to capture the peculiarity of modern America. This article is “Bill Gates Predicts That Only Three Jobs Will Be Safe from Being Replaced by AI.” My immediate assumption upon spotting the article was that the type of work Epstein, Epstein, Epstein did would not be replaced by smart software. I think that impression is accurate, but, alas, the write up did not include Epstein, Epstein, Epstein work in its story.

What are the safe jobs? The write up identifies three:

- Biology. Remember OpenAI thinks life sciences are toast. Okay, which is correct?

- Energy expertise

- Work that requires creative and intuitive thinking. (Do you think that this category embraces Epstein, Epstein, Epstein work? I am not sure.)

The write up includes a statement from Bill Gates:

“You know, like baseball. We won’t want to watch computers play baseball,” he said. “So there’ll be some things that we reserve for ourselves, but in terms of making things and moving things, and growing food, over time, those will be basically solved problems.”

Several observations:

- AI will cause many people to lose their jobs

- Young people will have to make knick knacks to sell on Etsy or find equally creative ways of supporting themselves

- The assumption that people will have “regular” jobs, buy houses, go on vacations, and do the other stuff that organization-man thinking assumed was operative is a goner.

Where’s the humor in this? Epstein, Epstein, Epstein and OpenAI debt, OpenAI debt, and OpenAI debt. Ho ho ho.

Stephen E Arnold, December 23, 2025

Telegram News: AlphaTON, About Face

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


Starting in January 2026, my team and I will be writing about Telegram’s Cocoon, the firm’s artificial intelligence push. Unlike the “borrow, buy, hype, and promise” approach of some US firms, Telegram is going in a different direction. For Telegram, it is early days for smart software. The impact will be that posts in Beyond Search will decrease beginning Christmas week. The new Telegram News posts will be on a different URL or service. Our preliminary tests show that a different approach won’t make much difference to the Arnold IT team. Frankly, I am not sure how people will find the new service. I will post the links on Beyond Search, but with the exceptional indexing available from Bing, Google, et al, I have zero clue if these services will find our Telegram Notes.

Why am I making this shift?

Here’s one example. With a bit of fancy footwork, a publicly traded company popped into existence a couple of months ago. Telegram itself does not appear to have any connection to this outfit. However, the TON Foundation’s former president set up an outfit called the TON Strategy Co., which is listed on the US NASDAQ. Then following a similar playbook, AlphaTON popped up to provide those who believe in TONcoin a way to invest in a financial firm anchored to TONcoin. Yeah, I know that having these two public companies semi-linked to Telegram’s TON Foundation is interesting.

But even more fascinating is the news story about AlphaTON using some financial fancy dancing to link itself to Anduril. This is one of the companies familiar to those who keep track of certain Silicon Valley outfits generating revenue from Department of War contracts.

What’s the news?

The deal is off, according to “AlphaTON Capital Corp Issues Clarification on Anduril Industries Investment Program.” The word clarification is not one I would have chosen. The deal has vaporized. The write up says:

It has now come to the Company’s attention that the Anduril Industries common stock underlying the economic exposure that was contractually offered to our Company is subject to transfer restrictions and that Anduril will not consent to any such transfer. Due to these material limitations and risk on ownership and transferability, AlphaTON has made the decision to cancel the Anduril tokenized investment program and will not be proceeding with the transaction. The Company remains committed to strategic investments and the tokenization of desirable assets that provide clear ownership rights and align with shareholder value creation objectives.

I interpret this passage to mean, “Fire, Aim, Ready Maybe.”

With AlphaTON Capital’s stock trading at about $0.70 at 11:30 am US Eastern on December 18, 2025, this fancy dancing may end this set with a snappy rendition of Mozart’s Requiem.

That’s why Telegram Notes will be an interesting organization to follow. We think Pavel Durov’s trial in France, the two or maybe one surviving public company, two “foundations” linked to Telegram, and the new Cocoon AI play are going to be more interesting. If Mr. Durov goes to jail, the public company plays fail, and the Cocoon thing dies before it becomes a digital butterfly, I may flow more stories to Beyond Search.

Stay tuned.

Stephen E Arnold, December 22, 2025

How Do You Get Numbers for Copilot? Microsoft Has a Good Idea

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


In the past couple of days, I tested some of the latest and greatest from the big tech outfits destined to control information flow. I uploaded text to Gemini, asked it a question answered in the text, and it spit out the incorrect answer. Score one for the Googlers. Then I selected an output from ChatGPT and asked it to determine who was really innovating in a very, very narrow online market space. ChatGPT did not disappoint. It just made up a non-existent person. Okay, Sam AI-Man, I think you and Microsoft need to do some engineering.

Could a TV maker charge users to uninstall a high value service like Copilot? Could Microsoft make the uninstall app available for a fee via its online software store? Could both the TV maker and Microsoft just ignore the howls of the demented few who don’t love Copilot? Yeah, I go with ignore. Thanks, Venice.ai. Good enough.

And what did Microsoft do with its Copilot online service? According to Engadget, “LG quietly added an unremovable Microsoft Copilot app to TVs.” The write up reports:

Several LG smart TV owners have taken to Reddit over the past few days to complain that they suddenly have a Copilot app on the device

But Microsoft has a seductive way about its dealings. Engadget points out:

[LG TV owners] cannot uninstall it.

Let’s think about this. Most smart TVs come with baloney applications that are highly valuable to the TV maker. These can be uninstalled if one takes the time. I don’t watch TV very much, so I just leave the set the way it was. I routinely ignore pleas to update the software. I listen, so I don’t care if weird reminders obscure the visuals.

The Engadget article states:

LG said during the 2025 CES season that it would have a Copilot-powered AI Search in its next wave of TV models, but putting in a permanent AI fixture is sure to leave a bad taste in many customers’ mouths, particularly since Copilot hasn’t been particularly popular among people using AI assistants.

Okay, Microsoft has a vision for itself. It wants to be the AI operating system just as Google and other companies desire. Microsoft has been a bit pushy. I suppose I would come up with ideas that build “numbers” and provide fodder for the Microsoft publicity machine. If I picture myself in a meeting at Microsoft (where I have been, but that was years ago), I would reason this way:

- We need numbers.

- Why not pay a TV outfit to install Copilot?

- Then either pay more or provide some inducements to our TV partner to make Copilot permanent; that is, the TV owner has no choice.

The pushback for this hypothetical suggestion would be:

- How much?

- How many for sure?

- How much consumer backlash?

I further hypothesize that I would say:

- We float some trial balloon numbers and go from there.

- We focus on high end models because those people are more likely to be willing to pay for additional Microsoft services.

- Who cares about consumer backlash? These are TVs and we are cloud and AI people.

Obviously my hypothetical suggestion or something similar to it took place at Microsoft. Then LG saw the light or, more likely, the check with some big numbers imprinted on it, and the deal was done.

The painful reality of consumer-facing services is that something like 95 percent of consumers do not change the defaults. Making something impossible to uninstall will not even register as a problem for most consumers.

Therefore, the logic of the LG play is rock solid. Microsoft can add the LG TVs with Copilot to its confirmed Copilot user numbers. Win.

Microsoft is not in the TV business, so this is just advertising. Win.

Microsoft is not a consumer product company like a TV set company. Win.

As a result, the lack of an uninstall option makes sense. If a lawyer or some other important entity complains, making Copilot something a user can remove eliminates the problem.

Love those LGs. Next up: microwaves, freezers, smart lights, and possibly electric blankets. Numbers are important. Users demonstrate proof that Microsoft is on the right path.

But what about revenue from Copilot? No problem. Raise the cost of other services. Charging Outlook users per message seems like an idea worth pursuing. My hypothetical self would argue for this type of toll or taxi meter approach. A per pixel charge in Paint seems plausible as well.

The reality is that I believe LG will backtrack. Does it need the grief?

Stephen E Arnold, December 22, 2025

Modern Management Method with and without Smart Software

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I enjoy reading and thinking about business case studies. The good ones are few and far between. Most are predictable, almost as if the author were relying on a large language model for help.

“I’m a Tech Lead, and Nobody Listens to Me. What Should I Do?” is an example of a bright human hitting on tactics to become more effective in his job. You can work through the full text of the article and dig out the gems that may apply to you. I want to focus on two points in the write up. The first is the matrix management diagram based on or attributed to Spotify, a music outfit. The second is a method for gaining influence in a modern, let’s go fast company.

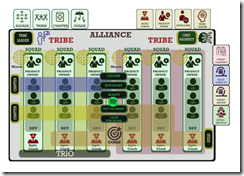

Here’s the diagram that caught my attention:

Instead of the usual business school lingo, you will notice “alliance,” “tribe,” “squad,” and “trio.” I am not sure what these jazzy words mean, but I want to ask you a question, “Looking at this matrix, who is responsible when a problem occurs?” Take your time. I did spend some time looking at this chart, and I formulated several hypotheses:

- The creator wanted to make sure that a member of leadership would have a tough time figuring out who screwed up. If you disagree, that’s okay. I am a dinobaby, and I like those old fashioned flow diagrams with arrows and boxes. In those boxes is the name of the person who has to fix a problem. I don’t know about one’s tribe. I know Problem A is here. Person B is going to fix it. Simple.

- The matrix as displayed allows a lot of people to blame other people. For example, what if the coach is like the leader of the Cleveland Browns, who brilliantly equipped a young quarterback with the incorrect game plan for the first quarter of a football game? Do we blame the coach or do we chase down a product owner? What if the problem is a result of a dependency screw-up involving another squad in a different tribe? In practical terms, there is no one with direct responsibility for the problem. Again: Don’t agree? That’s okay.

- The matrix has weird “leadership” or “employment categories” distributed across the X axis at the top of the chart. What’s a chapter? What’s an alliance? What’s self organized and autonomous in a complex technical system? My view is that this is pure baloney designed to make people feel important yet shield any one person from responsibility. I bet some people reading this numbered point will find my statement out of line. Tough.

The diagram makes clear that the organization is presented as one that will just muddle forward. No one will have responsibility when a problem occurs? No one will know how to fix the problem without dropping other work and reverse engineering what is happening. The chart almost guarantees bafflement when a problem surfaces.

The second item I noticed was this statement or “learning” from the individual who presented the case example. Here’s the passage:

When you solve a real problem and make it visible, people join in. Trust is also built that way, by inviting others to improve what you started and celebrating when they do it better than you.

This passage hooks into the one about solving a problem; to wit:

Helping people debug. I have never considered myself especially smart, but I have always been very systematic when connecting error messages, code, hypotheses, and system behavior. To my surprise, many people saw this as almost magical. It was not magic. It was a mix of experience, fundamentals, intuition, knowing where to look, and not being afraid to dive into third-party library code.

These two passages describe human interactions. Working with others can result in a collective effort greater than the sum of its parts. It is a human manifestation. One fellow described this as interaction efflorescence. Fancy words for what happens when a few people face a deadline and severe consequences for failure.

Why did I spend time pointing out an organizational structure purpose built to prevent assigning responsibility and the very human observations of the case study author?

The answer is, “What will happen when smart software is tossed into this management structure?” First, people will be fired. The matrix will have lots of empty boxes. Second, the human interaction will have to adapt to the smart software. The smart software is not going to adapt to humans. Don’t believe me? One smart software company defended itself by telling a court, in effect, that its terms of service say suicide is not permissible. Therefore, the company is not responsible. The dead kid violated the TOS.

How functional will the company be as the very human insight about solving real problems interfaces with software? Man machine interface? Will that be an issue in a go fast outfit? Nope. The human will be excised as a consequence of efficiency.

Stephen E Arnold, December 23, 2025

Poor Meta! Allegations about Accepting Scam Advertising

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

That well managed, forward leaning, AI and goggle centric company is in the news again. This time the focus is advertising that is scammy. “Mark Zuckerberg’s Meta Allows Rampant Scam Ads from China While Raking in Billions, Explosive Report Says” states:

According to an investigation by Reuters, Meta earned more than $3 billion in China last year through scam ads for illegal gambling, pornography, and other inappropriate content. That figure represents nearly 19 percent of the company’s $18 billion in total ad revenue from China during the same period. Reuters had previously reported that 10 percent of Meta’s global revenue came from fraudulent ads.

The write up makes a pointed statement:

The investigation suggests Meta knew about the scale of the ad fraud problem on its platforms, but chose not to act because it would have affected revenue.

Guess what happens when senior managers at a large social media outfit pay little attention to what happens around them? Thanks, ChatGPT, good enough.

Let’s assume that the allegations are accurate and verifiable. The question is, “Why did Meta take in billions from scam ads?” My view is that there were several reasons:

- Revenue

- Figuring out what is and is not “spammy” is expensive. Spam may be like the judge’s comment years ago, “I will know it when I see it.” Different people have different perceptions.

- Changing the ad sales incentive programs is tricky, time consuming, and expensive.

Based on my limited understanding of how managerial decisions are made at Meta, the logical decision is simple: Someone may have said, “Hey, keep doing it until someone makes us stop.”

Why would a very large company adopt this hypothetical response to spammy ads?

My hunch is that management looked the other way. Revenue is important.

Stephen E Arnold, December 19, 2025