Trust: Some in the European Union Do Not Believe the Google. Gee, Why?

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Google’s Ad Tech Dominance Spurs More Antitrust Charges, Report Says.” The write up seems to say that some EU regulators do not trust the Google. Trust is a popular word at the alleged monopoly. Yep, trust is what makes Google’s smart software so darned good.

A lawyer for a high-tech outfit in the ad game says, “Commissioner, thank you for the question. You can trust my client. We adhere to the highest standards of ethical behavior. We put our customers first. We are the embodiment of ethical behavior. We use advanced technology to enhance everyone’s experience with our systems.” The rotund lawyer is a confection generated by MidJourney, an example of, in this case, pretty smart software.

The write up says:

These latest charges come after Google spent years battling and frequently bending to the EU on antitrust complaints. Seeming to get bigger and bigger every year, Google has faced billions in antitrust fines since 2017, following EU challenges probing Google’s search monopoly, Android licensing, Shopping integration with search, and bundling of its advertising platform with its custom search engine program.

The article makes an interesting point, almost as an afterthought:

…Google’s ad revenue has continued increasing, even as online advertising competition has become much stiffer…

The article does not ask this question, “Why is Google making more money when scrutiny and restrictions are ramping up?”

From my vantage point in the old age “home” in rural Kentucky, I certainly have zero useful data about this interesting situation, assuming that it is true of course. But, for the nonce, let’s speculate, shall we?

Possibility A: Google is a monopoly and makes money no matter what laws, rules, and policies are articulated. Game is now in extra time. Could the referee be bent?

This idea is simple. Google’s control of ad inventory, ad options, and ad channels is just a good, old-fashioned system monopoly. Maybe TikTok and Facebook offer options, but even with those channels, Google offers options. Who can resist this pitch: “Buy from us, not the Chinese. Or, buy from us, not the metaverse guy.”

Possibility B: Google advertising is addictive and maybe instinctual. Mice never learn and just repeat their behaviors.

Once there is a cheese payoff, mice learn, and in some wild and non-reproducible experiments, they inherit their parents’ prior learning. Wow. Genetics dictate the use of Google advertising by people who are hard-wired to be Googley.

Possibility C: Google’s home base does not regulate the company in a meaningful way.

The result is an advanced and hardened technology which is better, faster, and maybe cheaper than other options. How can the EU, with its squabbling “union”, hope to compete with what is weaponized content delivery built on a smart, adaptive global system? The answer is, “It can’t.”

Net net: After a quarter century, what’s more organized for action, a regulatory entity or the Google? I bet you know the answer, don’t you?

Stephen E Arnold, June xx, 2023

Sam AI-man Speak: What I Meant about India Was… Really, Really Positive

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have noted Sam AI-man of OpenAI and his way with words. I called attention to an article which quoted him as suggesting that India would be forever chasing the Usain Bolt of smart software. Who is that? you may ask. The answer is, Sam AI-man.

MidJourney’s incredible insight engine generated an image of a young, impatient businessman getting a robot to write his next speech. Good move, young businessman. Go with regressing to the norm and recycling truisms.

The remarkable explainer appears in “Unacademy CEO Responds To Sam Altman’s Hopeless Remark; Says Accept The Reality.” Here’s the statement I noted:

Following the initial response, Altman clarified his remarks, stating that they were taken out of context. He emphasized that his comments were specifically focused on the challenge of competing with OpenAI using a mere $10 million investment. Altman clarified that his intention was to highlight the difficulty of attempting to rival OpenAI under such constrained financial circumstances. By providing this clarification, he aimed to address any misconceptions that may have arisen from his earlier statement.

To see the original “hopeless” remark, navigate to this link.

Sam AI-man is an icon. My hunch is that his public statements have most people in awe, maybe breathless. But India as hopeless in smart software? Just not too swift. Why not let ChatGPT craft one’s public statements? Those answers are usually quite diplomatic, even if wrong or wonky sometimes.

Stephen E Arnold, June 13, 2023

Sam AI-man: India Is Hopeless When It Comes to AI. What, Sam? Hopeless!

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb-2.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Sam Altman (known to me and my research team as Sam AI-man) comes up with interesting statements. I am not sure if Sam AI-man crafts them himself or if his utterances are the work of ChatGPT or a well-paid, carefully groomed publicist. I don’t think it matters. I just find his statements interesting examples of worshipped tech leader speak.

MidJourney presents an image of a young Sam AI-man explaining to one of his mentors that he is hopeless. Sam AI-man has been riding this particular pony named arrogance since he was a wee lad. At least that’s what I take away from the machine generated illustration. Your interpretation may be different. Sam AI is just being helpful.

Navigate to “Sam Altman Calls India Building ChatGPT-Like Tool Hopeless. Tech Mahindra CEO Says Challenge Accepted.” The write up reports that a former Google wizard asked Sam AI-man about India’s ability to craft its own smart software, an equivalent to OpenAI. Sam AI-man replied in true Silicon Valley style:

“The way this works is we’re going to tell you, it’s totally hopeless to compete with us on training foundation models you shouldn’t try, and it’s your job to like try anyway. And I believe both of those things. I think it is pretty hopeless,” Altman said, in reply.

That’s a sporty answer. Sam AI-man may have a future working as an ambassador or as a negotiator at The Hague for the exciting war crimes trials bound to come.

I would suggest that Sam AI-man prepare for this new role by gathering basic information to answer these questions:

- Why are so many of India’s best and brightest generating math tutorials on YouTube which describe computational tricks which are insightful, not usually taught in Palo Alto high schools, and relevant to smart software math?

- How many mathematicians are generated in India each graduation cycle? How many does the US produce in the same time period? (Include India’s nationals studying in US universities and graduating with their cohort.)

- How many Srinivasa Ramanujans are chugging along in India’s mathy environment? How many are projected to come along in the next five years?

- How many Indian nationals work on smart software at Facebook, Google, Microsoft, and OpenAI and similar firms at this time?

- What open source tools are available to Indian mathematicians to use as a launch pad for smart software frameworks and systems?

My thought is that “pretty hopeless” is a very Sam AI-man phrase. It captures the essence of arrogance, cultural insensitivity, and bluntness that makes Silicon Valley prose so memorable.

Congrats, Sam AI-man. Great insight. Classy too if the write up is “real news” and not generated by ChatGPT.

Stephen E Arnold, June 12, 2023

Handwaving at Light Speed: Control Smart Software Now!

June 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb-4.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Here is an easy one: Vox ponders, “What Will Stop AI from Flooding the Internet with Fake Images?” “Nothing” is the obvious answer. Nevertheless, tech companies are making a show of making an effort. Writer Shirin Ghaffary begins by recalling the recent kerfuffle caused by a realistic but fake photo of a Pentagon explosion. The spoof even affected the stock market, though briefly. We are poised to see many more AI-created images swamp the Internet, and they won’t all be so easily fact checked. The article explains:

“This isn’t an entirely new problem. Online misinformation has existed since the dawn of the internet, and crudely photoshopped images fooled people long before generative AI became mainstream. But recently, tools like ChatGPT, DALL-E, Midjourney, and even new AI feature updates to Photoshop have supercharged the issue by making it easier and cheaper to create hyper realistic fake images, video, and text, at scale. Experts say we can expect to see more fake images like the Pentagon one, especially when they can cause political disruption. One report by Europol, the European Union’s law enforcement agency, predicted that as much as 90 percent of content on the internet could be created or edited by AI by 2026. Already, spammy news sites seemingly generated entirely by AI are popping up. The anti-misinformation platform NewsGuard started tracking such sites and found nearly three times as many as they did a few weeks prior.”

Several ideas are being explored. One is to tag AI-generated images with watermarks, metadata, and disclosure labels, but of course those can be altered or removed. Then there is the tool from Adobe that tracks whether images are edited by AI, tagging each with “content credentials” that supposedly stick with a file forever. Another is to approach from the other direction and stamp content that has been verified as real. The Coalition for Content Provenance and Authenticity (C2PA) has created a specification for this purpose.
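To make the “stamp what is verified as real” idea concrete, here is a minimal Python sketch. It is not C2PA and not Adobe’s content credentials: those rely on public-key certificates and signed manifests, while this toy uses a shared-secret HMAC from the standard library. The key and the `stamp` and `check` helpers are hypothetical, invented for illustration.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical publisher secret. Real provenance schemes such as C2PA rely on
# public-key certificates and signed manifests; this shared-secret HMAC only
# illustrates the "verified as real" idea.
PUBLISHER_KEY = b"demo-key-not-for-production"


def stamp(content: bytes) -> str:
    """Return a tag bound to these exact bytes and the publisher key."""
    return hmac.new(PUBLISHER_KEY, content, hashlib.sha256).hexdigest()


def check(content: bytes, tag: Optional[str]) -> str:
    """Classify content as 'verified', 'tampered', or 'unverified'."""
    if tag is None:
        # Stamp stripped or never applied: the check says nothing either way.
        return "unverified"
    if hmac.compare_digest(stamp(content), tag):
        return "verified"
    return "tampered"


if __name__ == "__main__":
    photo = b"\x89PNG...pretend pixel data..."
    tag = stamp(photo)
    print(check(photo, tag))            # verified
    print(check(photo + b"edit", tag))  # tampered
    print(check(photo, None))           # unverified
```

The weak spot is the third case: dropping the tag turns a stamped photo into an unverified one, not an obviously fake one, and the viewer still has to care enough to look.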

But even if bad actors could not find ways around such measures, and they can, will audiences care? So far it looks like that is a big no. We already knew confirmation bias trumps facts for many. Watermarks and authenticity seals will hold little sway for those already inclined to take what their filter bubbles feed them at face value.

Cynthia Murrell, June 13, 2023

Two Polemics about the Same Thing: Info Control

June 12, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb-5.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Polemics are fun. The term, as I use it, means:

a speech or piece of writing expressing a strongly critical attack on or controversial opinion about someone or something.

I took the definition from Google’s presentation of the “Oxford Languages.” I am not sure what that means, but since we are considering two polemics, the definition is close enough for horseshoes. Furthermore, polemics are not into facts, verifiable assertions, or hard data. I think of polemics as blog posts by individuals whom some might consider fanatics, apologists, crusaders, or zealots.

Ah, you don’t agree? Tough noogies, gentle reader.

The first document I read and fed into Browserling’s free word frequency tool was Marc Andreessen’s delightful “Why AI Will Save the World.” The document has a repetitive contents listing, which some readers may find useful. For me, the effort to stay on track added duplicate words.

The second document I read and stuffed into the Browserling tool was the entertaining and, in my opinion, fluffy Areopagitica, made available by Dartmouth.

The mechanics of the analysis were simple. I compared the frequency of words which I find indicative of a specific rhetorical intent. Mr. Andreessen is probably better known to modern readers than John Milton. Mr. Andreessen’s contribution to polemic literature is arguably more readable. There’s the clumsy organization impedimenta. There are shorter sentences. There are what I would describe as Silicon Valley words. Furthermore, based on Bing, Google, and Yandex searches for the text of the document, one can find Mr. Andreessen’s contribution to the canon in more places than John Milton’s lame effort. I want to point out that Mr. Milton’s polemic is considerably longer than Mr. Andreessen’s. I did what most careless analysts would do: I took the full text of Mr. Andreessen’s screed and snagged the first 8000 words of Mr. Milton’s writing, a work known to bring tears to the eyes of first year college students asked to read the prose and write an analytic essay about Areopagitica in 500 words. Good training for a debate student, a future lawyer, or a person who wants to write for Reader’s Digest magazine, I believe.
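For readers who want to rerun the comparison without a Web tool, here is a minimal Python sketch. It assumes a simple case-insensitive tokenizer that treats runs of ASCII letters as words; Browserling’s actual counting rules are not documented here and may differ, so the sketch will not necessarily reproduce the counts in the table. The helper names are mine, not Browserling’s.

```python
import re
from collections import Counter

# The 13 words tracked in the comparison, lowercased.
TRACKED = ["ai", "all", "ethics", "every", "everyone", "everything",
           "everywhere", "infinitely", "moral", "morality", "obviously",
           "should", "would"]


def tokenize(text: str) -> list:
    """Lowercase the text and return runs of ASCII letters as words (an assumption)."""
    return re.findall(r"[a-z]+", text.lower())


def first_n_words(text: str, n: int = 8000) -> list:
    """Mimic the 'first 8000 words' truncation applied to Milton's text."""
    return tokenize(text)[:n]


def tracked_counts(words: list) -> dict:
    """Count only the tracked words; words that never appear get 0."""
    counts = Counter(words)
    return {w: counts[w] for w in TRACKED}


if __name__ == "__main__":
    sample = "All AI should help everyone. All of it, obviously."
    counts = tracked_counts(tokenize(sample))
    print(counts["all"], counts["ai"], counts["every"])  # 2 1 0
```

One caveat the sketch makes visible: raw counts from a full essay and an 8,000-word excerpt are not directly comparable. Dividing each count by `len(words)` gives a per-word rate.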

So what did I find?

First, both Mr. Andreessen and Mr. Milton needed to speak out for their ideas. Mr. Andreessen is an advocate of smart software. Mr. Milton wanted a censorship free approach to publishing. Both assumed that “they” or people not on their wave length needed convincing about the importance of their ideas. It is safe to say that the audiences for these two polemics are not clued into the subject. Mr. Andreessen is speaking to those who are jazzed on smart software, neglecting to point out that smart software is pretty common in the online advertising sector. Mr. Milton assumed that censorship was a new threat, electing to ignore that religious authorities, educational institutions, and publishers were happily censoring information 24×7. But that’s the world of polemicists.

Second, what about the words used by each author? Since this is written for my personal blog, I will boil down my findings to a handful of words.

The table below presents 13 selected words and a count of each:

| Word | Andreessen (full text) | Milton (first 8,000 words) |
|---|---|---|
| AI | 157 | 0 |
| All | 34 | 54 |
| Ethics | 1 | 0 |
| Every | 20 | 8 |
| Everyone | 7 | 0 |
| Everything | 6 | 0 |
| Everywhere | 4 | 0 |
| Infinitely | 9 | 0 |
| Moral | 9 | 0 |
| Morality | 2 | 0 |
| Obviously | 4 | 0 |
| Should | 23 | 22 |
| Would | 21 | 10 |

Several observations:

- Messrs. Andreessen and Milton share an absolutist approach. The word “all” figures prominently in both polemics.

- Mr. Andreessen uses “every” words to make clear that AI is applicable to just about anything one cares to name. Logical? Hey, these are polemics. The logic is internal.

- Messrs. Andreessen and Milton share a fondness for adulting. Note the frequency of “should” and “would.”

- Mr. Andreessen has an interest in ethical and moral behavior. Mr. Milton writes around these notions.

Net net: Polemics are designed as marketing collateral. Mr. Andreessen is marketing, as is Mr. Milton. Which pitch is better? The answer depends on the criteria one uses to judge polemics. I give the nod to Mr. Milton. His polemic is longer, has freight-train-scale sentences, and is, for a modern college freshman, almost unreadable. Mr. Andreessen’s polemic is sportier. It’s about smart software, not censorship directly. However, both polemics boil down to who has his or her hands on the content levers.

Stephen E Arnold, June 12, 2023

Bad News for Humanoids: AI Writes Better Pitch Decks But KFC Is Hiring

June 12, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-15.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Who would have envisioned a time when MBAs with undergraduate finance degrees would be given an opportunity to work at a Kentucky Fried Chicken store? What was the slogan about fingers? I can’t remember.

“If You’re Thinking about Writing Your Own Pitch Decks, Think Again” provides some interesting information. I assume that today’s version of Henry Robinson Luce’s flagship magazine (not the Sports Illustrated swimsuit edition) would shatter the work life of those who create pitch decks. A “pitch deck” is a sonnet for our digital era. The phrase is often associated with a group of PowerPoint slides designed to get a funding source to write a check. That use case, however, is not the only place where pitch decks come into play: Academics use them when trying to explain why a research project deserves funding. Ad agencies craft them to win client work or, in some cases, to convince a client to not fire the creative team. (Hello, Bud Light advisors, are you paying attention?) Real estate professionals create them to show to high net worth individuals. The objective is to close a deal for one of those bizarro vacant mansions shown by YouTube explorers. See, for instance, this white elephant lovingly presented by Dark Explorations. And there are more pitch deck applications. That’s why the phrase “Death by PowerPoint is real” is semi-poignant.

What if a pitch deck could be made better? What if pitch decks could be produced quickly? What if pitch decks could be graphically enhanced without fooling around with Fiverr.com artists in Armenia or the professionals with orange and blue hair?

The Fortune article states:

The study [funded by Clarify Capital] revealed that machine-generated pitch decks consistently outperformed their human counterparts in terms of quality, thoroughness, and clarity. A staggering 80% of respondents found the GPT-4 decks compelling, while only 39% felt the same way about the human-created decks. [Emphasis added]

The cited article continues:

What’s more, GPT-4-presented ventures were twice as convincing to investors and business owners compared to those backed by human-made pitch decks. In an even more astonishing revelation, GPT-4 proved to be more successful in securing funding in the creative industries than in the tech industry, defying assumptions that machine learning could not match human creativity due to its lack of life experience and emotions. [Emphasis added]

“Would you like regular or crispy?” asks the MBA who wants to write pitch decks for a VC firm whose managing director his father knows. The image emerged from the murky math of MidJourney. Better, faster, and cheaper than a contractor, I might add.

Here’s a link to the KFC.com Web site. Smart software works better, faster, and cheaper. But it has a drawback: At this time, the KFC professional is needed to put those thighs in the fryer.

Stephen E Arnold, June 12, 2023

Moral Decline? Nah, Just Your Perception at Work

June 12, 2023

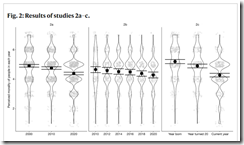

Here’s a graph from the academic paper “The Illusion of Moral Decline.”

Is it even necessary to read the complete paper after studying the illustration? Of course not. Nevertheless, let’s look at a couple of statements in the write up to get ready for that in-class, blank bluebook semester examination, shall we?

Statement 1 from the write up:

… objective indicators of immorality have decreased significantly over the last few centuries.

Well, there you go. That’s clear. Imagine what life was like before modern day morality kicked in.

Statement 2 from the write up:

… we suggest that one of them has to do with the fact that when two well-established psychological phenomena work in tandem, they can produce an illusion of moral decline.

Okay. Illusion. This morning I drove past people sleeping under an overpass. A police vehicle with lights and siren blaring raced past me as I drove to the gym (a gym which is no longer open 24×7 due to safety concerns). I listened to a report about people struggling amidst the flood water in Ukraine. In short, a typical morning in rural Kentucky. Oh, I forgot to mention the gunfire I could hear as I walked my dog at a local park. I hope it was squirrel hunters, but in this area, who knows?

MidJourney created this illustration of the paper’s authors celebrating the publication of their study about the illusion of immorality. The behavior is a manifestation of morality itself, and it is a testament to the importance of crystal clear graphs.

Statement 3 from the write up:

Participants in the foregoing studies believed that morality has declined, and they believed this in every decade and in every nation we studied….About all these things, they were almost certainly mistaken.

My take on the study includes these perceptions (yours hopefully will be more informed than mine):

- The influence of social media gets slight attention

- Large-scale immoral actions get little attention. I am tempted to list examples, but I am afraid of legal eagles and aggrieved academics with time on their hands.

- The impact of intentionally weaponized information on behavior in the US and in other nation states which provide an infrastructure suitable to permit wide use of digitally-enabled content gets little attention.

In order to avoid problems, I will list some common and proper nouns or phrases and invite you to think about these in terms of the glory word “morality.” Have fun with your mental gymnastics:

- Catholic priests and children

- Covid information and pharmaceutical companies

- Epstein, Andrew, and MIT

- Special operation and elementary school children

- Sudan and minerals

- US politicians’ campaign promises.

Wasn’t that fun? I did not have to mention social media, self harm, people between the ages of 10 and 16, and statements like “Senator, thank you for that question…”

I would not do well with a written test watched by attentive journal authors. By the way, isn’t perception reality?

Stephen E Arnold, June 12, 2023

Google: FUD Embedded in the Glacier Strategy

June 9, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-12.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Fly to Alaska. Stand on a glacier and let the guide explain that the glacier moves, just slowly. That’s the Google smart software strategy in a nutshell, even under Code Red or Red Alert or “My goodness, Microsoft is getting media attention for something other than lousy code and security services. We have to do something sort of quickly.”

One facet of the game plan is to roll out a bit of FUD or fear, uncertainty, and doubt. That will send chills to some interesting places, won’t it? You can see this in action in the article “Exclusive: Google Lays Out Its Vision for Securing AI.” Feel the fear because AI will kill humanoids unless… unless you rely on Googzilla. This is the only creature capable of stopping the evil that irresponsible smart software will unleash upon you, everyone, maybe your dog too.

The manager of strategy says, “I think the fireball of AI security doom is going to smash us.” The top dog says, “I know. Google will save us.” Note to image trolls: This outstanding illustration was generated in a nonce by MidJourney, not an under-compensated creator in Peru.

The write up says:

Google has a new plan to help organizations apply basic security controls to their artificial intelligence systems and protect them from a new wave of cyber threats.

Note the word “plan”; that is, the here and now equivalent of vaporware or stuff that can be written about and issued as “real news.” The guts of the Google PR is that Google has six easy steps for its valued users to take. Each step brings that user closer to the thumping heart of Googzilla; to wit:

- Assess what existing security controls can be easily extended to new AI systems, such as data encryption;

- Expand existing threat intelligence research to also include specific threats targeting AI systems;

- Adopt automation into the company’s cyber defenses to quickly respond to any anomalous activity targeting AI systems;

- Conduct regular reviews of the security measures in place around AI models;

- Constantly test the security of these AI systems through so-called penetration tests and make changes based on those findings;

- And, lastly, build a team that understands AI-related risks to help figure out where AI risk should sit in an organization’s overall strategy to mitigate business risks.

Does this sound like Mandiant-type consulting backed up by Google’s cloud goodness? It should because when one drinks Google juice, one gains Google powers over evil and also Google’s competitors. Google’s glacier strategy is advancing… slowly.

Stephen E Arnold, June 9, 2023

Microsoft Code: Works Great. Just Like Bing AI

June 9, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-8.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

For Windows users struggling with certain apps, help is not on the way anytime soon. In fact, reports TechRadar, “Windows 11 Is So Broken that Even Microsoft Can’t Fix It.” The issues started popping up for some users of Windows 11 and Windows 10 in January and seem to coincide with damaged registry keys. For now the company’s advice sounds deceptively simple: ditch its buggy software. Not a great look. Writer Matt Hanson tells us:

“On Microsoft’s ‘Health’ webpage regarding the issue, Microsoft notes that the ‘Windows search, and Universal Windows Platform (UWP) apps might not work as expected or might have issues opening,’ and in a recent update it has provided a workaround for the problem. Not only is the lack of a definitive fix disappointing, but the workaround isn’t great, with Microsoft stating that to ‘mitigate this issue, you can uninstall apps which integrate with Windows, Microsoft Office, Microsoft Outlook or Outlook Calendar.’ Essentially, it seems like Microsoft is admitting that it’s as baffled as us by the problem, and that the only way to avoid the issue is to start uninstalling apps. That’s pretty poor, especially as Microsoft doesn’t list the apps that are causing the issue, just that they integrate with ‘Windows, Microsoft Office, Microsoft Outlook or Outlook Calendar,’ which doesn’t narrow it down at all. It’s also not a great solution for people who depend on any of the apps causing the issue, as uninstalling them may not be a viable option.”

The write-up notes Microsoft says it is still working on these issues. Will it release a fix before most users have installed competing programs or, perhaps, even a different OS? Or maybe Windows 11 snafus are just what is needed to distract people from certain issues related to the security of Microsoft’s enterprise software. Will these code faults surface (no pun intended) in Microsoft’s smart software? Of course not. Marketing makes software better.

Cynthia Murrell, June 9, 2023

AI: Immature and a Bit Unpredictable

June 9, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-5.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Writers, artists, programmers, other creative professionals, and workers with potentially automated jobs are worried that AI algorithms are going to replace them. ChatGPT is making headlines for its versatility in automating tasks and writing all manner of web content. While ChatGPT cannot write succinct Shakespearean drama yet, it can draft a decent cover letter. Vice News explains why we do not need to fear the AI apocalypse yet: “Scary ‘Emergent’ AI Abilities Are Just A ‘Mirage’ Produced By Researchers, Stanford Study Says.”

Responsible adults — one works at Google and the other at Microsoft — don’t know what to do with their unhappy baby named AI. The image is a product of the MidJourney system which Getty Images may not find as amusing as I do.

Stanford researchers wrote a paper in which they claim “that so-called “emergent abilities” in AI models—when a large model suddenly displays an ability it ostensibly was not designed to possess—are actually a “mirage” produced by researchers.” Technology leaders, such as Google CEO Sundar Pichai, perpetuate the idea that large language models such as Google Bard are teaching themselves skills not in their initial training programs. For example, Google Bard can translate Bengali, and GPT-4 can solve complex tasks without special assistance. Neither AI had relevant information included in its training data to reference.

When technology leaders tell the public about these AI systems, news outlets automatically perpetuate doomsday scenarios, while businesses want to exploit them for profit. The Stanford study explains that different AI developers measure outcomes differently and also believe smaller AI models are incapable of solving complex problems. The researchers also claim that AI experts make overblown claims, likely for investments or notoriety. The Stanford researchers encourage their brethren to be more realistic:

“The authors conclude the paper by encouraging other researchers to look at tasks and metrics distinctly, consider the metric’s effect on the error rate, and that the better-suited metric may be different from the automated one. The paper also suggests that other researchers take a step back from being overeager about the abilities of large language models. ‘When making claims about capabilities of large models, including proper controls is critical,’ the authors wrote in the paper.”
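The “metric’s effect” point is easier to see with numbers. Below is a toy Python sketch with invented values, not data from the Stanford paper. It assumes per-token accuracy improves smoothly with model size (a made-up logistic curve in log parameter count) and that every token of a 10-token answer must be right, with errors independent. The function names are hypothetical. Under an all-or-nothing exact-match score, a smooth underlying improvement can look like a sudden leap.

```python
import math


def per_token_accuracy(log10_params: float) -> float:
    """Hypothetical smooth gain: a logistic curve in log10(parameter count)."""
    return 1.0 / (1.0 + math.exp(-(log10_params - 8.0)))


def exact_match(p: float, answer_len: int) -> float:
    """Chance all tokens are right, assuming independent per-token errors."""
    return p ** answer_len


if __name__ == "__main__":
    for log10_n in range(7, 13):
        p = per_token_accuracy(log10_n)
        print(f"10^{log10_n} params: per-token {p:.2f}, "
              f"exact match {exact_match(p, 10):.3f}")
```

In this toy, per-token accuracy climbs steadily across the range, while exact match sits near zero at the small end and then rises steeply. Score the same outputs with a per-token or partial-credit metric and the “emergence” flattens out, which is the kind of control the authors ask for.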

It would be awesome if news outlets and technology experts told the world that an AI takeover is still decades away. Will they? Nope, the baby AI wants cash, fame, a clean diaper, and a warm bottle… now.

Whitney Grace, June 9, 2023