Smart Software for Cyber Security Mavens (Good and Bad Mavens)

November 17, 2023

This essay is the work of a dumb humanoid. No smart software required.

One of my research team (who wishes to maintain a low profile) called my attention to the “Awesome GPTs (Agents) for Cybersecurity.” The list on GitHub says:

The "Awesome GPTs (Agents) Repo" represents an initial effort to compile a comprehensive list of GPT agents focused on cybersecurity (offensive and defensive), created by the community. Please note, this repository is a community-driven project and may not list all existing GPT agents in cybersecurity. Contributions are welcome – feel free to add your own creations!

Open source cyber security tools and smart software can be used by good actors to make people safe. The tools can be used by less good actors to create some interesting situations for cyber security professionals, the elderly, and clueless organizations. Thanks, Microsoft Bing. Does MSFT use these tools to keep people safe or unsafe?

When I viewed the list, it contained more than 30 items. Let me highlight three, and invite you to check out the other 30 at the link to the repository:

- The Threat Intel Bot. This is a specialized GPT for advanced persistent threat intelligence.

- The Message Header Analyzer. This dissects email headers for “insights.”

- Hacker Art. The software generates hacker art and nifty profile pictures.
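What does “dissecting” an email header involve? Here is a minimal sketch, assuming nothing more than Python’s standard `email` module and a made-up message. It is not how the listed GPT works; the hosts, addresses, and the `summarize_headers` name are hypothetical, and the code assumes each `Received` header ends with a semicolon followed by a date.

```python
import re
from email import message_from_string
from email.utils import parsedate_to_datetime

# Made-up message for illustration; hosts and addresses are placeholders.
RAW = (
    "Received: from mail.example.org (mail.example.org [192.0.2.10])\n"
    "    by mx.example.com with ESMTP id abc123;\n"
    "    Fri, 17 Nov 2023 09:15:02 -0500\n"
    "Received: from laptop.local (unknown [198.51.100.7])\n"
    "    by mail.example.org with ESMTPSA id xyz789;\n"
    "    Fri, 17 Nov 2023 09:15:00 -0500\n"
    "From: Alice <alice@example.org>\n"
    "To: Bob <bob@example.com>\n"
    "Subject: Quarterly numbers\n"
    "Date: Fri, 17 Nov 2023 09:14:58 -0500\n"
    "\n"
    "Body text.\n"
)


def summarize_headers(raw):
    """Return sender, subject, and the relay path in chronological order."""
    msg = message_from_string(raw)
    hops = []
    # Each server prepends its own Received header, so the newest hop comes first.
    # Reversing the list gives the path the message actually travelled.
    for value in reversed(msg.get_all("Received", [])):
        route, _, stamp = value.rpartition(";")
        by_host = re.search(r"\bby\s+(\S+)", route)
        hops.append({
            "by": by_host.group(1) if by_host else None,
            "time": parsedate_to_datetime(stamp.strip()),
        })
    return {"from": msg["From"], "subject": msg["Subject"], "hops": hops}


info = summarize_headers(RAW)
for hop in info["hops"]:
    print(hop["by"], hop["time"].isoformat())
```

Comparing the timestamps from hop to hop is one way to spot odd delays or a relay that does not belong in the chain.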

Several observations:

- More tools and services will be forthcoming; thus, the list will grow.

- Bad actors and good actors will find software to help them accomplish their objectives.

- A for-fee bundle of these will be assembled and offered for sale, probably on eBay or Etsy. (Too bad fr0gger.)

Useful list!

Stephen E Arnold, November 17, 2023

Open Source Companies: Bet on Expandability and Extendibility

October 12, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-5.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Naturally, a key factor driving adoption of open source software is a need to save money. However, argues Lago co-founder Anh-Tho Chuong, “Open Source Does Not Win by Being Cheaper” than the competition. Not just that, anyway. She writes:

“What we’ve learned is that open-source tools can’t rely on being an open-source alternative to an already successful business. A developer can’t just imitate a product, tag on an MIT license, and call it a day. As awesome as open source is, in a vacuum, it’s not enough to succeed. … [Open-source companies] either need a concrete reason for why they are open source or have to surpass their competitors.”

One caveat: Chuong notes she is speaking of businesses like hers, not sponsored community projects like React, TypeORM, or VSCode. Outfits that need to turn a profit to succeed must offer more than savings to distinguish themselves, she insists. The post notes two specific problems open-source developers should aim to solve: transparency and extensibility. It is important to many companies to know just how their vendors are handling their data (and that of their clients). With closed software one just has to trust information is secure. The transparency of open-source code allows one to verify that it is. The extensibility advantage comes from the passion of community developers for plugins, which are often merged into the open-source main branch. It can be difficult for closed-source engineering teams to compete with the resulting extendibility.

See the write-up for examples of both advantages from the likes of MongoDB, PostHog, and Minio. Chuong concludes:

“Both of the above issues contribute to commercial open-source being a better product in the long run. But by tapping the community for feedback and help, open-source projects can also accelerate past closed-source solutions. … Open-source projects—not just commercial open source—have served as a critical driver for the improvement of products for decades. However, some software is going to remain closed source. It’s just the nature of first-mover advantage. But when transparency and extensibility are an issue, an open-source successor becomes a real threat.”

Cynthia Murrell, October 12, 2023

Python Algorithms? Hello, Excel

September 27, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/09/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-2.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Believe it or not, the T-Mobile WiFi worked on an eight-hour Delta flight from Europe to the Atlanta airport on September 24, 2023. Who knew?

On that flight I came across a page on GitHub called “TheAlgorithms” (sic). I clicked and browsed and was quite impressed with 40 categories and the specific algorithms within each. The “Other” category had two dozen algorithms ranging from a doomsday algorithm to a method to replace flake8 with ruff.

The individual categories include some AI magnets like “Neural Network” and “Machine Learning.” Remember there are more than 35 additional baskets. There’s only one python routine for “Genetic Algorithms” but categories like “Physics” and “Searches” seem particularly useful.

The collection has a disclaimer; to wit:

The algorithms are implemented in Python for education purpose only. These are just for demonstration purpose.
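For a flavor of what such a collection holds, here is a minimal sketch of the doomsday algorithm mentioned above, which works out the day of the week for a Gregorian date. This is my own illustration of Conway’s method, not the repository’s code; the function and table names are assumptions. The last lines cross-check the result against Python’s `datetime` module for the flight date.

```python
import datetime

DAY_NAMES = ["Sunday", "Monday", "Tuesday", "Wednesday",
             "Thursday", "Friday", "Saturday"]

# One easy-to-remember date per month that always falls on the year's "doomsday".
# January and February shift by one day in leap years.
DOOMSDAY_COMMON = [3, 28, 14, 4, 9, 6, 11, 8, 5, 10, 7, 12]
DOOMSDAY_LEAP = [4, 29, 14, 4, 9, 6, 11, 8, 5, 10, 7, 12]


def is_leap(year):
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def day_of_week(year, month, day):
    """Return the weekday name for a Gregorian date using the doomsday rule."""
    century, yy = divmod(year, 100)
    # Anchor day for the century (Sunday = 0); the pattern repeats every 400 years.
    anchor = (5 * (century % 4) + 2) % 7
    # Doomsday for this particular year.
    doomsday = (anchor + yy + yy // 4) % 7
    table = DOOMSDAY_LEAP if is_leap(year) else DOOMSDAY_COMMON
    # Offset from the month's known doomsday date to the requested day.
    return DAY_NAMES[(doomsday + day - table[month - 1]) % 7]


# Cross-check against the standard library (weekday() uses Monday = 0).
flight = datetime.date(2023, 9, 24)
print(day_of_week(2023, 9, 24), DAY_NAMES[(flight.weekday() + 1) % 7])
```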

Some Excel jockeys may find some of them useful. My hunch is that second semester computer science majors may find “inspiration” in this collection.

Stephen E Arnold, September 27, 2023

Llama Beans? Is That the LLM from Zuckbook?

August 4, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

We love open-source projects. Camelids that masquerade as such, not so much. According to The Register, “Meta Can Call Llama 2 Open Source as Much as It Likes, but That Doesn’t Mean It Is.” The company asserts its new large language model is open source because it is freely available for research and (some) commercial use. Are Zuckerberg and his team of Meta marketers fuzzy on the definition of open source? Writer Steven J. Vaughan-Nichols builds his case with quotes from several open source authorities. First up:

“As Erica Brescia, a managing director at RedPoint, the open source-friendly venture capital firm, asked: ‘Can someone please explain to me how Meta and Microsoft can justify calling Llama 2 open source if it doesn’t actually use an OSI [Open Source Initiative]-approved license or comply with the OSD [Open Source Definition]? Are they intentionally challenging the definition of OSS [Open Source Software]?'”

Maybe they are trying. After all, open source is good for business. And being open to crowd-sourced improvements does help the product. However, as the post continues:

“The devil is in the details when it comes to open source. And there, Meta, with its Llama 2 Community License Agreement, falls on its face. As The Register noted earlier, the community agreement forbids the use of Llama 2 to train other language models; and if the technology is used in an app or service with more than 700 million monthly users, a special license is required from Meta. It’s also not on the Open Source Initiative’s list of open source licenses.”

Next, we learn OSI‘s executive director Stefano Maffulli directly states Llama 2 does not meet his organization’s definition of open source. The write-up quotes him:

“While I’m happy that Meta is pushing the bar of available access to powerful AI systems, I’m concerned about the confusion by some who celebrate Llama 2 as being open source: if it were, it wouldn’t have any restrictions on commercial use (points 5 and 6 of the Open Source Definition). As it is, the terms Meta has applied only allow some commercial use. The keyword is some.”

Maffulli further clarifies Meta’s license specifically states Amazon, Google, Microsoft, Bytedance, Alibaba, and any startup that grows too much may not use the LLM. Such a restriction is a no-no in actual open source projects. Finally, Software Freedom Conservancy executive Karen Sandler observes:

“It looks like Meta is trying to push a license that has some trappings of an open source license but, in fact, has the opposite result. Additionally, the Acceptable Use Policy, which the license requires adherence to, lists prohibited behaviors that are very expansively written and could be very subjectively applied.”

Perhaps most egregious for Sandler is the absence of a public drafting or comment process for the Llama 2 license. Llamas are not particularly speedy creatures.

Cynthia Murrell, August 4, 2023

The Future of Open Source: Appropriation and Indifference

March 1, 2023

Big companies love open source software. There are zero or minimal license fees and other people fix the bugs. Not surprisingly the individuals who create open source software face some challenges.

The essay “Open Source Is Broken: The Sad Story of Denis Pushkarev (Core-js)” explains how one developer got the shaft. What’s the fix? Here’s part of the conclusion to the essay:

We often hear that open-source is great, good, ethical compared to close-source and all the typical woo-woo. But in the real world, this isn’t enough. You don’t live and pay bills by doing good things: you need to have some business skills. This doesn’t make you a bad person: if you don’t have enough motivation to work on your open-source project, it simply won’t last. You need to promote yourself and your open-source project.

I read this as saying, “More, better marketing.”

Why not suggest non-profit consortia able to fund certain projects? Why not suggest commercial enterprises embrace a kinder, gentler approach to code appropriation? Why not suggest a healthier balance between profit seeking and ethical behavior?

I know.

No one cares. Makes one proud to incorporate open source software into a commercial environment and charge people to use the work of an individual or team who wanted to do “good,” doesn’t it. Blindspot? I think it depends on whom one asks.

Stephen E Arnold, March 1, 2023

Goggle Points Out the ChatGPT Has a Core Neural Disorder: LSD or Spoiled Baloney?

February 16, 2023

I am an old-fashioned dinobaby. I have a reasonably good memory for great moments in search and retrieval. I recall when Danny Sullivan told me that search engine optimization improves relevance. In 2006, Prabhakar Raghavan on a conference call with a Managing Director of a so-so financial outfit explained that Yahoo had semantic technology that made Google’s pathetic effort look like outdated technology.

Hallucinating pizza courtesy of the super smart AI app Craiyon.com. The art, not the write up it accompanies, was created by smart software. The article is the work of the dinobaby, Stephen E Arnold. Looks like pizza to me. Close enough for horseshoes like so many zippy technologies.

Now that SEO and its spawn are scrambling to find a way to fiddle with increasingly weird methods for making software return results the search engine optimization crowd’s customers demand, Google’s head of search Prabhakar Raghavan is opining about the oh, so miserable work of Open AI and its now TikTok trend ChatGPT. May I remind you, gentle reader, that OpenAI availed itself of some Googley open source smart software and consulted with some Googlers as it ramped up to the tsunami of PR ripples? May I remind you that Microsoft said, “Yo, we’re putting some OpenAI goodies in PowerPoint.” The world rejoiced and Reddit plus Twitter kicked into rave mode.

Google responded with a nifty roll out in Paris. February is not April, but maybe it should have been in April 2023, not in les temps d’hiver?

I read with considerable amusement “Google Vice President Warns That AI Chatbots Are Hallucinating.” The write up states as rock solid George Washington I cannot tell a lie truth the following:

Speaking to German newspaper Welt am Sonntag, Raghavan warned that users may be delivered complete nonsense by chatbots, despite answers seeming coherent. “This type of artificial intelligence we’re talking about can sometimes lead to something we call hallucination,” Raghavan told Welt Am Sonntag. “This is then expressed in such a way that a machine delivers a convincing but completely fictitious answer.”

LSD or just the Google code relied upon? Was it the Googlers of whom OpenAI asked questions? Was it reading the gems of wisdom in Google patent documents? Was it coincidence?

I recall that Dr. Timnit Gebru and her co-authors of the Stochastic Parrot paper suggest that life on the Google island was not palm trees and friendly natives. Nope. Disagree with the Google and your future elsewhere awaits.

Now we have the hallucination issue. The implication is that smart software like Google-infused OpenAI is addled. It imagines things. It hallucinates. It is living in a fantasy land with bean bag chairs, Foosball tables, and memories of Odwalla juice.

I wrote about the after-the-fact yip yap from Google’s Chair Person of the Board. I mentioned the Father of the Darned Internet’s post ChatGPT PR blasts. Now we have the head of search’s observation about screwed up neural networks.

Yep, someone from Verity should know about flawed software. Yep, someone from Yahoo should be familiar with using PR to mask spectacular failure in search. Yep, someone from Google is definitely in a position to suggest that smart software may be somewhat unreliable because of fundamental flaws in the systems and methods implemented at Google and probably other outfits loving the Tensor T shirts.

Stephen E Arnold, February 16, 2023

Secrets Patterns Database

February 15, 2023

One of my researchers called my attention to “Secrets Patterns Database.” For those interested in finding “secrets”, you may want to take a look. The data and scripts are available on GitHub… for now. Among its features are:

- “Over 1600 regular expressions for detecting secrets, passwords, API keys, tokens, and more.

- Format agnostic. A Single format that supports secret detection tools, including Trufflehog and Gitleaks.

- Tested and reviewed Regular expressions.

- Categorized by confidence levels of each pattern.

- All regular expressions are tested against ReDos attacks.”
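How might such a pattern list be put to work? Here is a minimal sketch in Python, assuming a tiny hand-made rule set. The three patterns are my own illustrative approximations, not entries copied from the database, and the `SecretPattern` and `scan` names are hypothetical. Each pattern uses bounded quantifiers and no nested repetition, one common way to keep the ReDoS risk down.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class SecretPattern:
    name: str
    regex: re.Pattern
    confidence: str  # "high" or "low"


# Illustrative patterns only; these are assumptions, not the database's rules.
PATTERNS = [
    SecretPattern("AWS access key ID",
                  re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "high"),
    SecretPattern("GitHub personal access token",
                  re.compile(r"\bghp_[A-Za-z0-9]{36}\b"), "high"),
    SecretPattern("Generic password assignment",
                  re.compile(r"\bpassword\s*[:=]\s*['\"]?[^\s'\"]{8,64}",
                             re.IGNORECASE), "low"),
]

CONFIDENCE_RANK = {"low": 0, "high": 1}


def scan(text, min_confidence="low"):
    """Return (line number, pattern name, confidence) for each hit."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for pattern in PATTERNS:
            # Skip patterns below the requested confidence level.
            if CONFIDENCE_RANK[pattern.confidence] < CONFIDENCE_RANK[min_confidence]:
                continue
            if pattern.regex.search(line):
                findings.append((line_no, pattern.name, pattern.confidence))
    return findings


# The key below is a placeholder in the AWS key format, not a live credential.
SAMPLE = (
    "aws_key = AKIAIOSFODNN7EXAMPLE\n"
    'password = "hunter2hunter2"\n'
    "nothing to see here\n"
)

for finding in scan(SAMPLE):
    print(finding)
```

Filtering by confidence level lets a scan start with the patterns least likely to raise false alarms and widen the net later.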

Links to the author’s Web site and LinkedIn profile appear in the GitHub notes.

Stephen E Arnold, February 20, 2023

Another OSINT Blind Spot: Fake Reviews

November 9, 2022

Fraud comes in many flavors. Soft fraud is a mostly ignored branch of online underhandedness. Examples range from online merchants selling products which don’t work or are never shipped to phishing scams designed to obtain online credentials. One tributary to the Mississippi River of online misbehavior is the category “Fake Reviews.” These appear on many services; for example, Amazon. Some authors and publishers crank out suspicious reviews as a standard business practice. Those with some cash and a low level of energy just hire ghost promoters on Fiverr-like services.

I noted “Up to 30% of Online Reviews Are Fake and Most Consumers Can’t Tell the Difference.” The write up says:

The latest survey from Brand Rated shows nine out of ten consumers use reviews to help decide what to buy, where to eat and which doctor or dentist to see. Experts say that’s a problem because up to 30% of online reviews are fake. “My research shows that the review platforms are just saturated with fake reviews. Far more so than most people are aware of,” said [Kay] Dean [Founder of Fake Review Watch.]

Several questions, assuming the data are accurate:

- What incentives exist for bad actors to surf on this cloud of unknowing?

- How will smart software identify “fake content” and deal with it in a constructive way?

- How many of the individuals in this magical 30 percent will have difficulty making sense of conflicting technical or medical information?

Net net: Cyber crime (hard and soft) is entering a golden age. OSINT analysts, are you able to identify real and fake in a reliable way? Think carefully about your answer.

Stephen E Arnold, November 9, 2022

A Flashing Yellow Light for GitHub: Will Indifferent Drivers Notice?

November 9, 2022

I read “We’ve Filed a Lawsuit Challenging GitHub Copilot, an AI Product That Relies on Unprecedented Open-Source Software Piracy. Because AI Needs to Be Fair & Ethical for Everyone.” The write up reports:

… we’ve filed a class-action lawsuit in US federal court in San Francisco, CA on behalf of a proposed class of possibly millions of GitHub users. We are challenging the legality of GitHub Copilot (and a related product, OpenAI Codex, which powers Copilot). The suit has been filed against a set of defendants that includes GitHub, Microsoft (owner of GitHub), and OpenAI.

My view of GitHub is that it presents a number of challenges. On one hand, Microsoft is a pedal-to-the-metal commercial outfit and GitHub is an outfit with some roots in the open source “community” world. Many intelware solutions depend on open source software. In my experience, it is difficult to determine whether cyber security vendors or intelware vendors offer software free of open source code. I am not sure the top dogs in these firms know. Big commercial companies love open source software because these firms see a way to avoid the handcuffs proprietary code vendors use for lock in and lock down without a permission slip. These permissions can be purchased. This fee irritates many of the largest companies which are avid users of open source software.

A second challenge of GitHub is that it serves bad actors in two interesting ways. First, those eager to compromise networks, automate phishing attacks, and probe the soft underbelly of companies “protected” by somewhat Swiss Cheese like digital moats rely on open source tools. Second, the libraries for some code on GitHub are fiddled so that those who use libraries but never check too closely about their plumbing become super duper attack and compromise vectors. When I was in Romania, “Hooray for GitHub” was, in my opinion, one of the more popular youth hang out disco hits.

The write up adds a new twist: Allegedly inappropriate use of the intellectual property of open source software on GitHub. The write up states:

As far as we know, this is the first class-action case in the US challenging the training and output of AI systems. It will not be the last. AI systems are not exempt from the law. Those who create and operate these systems must remain accountable. If companies like Microsoft, GitHub, and OpenAI choose to disregard the law, they should not expect that we the public will sit still. AI needs to be fair & ethical for everyone.

This issue is an important one. The friction for this matter is that the US government is dependent on open source to some degree. Microsoft is a major US government contractor. A number of Federal agencies are providing money to companies engaged in strategically significant research and development of artificial intelligence.

The different parties to this issue may exert or apply influence.

Worth watching because Amazon- and Google-type companies want to be the Big Dog in smart software. Once the basic technology has been appropriated, will these types of companies pull the plug on open source support and go cloud commercial? Will attorneys benefit while the open source community suffers? Will this legal matter mark the start of a sharp decline in open source software?

Stephen E Arnold, November 9, 2022

OSINT Is Popular. Just Exercise Caution

November 2, 2022

Many have embraced open source intelligence as the solution to competitive intelligence, law enforcement investigations, and “real” journalists’ data gathering tasks.

In many situations, most of those disciplines can benefit from OSINT, as open source intelligence is called. However, as we work on my follow-up monograph to CyberOSINT and the Dark Web Notebook, we have identified some potential blind spots for OSINT enthusiasts.

I want to mention one example of what happens when clever technologists mesh hungry OSINT investigators with some online trickery.

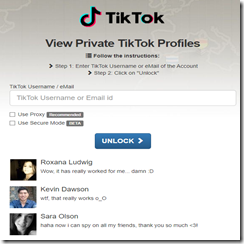

Navigate to privtik.com (78.142.29.185). At this site you will find:

But there is a catch, and a not too subtle one:

The site includes mandatory choices in order to access the “secret” TikTok profile.

How many OSINT investigators use this service? Not too many at this time. However, we have identified other, similar services. Many of these reside on what we call “ghost ISPs.” If you are not aware of these services, that’s not surprising. As the frenzy about the “value” of open source investigations increases, geotag spoofing, fake data, and scams will escalate. What happens if those doing research do not verify what’s provided and the behind the scenes data gathering?

That’s a good question and one that gets little attention in much OSINT training. If you want to see useful OSINT resources, check www.osintfix.com. Each click displays one of the OSINT resources we find interesting.

Stephen E Arnold, November 2, 2022