Rethinking Newspapers: The Dinobaby View

January 27, 2025

A blog post from an authentic dinobaby. He’s old; he’s in the sticks; and he is deeply skeptical.

I read “For Some Newspaper Workers, the New Year Began with Four Weeks of Unpaid Leave.” But the subtitle is the snappy statement:

The chain CNHI furloughed 46 staffers, or about 3% of its workforce. It’s likely a weather vane for industry trouble ahead.

The write up says, rather predictably, in my opinion:

the furloughs were precipitated by a very “soft fourth quarter,” usually the best of the year for newspapers, buoyed with ads for Christmas shopping.

No advertising and Amazon. A one-two punch.

The article concludes:

If you’re looking for a silver lining here, it may be that upstart investors continue to buy up newspapers as they come up for sale, still seeing a potential for profit in the business.

What a newspaper needs is a bit of innovation. Having worked at both a newspaper publishing company and a magazine publishing company, I dipped into some of my old lectures about online. I floated these ideas at various times in company talks and in my public lectures, including the one I received from ASIS in the late 1980s. Here’s a selected list:

- People and companies pay for must-have information. Create must-have content in digital form and then sell access to that content.

- Newspapers are intelligence gathering outfits. Focus on intelligence and sell reports to outfits known to purchase these reports.

- Convert to a foundation and get into the grant and fund-raising business.

- Online access won’t generate substantial revenue; therefore, use online to promote other information services.

- Each newspaper has a core competency. Convert that core competency into pay-to-attend conferences on specific subjects. Sell booth and exhibit space. Convert selling ads to selling a sponsored cocktail at the event.

- Move from advertising to digital coupons. These can be made available on a simple local-focus Web site. For people who want paper ads, sell a subscription to an envelope containing the coupons and possibly a small amount of information of interest to the area the newspaper serves.

Okay, how many of these ideas are in play today? Most of them, just not from newspaper outfits. That’s the problem. Innovation is tough to spark. Is it too late now? My research team has more ideas. Write benkent2020 at yahoo dot com.

Stephen E Arnold, January 27, 2025

How to Garner Attention from X.com: The Guardian Method Seems Infallible

January 24, 2025

Prepared by a still-alive dinobaby.

The Guardian has revealed its secret to getting social media attention from Twitter (now X). “‘Just the Start’: X’s New AI Software Driving Online Racist Abuse, Experts Warn” makes the process dead simple. Here are the steps:

- Publish a diatribe about the power of social media in general with specific references to the Twitter machine

- Use name calling to add some clickable bound phrases; for example, “online racism”, “fake images”, and “naked hate”

- Use loaded words to describe images; for example, an athlete “who is black, picking cotton while another shows that same player eating a banana surrounded by monkeys in a forest.”

Bingo. Instantly clickable.

The write up explains:

Callum Hood, the head of research at the Center for Countering Digital Hate (CCDH), said X had become a platform that incentivised and rewarded spreading hate through revenue sharing, and AI imagery made that even easier. “The thing that X has done, to a degree that no other mainstream platform has done, is to offer cash incentives to accounts to do this, so accounts on X are very deliberately posting the most naked hate and disinformation possible.”

This is a recipe for attention and clicks. Will the Guardian be able to convert the magnetism of the method into cash money?

Stephen E Arnold, January 24, 2025

The Brain Rot Thing: The 78 Wax Record Is Stuck Again

January 10, 2025

This is an official dinobaby post.

I read again about brain rot. I get it. Young kids play with a mobile phone. They get into social media. They watch TikTok. They discover the rich, rewarding world of Telegram online gambling. These folks don’t care about reading. Period. I get it.

But the Financial Times wants me to really get it. “Social Media, Brain Rot and the Slow Death of Reading” says:

Social media is designed to hijack our attention with stimulation and validation in a way that makes it hard for the technology of the page to compete.

This is news? Well, what about this statement:

The easy dopamine hit of social media can make reading feel more effortful by comparison. But the rewards are worth the extra effort: regular readers report higher wellbeing and life satisfaction, benefiting from improved sleep, focus, connection and creativity. While just six minutes of reading has been shown to reduce stress levels by two-thirds, deep reading offers additional cognitive rewards of critical thinking, empathy and self-reflection.

Okay, now tell that to the people in line at the grocery store or the kids in a high school class. Guess what? The joy of reading is not part of the warp and woof of 2025 life.

The news flash is that traditional media like the Financial Times long for the time when everyone read. Excuse me. When was that time? People read in school so they can get out of school and not read. Books still sell, but the avid readers are becoming dinobabies. Most of the dinobabies I know don’t read too much. My wife’s bridge club reads popular novels, but nonfiction is a nonstarter.

What does the FT want people to do? Here’s a clue:

Even if the TikTok ban goes ahead in the US, other platforms will pop up to replace it. So in 2025, why not replace the phone on your bedside table with a book? Just an hour a day clawed back from screen time adds up to about a book a week, placing you among an elite top one per cent of readers. Melville (and a Hula-Hoop) are optional.

Lamenting and recommending is not going to change what the flows of electronic information have done. There are more insidious effects racing down the information highway. Those who will be happiest will be those who live in ignorance. People with some knowledge will be deeply unhappy.

Will the FT want dinosaurs to roam again? Sure. Will the FT write about them? Of course. Will the impassioned words change what’s happened and will happen? Nope. Get over it, please. You may as well long for the days when Madame Tussaud’s Wax Museum and you were part of the same company.

Stephen E Arnold, January 10, 2025

Be Secure Like a Journalist

January 9, 2025

This is an official dinobaby post.

If you want to be secure like a journalist, Freedom.press has a how-to for you. The write up “The 2025 Journalist’s Digital Security Checklist” provides text combined with a sort of interactive design. For example, if you want to know more about an item on a checklist, just click the plus sign and the recommendations appear.

There are several sections in the document. Each addresses a specific security vector or issue. These are:

- Assess your risk

- Set up your mobile to be “secure”

- Protect your mobile from unwanted access

- Secure your communication channels

- Guard your documents from harm

- Manage your online profile

- Protect your research whilst browsing

- Avoid getting hacked

- Set up secure tip lines.

Most of the suggestions are useful. However, I would strongly recommend that any mobile phone user download this presentation from the December 2024 Chaos Computer Club meeting held after Christmas. There are some other suggestions which may be of interest to journalists, but these regard specific software such as Google’s Chrome browser, Apple’s wonderful iCloud, and Microsoft’s oh-so-secure operating system.

The best way for a journalist to be secure is to be a “ghost.” That implies some type of zero profile identity, burner phones, and other specific operational security methods. These, however, are likely to land a “real” journalist in hot water either with an employer or an outfit like a professional organization. A clever journalist would gain access to sock puppet control software in order to manage a number of false personas at one time. Plus, there are old chestnuts like certain Dark Web services. Are these types of procedures truly secure?

In my experience, the only secure computing device is one that is unplugged in a locked room. The only secure information is that which one knows and has not written down or shared with anyone. Every time I meet a journalist unaware of specialized tools and services for law enforcement or intelligence professionals I know I can make that person squirm if I describe one of the hundreds of services about which journalists know nothing.

For starters, watch the CCC video. Another tip: Choose the country in which certain information is published with your name identifying you as an author carefully. Very carefully.

Stephen E Arnold, January 9, 2025

Grousing about Smart Software: Yeah, That Will Work

December 6, 2024

This is the work of a dinobaby. Smart software helps me with art, but the actual writing? Just me and my keyboard.

I read “Writers Condemn Startup’s Plans to Publish 8,000 Books Next Year Using AI.” The innovator is an outfit called Spines. Cute, book spines and not mixed up with spiny mice or spiny rats.

The write up reports:

Spines – which secured $16m in a recent funding round – says that authors will retain 100% of their royalties. Co-founder Yehuda Niv, who previously ran a publisher and publishing services business in Israel, claimed that the company “isn’t self-publishing” or a vanity publisher but a “publishing platform”.

A platform, not a publisher. The difference is important because venture types don’t pump cash into traditional publishing companies in my experience.

The article identified another key differentiator for Spines:

Spines says it will reduce the time it takes to publish a book to two to three weeks.

When publishers with whom I worked talked about time, the units were months. In one case, it was more than a year. When I was writing books, the subject matter changed on a slightly different time scale. Traditional publishers do not zip along with the snappiness of a two-year-old French bulldog.

Spines is quoted in the write up as saying:

[We are] levelling the playing field for any person who aspires to be an author to get published within less than three weeks and at a fraction of the cost. Our goal is to help one million authors to publish their books using technology….”

Yep, technology. Is that a core competency of big time publishers?

Several observations from my dinobaby-friendly lair:

- If Spines works — that is, makes lots of money — a traditional publisher will probably buy the company and sue any entity which impinges on its “original” ideas.

- Costs for instant publishing on Amazon remain more attractive. The fees are based on delivery of digital content and royalties assessed. Spines may have to spend money to find writers able to pay the company to do the cover, set up, design, etc.

- Connecting agentic AI into a Spines-type service may be interesting to some.

Stephen E Arnold, December 6, 2024

Directories Have Value

November 29, 2024

Why would one build an online directory—to create a helpful reference? Or for self aggrandizement? Maybe both. HackerNoon shares a post by developer Alexander Isora, “Here’s Why Owning a Directory = Owning a Free Infinite Marketing Channel.”

First, he explains why users are drawn to a quality directory on a particular topic: because humans are better than Google’s algorithm at determining relevant content. No argument here. He uses his own directory of Stripe alternatives as an example:

“Why my directory is better than any of the top pages from Google? Because in the SERP [Search Engine Results Page], you will only see articles written by SEO experts. They have no idea about billing systems. They never managed a SaaS. Their set of links is 15 random items from Crunchbase or Product Hunt. Their article has near 0 value for the reader because the only purpose of the article is to bring traffic to the company’s blog. What about mine? I tried a bunch of Stripe alternatives myself. Not just signed up, but earned thousands of real cash through them. I also read 100s of tweets about the experiences of others. I’m an expert now. I can even recognize good ones without trying them. The set of items I published is WAY better than any of the SEO-optimized articles you will ever find on Google. That is the value of a directory.”

Okay, so that is why others would want a subject-matter expert to create a directory. But what is in it for the creator? Why, traffic, of course! A good directory draws eyeballs to one’s own products and services, the post asserts, or one can sell ads for a passive income. One could even sell a directory (to whom?) or turn it into its own SaaS if it is truly popular.

Perhaps ironically, Isora’s next step is to optimize his directories for search engines. Sounds like a plan.

Cynthia Murrell, November 29, 2024

More Googley Human Resource Goodness

November 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The New York Post reported that a Googler has departed. “Google News Executive Shailesh Prakash Resigns As Tensions with Publishers Mount: Report” states:

Shailesh Prakash had served as a vice president and general manager for Google News. A source confirmed that he is no longer with the company… The circumstances behind Prakash’s resignation were not immediately clear. Google declined to comment.

Google tapped a professional who allegedly rode in the Bezos bulldozer when the world’s second or third richest man acquired the Washington Post. (How has that been going? Yeah.)

Thanks, MidJourney. Good enough.

Google has been cheerfully indexing content and selling advertising for decades. After a number of years of talking and allegedly providing some support to outfits collecting, massaging, and making “real” news available, the Google is facing some headwinds.

The article reports:

The Big Tech giant rankled online publishers last May after it introduced a feature called “AI Overviews” – which places an auto-generated summary at the top of its search results while burying links to other sites. News Media Alliance, a nonprofit that represents more than 2,200 publishers, including The Post, said the feature would be “catastrophic to our traffic” and has called on the feds to intervene.

News flash from rural Kentucky: The good old days of newspaper publishing are unlikely to make a comeback. What’s the evidence for this statement? Video and outfits like Telegram and WhatsApp deliver content to cohorts who don’t think too much about a print anything.

The article pointed out:

Last month, The Post exclusively reported on emails that revealed how Google leveraged its access to the Office of the US Trade Representative as it sought to undermine overseas regulations — including Canada’s Online News Act, which required Google to pay for the right to display news content.

You can read that report “Google Emails with US Trade Reps Reveal Cozy Ties As Tech Giant Pushed to Hijack Policy” if you have time.

Let’s think about why a member of Google leadership like Shailesh Prakash would bail out. Among the options are:

- He wanted to spend more time with his family

- Another outfit wanted to hire him to manage something in the world of publishing

- He failed in making publishers happy.

The larger question is, “Why would Google think that one fellow could make a multi-decade problem go away?” The fact that I can ask this question reveals how Google’s consulting-infused leaders think about an entire business sector. It also provides some insight into the confidence of a professional like Mr. Prakash.

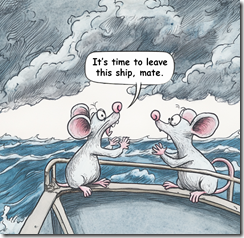

What flees sinking ships? Certainly not the lawyers that Google will throw at this “problem.” Google has money and that may be enough to buy time and perhaps prevail. If there aren’t any publishers grousing, the problem gets resolved. Efficient.

Stephen E Arnold, November 22, 2024

AI Help for Struggling Journalists. Absolutely

October 10, 2024

Writers, artists, and other creatives have labeled AI the doom of their industries and livelihoods. Generative AI Newsroom explains one way that AI could be helpful to writers: “How Teams of AI Agents Could Provide Valuable Leads For Investigative Data Journalism.” Investigative and data journalism requires the teamwork of many individuals, and that teamwork is what produces impactful stories.

Media outlets have experimented with adding generative AI to journalism, and the results were not successful: the information was inaccurate, and the tools required very specific instructions. While OpenAI’s ChatGPT chatbot seems intuitive with its Q and A interface, investigative journalism requires a more robust AI.

Investigative journalism and other writing vocations require teamwork, so AI built for those jobs could benefit from teamwork too. The Generative AI Newsroom is working on an AI that would assist journalists:

“Specifically, we developed a prototype system that, when provided with a dataset and a description of its contents, generates a “tip sheet” — a list of newsworthy observations that may inspire further journalistic explorations of datasets. Behind the scenes, this system employs three AI agents, emulating the roles of a data analyst, an investigative reporter, and a data editor. To carry out our agentic workflow, we utilized GPT-4-turbo via OpenAI’s Assistants API, which allows the model to iteratively execute code and interact with the results of data analyses.”

The three AI agents split the work the way a human analyst, reporter, and editor would:

“In our setup, the analyst is made responsible for turning journalistic questions into quantitative analyses. It conducts the analysis, interprets the results, and feeds these insights into the broader process. The reporter, meanwhile, generates the questions, pushes the analyst with follow-ups to guide the process towards something newsworthy, and distills the key findings into something meaningful. The editor, then, mainly steps in as the quality control, ensuring the integrity of the work, bulletproofing the analysis, and pushing the outputs towards factual accuracy.”
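To make the division of labor concrete, here is a toy sketch in Python. It is hypothetical: each “agent” is a plain function standing in for an LLM call, the function names and the `min_rows` threshold are invented for illustration, and nothing here touches OpenAI’s Assistants API or reflects the Generative AI Newsroom’s actual code.

```python
from statistics import mean


def reporter_questions(columns):
    # Hypothetical "reporter": pose one question per numeric column.
    return {col: f"What is the average {col}?" for col in columns}


def analyst(rows, column):
    # Hypothetical "analyst": turn a question into a number, skipping missing values.
    values = [row[column] for row in rows if row.get(column) is not None]
    return {"n": len(values), "value": mean(values) if values else None}


def editor_approves(finding, min_rows=3):
    # Hypothetical "editor": quality control that rejects findings resting on too few rows.
    return finding["value"] is not None and finding["n"] >= min_rows


def tip_sheet(rows, columns, min_rows=3):
    # Run reporter -> analyst -> editor and keep only the findings the editor passes.
    tips = []
    for column, question in reporter_questions(columns).items():
        finding = analyst(rows, column)
        if editor_approves(finding, min_rows):
            tips.append(f"{question} {finding['value']:.1f} (n={finding['n']})")
    return tips


if __name__ == "__main__":
    # Made-up sample rows, purely for illustration.
    sample = [
        {"response_minutes": 12, "complaints": None},
        {"response_minutes": 30, "complaints": 5},
        {"response_minutes": 18, "complaints": None},
    ]
    for tip in tip_sheet(sample, ["response_minutes", "complaints"]):
        print(tip)
```

On the made-up sample, only the `response_minutes` finding survives; the `complaints` finding rests on one row, so the toy editor spikes it. The real system lets the model write and run its own analysis code, which a sketch like this does not attempt.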

The AI is still in its testing phase, but it sounds like a viable way to bring AI into media outlets. While humans are an integral part of the process, what happens when the AI becomes better at storytelling than humans? It is possible. Where does the human role come in then?

Whitney Grace, October 10, 2024

Takedown Notices May Slightly Boost Sales of Content

August 13, 2024

It looks like takedown notices might help sales of legitimate books. A little bit. TorrentFreak shares the findings from a study by the University of Warsaw, Poland, in “Taking Pirated Copies Offline Can Benefit Book Sales, Research Finds.” Writer Ernesto Van der Sar explains:

“This year alone, Google has processed hundreds of millions of takedown requests on behalf of publishers, at a frequency we have never seen before. The same publishers also target the pirate sites and their hosting providers directly, hoping to achieve results. Thus far, little is known about the effectiveness of these measures. In theory, takedowns are supposed to lead to limited availability of pirate sources and a subsequent increase in legitimate sales. But does it really work that way? To find out more, researchers from the University of Warsaw, Poland, set up a field experiment. They reached out to several major publishers and partnered with an anti-piracy outfit, to test whether takedown efforts have a measurable effect on legitimate book sales.”

See the write-up for the team’s methodology. There is a caveat: The study included only print books, because Poland’s e-book market is too small to be statistically reliable. This is an important detail, since digital e-books are a more direct swap for pirated copies found online. Even so, the researchers found takedown notices produced a slight bump in print-book sales. Research assistants confirmed they could find fewer pirated copies, and the ones they did find were harder to unearth. The write-up notes more research is needed before any conclusions can be drawn.

How hard will publishers tug at this thread? By this logic, if one closes libraries that will help book sales, too. Eliminating review copies may cause some sales. Why not publish books and keep them secret until Amazon provides a link? So many money-grubbing possibilities, and all it would cost is an educated public.

Cynthia Murrell, August 13, 2024

Anarchist Content Links: Zines Live

July 19, 2024

![Dinobaby icon](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/dinosaur30a_thumb_thumb_thumb_thumb_1_thumb_thumb.gif) This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

One of my team called my attention to “Library. It’s Going Down Reading Library.” I know I am not clued into the lingo of anarchists. As a result, the Library … Library rhetoric just put me on a slow-blinking yellow alert or, emulating the linguistic style of It’s Going Down, “Alert. It’s Slow Blinking Alert.”

Syntactical musings behind me, the list includes links to publications focused on fostering even more chaos than one encounters at a Costco or a Southwest Airlines boarding gate. The content of these publications is thought provoking to some and others may be reassured that tearing down may be more interesting than building up.

The publications are grouped in categories. Let me list a handful:

- Antifascism

- Anti-Politics

- Anti-Prison, Anti-Police, and Counter-Insurgency.

Personally I would have presented antifascism as anti-fascism to be consistent with the other antis, but that’s what anarchy suggests, doesn’t it?

When one clicks on a category, the viewer is presented with a curated list of “going down” related content. Here’s a listing of what’s on offer for the topic AI has made great again, Automation:

Livewire: Against Automation, Against UBI, Against Capital

If one wants more “controversial” content, one can visit these links:

Each of these has the “zine” vibe and provides useful information. I noted the lingo and the names of the authors. It is often helpful to have an entity with which one can associate certain interesting topics.

My take on these modest collections: Some folks are quite agitated and want to make life more challenging than an 80-year-old dinobaby finds it. (But zines remind me of weird newsprint or photocopied booklets in the 1970s or so.) If the content of these publications is accurate, we have not “zine” anything yet.

Stephen E Arnold, July 19, 2024