Using AI But For Avoiding Dumb Stuff One Hopes

May 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an interesting essay called “How I Use AI To Help With TechDirt (And, No, It’s Not Writing Articles).” The main point of the write up is that artificial intelligence or smart software (my preferred phrase) can be useful for certain use cases. The article states:

I think the best use of AI is in making people better at their jobs. So I thought I would describe one way in which I’ve been using AI. And, no, it’s not to write articles. It’s basically to help me brainstorm, critique my articles, and make suggestions on how to improve them.

Thanks, MSFT Copilot. Bad grammar and an incorrect use of the apostrophe. Also, I was much dumber looking in the 9th grade. But good enough, the motto of some big software outfits, right?

The idea is that an AI system can function as a partner, research assistant, editor, and interlocutor. That sounds like what Microsoft calls a “copilot.” The article continues:

I initially couldn’t think of anything to ask the AI, so I asked people in Lex’s Discord how they used it. One user sent back a “scorecard” that he had created, which he asked Lex to use to review everything he wrote.

The use case is that smart software functions like Miss Dalton, my English composition teacher at Woodruff High School in 1958. She was a firm believer in diagramming sentences, following the precepts of the Tressler & Christ textbook, and arcane rules such as capitalizing the first word following a colon (correctly used, of course).

I think her approach was intended to force students in 1958 to perform these word and text manipulations automatically. Then when we trooped to the library every month to do “research” on a topic she assigned, we could focus on the content, the logic, and the structural presentation of the information. If you attend one of my lectures, you can see that I am struggling to live up to her ideals.

However, when I plugged in my comments about Telegram as a platform tailored to obfuscated communications, the delivery of malware and X-rated content, and enforcing a myth that the entity known as Mr. Durov does not cooperate with certain entities to filter content, AI systems failed miserably. Not only were the systems lacking content, one — Microsoft Copilot, to be specific — had no functional content of collapse. Two other systems balked at the idea of delivering CSAM within a Group’s Channel devoted to paying customers of what is either illegal or extremely unpleasant content.

Several observations are warranted:

- For certain types of content, the systems lack sufficient data to know what the heck I am talking about

- For illegal activities, the systems are either pretending to be really stupid or the developers have added STOP words to the filters to make darned sure no improper output would be presented

- The systems are not up-to-date; for example, Mr. Durov was interviewed by Tucker Carlson a week before Mr. Durov blocked Ukraine Telegram Groups’ content to Telegram users in Russia.

Is it, therefore, reasonable to depend on a smart software system to provide input on a “newish” topic? Is it possible the smart software systems are fiddled by the developers so that no useful information is delivered to the user (free or paying)?

Net net: I am delighted people are finding smart software useful. For my lectures to law enforcement officers and cyber investigators, smart software is as of May 1, 2024, not ready for prime time. My concern is that some individuals may not discern the problems with the outputs. Writing about the law and its interpretation is an area about which I am not qualified to comment. But perhaps legal content is different from garden variety criminal operations. No, I won’t ask, “What’s criminal?” I would rather rely on what Miss Dalton taught in 1958. Why? I am a dinobaby and deeply skeptical of probabilistic-based systems which do not incorporate Kolmogorov-Arnold methods. Hey, that’s my relative’s approach.

Stephen E Arnold, May 1, 2024

NSO Pegasus: No Longer Flying Below the Radar

April 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “AP Exclusive: Polish Opposition Senator Hacked with Spyware.” I remain fearful of quoting the AP or Associated Press. I think it is a good business move to have an 89 year old terrified of an American “institution,” don’t you? I think I am okay if I tell you the AP recycled a report from the University of Toronto’s Citizen Lab. Once again, the researchers have documented the use of what I call “intelware” by a nation state. The AP and other “real” news outfits prefer the term “spyware.” I think it has more sizzle, but I am going to put NSO Group’s mobile phone system and method in the category of intelware. The reason is that specialized software like Pegasus gathers information for a nation’s intelligence entities. Well, that’s the theory. The companies producing these platforms and tools want to answer such questions as “Who is going to undermine our interests?” or “What’s the next kinetic action directed at our facilities?” or “Who is involved in money laundering, human trafficking, or arms deals?”

Thanks, MSFT Copilot. Cutting down the cycles for free art, are you?

The problem is that specialized software is no longer secret. The Citizen Lab and the AP have been diligent in explaining how some of the tools work and what type of information can be gathered. My personal view is that information about these tools has been converted into college programming courses, open source software tools, and headline grabbing articles. I know from personal experience that most people do not have a clue how data from an iPhone can be exfiltrated, cross correlated, and used to track down those who would violate the laws of a nation state. But, as the saying goes, information wants to be free. Okay, it’s free. How about that?

The write up contains an interesting statement. I want to note that I am not plagiarizing, undermining advertising sales, or choking off subscriptions. I am offering the information as a peg on which to hang some observations. Here’s the quote:

“My heart sinks with each case we find,” Scott-Railton [a senior researcher at UT’s Citizen Lab] added. “This seems to be confirming our worst fear: Even when used in a democracy, this kind of spyware has an almost immutable abuse potential.”

Okay, we have malware, a command-and-control system, logs, and a variety of delivery mechanisms.

I am baffled because malware is used by both good and bad actors. Exactly what do the University of Toronto and the AP want to happen? The reality is that once secret information is leaked, it becomes the Teflon for rapidly diffusing applications. Does writing about what I view as an “old” story change what’s happening with potent systems and methods? Will government officials join in a kumbaya moment and force the systems and methods to fall into disuse? Endless recycling of an instrumental action by this country or that agency gets us where?

In my opinion, the sensationalizing of behavior does not correlate with responsible use of technologies. I think the Pegasus story is a search for headlines or recognition for saying, “Look what we found. Country X is a problem!” Spare me. Change must occur within institutions. Those engaged in the use of intelware and related technologies are aware of issues. These are, in my experience, not ignored. Improper behavior is rampant in today’s datasphere.

Standing on the sidelines and yelling at a player who let the team down does what exactly? Perhaps a more constructive approach can be identified and offered as a solution beyond Pegasus again? Broken record. I know you are “just doing your job.” Fine but is there a new tune to play?

Stephen E Arnold, April 29, 2024

Fake Books: Will AI Cause Harm or Do Good?

April 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read what I call a “howl” from a person who cares about “good” books. Now “good” is a tricky term to define. It is similar to “quality” or “love.” I am not going to try to define any of these terms. Instead I want to look at one example of smart software creating a problem for humans who create books. Then I want to focus attention on Amazon, the online bookstore. I think about two-thirds of American shoppers have some interaction with Amazon. That percentage is probably low if we narrow to top earners in the US. I want to wrap up with a reminder to those who think about smart software that the diffusion of technology chugs along and then — bang! — phase change. Spoiler: That’s what AI is doing now, and the pace is accelerating.

The Copilot image illustrates how smart software spreads. Cleaning up is a bit of a chore. The table cloth and the meeting may be ruined. But that’s progress of sorts, isn’t it?

The point of departure is an essay cum “real” news write up about fake books titled “Amazon Is Filled with Garbage Ebooks. Here’s How They Get Made.”

These books are generated by smart software and Fiverr-type labor. Dump the content in a word processor, slap on a title, and publish the work on Amazon. I write my books by hand, and I try to label that which I write or pay people to write as “the work of a dumb dinobaby.” Other authors do not follow my practice. Let many flowers bloom.

The write up states:

It’s so difficult for most authors to make a living from their writing that we sometimes lose track of how much money there is to be made from books, if only we could save costs on the laborious, time-consuming process of writing them. The internet, though, has always been a safe harbor for those with plans to innovate that pesky writing part out of the actual book publishing.

This passage explains exactly why fake books are created. The fact of fake books makes clear that AI technology is diffusing; that is, smart software is turning up in places and ways that the math people fiddling the numerical recipes or the engineers hooking up thousands of computing units never envisioned. Why would they? How many mathy types are able to remember their mother’s birthday?

The path for the “fake book” is easy money. The objective is not excellence, sophisticated demonstration of knowledge, or the mindlessness of writing a book “because.” The angst in the cited essay comes from the side of the coin that wants books created the old-fashioned way. Yeah, I get it. But today it is clear that the hand crafted books are going to face some challenges in the marketplace. I anticipate that “quality” fake books will convert the “real” book to the equivalent of a cuneiform tablet. Don’t like this? I am a dinobaby, and I call the trajectory as my experience and research warrants.

Now what about buying fake books on Amazon? Anyone can get an ISBN, but for Amazon, no ISBN is (based on our tests) no big deal. Amazon has zero incentive to block fake books. If someone wants a hard copy of a fake book, let Amazon’s own instant print service produce the copy. Amazon is set up to generate revenue, not be a grumpy grandmother forcing grandchildren to pick up after themselves. Amazon could invest to squelch fraudulent or suspect behaviors. But here’s a representative Amazon word salad explanation cited in the “Garbage Ebooks” essay:

In a statement, Amazon spokesperson Ashley Vanicek said, “We aim to provide the best possible shopping, reading, and publishing experience, and we are constantly evaluating developments that impact that experience, which includes the rapid evolution and expansion of generative AI tools.”

Yep, I suggest not holding one’s breath until Amazon spends money to address a pervasive issue within its service array.

Now the third topic: Slowly, slowly, then the frog dies. Smart software in one form or another has been around a half century or more. I can date smart software in the policeware / intelware sector to the late 1990s when commercial services were no longer subject to stealth operation or “don’t tell” business practices. For the ChatGPT-type services, NLP has been around longer, but it did not work largely due to computational costs and the hit-and-miss approaches of different research groups. Inference, DR-LINK, or one of the other notable early commercial attempts, anyone?

Okay, now the frog is dead, and everyone knows it. Better yet, navigate to any open source repository or respond to one of those posts on Pinboard or listings in Product Hunt, and you are good to go. Anthropic has released a cook book, just do-it-yourself ideas for building a start up with Anthropic tools. And if you write Microsoft Excel or Word macros for a living, you are already on the money road.

I am not sure Microsoft’s AI services work particularly well, but the stuff is everywhere. Microsoft is spending big to make sure it is not left out of any AI lunches in Dubai. I won’t describe the impact of the Manhattan chatbot. That’s a hoot. (We cover this slip up in the AItoAI video pod my son and I do once each month. You can find that information about NYC at this link.)

Net net: The tipping point has been reached. AI is tumbling and its impact will be continuous — at least for a while. And books? Sure, great books like those from Twitter luminaries will sell. To those without a self-promotion rail gun, cloudy days ahead. In fact, essays like “Garbage Ebooks” will be cranked out by smart software. Most people will be none the wiser. We are not facing a dead Internet; we are facing the death of high-value information. When data are synthetic, what’s original thinking got to do with making money?

Stephen E Arnold, April 24, 2024

The Evolution of Study Notes: From Lazy to Downright Slothful

April 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Study guides, Cliff Notes, movie versions, comic books, and bribing elder siblings or past students for their old homework and class notes were how kids used to work their way through classes. Then came the Internet, and over the years innovative people have perfected study guides. Some have even made successful businesses from study guides for literature, science, math, foreign language, writing, history, and more.

The quality of these study guides ranges from poor to fantastic. PinkMonkey.com is one of the average study guide websites. It has some free book guides while others are behind a paywall. There are also educational tips for different grades and advice for college applications. The information is a little dated, but when it is combined with other educational and homework help websites it still has its uses.

PinkMonkey.com describes itself as:

“…a "G" rated study resource for junior high, high school, college students, teachers and home schoolers. What does PinkMonkey offer you? The World’s largest library of free online Literature Summaries, with over 460 Study Guides / Book Notes / Chapter Summaries online currently, and so much more. No more trips to the book store; no more fruitless searching for a booknote that no one ever has in stock! You’ll find it all here, online 24/7!”

YouTube, TikTok, and other platforms are also 24/7. They’re also being powered more and more by AI. It won’t be long before AI is condensing these guides and turning them into consumable videos. There are already channels that make study guides, but homework still requires more than an AI answer.

ChatGPT and other generative AI algorithms are getting smarter by being trained on sets that pull their data from the Internet. These datasets include books, videos, and more. In the future, students will be relying on study guides in video format. The question to ask is how will they look? Will they summarize an entire book in fifteen seconds, take it chapter by chapter, or make movies powered by AI?

Whitey Grace, April 22, 2024

Publishers Not Thrilled with Internet Archive

April 15, 2024

So you are saving the library of an island? So what?

The non-profit Internet Archive (IA) preserves digital history. It also archives a wealth of digital media, including a large number of books, for the public to freely access. Certain major publishers are trying to stop the organization from sharing their books. These firms just scored a win in a New York federal court. However, the IA is not giving up. In its defense, the organization has pointed to the opinions of authors and copyright scholars. Now, Hachette, HarperCollins, John Wiley, and Penguin Random House counter with their own roster of experts. TorrentFreak reports, “Publishers Secure Widespread Support in Landmark Copyright Battle with Internet Archive.” Journalist Ernesto Van der Sar writes:

“The importance of this legal battle is illustrated by the large number of amicus briefs that are filed by third parties. Previously, IA received support from copyright scholars and the Authors Alliance, among others. A few days ago, another round of amicus came in at the Court of Appeals, this time to back the publishers who filed their reply last week. In more than a handful of filings, prominent individuals and organizations urge the Appeals Court not to reverse the district court ruling, arguing that this would severely hamper the interests of copyright holders. The briefs include positions from industry groups such as the MPA, RIAA, IFPI, Copyright Alliance, the Authors Guild, various writers unions, and many others. Legal scholars, professors, and former government officials, also chimed in.”

See the article for more details on those chimes. A couple points to highlight: First, AI is a part of this because of course it is. Several trade groups argue IA makes high-quality texts too readily available for LLMs to train upon, posing an “artificial intelligence” threat. Also of interest are the opinions that differentiate this case from the Google Books precedent. We learn:

“[Scholars of relevant laws] stress that IA’s practice should not be seen as ‘transformative’ fair use, arguing that the library offers a ‘substitution’ for books that are legally offered by the publishers. This sets the case apart from current legal precedents including the Google Books case, where Google’s mass use of copyrighted books was deemed fair use. ‘IA’s exploitation of copyrighted books is thus the polar opposite of the copying that was found to be transformative in Google Books and HathiTrust. IA offers no “utility-expanding” searchable database to its subscribers.’”

Ah, the devilish details. Will these amicus-rich publishers prevail, or will the decision be overturned on IA’s appeal?

Cynthia Murrell, April 15, 2024

The University of Illinois: Unintentional Irony

March 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I admit it. I was in the PhD program at the University of Illinois at Champaign-Urbana (aka Chambana). There was nothing like watching a storm build from the upper floors of now departed FAR. I spotted a university news release titled “Americans Struggle to Distinguish Factual Claims from Opinions Amid Partisan Bias.” From my point of view, the paper presents research that says that half of those in the sample cannot distinguish truth from fiction. That’s a fact easily verified by visiting a local chain store, purchasing a product, and asking the clerk to provide the change in a specific way; for example, “May I have two fives and five dimes, please?” Putting data behind personal experience is a time-honored chore in the groves of academe.

Discerning people can determine “real” from “original fakes.” Well, only half the people can it seems. The problem is defining what’s true and what’s false. Thanks, MSFT Copilot. Keep working on your security. Those breaches are “real.” Half the time is close enough for horseshoes.

Here’s a quote from the write up I noted:

“How can you have productive discourse about issues if you’re not only disagreeing on a basic set of facts, but you’re also disagreeing on the more fundamental nature of what a fact itself is?” — Matthew Mettler, a U. of I. graduate student and co-author with Jeffery J. Mondak, a professor of political science and the James M. Benson Chair in Public Issues and Civic Leadership at Illinois.

The news release about Mettler’s and Mondak’s research contains this statement:

But what we found is that, even before we get to the stage of labeling something misinformation, people often have trouble discerning the difference between statements of fact and opinion…. “What we’re showing here is that people have trouble distinguishing factual claims from opinion, and if we don’t have this shared sense of reality, then standard journalistic fact-checking – which is more curative than preventative – is not going to be a productive way of defanging misinformation,” Mondak said. “How can you have productive discourse about issues if you’re not only disagreeing on a basic set of facts, but you’re also disagreeing on the more fundamental nature of what a fact itself is?”

But the research suggests that highly educated people cannot differentiate made up data from non-weaponized information. What struck me is that Harvard’s Misinformation Review published this U of I research that provides a road map to fooling peers and publishers. Harvard University, like Stanford University, has found that certain big-time scholars violate academic protocols.

I am delighted that the U of I research is getting published. My concern is that the Misinformation Review does not find my laughing at its Misinformation Review to their liking. Harvard illustrates that academic transgressions cannot be identified by half of those exposed to the confections of up-market academics.

Should Messrs Mettler and Mondak have published their research in another journal? That’s a good question, but I am no longer convinced that professional publications have more credibility than the outputs of a content farm. Such is the erosion of once-valued norms. Another peril of thumb typing is present.

Stephen E Arnold, March 22, 2024

AI Bubble Gum Cards

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

A publication for electrical engineers has created a new mechanism for making AI into a collectible. Navigate to “The AI apocalypse: A Scorecard.” Scroll down to the part of the post which looks like the gems from the 1950s:

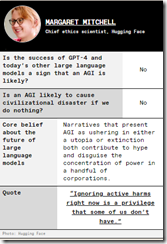

The idea is to pick 22 experts and gather their big ideas about AI’s potential to destroy humanity. Here’s one example of an IEEE bubble gum card:

© by the estimable IEEE.

The information on the cards is eclectic. It is clear that some people think smart software will kill me and you. Others are not worried.

My thought is that IEEE should expand upon this concept; for example, here are some bubble gum card ideas:

- Do the NFT play? These might be easier to sell than IEEE memberships and subscriptions to the magazine

- Offer actual, fungible packs of trading cards with throw-back bubble gum

- Create an AI movie about AI experts with opposing ideas doing battle in a video game type world. Zap. You lose, you doubter.

But the old-fashioned approach to selling trading cards to grade school kids won’t work. First, there are very few corner stores near schools in many cities. Second, a special interest group will agitate to block the sale of cards about AI because the inclusion of chewing gum will damage children’s teeth. And, third, kids today want TikToks, at least until the service is banned by a fast-acting group of elected officials.

I think the IEEE will go in a different direction; for example, micro USBs with AI images and source code on them. Or, the IEEE should just advance to the 21st-century and start producing short-form AI videos.

The IEEE does have an opportunity. AI collectibles.

Stephen E Arnold, March 13, 2024

Thomson Reuters Is Going to Do AI: Run Faster

March 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Thomson Reuters, a mostly low profile outfit, is going to do AI. Why’s this interesting to law schools, lawyers, accountants, special librarians, libraries, and others who “pay” for “real” information? There are three reasons:

- Money

- Markets

- Mania.

Thomson Reuters has been a tech talker for decades. The company created skunk works. It hired quirky MIT wizards. It bought businesses with information technology. But underneath the professional publishing clear coat, the firm is the creation of Lord Thomson of Fleet. The firm has a track record of being able to turn a profit on its $7 billion in revenues. But the future, if news reports are accurate, is artificial intelligence or smart software.

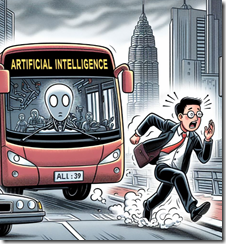

The young publishing executive says, “I have got to get ahead of this AI bus before it runs over me.” Thanks, MSFT Copilot. Working on security today?

But wait! What makes Thomson Reuters different from the New York Times or (heaven forbid the question) Rupert Murdoch’s confections? The answer is in my opinion: Thomson Reuters does the trust thing and is a professional publisher. I don’t want to explain that in the world of Lord Thomson of Fleet that publishing is publishing. Nope. Not going there. Thomson Reuters is a custom made billiard cue, not one of those bar pool cheapos.

As appropriate to today’s Thomson Reuters, the news appeared in Thomson’s own news releases first; for example, “Thomson Reuters Profit Beats Estimates Amid AI Push.” Yep, AI drives profits. That’s the “m” in money. Plus, late last year this article found its way to the law firm market (yep, that’s the second “m”): “Morgan Lewis and Thomson Reuters Enter into Partnership to Put Law Firms’ Needs at the Heart of AI Development.”

Now the third “m” or mania. Here’s a representative story, “Thomson Reuters to Invest US$8 billion in a Substantial AI-Focused Spending Initiative.” You can also check out the Financial Times’s report at this link.

Thomson Reuters is a $7 billion corporation. If the $8 billion number is on the money, the venerable news outfit is going to spend the equivalent of one year’s revenue acquiring and investing in smart software. In terms of professional publishing, this chunk of change is roughly the equivalent of Sam AI-Man’s need for trillions of dollars for his smart software business.

Several thoughts struck me as I was reading about the $8 billion investment in smart software:

- In terms of publishing or more narrowly professional publishing, $8 billion will take some time to spend. But time is not on the side of publishing decision making processes. When the check is written for an AI investment, there may be some who ask, “Is this the correct investment? After all, aren’t we professional publishers serving lawyers, accountants, and researchers?”

- The US legal processes are interesting. But the minor challenge of Crown copyright adds a bit of spice to certain investments. The UK government itself is reluctant to push into some AI areas due to concerns that certain information may not be available unless the red tape about copyright has been trimmed, rolled, and put on the shelf. Without being disrespectful, Thomson Reuters could find that some of the $8 billion is headed into its clients’ pockets as legal challenges make their way through courts in Britain, Canada, and the US and probably some frisky EU states.

- The game for AI seems to be breaking in two, into what a former Greek minister calls the techno feudal set up. On one hand, there are giant technology centric companies (Thomson Reuters is not one of the club members). These are Google- and Microsoft-scale outfits with infrastructure, data, customers, and multiple business models. On the other hand, there are the Product Watch outfits which are using open source and APIs to create “new” and “important” AI businesses, applications, and solutions. In short, there are some barons and a whole grab-bag of lesser folk. Is Thomson Reuters going to be able to run with the barons? Remember, please, the barons are riding stallions. Thomson Reuters-type firms either walk or ride donkeys.

Net net: If Thomson Reuters spends $8 billion on smart software, how many lawyers, accountants, and researchers will be put out of work? The risks are not just bad AI investments. The threat may be to gut the billing power of the paying customers for Thomson Reuters’ content. This will be entertaining to watch.

PS. The third “m”? It is mania, AI mania.

Stephen E Arnold, March 11, 2024

ACM: Good Defense or a Business Play?

March 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Professional publishers want to use the trappings of peer review, standards, tradition, and quasi academic hoo-hah to add value to their products; others want a quasi-monopoly. Think public legal filings and stuff in a high school chemistry book. The customers of professional publishers are typically not the folks at the pizza joint on River Road in Prospect, Kentucky. The business of professional publishing is an interesting one, but in the wild and crazy world of collapsing next-gen publishing, professional publishing is often ignored. A publisher conference aimed at professional publishers is quite different from the Jazz Age South by Southwest shindig.

Yep, free. Thanks, MSFT Copilot. How’s that security today?

But professional publishers have been in the news. Examples include the dust up about academics making up data. The big time president of the much-honored Stanford University took intellectual short cuts and quit late last year. Then there was the nasty issue about data and bias at the esteemed Harvard University. Plus, a number of bookish types have guess-timated that a hefty percentage of research studies contain made-up data. Hey, you gotta publish to get tenure or get a grant, right?

But there is an intruder in the basement of the professional publishing club. The intruder positions itself in the space between the making up of some data and the professional publishing process. That intruder is ArXiv, an open-access repository of electronic preprints and postprints (known as e-prints) approved for posting after moderation, according to Wikipedia. (Wikipedia is the cancer which killed the old-school encyclopedias.) Plus, there are services which offer access to professional content without paying for the right to host the information. I won’t name these services because I have no desire to have legal eagles circle about my semi-functioning head.

Why do I present this grade-school level history? I read “CACM Is Now Open Access.” Let’s let the Association of Computing Machinery explain its action:

For almost 65 years, the contents of CACM have been exclusively accessible to ACM members and individuals affiliated with institutions that subscribe to either CACM or the ACM Digital Library. In 2020, ACM announced its intention to transition to a fully Open Access publisher within a roughly five-year timeframe (January 2026) under a financially sustainable model. The transition is going well: By the end of 2023, approximately 40% of the ~26,000 articles ACM publishes annually were being published Open Access utilizing the ACM Open model. As ACM has progressed toward this goal, it has increasingly opened large parts of the ACM Digital Library, including more than 100,000 articles published between 1951–2000. It is ACM’s plan to open its entire archive of over 600,000 articles when the transition to full Open Access is complete.

The decision was not an easy one. Money issues rarely are.

I want to step back and look at this interesting change from a different point of view:

- Getting a degree today is less of a must have than when I was a wee dinobaby. My parents told me I was going to college. Period. I learned how much effort was required to get my hands on academic journals. I was a master of knowing that Carnegie-Mellon had new but limited bound volumes of certain professional publications. I knew what journals were at the University of Pittsburgh. I used these resources when the Duquesne Library was overrun with the faithful. Now “researchers” can zip online and whip up astonishing results. Google-type researchers prefer the phrase “quantumly supreme results.” This social change is one factor influencing the ACM.

- Stabilizing revenue streams means pulling off a magic trick. Sexy conferences and special events complement professional association membership fees. Reducing costs means knocking off the now very, very expensive printing, storing, and shipping of physical journals. The ACM seems to have figured out how to keep the lights on and the computing machine types spending.

- ACM members can use ACM content the way they use the content of a pirate library or the feel-good ArXiv outfit. The move helps neutralize discontent among the membership, and it is good PR.

These points raise a question; to wit: In today’s world how relevant will a professional association and its professional publications be going forward? The ACM states:

By opening CACM to the world, ACM hopes to increase engagement with the broader computer science community and encourage non-members to discover its rich resources and the benefits of joining the largest professional computer science organization. This move will also benefit CACM authors by expanding their readership to a larger and more diverse audience. Of course, the community’s continued support of ACM through membership and the ACM Open model is essential to keeping ACM and CACM strong, so it is critical that current members continue their membership and authors encourage their institutions to join the ACM Open model to keep this effort sustainable.

Yep, surviving in a world of faux expertise.

Stephen E Arnold, March 8, 2024

Forget the Words. Do Short-Form Video by Hiring a PR Professional

March 1, 2024

This essay is the work of a dumb humanoid. No smart software required.

I think “Everyone’s a Sellout Now” is about 4,000 words. The main idea is that traditional publishing is roached. Artists and writers must learn to do video editing or have enough of mommy and daddy’s money to pay someone to promote the creator’s output. The essay is well written; however, I am not sure it conveys a TikTok fact unknown or hiding in the world of BlueSky-type services.

This bright young student should have used a ChatGPT-type service. Thanks, MSFT Copilot. At least you are outputting, which is more than I can say for your fierce but lagging competitor.

I noted this passage:

Because self-promotion sucks.

I think I agree, but why not hire an “output handler”? The OH does the PR.

Here’s another quote to note:

The problem is that America more or less runs on the concept of selling out.

Is there a fix for the gasoline of America? Yes. The essay asserts:

author-content creators succeed by making the visually uninteresting labor of typing on a laptop worthwhile to watch.

The essay concludes with this less-than-uplifting comment:

To achieve the current iteration of the American dream, you’ve got to shout into the digital void and tell everyone how great you are. All that matters is how many people believe you.

Downer? Yes, and what makes it fascinating is that the author gets paid for writing. I think this is a “real job.”

Several observations:

- I think smart software is going to do more than write wacko stuff for SmartNews-type publications.

- Readers of “downer” essays are likely to go more “down”; that is, become less positive and increasingly antagonistic to what makes the US of A tick.

- The essay delivers the news about the importance of TikTok without pointing out that the service is China-affiliated and provides content not permitted for consumption in China.

Net net: Hire a gig worker to do the OH. Pay for PR. Quit complaining or complain in fewer words.

PS. The categorical affirmative of “everyone” is disproved with a single example. As I have pointed out in an essay about a grousing Xoogler, I operate differently. Therefore, the “everyone” is like a fuzzy antecedent. Sloppy.

Stephen E Arnold, March 1, 2024