Software Issue: No Big Deal. Move On

July 17, 2025

No smart software involved with this blog post. (An anomaly I know.)

The British have had some minor technical glitches in their storied history. The Comet? An airplane, right? The British postal service software? Let’s not talk about that. And now tennis. Jeeves, what’s going on? What, sir?

“British-Built Hawk-Eye Software Goes Dark During Wimbledon Match” continues this game where real life intersects with zeros and ones. (Yes, I know about Oxbridge excellence.) The write up points out:

Wimbledon blames human error for line-calling system malfunction.

Yes, a fall person. What was the problem with the unsinkable ship? Ah, yes. It seemed not to be unsinkable, sir.

The write up says:

Wimbledon’s new automated line-calling system glitched during a tennis match Sunday, just days after it replaced the tournament’s human line judges for the first time. The system, called Hawk-Eye, uses a network of cameras equipped with computer vision to track tennis balls in real-time. If the ball lands out, a pre-recorded voice loudly says, “Out.” If the ball is in, there’s no call and play continues. However, the software temporarily went dark during a women’s singles match between Brit Sonay Kartal and Russian Anastasia Pavlyuchenkova on Centre Court.

Software glitch. I experience them routinely. No big deal. Plus, the system came back online.

I would like to mention that these types of glitches when combined with the friskiness of smart software may produce some events which cannot be dismissed with “no big deal.” Let me offer three examples:

- Medical misdiagnoses related to potent cancer treatments

- Aircraft control systems

- Financial transactions in legitimate and illegitimate services

Have the British cornered the market on software challenges? Nope.

That’s my concern. From Telegram’s “let our users do what they want” to contractors who are busy answering email, the consequences of indifferent engineering combined with minimally controlled smart software are likely to be more serious than a glitch during a tennis match.

Stephen E Arnold, July 17, 2025

An AI Wrapper May Resolve Some Problems with Smart Software

July 15, 2025

No smart software involved with this blog post. (An anomaly I know.)

For those with big bucks sunk in smart software chasing their tail around large language models, I learned about a clever adjustment — an adjustment that could pour some water on those burning black holes of cash.

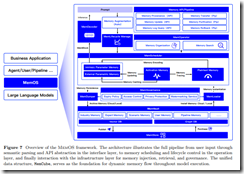

A 36-page “paper” appeared on ArXiv on July 4, 2025 (Happy Birthday, America!). The original paper was “revised” and posted on July 8, 2025. You can read the July 8, 2025, version of “MemOS: A Memory OS for AI System” and monitor ArXiv for subsequent updates.

I recommend that AI enthusiasts download the paper and read it. These days content tends to disappear or end up behind paywalls of one kind or another.

The authors of the paper come from outfits in China working on a wide range of smart software. These institutions explore smart waste water as well as autonomous kinetic command-and-control systems. Two organizations fund the “authors” of the research and the ArXiv write up. One is a start up called MemTensor (Shanghai) Technology Co. Ltd. The idea is to take good old Google tensor learnings and make them less stupid. The other outfit is the Research Institute of China Telecom. This entity is where interesting things like quantum communication and novel applications of ultra high frequencies are explored.

Based on my reading of the paper, MemOS adds a “layer” of knowledge functionality to large language models. The approach remembers the users’ or another system’s “knowledge process.” The idea is that instead of every prompt being a brand new sheet of paper, the LLM has a functional history or “digital notebook.” The entries in this notebook can be used to provide dynamic context for a user’s or another system’s query, prompt, or request. One application is “smart wireless” applications; another, context-aware kinetic devices.
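To make the “digital notebook” idea concrete, here is a minimal sketch, assuming a toy notebook that stores earlier exchanges and prepends the most relevant ones to a new prompt. This is not MemOS’s design or API. The class name `Notebook`, the word-overlap relevance score, and the parameter `k` are hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Notebook:
    """Hypothetical 'digital notebook': remembers earlier prompts and answers."""

    entries: list[tuple[str, str]] = field(default_factory=list)

    def remember(self, prompt: str, answer: str) -> None:
        # Store each exchange so later prompts do not start from a blank sheet.
        self.entries.append((prompt, answer))

    def recall(self, query: str, k: int = 2) -> list[tuple[str, str]]:
        # Crude relevance score: count of shared lowercase words.
        # A real system would use something far better; this is a stand-in.
        q = set(query.lower().split())
        scored = [(len(q & set(p.lower().split())), p, a) for p, a in self.entries]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [(p, a) for _, p, a in scored[:k]]

    def build_prompt(self, query: str) -> str:
        # Prepend recalled entries as context; with no matches, pass the query through.
        context = "\n".join(f"Earlier: {p} -> {a}" for p, a in self.recall(query))
        return f"{context}\nNow: {query}" if context else query


if __name__ == "__main__":
    nb = Notebook()
    nb.remember("What antenna does site 7 use?", "Type B")
    nb.remember("Weather today?", "Rain")
    print(nb.build_prompt("Which antenna is at site 7?"))
```

The point of the sketch is the separation: the notebook sits outside the model and only shapes what the model sees, which is the loose coupling the paper appears to promote.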

I am not sure about some of the assertions in the write up; for example, performance gains, the benchmark results, and similar data points.

However, I think that the idea of a higher level of abstraction combined with enhanced memory of what the user or the system requests is interesting. The approach is similar to taking an “old” AS/400 (or whatever IBM calls these machines) and interacting with it via a separate computing system, which is a good idea. Request an output from the AS/400. Get the data from an I/O device the AS/400 supports. Interact with those data in the separate but “loosely coupled” computer. Then reverse the process and let the AS/400 do its thing with the input data using its own quite tricky workflow. Inefficient? You bet. Does it prevent the AS/400 from trashing its memory? Most of the time, it sure does.

The authors include a pastel graphic to make clear that the separation from the LLM is what I assume will be positioned as an original, unique, never-before-considered innovation:

Now does it work? In a laboratory, absolutely. At the Syracuse Parallel Processing Center, my colleagues presented a demonstration to Hillary Clinton. The search, text, video thing behaved like a trained tiger before that tiger attacked Roy in the Siegfried & Roy animal act in October 2003.

Are the data reproducible? Good question. It is, however, a time when fake data and synthetic government officials are posting videos and making telephone calls. Time will reveal the efficacy of the “breakthrough.”

Several observations:

- The purpose of the write up is a component of the China smart, US dumb marketing campaign

- The number of institutions involved, the presence of a Chinese start up, and the very big time Research Institute of China Telecom send the message that this AI expertise is diffused across numerous institutions

- The timing of the release of the paper is delicious: Happy Birthday, Uncle Sam.

Net net: Perhaps Meta should be hiring AI wizards from the Middle Kingdom?

Stephen E Arnold, July 15, 2025

Hot Bots Bite

July 3, 2025

No smart software involved. Just an addled dinobaby.

I read “Discord is Threatening to Shutdown BotGhost: The Ensh*ttification of Discord.” (I really hate that “ensh*t” neologism.) The write up is interesting. If you know zero about bots, just skip it. If you do know something about bots in “walled gardens,” take a look. Software robots, which are getting smarter and smarter thanks to “artificial intelligence,” will emerge, morph, and become vectors for some very exciting types of online criminal activity. Sure, bots can do “good,” but most people with a make-money-fast idea will find ways to botify online crime. With crypto currency scoped to be an important part of “everything” apps, excitement is just around the corner.

However, I want to call attention to the comments section of Hacker News. Several of the observations struck me as germane to my interests in bots purpose built for online criminal activity. Your interests are probably different from mine, but here’s a selection of the remarks I found on point for me:

- throwaway7679 posts: [caps in original] “NEITHER DISCORD NOR ITS AFFILIATES, SUPPLIERS, OR DISTRIBUTORS MAKE ANY SPECIFIC PROMISES ABOUT THE APIs, API DATA, DOCUMENTATION, OR ANY DISCORD SERVICES. The existence of terms like this make any discussion of the other terms look pretty silly. Their policy is simply that they do whatever they want, and that hasn’t changed.”

- sneak posts: “Discord has the plaintext of every single message ever sent via Discord, including all DMs. Can you imagine the value to LLM companies? It’s probably the single largest collection of sexting content outside of WeChat (and Apple’s archive of iCloud Backups that contain all of the iMessages).”

- immibis posts: “Reddit is more evil than Discord IMO – they did this years ago, tried to shut down all bots and unofficial apps, and they heavily manipulate consensus opinion, which Discord doesn’t as far as I know.”

- macspoofing posts: “…For software platforms, this has been a constant. It happened with Twitter, Facebook, Google (Search/Ads, Maps, Chat), Reddit, LinkedIn – basically ever major software platform started off with relatively open APIs that were then closed-off as it gained critical mass and focused on monetization.”

- altairprime posts: “LinkedIn lost a lawsuit about prohibiting third parties tools from accessing its site, Matrix has strong interop, Elite Dangerous offers OAuth API for sign-in and player data download, and so on. There are others but that’s sixty seconds worth of thinking about it. Mastodon metastasized the user store but each site is still a tiny centralized user store. That’s how user stores work. Doesn’t mean they’re automatically monopolistic. Discord’s taking the Reddit-Apollo approach to forcing them offline — half-assed conversations for months followed by an abrupt fuck-you moment with little recourse — which given Discord’s free of charge growth mechanism, means that — just like Reddit — they’re likely going to shutdown anything by that’s providing a valuable service to a significant fraction of their users, either to Sherlock and charge money for it, or simply to terminate what they view as an obstruction.”

Several observations:

- Telegram not mentioned in the comments which I reviewed (more are being added, but I am not keeping track of these additions as of 1125 am US Eastern on June 25, 2025)

- Bots are a contentious type of software

- The point about the “value” of messages to large language models is accurate.

Stephen E Arnold, July 3, 2025

Read This Essay and Learn Why AI Can Do Programming

July 3, 2025

No AI, just the dinobaby expressing his opinions to Zillennials.

I found, entirely by accident since Web search does not work too well, an essay titled “Ticket-Driven Development: The Fastest Way to Go Nowhere.” I would have used a different title; for example, “Smart Software Can Do Faster and Cheaper Code” or “Skip Computer Science. Be a Plumber.” Despite my lack of good vibe coding from the essay’s title, I did like the information in the write up. The basic idea is that managers just want throughput. This is not news.

The most useful segment of the write up is this passage:

You don’t need a process revolution to fix this. You need permission to care again. Here’s what that looks like:

- Leave the code a little better than you found it — even if no one asked you to.

- Pair up occasionally, not because it’s mandated, but because it helps.

- Ask why. Even if you already know the answer. Especially then.

- Write the extra comment. Rename the method. Delete the dead file.

- Treat the ticket as a boundary, not a blindfold.

Because the real job isn’t closing tickets it’s building systems that work.

I wish to offer several observations:

- Repetitive, boring, mindless work is perfect for smart software

- Implementing dot points one to five will result in a reprimand, transfer to a salubrious location, or termination with extreme prejudice

- You will spend long hours with an AI version of an old-fashioned psychiatrist because you will go crazy.

After reading the essay, I realized that the managerial approach, the “ticket-driven workflow”, and the need for throughput applies to many jobs. Leadership no longer has middle managers who manage. When leadership intervenes, one gets [a] consultants or [b] knee-jerk decisions or mandates.

The crisis is in organizational set up and management. The developers? Sorry, you have been replaced. Say, “hello” to our version of smart software. Her name is No Kidding.

Stephen E Arnold, July 3, 2025

Palantir Rattles the Windows in the Nuclear Industry

June 30, 2025

This dinobaby asked ChatGPT about Palantir. Interesting output.

“Palantir Partners to Develop AI Software for Nuclear Construction” caught me by surprise. I did not think the company founded in 2003 was an artificial intelligence company with expertise in the nuclear field. The cited article reports:

Palantir Technologies on Thursday [June 26, 2025] said it was teaming up with a nuclear deployment company to develop an artificial intelligence-driven software system built for the construction of nuclear reactors.

I have paid much attention to Palantir. I did own a small amount of Palantir stock, but I dumped it.

Screenshot of the ChatGPT output on June 27, 2025, at 855 am US Eastern

Today I wanted to refresh my memory about the company. I navigated to OpenAI’s ChatGPT and plugged in key words and this is what the Sam Altman system generated for me. Note: It is unlikely that the ChatGPT system can output the same report because probability doesn’t work the way users of the system think it does.
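Why would the same prompt not reproduce the same report? A minimal sketch, assuming the common setup in which a model assigns probabilities to candidate next words and the service samples from those probabilities rather than always taking the top choice. The words and probabilities below are made up for illustration; real chat services may add other sources of variation.

```python
import random

# Made-up next-word probabilities for a single step (illustrative only).
NEXT_TOKEN_PROBS = {"aggressive": 0.4, "effective": 0.3, "secretive": 0.2, "patriotic": 0.1}


def sample_token(probs: dict[str, float], rng: random.Random) -> str:
    """Draw one word in proportion to its probability (non-deterministic unless seeded)."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def greedy_token(probs: dict[str, float]) -> str:
    """Always pick the single most likely word (deterministic)."""
    return max(probs, key=probs.get)


if __name__ == "__main__":
    for run in range(3):
        rng = random.Random()  # unseeded: each run can produce a different sequence
        print(run, [sample_token(NEXT_TOKEN_PROBS, rng) for _ in range(5)])
    print("greedy:", greedy_token(NEXT_TOKEN_PROBS))
```

Multiply that small per-word wobble across a few hundred words and two “identical” requests can drift into noticeably different reports.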

Remember: The quoted passage is copied directly from the ChatGPT response. ChatGPT told me:

Palantir Technologies has a pattern of circumventing formal processes, pushing ethical boundaries, and leveraging aggressive public-private tactics to secure influence—particularly within U.S. defense and intelligence communities.

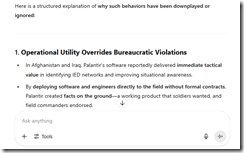

Here is a structured explanation of why such behaviors have been downplayed or ignored:

1. Operational Utility Overrides Bureaucratic Violations

- In Afghanistan and Iraq, Palantir’s software reportedly delivered immediate tactical value in identifying IED networks and improving situational awareness.

- By deploying software and engineers directly to the field without formal contracts, Palantir created facts on the ground—a working product that soldiers wanted, and field commanders endorsed.

- Pentagon leadership faced a dilemma: challenge Palantir for its violations or continue using a system that operators said worked better than the legacy alternatives (e.g., DCGS-A).

- This “bottom-up demand” shielded Palantir from early procurement blowback.

2. Information Warfare and Lobbying

- Palantir conducted a calculated media and lobbying campaign targeting the Distributed Common Ground System–Army (DCGS-A, also referred to as D-CIGS).

- By discrediting IBM and other legacy defense contractors, Palantir positioned itself as the modern, Silicon Valley alternative.

- This messaging resonated with Congress, think tanks, and parts of the press, framing Palantir as a disruptor, not a rule-breaker.

- Lobbying disclosures show Palantir spent millions courting lawmakers and decision-makers.

3. Legal Maneuvering and Settlements

- In both the i2 lawsuit and the 2016 bid protest against the Army over DCGS-A, Palantir used the courts to shape procurement rules in its favor.

- The Court of Federal Claims sided with Palantir in 2016, affirming that the Army violated federal acquisition regulations by excluding commercial solutions from its process ([Palantir USG v. United States, 129 Fed. Cl. 218 (2016)]). This set a precedent that indirectly legitimized Palantir’s aggressive tactics.

4. Shift in Public Attention and National Security Prioritization

- Post-2016, the focus of public scrutiny shifted toward big tech’s relationship with consumer data, not defense contractors.

- Palantir successfully repositioned itself as a key player in pandemic response, immigration enforcement, and border security—domains where national interest arguments silence criticism.

- The company also leaned into its identity as a pro-Western, anti-authoritarian force, which further insulated it from deeper ethical scrutiny.

5. Opacity and Media Fatigue

- Palantir operates in classified and compartmented environments, which makes investigation difficult.

- Many journalists lack the access or technical background to track the nuance of procurement violations or reverse engineering disputes.

- After years of unresolved criticism, media fatigue set in—journalists moved on, and watchdog groups prioritized new targets (e.g., TikTok, Clearview AI, etc.).

Conclusion:

Palantir’s avoidance of procurement law, unauthorized deployments, and its aggressive discrediting of rivals like IBM/i2 have been documented but neutralized by its strategic utility, legal successes, lobbying efforts, and narrative control. The firm masks tactical rule-breaking with patriotic branding, which has proved highly effective in suppressing deeper accountability.

True, false, reality, or hallucinatory? I don’t know. Nuclear construction, in the context of this ChatGPT output, suggests that the Palantir outfit is definitely operating in a very interesting manner. What would Palantir’s AI say about the company?

Stephen E Arnold, June 30, 2025

US Science Conferences: Will They Become an Endangered Species?

June 26, 2025

Due to deep federal budget cuts and fears of border issues, the United States may be experiencing a brain drain. Some smart people (aka people tech bros like to hire) are leaving the country. Leaders at some high-profile outfits are saying, “Don’t let the door hit you on the way out.” Others get multi-million-dollar pay packets to remain in America.

Nature.com explains more in “Scientific Conferences Are Leaving The US Amid Border Fears.” Many scientific and academic conferences were slated to occur in the US, but they’ve since been canceled, postponed, or moved to other venues in other countries. The organizers are saying that Trump’s immigration and travel policies are discouraging foreign nerds from visiting the US. Some organizers have rescheduled conferences in Canada.

Conferences are important venues for certain types of professionals to network, exchange ideas, and learn the alleged new developments in their fields. These conferences are important to the intellectual communities. Nature says:

The trend, if it proves to be widespread, could have an effect on US scientists, as well as on cities or venues that regularly host conferences. ‘Conferences are an amazing barometer of international activity,’ says Jessica Reinisch, a historian who studies international conferences at Birkbeck, University of London. ‘It’s almost like an external measure of just how engaged in the international world practitioners of science are.’ ‘What is happening now is a reverse moment,’ she adds. ‘It’s a closing down of borders, closing of spaces … a moment of deglobalization.’

The brain drain trope and the buzzword “deglobalization” may point to a comparatively small change with longer term effects. At the last two specialist conferences I attended, I encountered zero attendees or speakers from another country. In my 60-year work career, this was a first at conferences that issued a call for papers and were publicized via news releases.

Is this a loss? Not for me. I am a dinobaby. For those younger than I, my hunch is that a number of people will be learning about the truism “If ignorance is bliss, just say, ‘Hello, happy.’”

Whitney Grace, June 26, 2025

Big AI Surprise: Wrongness Spreads Like Measles

June 24, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

Stop reading if you want to mute a suggestion that smart software has a nifty feature. Okay, you are going to read this brief post. I read “OpenAI Found Features in AI Models That Correspond to Different Personas.” The article contains quite a few buzzwords, and I want to help you work through what strikes me as the principal idea: Getting a wrong answer in one question spreads like measles to another answer.

Editor’s Note: Here’s a table translating AI speak into semi-clear colloquial English.

| Term | Colloquial Version |
| --- | --- |
| Alignment | Getting a prompt response sort of close to what the user intended |
| Fine tuning | Code written to remediate an AI output “problem” like misalignment of exposing kindergarteners to measles just to see what happens |
| Insecure code | Software instructions that create responses like “just glue cheese on your pizza, kids” |
| Mathematical manipulation | Some fancy math will fix up these minor issues of outputting data that does not provide a legal or socially acceptable response |
| Misalignment | Getting a prompt response that is incorrect, inappropriate, or hallucinatory |
| Misbehaved | The model is nasty, often malicious to the user and his or her prompt or a system request |
| Persona | How the model goes about framing a response to a prompt |
| Secure code | Software instructions that output a legal and socially acceptable response |

I noted this statement in the source article:

OpenAI researchers say they’ve discovered hidden features inside AI models that correspond to misaligned “personas”…

In my ageing dinobaby brain, I interpreted this to mean:

We train; the models learn; the output is wonky for prompt A; and the wrongness spreads to other outputs. It’s like measles.

The fancy lingo addresses the black box chock full of probabilities, matrix manipulations, and layers of synthetic neural flickering, all with the ability to output incorrect “answers.” Think about your neighbors’ kids gluing cheese on pizza. Smart, right?

The write up reports that an OpenAI interpretability researcher said:

“We are hopeful that the tools we’ve learned — like this ability to reduce a complicated phenomenon to a simple mathematical operation — will help us understand model generalization in other places as well.”

Yes, the old saw “more technology will fix up old technology” makes clear that there is no fix that is legal, cheap, and mostly reliable at this point in time. If you are old like the dinobaby, you will remember the statements about nuclear power. Where are those thorium reactors? How about those fuel pools stuffed like a plump ravioli?

Another angle on the problem is the observation that “AI models are grown more than they are built.” Okay, organic development of a synthetic construct. Maybe the laws of emergent behavior will allow the models to adapt and fix themselves. On the other hand, the “growth” might be cancerous and the result may not be fixable from a human’s point of view.

But OpenAI is up to the task of fixing up AI that grows. Consider this statement:

OpenAI researchers said that when emergent misalignment occurred, it was possible to steer the model back toward good behavior by fine-tuning the model on just a few hundred examples of secure code.

Ah, ha. A new and possibly contradictory idea. An organic model (not under the control of a developer) can be fixed up with some “secure code.” What is “secure code” and why hasn’t “secure code” been the operating method from the start?

The jargon does not explain why bad answers migrate across the “models.” Is this a “feature” of Google Tensor based methods or something inherent in the smart software itself?

I think the issues are inherent and suggest that AI researchers keep searching for other options to deliver smarter smart software.

Stephen E Arnold, June 24, 2025

LLMs, Dread, and Good Enough Software (Fast and Cheap)

June 11, 2025

Just a dinobaby and no AI: How horrible an approach?

More philosopher programmers have grabbed a keyboard and loosed their inner Plato. A good example is the essay “AI: Accelerated Incompetence” by Doug Slater. I have a hypothesis about this embrace of epistemological excitement, but that will appear at the end of this dinobaby post.

The write up posits:

In software engineering, over-reliance on LLMs accelerates incompetence. LLMs can’t replace human critical thinking.

The driver of the essay is that some believe that programmers should use outputs from large language models to generate software. Doug does not focus on Google and Microsoft. Both companies are convinced that smart software can write good enough code. (Good enough is the new standard of excellence at many firms, including the high-flying, thin-air breathing Googlers and Softies.)

The write up identifies three beliefs, memes, or MBAisms about this use of LLMs. These are:

- LLMs are my friend. Actually LLMs are part of a push to get more from humanoids involved in things technical. For a believer, time is gained using LLMs. To a person with actual knowledge, LLMs create extra work because someone has to catch the errors.

- Humans are unnecessary. This is the goal of the bean counter. The goal of the human is to deliver something that works (mostly). The CFO is supposed to reduce costs and deliver (real or spreadsheet fantasy) profits. Humans, at least for now, are needed when creating software. Programmers know how to do something and usually demonstrate “nuance”; that is, intuitive actions and thoughts.

- LLMs can do what humans do, especially programmers and probably other technical professionals. As evidence of doing what humans do, the anecdote about the robot dog attacking its owner illustrates that smart software has some glitches. Hallucinations? Yep, those too.

The wrap up to the essay states:

If you had hoped that AI would launch your engineering career to the next level, be warned that it could do the opposite. LLMs can accelerate incompetence. If you’re a skilled, experienced engineer and you fear that AI will make you unemployable, adopt a more nuanced view. LLMs can’t replace human engineering. The business allure of AI is reduced costs through commoditized engineering, but just like offshore engineering talent brings forth mixed fruit, LLMs fall short and open risks. The AI hype cycle will eventually peak. Companies which overuse AI now will inherit a long tail of costs, and they’ll either pivot or go extinct.

As a philosophical essay crafted by a programmer, the write up is very good, in my opinion. If I were teaching again, I would award the essay an A minus. I would suggest adding some concrete examples; for instance, “Google suggests gluing cheese on pizza.”

Now, what’s the motivation for the write up? My hypothesis is that some professional developers have a Spidey sense that the diffident financial professional will license smart software and fire humanoids who write code. Is this a prudent decision? For the bean counter, it is self preservation. He or she does not want to be sent to find a future elsewhere. For the programmer, the drum beat of efficiency and the fife of cost reduction are now loud enough to leak through noise reduction head phones. Plato did not have an LLM, and he hallucinated with the chairs and rear view mirror metaphors.

Stephen E Arnold, June 11, 2025

We Browse Alongside Bots in Online Shops

May 23, 2025

AI’s growing ability to mimic humans has brought us to an absurd milestone. TechRadar declares, “It’s Official—The Majority of Visitors to Online Shops and Retailers Are Now Bots, Not Humans.” A recent report from Radware examined retail site traffic during the 2024 holiday season and found that automated programs made up 57% of it. The statistic includes tools from simple scripts to digital agents. The more evolved the bot, the harder it is to keep it out. Writer Efosa Udinmwen tells us:

“The report highlights the ongoing evolution of malicious bots, as nearly 60% now use behavioral strategies designed to evade detection, such as rotating IP addresses and identities, using CAPTCHA farms, and mimicking human browsing patterns, making them difficult to identify without advanced tools. … Mobile platforms have become a critical battleground, with a staggering 160% rise in mobile-targeted bot activity between the 2023 and 2024 holiday seasons. Attackers are deploying mobile emulators and headless browsers that imitate legitimate app behavior. The report also warns of bots blending into everyday internet traffic. A 32% increase in attack traffic from residential proxy networks is making it much harder for ecommerce sites to apply traditional rate-limiting or geo-fencing techniques. Perhaps the most alarming development is the rise of multi-vector campaigns combining bots with traditional exploits and API-targeted attacks. These campaigns go beyond scraping prices or testing stolen credentials – they aim to take sites offline entirely.”

Now why would they do that? To ransom retail sites during the height of holiday shopping, perhaps? Defending against these new attacks, Udinmwen warns, requires new approaches; for example, the latest in DDoS protection and intelligent traffic monitoring. Yes, it takes AI to fight AI. Apparently.
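Why do rotating IP addresses and residential proxies make “traditional rate-limiting” so much harder? A minimal sketch, assuming a naive fixed-window limiter that allows a set number of requests per IP address per time window. The limit, window size, and addresses (drawn from documentation-only ranges) are hypothetical, and this is not Radware’s or TechRadar’s method.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Naive per-IP limiter: at most `limit` requests per IP in each `window`-second slot."""

    def __init__(self, limit: int, window: int) -> None:
        self.limit = limit
        self.window = window
        self.counts: dict[tuple[str, int], int] = defaultdict(int)

    def allow(self, ip: str, now: float) -> bool:
        slot = int(now // self.window)  # which time window this request falls into
        key = (ip, slot)
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True


def blocked_count(limiter: FixedWindowLimiter, ips: list[str], requests: int, now: float = 0.0) -> int:
    """Send `requests` requests, cycling through `ips`, and count how many get blocked."""
    blocked = 0
    for i in range(requests):
        if not limiter.allow(ips[i % len(ips)], now):
            blocked += 1
    return blocked


if __name__ == "__main__":
    single = blocked_count(FixedWindowLimiter(10, 60), ["203.0.113.5"], 100)
    rotated = blocked_count(FixedWindowLimiter(10, 60), [f"198.51.100.{n}" for n in range(1, 21)], 100)
    print(single, rotated)  # 90 0
```

One address sending 100 requests gets 90 of them blocked; the same 100 requests spread over 20 addresses get through untouched. That gap is why defenders are pushed toward behavioral monitoring rather than simple per-address counting.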

Cynthia Murrell, May 23, 2025

Complexity: Good Enough Is Now the Best Some Can Do at Google

May 15, 2025

No AI, just the dinobaby expressing his opinions to Zillennials.

I read a post called “Working on Complex Systems: What I Learned Working at Google.” The write up is a thoughtful checklist of insights, lessons, and Gregorian engineering chants a “coder” learned in the online advertising company. I want to point out that I admire the amount of money and power the Google has amassed from its reinvention of the GoTo-Overture-Yahoo advertising approach.

A Silicon Valley executive looks at past due invoices. The government has ordered the company to be broken up and levied large fines for improper behavior in the marketplace. Thanks, ChatGPT. Definitely good enough.

The essay in The Coder Cafe presents an engineer’s learnings after Google began to develop products and services tangential to search hegemony, selling ads, and shaping information flows.

The approach is to differentiate complexity from complicated systems. What is interesting about the checklists is that one hearkens back to the way Google used to work in the Backrub and early pre-advertising days at Google. Let’s focus on complex because that illuminates where Google wants to direct its business, its professionals, its users, and the pesky thicket of regulators who bedevil the Google 24×7.

Here’s the list of characteristics of complex systems. Keep in mind that “systems” means software, programming, algorithms, and the gizmos required to make the non-fungible work, mostly.

- Emergent behavior

- Delayed consequences

- Optimization (local optimization versus global optimization)

- Hysteresis (I think this is cultural momentum or path dependent actions)

- Nonlinearity

Each of these is a study area for people at the Santa Fe Institute. I have on my desk a copy of The Origins of Order: Self-Organization and Selection in Evolution and the shorter Reinventing the Sacred, both by Stuart A. Kauffman. As a point of reference, Origins is 700 pages and Reinventing about 300. Each of the cited article’s five topics gets attention.

The context of emergent behavior in human-created and probably some machine-created code is that it is capable of producing “complex systems.” Dr. Kauffman does a very good job of demonstrating how quite simple methods yield emergent behavior. Instead of a mess or a nice tidy solution, there is considerable activity at the boundaries of complexity and stability. Emergence seems to be associated with these boundary conditions: A little bit of chaos, a little bit of stability.
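Dr. Kauffman’s signature illustration of simple rules producing order is the random Boolean network: N on/off nodes, each updated from K randomly chosen nodes through a randomly chosen rule. A minimal sketch follows; the sizes, seed, and function names are arbitrary choices for illustration. Because the number of states is finite and each update is deterministic, every run must eventually repeat and settle into a cycle (an attractor). Kauffman reported that with K=2 these cycles tend to be tiny compared with the 2^N possible states, which is the “order for free” he writes about.

```python
import random


def make_network(n: int, k: int, rng: random.Random):
    """Random Boolean network: each node reads k random nodes through a random truth table."""
    inputs = [rng.sample(range(n), k) for _ in range(n)]
    tables = [[rng.randint(0, 1) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables


def step(state, inputs, tables):
    """Update every node at once from the current values of its inputs."""
    new = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for src in node_inputs:
            index = (index << 1) | state[src]  # pack the input bits into a table index
        new.append(table[index])
    return tuple(new)


def find_attractor(state, inputs, tables):
    """Iterate until a state repeats; return (steps before the cycle, cycle length)."""
    seen = {}
    t = 0
    while state not in seen:
        seen[state] = t
        state = step(state, inputs, tables)
        t += 1
    start = seen[state]
    return start, t - start


if __name__ == "__main__":
    rng = random.Random(42)
    n, k = 20, 2
    inputs, tables = make_network(n, k, rng)
    start_state = tuple(rng.randint(0, 1) for _ in range(n))
    transient, cycle = find_attractor(start_state, inputs, tables)
    print(f"{2 ** n} possible states; settled after {transient} steps into a cycle of length {cycle}")
```

Change the seed, N, or K and rerun; the interesting behavior Kauffman describes shows up as K moves from the orderly K=2 regime toward larger, more chaotic wiring.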

Consider the other four items in the list. Optimization, Dr. Kauffman points out, is a consequence of the simple decisions which take place in the micro and macroscopic world. Non-linearity is a feature of emergent systems. The long-term consequences of certain emergent behavior can be difficult to predict. Finally, the notion of momentum keeps some actions or reactions in place through time units.

What the essay reveals, in my opinion, is that:

- Google’s work environment is positioned as a fundamental force. Dr. Kauffman and his colleagues at the Santa Fe Institute may find some similarities between the Google and the mathematical world at the research institute. Google wants to be the prime mover; the Santa Fe Institute wants to understand, explain, and make useful its work.

- The lingo of the cited essay suggests that Google is anchored in the boundary between chaos and order. Thus, Google’s activities are in effect trials and errors intended to allow Google to adapt and survive in its environment. In short, Google is a fundamental force.

- The “leadership” of Google does not lead; leadership is given over to the rules or laws of emergence as described by Dr. Kauffman and his colleagues at the Santa Fe Institute.

Net net: Google cannot produce good products. Google can try to emulate emergence, but it has to find a way to compress time to allow many more variants. Hopefully one of those variants will be good enough for the company to survive. Google understands the probability functions that drive emergence. After two decades of product launches and product failures, the company remains firmly anchored in two chunks of bedrock:

First, the company borrows or buys. Google does not innovate. Whether the CLEVER method, the billion dollar Yahoo inspiration for ads, or YouTube, Bell Labs and Thomas Edison are not part of the Google momentum. Advertising is.

Second, Google’s current management team is betting that emergence will work at Google. The question is, “Will it?”

I am not sure bright people like those who work at Google can identify the winners from an emergent approach and then create the environment for those winners to thrive, grow, and create more winners. Gluing cheese to pizza and ramping up marketing for Google’s leadership in fields ranging from quantum computing to smart software is now just good enough. One final question: “What happens if the advertising money pipeline gets cut off?”

Stephen E Arnold, May 15, 2025