Hey, Live to Be a 100 like a Tech Bro

October 8, 2024

If you, gentle reader, are like me, you have taken heart at tales of people around the world living past 100. Well, get ready to tamp down some of that hope. An interview at The Conversation declares, “The Data on Extreme Human Ageing Is Rotten from the Inside Out.” Researcher Saul Justin Newman recently won an Ig Nobel Prize (not to be confused with a Nobel Prize) for his work on data about ageing. When asked about his work, Newman summarizes:

“In general, the claims about how long people are living mostly don’t stack up. I’ve tracked down 80% of the people aged over 110 in the world (the other 20% are from countries you can’t meaningfully analyze). Of those, almost none have a birth certificate. In the US there are over 500 of these people; seven have a birth certificate. Even worse, only about 10% have a death certificate. The epitome of this is blue zones, which are regions where people supposedly reach age 100 at a remarkable rate. For almost 20 years, they have been marketed to the public. They’re the subject of tons of scientific work, a popular Netflix documentary, tons of cookbooks about things like the Mediterranean diet, and so on. Okinawa in Japan is one of these zones. There was a Japanese government review in 2010, which found that 82% of the people aged over 100 in Japan turned out to be dead. The secret to living to 110 was, don’t register your death.”

That is one way to go, we suppose. We learn of other places where Newman found bad ageing data: Europe’s “blue zones” of Sardinia in Italy and Ikaria in Greece, for example. There can be several reasons for erroneous data, such as wars or other disasters that destroyed public records, or clerical errors that set the wrong birth years in stone. But one of the biggest factors seems to be pension fraud. We learn:

“Regions where people most often reach 100-110 years old are the ones where there’s the most pressure to commit pension fraud, and they also have the worst records. For example, the best place to reach 105 in England is Tower Hamlets. It has more 105-year-olds than all of the rich places in England put together. It’s closely followed by downtown Manchester, Liverpool and Hull. Yet these places have the lowest frequency of 90-year-olds and are rated by the UK as the worst places to be an old person.”

That does seem fishy, especially since it is clear rich folks generally live longer than poor ones. (And that gap is growing, by the way.) So get those wills notarized, trusts set up, and farewell letters written sooner rather than later. We may not have as much time as we hoped.

Cynthia Murrell, October 8, 2024

DAIS: A New Attempt to Make AI Play Nicely with Humans

September 20, 2024

This essay is the work of a dumb dinobaby. No smart software required.

How about a decentralized artificial intelligence “association”? One has been set up by Michael Casey, the former chief content officer at Coindesk. (Coindesk reports about the bright, sunny world of crypto currency and related topics.) I learned about this society in — you guessed it — Coindesk’s online information service called Coindesk. The article “Decentralized AI Society Launched to Fight Tech Giants Who ‘Own the Regulators’” is interesting. I like the idea that “tech giants” own the regulators. This is an observation with which Apple and Google might not agree. Both “tech giants” have been facing some unfavorable regulatory decisions. If these regulators are “owned,” I think the “tech giants” need to exercise their leadership skills to make the annoying regulators go away. One resigned in the EU this week, but as Shakespeare said of lawyers, let’s drown them. So far the “tech giants” have been bumbling along, growing bigger as a result of feasting on data and amplifying allegedly monopolistic behaviors which just seem to pop up, rules or no rules.

Two experts look at what emerged from a Petri dish of technological goodies. Quite a surprise I assume. Thanks, MSFT Copilot. Good enough.

The write up reports:

Industry leaders have launched a non-profit organization called the Decentralized AI Society (DAIS), dedicated to tackling the probability of the monopolization of the artificial intelligence (AI) industry.

What is the DAIS outfit setting out to do? Here’s what Coindesk reports and this is a quote of the bullets from the write up:

Bringing capital to the decentralized AI world in what has already become an arms race for resources like graphical processing units (GPUs) and the data centers that compute together.

Shaping policy to craft AI regulations.

Education and promotion of decentralized AI.

Engineering to create new algorithms for learning models in a distributed way.

These are interesting targets. I want to point out that “decentralization” is the opposite of what the “tech giants” have already put in place; that is, concentration of money, talent, and infrastructure. Even old dogs like Oracle are now hopping on the centralized bandwagon. Even newcomers want to get as many cattle as possible into the killing chute before the glamor of AI begins to lose some sparkles.

Several observations:

- DAIS has some crypto roots. These may become positive or negative. Right now regulators are interested in crypto, as are other enforcement entities.

- One of the Arnold Laws of Online is that centralization, consolidation, and concentration are emergent behaviors for online products and services. Countering this “law” and its “emergent” functionality is going to take more than conferences, a Web site, and some “logical” ideas which any “rational” person would heartily endorse. But emergent is tough to stop based on my experience.

- Singapore has become a hot spot for certain financial and technical activities. The problem is that nation-states may not want to be inhibited in their AI ambitions. Some may find the notion of “education” a problem as well because curricula must conform to pre-defined frameworks. Distributed is not a pre-defined anything; it is the opposite of controlled and, therefore, likely to be a bit of a problem.

Net net: Interesting idea. But Amazon, Google, Facebook, Microsoft, and some other outfits may want to talk about “distributed” but really mean the technological notion is okay, but we want as much of the money as we can get.

Stephen E Arnold, September 20, 2024

Rapid Change: The Technological Meteor Causing Craziness

September 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The mantra “Move fast and break things” creates opportunities for entrepreneurs and mental health professionals. “Eminent Scientist Richard Dawkins Reveals Fascinating Theory Behind West’s Mental Health Crisis” quotes Dr. Dawkins:

‘Certainly, the rate at which we are evolving genetically is miniscule compared to the rate at which we are evolving non-genetically, culturally,’ Dawkins told the hosts of the TRIGGERnometry podcast. ‘And much of the mental illness that afflicts people may be because we are in a constantly changing unpredictable environment,’ the biologist added, ‘in a way that our ancestors were not.’

Thanks, Microsoft Copilot. Is that a Windows Phone doing the flame out thing?

The write up reports:

Dawkins expressed more direct concerns with other aspects of human technology’s impact on evolution: climate change and basic self-reliance in the face of a new Dark Age. ‘The internet is a huge change, it’s gigantic change,’ he noted. ‘We’ve become adapted to it with astonishing rapidity.’ ‘if we lost electricity, if we suddenly lost the technology we’re used to,’ Dawkins worried, humanity might not be able to even ‘begin’ to adapt in time, without great social upheaval and death… ‘Man-made extinction,’ he said, ‘it’s just as bad as the others. I think it’s tragic.’

There you go, death.

I know that brilliant people often speak carefully. Experts take time to develop their knowledge base and put words together that make complex ideas easy to understand.

From my redoubt in rural Kentucky, I have watched the panoply of events parading across my computer monitor. Among the notable moments were:

- Images from US cities showing homeless people slumped over, either from scrolling on their mobile phones or from the impact of certain compounds on their bodies

- Young people looting stores and noting similar items offered for sale on Craigslist.com-type sites

- Graphs of US academic performance illustrating the winners and losers of educational achievement tests

- The number of people driving around at times I associated with being in an office at “work” when I was younger

- Advertisements for prescription drugs with peculiar names and high-resolution images of people with smiles and contented lives but for the unnamed disease plaguing the otherwise cheerful folk.

What are the links between these unrelated situations and online access? I think I have a reasonably good idea. Why have experts, parents, and others required decades to figure out that flows of information are similar to sand-blasting systems? Provide electronic information to an organization, and it begins to decompose. The “bonds” which hold the people, processes, and products together are weakened. Then some break. Pump electronic information into younger people. They begin to come apart too. Give college students a tool to write their essays. Like lemmings, many take the AI solution and watch TikToks.

I am pleased that Dr. Dawkins has identified a problem. Now what’s the fix? The digital meteor has collided with human civilization. Can the dinosaurs be revivified?

Stephen E Arnold, September 6, 2024

Good Enough: The New Standard of Excellence

August 20, 2024

![green-dino_thumb_thumb_thumb_thumb_t[1] green-dino_thumb_thumb_thumb_thumb_t[1]](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t1_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

I read an interesting essay about software development. “[The] Biggest Productivity Killers in the Engineering Industry” presents three issues which add to the time and cost of a project. Let’s look at each of these factors and then one trivial downstream consequence of implementing these productivity touchpoints.

The three killers are:

- Working on a project until it meets one’s standards of “perfectionism.” Like “love” and “ethics”, perfectionism is often hard to define without a specific context. A designer might look at an interface and its colors and say, “It’s perfect.” The developer or, heaven forbid, the client looks and says, “That sucks.” Oh, oh.

- Stalling; that is, not jumping right into a project and making progress. I worked at an outfit which valued what it called “an immediate and direct response.” The idea is that action is better than reaction. Plus it demonstrates that one is not fooling around.

- Context switching; that is, dealing with other priorities or interruptions.

I want to highlight one of these “killers”: the need for “good enough.” The essay contains some useful illustrations. Here’s the one for the perfectionism-good enough trade off. The idea is pretty clear. Chasing “perfect” for the software or some other task means that more time is required. If something takes too long, then the value of chasing perfectionism hits a cost wall. Therefore, one should trade off time and value by turning in the work when it is good enough.

The logic is understandable. I do have one concern not addressed in the essay. I believe my concern applies to the other two productivity killers, stalling and interruptions (my term for context switching).

What is this concern?

How about doors falling off aircraft, stranded astronauts, cybersecurity which fails to protect Social Security Numbers, and city governments that cannot determine if compromised data were “good” or “corrupted.” We just know the data were compromised. There are other examples; for instance, the CrowdStrike misstep which affected only a few million people. How did CrowdStrike happen? My hunch is that “good enough” thinking was involved, along with someone putting off making sure the internal controls were actually controlling, plus interruptions: the person responsible for software controls was pulled into a meeting instead of finishing and checking his or her work.

The difficulty is composed of several capabilities; specifically:

- Does the person doing the job know how to make it work in a good enough manner? In my experience, the boss may not and simply wants the fix implemented now or the product shipped immediately.

- Does the company have a culture of excellence or is it similar to big outfits which cannot deliver live streaming content, allow reviewers to write about a product without threatening them, or provide tactics which kill people because no one on the team understands the concept of ethical behavior? Frankly, today I am not sure any commercial enterprise cares about much other than revenue.

- Does anyone in a commercial organization have responsibility to determine the practical costs of shipping a product or delivering a service that does not deliver reliable outputs? Reaction to failed good enough products and services is, in my opinion, the management method applied to downstream problems.

Net net: Good enough, like it or not, is the new gold standard. Or, is that standard like the Olympic medals, an amalgam? The “real” gold is a veneer; the “good” is a coating on enough.

Stephen E Arnold, August 20, 2024

Suddenly: Worrying about Content Preservation

August 19, 2024

![green-dino_thumb_thumb_thumb_thumb_t[1] green-dino_thumb_thumb_thumb_thumb_t[1]](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t1_thumb-1.gif) This essay is the work of a dumb dinobaby. No smart software required.

Digital preservation may be becoming a hot topic for those who rarely think about finding today’s information tomorrow or even later today. Two write ups provide some hooks on which thoughts about finding information could be hung.

The young scholar faces some interesting knowledge hurdles. Traditional institutions are not much help. Thanks, MSFT Copilot. Is Outlook still crashing?

The first concerns PDFs. The essay and how-to is “Classifying All of the PDFs on the Internet.” A happy quack to the individual who pursued this project, presented findings, and provided links to the data sets. Several items struck me as important in this project research report:

- Tracking down PDF files on the “open” Web is not something that can be done with a general Web search engine. The takeaway for me is that PDFs, like PowerPoint files, are either skipped or not crawled. The author had to resort to other, programmatic methods to find these file types. If an item cannot be “found,” it ceases to exist. How about that for an assertion, archivists?

- The distribution of document “source” across the author’s prediction classes splits out mathematics, engineering, science, and technology. Considering these separate categories as one makes clear that the PDF universe is about 25 percent of the content pool. Since technology is a big deal for innovators and money types, losing or not being able to access these data suggests a knowledge hurdle today and tomorrow in my opinion. An entity capturing these PDFs and making them available might have a knowledge advantage.

- Entities like national libraries and individualized efforts like the Internet Archive are not capturing the full sweep of PDFs based on my experience.

My reading of the essay made me recognize that access to content on the open Web is perceived to be easy and comprehensive. It is not. Your mileage may vary, of course, but this write up illustrates a large, multi-terabyte problem.

The second story about knowledge comes from the Epstein-enthralled institution’s magazine. This article is “The Race to Save Our Online Lives from a Digital Dark Age.” To make the urgency of the issue more compelling and better for the Google crawling and indexing system, this subtitle adds some lemon zest to the dish of doom:

We’re making more data than ever. What can—and should—we save for future generations? And will they be able to understand it?

The write up states:

For many archivists, alarm bells are ringing. Across the world, they are scraping up defunct websites or at-risk data collections to save as much of our digital lives as possible. Others are working on ways to store that data in formats that will last hundreds, perhaps even thousands, of years.

The article notes:

Human knowledge doesn’t always disappear with a dramatic flourish like GeoCities; sometimes it is erased gradually. You don’t know something’s gone until you go back to check it. One example of this is “link rot,” where hyperlinks on the web no longer direct you to the right target, leaving you with broken pages and dead ends. A Pew Research Center study from May 2024 found that 23% of web pages that were around in 2013 are no longer accessible.

Well, the MIT story has a fix:

One way to mitigate this problem is to transfer important data to the latest medium on a regular basis, before the programs required to read it are lost forever. At the Internet Archive and other libraries, the way information is stored is refreshed every few years. But for data that is not being actively looked after, it may be only a few years before the hardware required to access it is no longer available. Think about once ubiquitous storage mediums like Zip drives or CompactFlash.

To recap, one individual made clear that PDF content is a slippery fish. The other write up says the digital content itself across the open Web is a lot of slippery fish.

The fix remains elusive. The hurdles are money, copyright litigation, and technical constraints like storage and indexing resources.

Net net: If you want to preserve an item of information, print it out on some of the fancy Japanese archival paper. An outfit can say it archives, but in reality the information on the shelves is a tiny fraction of what’s “out there”.

Stephen E Arnold, August 19, 2024

The Customer Is Not Right. The Customer Is the Problem!

August 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The CrowdStrike misstep (more like a trivial event such as losing the cap to a Bic pen or misplacing an eraser) seems to be morphing into insights about customer problems. I pointed out that CrowdStrike in 2022 suggested it wanted to become a big enterprise player. The company has moved toward that goal, and it has succeeded in capturing considerable free marketing as well.

Two happy high-technology customers learn that they broke their system. The good news is that the savvy vendor will sell them a new one. Thanks, MSFT Copilot. Good enough.

The interesting failure of an estimated 8.5 million customers’ systems made CrowdStrike a household name. Among some airline passengers, creative people added more colorful language. Delta Airlines has retained a big time law firm. The idea is to sue CrowdStrike for a misstep that caused concession sales at many airports to go up. Even Panda Chinese looks quite tasty after hours spent in an airport choked with excited people, screaming babies, and stressed out over achieving business professionals.

“Microsoft Claims Delta Airlines Declined Help in Upgrading Technology After Outage” reports that like CrowdStrike, Microsoft’s attorneys want to make quite clear that Delta Airlines is the problem. Like CrowdStrike, Microsoft tried repeatedly to offer a helping hand to the airline. The airline ignored that meritorious, timely action.

Like CrowdStrike, Delta is the problem, not CrowdStrike or Microsoft whose systems were blindsided by that trivial update issue. The write up reports:

Mark Cheffo, a Dechert partner [another big-time law firm] representing Microsoft, told Delta’s attorney in a letter that it was still trying to figure out how other airlines recovered faster than Delta, and accused the company of not updating its systems. “Our preliminary review suggests that Delta, unlike its competitors, apparently has not modernized its IT infrastructure, either for the benefit of its customers or for its pilots and flight attendants,” Cheffo wrote in the letter, NBC News reported. “It is rapidly becoming apparent that Delta likely refused Microsoft’s help because the IT system it was most having trouble restoring — its crew-tracking and scheduling system — was being serviced by other technology providers, such as IBM … and not Microsoft Windows,” he added.

The language in the quoted passage, if accurate, is interesting. For instance, there is the comparison of Delta to other airlines which “recovered faster.” Delta was not able to recover faster. One can conclude that Delta’s slowness is the reason the airline was dead on the hot tarmac longer than more technically adept outfits. Among customers grounded by the CrowdStrike misstep, Delta was the problem. Microsoft, as outstanding as its systems are, wants to make darned sure that Delta’s allegations of corporate malfeasance go nowhere fast. That intent oozes from this characterization and comparison.

Also, Microsoft’s big-time attorney has conducted a “preliminary review.” No in-depth study of fouling up the inner workings of Microsoft’s software is needed. The big-time lawyers have determined that “Delta … has not modernized its IT infrastructure.” Okay, that’s good. Attorneys are skillful evaluators of another firm’s technological infrastructure. I did not know big-time attorneys had this capability, but as a dinobaby, I try to learn something new every day.

Plus the quoted passage makes clear that Delta did not want help from either CrowdStrike or Microsoft. But the reason is clear: Delta Airlines relied on other firms like IBM. Imagine. IBM, the mainframe people, the former love buddy of Microsoft in the OS/2 days, and the creator of the TV game show phenomenon Watson.

As interesting as this assertion that Delta is to blame for making some airports absolute delights during the misstep is, it seems to me that CrowdStrike and Microsoft do not want to be in court, having to explain the global impact of misplacing that ballpoint pen cap.

The other interesting facet of the approach is the idea that the best defense is a good offense. I find the approach somewhat amusing. The customer, not the people licensing software, is responsible for its problems. These vendors made an effort to help. The customer, who screwed up its own Rube Goldberg machine, did not accept these generous offers of help. Therefore, the customer caused the financial downturn, relying on outfits like the laughable IBM.

Several observations:

- The “customer is at fault” is not surprising. End user licensing agreements protect the software developer, not the outfit who pays to use the software.

- For CrowdStrike and Microsoft, a loss in court to Delta Airlines will stimulate other inept customers to seek redress from these outstanding commercial enterprises. Delta’s litigation must be stopped and quickly using money and legal methods.

- None of the yip-yap about “fault” pays much attention to the people who were directly affected by the trivial misstep. Customers, regardless of the position in the food chain of revenue, are the problem. The vendors are innocent, and they have rights too just like a person.

For anyone looking for a new legal matter to follow, the CrowdStrike and Microsoft versus Delta Airlines dispute may be a replacement for assorted murders, sniping among politicians, and disputes about “get out of jail free cards.” The vloggers and the poohbahs have years of interactions to observe and analyze. Great stuff. I like the customer is the problem twist too.

Oh, I must keep in mind that I am at fault when a high-technology outfit delivers low-technology.

Stephen E Arnold, August 7, 2024

Agents Are Tracking: Single Web Site Version

August 6, 2024

This essay is the work of a dumb humanoid. No smart software required.

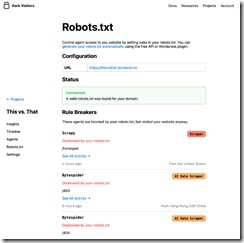

How many software robots are crawling (copying and indexing) a Web site you control now? This question can be answered by a cloud service available from DarkVisitors.com.

The Web site includes a useful list of these software robots (what many people call “agents” which sounds better, right?). You can find the list of about 800 bots (as of July 30, 2024) on the DarkVisitors’ Web site at this link. There is a search function so you can look for a bot by name; for example, Omgili (the Israeli data broker Webz.io). Please note that the list contains categories of agents; for example, “AI Data Scrapers”, “AI Search Crawlers,” and “Developer Helpers,” among others.

The Web site also includes links to a service called “Set Up Your Robots.txt.” The idea is that one can link a Web site’s robots.txt file to DarkVisitors. Then DarkVisitors will update your Web site automatically to block crawlers, bots, and agents. The specific steps to make this service work are included on the DarkVisitors.com Web site.
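For readers who want to see what a robots.txt block list actually does, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. It does not show how DarkVisitors works or use its API; the robots.txt text, the agent names other than Omgili, and the `blocked_agents` helper are my own illustrative assumptions. The sketch only checks which named agents a given robots.txt would turn away.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration only; a real file
# would list whichever agents the site owner wants to block.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: omgili
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt, agents, url="https://example.com/"):
    """Return the agents that the robots.txt rules forbid from fetching url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    print(blocked_agents(ROBOTS_TXT, ["GPTBot", "omgili", "Googlebot"]))
```

Keep in mind that robots.txt is a request, not an enforcement mechanism. A bot that ignores the file will crawl anyway.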

The basic service is free. However, if you want analytics and a couple of additional features, the cost as of July 30, 2024, is $10 per month.

An API is also available. Instructions for implementing the service are available as well. Plus, a WordPress plug in is available. The cloud service is provided by Bit Flip LLC.

Stephen E Arnold, August 6, 2024

Spotting Machine-Generated Content: A Work in Progress

July 31, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

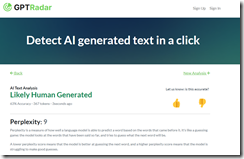

Some professionals want to figure out if a chunk of content is real, fabricated, or fake. In my experience, making that determination is difficult. For those who want to experiment with identifying weaponized, generated, or AI-assisted content, you may want to review the tools described in “AI Tools to Detect Disinformation – A Selection for Reporters and Fact-Checkers.” The article groups tools into categories. For example, there are utilities for text, images, video, and five bonus tools. There is a suggestion to address the bot problem. The write up is intended for “journalists,” a category which I find increasingly difficult to define.

The big question is, of course, do these systems work? I tried to test the tool from FactiSearch and the link 404ed. The service is available, but a bit of clicking is involved. I tried the Exorde tool and was greeted with a prompt to register for a free trial.

I plugged some machine-generated text produced with the You.com “Genius” LLM system into GPT Radar (not in the cited article’s list by the way). That system happily reported that the sample copy was written by a human.

The test content was not. I plugged in some text I wrote and the system reported:

Three items in my own writing were identified as text written by a large language model. I don’t know whether to be flattered or horrified.

The bottom line is that systems designed to identify machine-generated content are a work in progress. My view is that as soon as a bright young spark rolls out a new detection system, the LLM output becomes better. So a cat-and-mouse game ensues.

Stephen E Arnold, July 31, 2024

Thinking about AI Doom: Cheerful, Right?

July 22, 2024

This essay is the work of a dumb humanoid. No smart software required.

I am not much of a philosopher psychologist academic type. I am a dinobaby, and I have lived through a number of revolutions. I am not going to list the “next big things” that have roiled the world since I blundered into existence. I am changing my mind. I have memories of crouching in the hall at Oxon Hill Grade School in Maryland. We were practicing for the atomic bomb attack on Washington, DC. I think I was in the second grade. Exciting.

The AI powered robot wants the future experts in hermeneutics to be more accepting of the technology. Looks like the robot is failing big time. Thanks, MSFT Copilot. Got those fixes deployed to the airlines yet?

Now another “atomic bomb” is doing the James Bond countdown: 009, 008, and then James cuts the wire at 007. The world was saved for another James Bond sequel. Wow, that was close.

I just read “Not Yet Panicking about AI? You Should Be – There’s Little Time Left to Rein It In.” The essay seems to be a trifle dark. Here’s a snippet I circled:

With technological means, we have accomplished what hermeneutics has long dreamed of: we have made language itself speak.

Thanks to Dr. Francis Chivers, one of my teachers at Duquesne University, I actually know a little bit about hermeneutics. May I share?

Hermeneutics is the theory and methodology of interpretation of words and writings. One should consider content in its historical, cultural, and linguistic context. The idea is to figure out the underlying messages, intentions, and implications of texts by doing academic gymnastics.

Now the killer statement:

Jacques Lacan was right; language is dark and obscene in its depths.

I presume you know well the work of Jacques Lacan. But if you have forgotten, the canny psychologist got himself kicked out of the International Psychoanalytic Association (no mean feat as I recall) for his ideas about desire. Think Freud on steroids.

The write up uses these everyday references to make the point:

If our governments summon the collective will, they are very strong. Something can still be done to rein in AI’s powers and protect life as we know it. But probably not for much longer.

Okay. AI is going to screw up the world. I think I have heard that assertion when my father told me about the computer lecture he attended at an accounting refresher class. That fear he manifested because he thought he would lose his job to a machine attracted me to the dark unknown of zeros and ones.

How did that turn out? He kept his job. I think mankind has muddled through the computer revolution, the space revolution, the wonder drug revolution, the automation revolution, yada yada.

News flash: The AI revolution was around long before the whiz kids at Google disclosed Transformers. I think the author of this somewhat fearful write up is similar to my father, who projected on computerized accounting his fear that he would be harmed by punched cards.

Take a deep breath. The sun will come up tomorrow morning. People who know about hermeneutics and Jacques Lacan will be able to ponder the nature of text and behavior. In short, worry less. Be less AI-phobic. The technology is here. It is not going away, not getting under the thumb of any one government including China’s, and not causing eternal darkness. Sorry to disappoint you.

Stephen E Arnold, July 22, 2024

Looking for the Next Big Thing? The Truth Revealed

July 18, 2024

![dinosaur30a_thumb_thumb_thumb_thumb_[1] dinosaur30a_thumb_thumb_thumb_thumb_[1]](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/dinosaur30a_thumb_thumb_thumb_thumb_1_thumb.gif) This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Big means money, big money. I read “Twenty Five Years of Warehouse-Scale Computing,” authored by Googlers who definitely are into “big.” The write up is history from the point of view of engineers who built a giant online advertising and surveillance system. In today’s world, when a data topic is raised, it is big data. Everything is Texas-sized. Big is good.

This write up is a quasi-scholarly, scientific-type sales pitch for the wonders of the Google. That’s okay. It is a literary form comparable to an epic poem or a jazzy H.L. Mencken essay from the days when people read magazines and newspapers. Let’s take a quick look at the main point of the article and then consider its implications.

I think this passage captures the zeitgeist of the Google on July 13, 2024:

From a team-culture point of view, over twenty five years of WSC design, we have learnt a few important lessons. One of them is that it is far more important to focus on “what does it mean to land” a new product or technology; after all, it was the Apollo 11 landing, not the launch, that mattered. Product launches are well understood by teams, and it’s easy to celebrate them. But a launch doesn’t by itself create success. However, landings aren’t always self-evident and require explicit definitions of success — happier users, delighted customers and partners, more efficient and robust systems – and may take longer to converge. While picking such landing metrics may not be easy, forcing that decision to be made early is essential to success; the landing is the “why” of the project.

A proud infrastructure plumber knows that his innovations allow the home owner to collect rent from AirBnB rentals. Thanks, MSFT Copilot. Interesting image because I did not specify gender or ethnicity. Does my plumber look like this? Nope.

The 13-page paper includes numerous statements, and different readers may find different ones more important. But I like this passage because it makes the point about Google’s failures. There is no reference to smart software, but for me it is tough to read any Google prose and not think in terms of Code Red, the crazy flops of Google’s AI implementations, and the protestations of Googlers about quantum supremacy or some other projection of inner insecurity the company’s geniuses concoct. Don’t you want to have an implant that makes Google’s knowledge of “facts” part of your being? America’s founding fathers were not diverse, but Google has different ideas about reality.

This passage directly addresses failure. A failure is a prelude to a soft landing or a perfect landing. The only problem with this mindset is that Google has managed one perfect landing: Its derivative online advertising business. The chatter about scale is a camouflage tarp pulled over the mad scramble to find a way to allow advertisers to pay Google money. The “invention” was forced upon those at Google who wanted those ad dollars. The engineers did many things to keep the money flowing. The “landing” is the fact that the regulators turned a blind eye to Google’s business practices and the wild and crazy engineering “fixes” worked well enough to allow more “fixes.” Somehow the mad scramble in the 25 years of “history” continues to work.

Until it doesn’t.

The case in point is Google’s response to the Microsoft OpenAI marketing play. Google’s ability to scale has not delivered. What delivers at Google is ad sales. The “scale” capabilities work quite well for advertising. How does the scale work for AI? Based on the results I have observed, the AI pullbacks suggest some issues exist.

What’s this mean? Scale and the cloud do not solve every problem or provide a slam dunk solution to a new challenge.

The write up offers a different view:

On one hand, computing demand is poised to explode, driven by growth in cloud computing and AI. On the other hand, technology scaling slowdown poses continued challenges to scale costs and energy-efficiency

Google sees running out of chip innovations, power, cooling, and other parts of the scale story as an opportunity. Sure it is. Google’s future looks bright. Advertising has been and will be a good business. The scale thing? Plumbing. Let’s not forget what matters at Google: Selling ads and renting infrastructure to people who no longer have on-site computing resources. Google is hoping to be the AirBnB of computation. And sell ads on Tubi and other ad-supported streaming services.

Stephen E Arnold, July 18, 2024