The Google and Its AI Peers Guzzle Water. Yep, Guzzle

October 6, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Much has been written about generative AI’s capabilities and its potential ramifications for business and society. Less has been stated about its environmental impact. The AP highlights this facet of the current craze in its article, “Artificial Intelligence Technology Behind ChatGPT Was Built in Iowa—With a Lot of Water.” Iowa? Who knew? Turns out, there is good reason to base machine learning operations, especially the training, in such a chilly environment. Reporters Matt O’Brien and Hannah Fingerhut write:

“Building a large language model requires analyzing patterns across a huge trove of human-written text. All of that computing takes a lot of electricity and generates a lot of heat. To keep it cool on hot days, data centers need to pump in water — often to a cooling tower outside its warehouse-sized buildings. In its latest environmental report, Microsoft disclosed that its global water consumption spiked 34% from 2021 to 2022 (to nearly 1.7 billion gallons, or more than 2,500 Olympic-sized swimming pools), a sharp increase compared to previous years that outside researchers tie to its AI research.”

During the same period, Google’s water usage surged by 20%, according to the company. Notably, Google was strategic about where it guzzled this precious resource: it kept usage steady in Oregon, where there was already criticism about its water usage. But its consumption doubled outside Las Vegas, famously one of the nation’s hottest and driest regions. Des Moines, Iowa, on the other hand, is a much cooler and wetter locale. We learn:

“In some ways, West Des Moines is a relatively efficient place to train a powerful AI system, especially compared to Microsoft’s data centers in Arizona that consume far more water for the same computing demand. … For much of the year, Iowa’s weather is cool enough for Microsoft to use outside air to keep the supercomputer running properly and vent heat out of the building. Only when the temperature exceeds 29.3 degrees Celsius (about 85 degrees Fahrenheit) does it withdraw water, the company has said in a public disclosure.”

Though merely a trickle compared to what the same work would take in Arizona, that summer usage is still a lot of water. Microsoft’s Iowa data centers swilled about 11.5 million gallons in July of 2022, the month just before GPT-4 graduated from training. Naturally, both Microsoft and Google insist they are researching ways to use less water. It would be nice if environmental protection were more than an afterthought.

The write-up introduces us to Shaolei Ren, a researcher at the University of California, Riverside. His team is working to calculate the environmental impact of generative AI enthusiasm. Their paper is due later this year, but they estimate ChatGPT swigs more than 16 ounces of water for every five to 50 prompts, depending on the servers’ location and the season. Will big tech find a way to curb AI’s thirst before it drinks us dry?
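For readers who like to check the arithmetic, here is a rough sketch of what the quoted figures imply. The pool dimensions (50 m by 25 m by 2 m) and the use of US fluid ounces and US gallons are my assumptions, not the AP's, and the function names are made up for illustration.

```python
# Back-of-the-envelope checks on the water figures quoted above.
# Assumptions (not from the article): US gallons, and an Olympic pool
# of 50 m x 25 m x 2 m, which is 2,500 cubic meters.

GALLONS_PER_CUBIC_METER = 264.172  # US gallons in one cubic meter
OLYMPIC_POOL_GALLONS = 2_500 * GALLONS_PER_CUBIC_METER  # about 660,000 gallons


def ounces_per_prompt(ounces: float, prompts: int) -> float:
    """Water per prompt if `ounces` of water covers `prompts` prompts."""
    return ounces / prompts


def pools_filled(gallons: float) -> float:
    """Number of Olympic pools a volume in US gallons would fill."""
    return gallons / OLYMPIC_POOL_GALLONS


# Ren's estimate: 16 ounces for every 5 to 50 prompts.
high_end = ounces_per_prompt(16, 5)   # fewest prompts per 16 ounces
low_end = ounces_per_prompt(16, 50)   # most prompts per 16 ounces

# Microsoft's reported 2022 consumption: nearly 1.7 billion gallons.
microsoft_pools = pools_filled(1.7e9)

# Iowa data centers, July 2022: about 11.5 million gallons over 31 days.
iowa_gallons_per_day = 11.5e6 / 31

print(f"{low_end:.2f} to {high_end:.2f} ounces per prompt")
print(f"{microsoft_pools:,.0f} Olympic pools")
print(f"{iowa_gallons_per_day:,.0f} gallons per day in Iowa")
```

Under these assumptions, the estimate works out to roughly a third of an ounce to a bit over three ounces per prompt, and the pool count lands a little above the 2,500 the AP quotes.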

Cynthia Murrell, October 6, 2023

AI and Increasing Inequality: Smart Software Becomes the New Dividing Line

August 16, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“Will AI Be an Economic Blessing or Curse?” engages in prognosticative “We will be sorry” analysis. Yep, I learned about this idea in Dr. Francis Chivers’ class about Epistemology at Duquesne University. Wow! Exciting. The idea is that knowing is phenomenological. Today’s manifestation of this mental process is in the “fake data” and “alternative facts” approach to knowledge.

An AI engineer cruising the AI highway. This branch of the road does not permit boondocking or begging. MidJourney disappointed me again. Sigh.

Nevertheless, the article makes a point I find quite interesting; specifically, the author invites me to think about the life of a peasant in the Middle Ages. There were some technological breakthroughs despite the Dark Ages and the charmingly named Black Death. Even though plows improved and water wheels were rediscovered, peasants were born into a social system. The basic idea was that the poor could watch rich people riding through fields and sometimes a hovel in pursuit of fun, someone who did not meet their quota of wool, or a toothsome morsel. You will have to identify a suitable substitute for the morsel token.

The write up points out (incorrectly in my opinion):

“AI has got a lot of potential – but potential to go either way,” argues Simon Johnson, professor of global economics and management at MIT Sloan School of Management. “We are at a fork in the road.”

My view is that the AI smart software speedboat is roiling the data lakes. Once those puppies hit 70 mph on the water, the casual swimmers or ill prepared people living in houses on stilts will be disrupted.

The write up continues:

Backers of AI predict a productivity leap that will generate wealth and improve living standards. Consultancy McKinsey in June estimated it could add between $14 trillion and $22 trillion of value annually – that upper figure being roughly the current size of the U.S. economy.

On the bright side, the write up states:

An OECD survey of some 5,300 workers published in July suggested that AI could benefit job satisfaction, health and wages but was also seen posing risks around privacy, reinforcing workplace biases and pushing people to overwork.

“The question is: will AI exacerbate existing inequalities or could it actually help us get back to something much fairer?” said Johnson.

My view is not populated with an abundance of happy faces. Why? Here are my observations:

- Those with knowledge about AI will benefit

- Those with money will benefit

- Those in the right place at the right time and good luck as a sidekick will benefit

- Those not in Groups one, two, and three will be faced with the modern equivalent of laboring as a peasant in the fields of the Loire Valley.

The idea that technology democratizes is not in line with my experience. Sure, most people can use an automatic teller machine and a mobile phone functioning as a credit card. Those who can use, however, are not likely to find themselves wallowing in the big bucks of the firms or bureaucrats who are in the AI money rushes.

Income inequality is one visible facet of a new data flyway. Some get chauffeured; others drift through it. Many stand and marvel at rushing flows of money. Some hold signs with messages like “Work needed” or “Homeless. Please, help.”

The fork in the road? Too late. The AI Flyway has been selected. From my vantage point, one benefit will be that those who can drive have some new paths to explore. For many, maybe orders of magnitude more people, the AI Byway opens new areas for those who cannot afford a place to live.

The write up assumes the fork to the AI Flyway has not been taken. It has, and it is not particularly scenic when viewed from a speeding start up gliding on neural networks.

Stephen E Arnold, August 16, 2023

Self Driving Cars: Would You Run in Front of One?

August 7, 2023

I worked in what is called by some “Plastic Fantastic.” If you have not heard the phrase, you may have missed the quips which included this phrase in several high profile, big money companies in Silicon Valley. Oh, include Cupertino and a few other outposts. Walnut Creek, I am sorry for you.

If one were to live in Berkeley and have the thrilling option of driving over the Bay Bridge or taking a chance with 92 skidoo, the idea of having a car which would drive itself at three miles per hour is obvious. Also, anyone with an opportunity to use 101 or the Foothills would have a similar thought. Why drive? Why not rig a car to creep along?

One bright driver says, “Self driving cars will solve this problem.” His passenger says, “Isn’t this a self driving car? Aren’t we going the wrong way on a one-way street?” MidJourney understands traffic jams because its guardrails are high.

And what do you know? The self driving car idea captured attention. How is that going after much money and many years of effort? And here’s a better question: Would you run in front of one? Would you encourage your child to stand in front of one to test the auto-braking function? Go to a dealership selling smart cars and ask the sales professional (if you can find one) to let you drive a vehicle toward the sales professional. I tried this at two dealerships and what do you know? No auto sales professional accepted this idea. One dealership had an orange cone which I could use to test auto braking.

I read “America’s Most Tech-Forward City Has Doubts about Self-Driving Cars.” I do not want to be harsh, but cities do not have doubts. People do. The Murdoch “real” journalists report that people (not cities) will embrace the idea of letting a Silicon Valley inspired vehicle ferry them around without a bit of trepidation. Okay, fear. There I said it. How about the confidence a vehicle without a steering wheel or brake inspires?

If you want to read what is painfully obvious, navigate to the original story.

Oh, the writer is unlikely to be found standing on 101 testing the efficacy of the smart cars. Mr. Murdoch? Yeah, he might give it a whirl. My suggestion is to be confident in the land of Plastic Fantastic. It thrives on illusion. Reality can kill, crash, or just stall at a key intersection. AI can hallucinate and may overlook the squashed jogger. But whiz kids sitting on 101 envision a smarter world. Doesn’t everyone sit on highways like 101 every day?

Stephen E Arnold, August 7, 2023

Learning Means Effort, Attention, and Discipline. No, We Have AI, or AI Has Us

July 4, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-8.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

My newsfeed of headlines produced a three-year young essay titled “How to Learn Better in the Digital Age.” The date on the document is November 2020. (Have you noticed how rarely a specific date appears on a document?)

MidJourney provided this illustration of me doing math homework with both hands in 1952. I was fatter and definitely uglier than the representation in the picture. I want to point out: [a] no mobile phone, [b] no calculator, [c] no radio or TV, [d] no computer, and [e] no mathy father breathing down my neck. (He was busy handling the finances of a weapons manufacturer which dabbled in metal coat hangers.) Was homework hard? Nope, just part of the routine in Campinas, Brazil, and the thrilling Calvert Course.

The write up contains a simile which does not speak to me; namely, the functioning of the human brain is emulated to some degree in smart software. I am not in that dog fight. I don’t care because I am a dinobaby.

For me, the important statement in the essay is this one:

… we need to engage with what we encounter if we wish to absorb it long term. In a smartphone-driven society, real engagement, beyond the share or like or retweet, got fundamentally difficult – or, put another way, not engaging got fundamentally easier. Passive browsing is addictive: the whole information supply chain is optimized for time spent in-app, not for retention and proactivity.

I marvel at the examples of a failure to learn. United Airlines strands people. The CEO has a fix: Take a private jet. Clerks in convenience stores cannot make change even when the cash register displays the amount to return to the customer. Yeah, figuring out pennies, dimes, and quarters is a tough one. New and expensive autos near where I live sit on the side of the road awaiting a tow truck from the Land Rover- or Maserati-type dealer. The local hospital has been unable to verify appointments and allegedly find some X-ray images eight weeks after a cyber attack on an insecure system. Hip, HIPAA hooray, Hip HIPAA hooray. I have a basket of other examples, and I would wager $1.00US you may have one or two to contribute. But why? The impacts of poor thinking, reading, math, and writing skills are abundant.

Observations:

- AI will take over routine functions because humans are less intelligent and diligent than when I was a fat, slow learning student. AI is fast and good enough.

- People today will not be able to identify or find information to validate or invalidate an output from a smart system; therefore, those who are intellectually elite will have their hands on machines that direct behavior, money, and power.

- Institutions — staffed by employees who look forward to a coffee break more than working hard — will gladly license the smart workflow revolution.

Exciting times coming. I am delighted I am a dinobaby and not a third-grade student juggling a mobile, an Xbox, an iPad, and a new M2 Air. I was okay with a paper and pencil. I just wanted to finish my homework and get the best grade I could.

Stephen E Arnold, July

Milestones in 2023 Technology: Wondrous Markers Indeed

June 21, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-32.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I zipped through my messages this morning. Two items helped me think about the milieu of mid 2023.

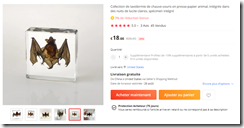

The first is a memento of the health challenges and their downstream effects. The bat in a lucite block is available in some countries from AliExpress. You can — if you wish — try to order the item from this url which I verified on June 21, 2023, at 10:38 am US Eastern time: https://shorturl.at/wyAFL. My reaction to this item was slightly negative, but I think it would be a candidate for a gain-of-function researcher at the local college or university.

The other item is “What is AI Marketing? A Basic Guide to Explosive Growth in 2023.” This article — allegedly written by a humanoid — states:

The real deal with AI marketing is about augmenting human capabilities, not eliminating them. Think of it like your very own marketing superpower, helping you reach the right people, at the right time, with the right message. Or are you worried AI might make marketing impersonal and robotic? Well, the surprising truth is that AI can actually make your marketing more human. It can save you oodles of time and energy so that you can focus on the tasks that truly matter.

I am not sure about the phrase “truly matter,” but the concept of using smart software to bombard me with more advertising is definitely a thought starter. My question is, “How can I filter these synthetic messages?”

The question you may want to ask me is, “How on earth are bats associated with a disease linked to smart software used to generate advertising that truly matters?”

The answer in my opinion is “gain of function.” The idea is not to make something better. The objective is to amplify certain effects.

Now that I think about it, perhaps the bat and its potential to make life miserable is the perfect metaphor for summer 2023. Perhaps I should use You.com’s smart software to help me think the idea through?

Nah. I am good. Diseased bats and smart software lashed to marketing. Perfect.

Stephen E Arnold, June 21, 2023

Software Cannot Process Numbers Derived from Getty Pix, Honks Getty Legal Eagle

June 6, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-3.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Getty Asks London Court to Stop UK Sales of Stability AI System.” The write up comes from a service which, like Google, bandies about the word trust with considerable confidence. The main idea is that software is processing images available in the form of Web content, converting these to numbers, and using the zeros and ones to create pictures.

The write up states:

The Seattle-based company [Getty] accuses the company of breaching its copyright by using its images to “train” its Stable Diffusion system, according to the filing dated May 12, [2023].

I found this statement in the trusted write up fascinating:

Getty is seeking as-yet unspecified damages. It is also asking the High Court to order Stability AI to hand over or destroy all versions of Stable Diffusion that may infringe Getty’s intellectual property rights.

When I read this, I wondered if the scribes, upon learning about the threat Gutenberg’s printing press represented, were experiencing their “Getty moment.” The advanced technology of the adapted olive press and hand carved wooden letters meant that the quill pen champions had to adapt or find their future emptying garderobes (aka chamber pots).

Scribes prepare to throw a Gutenberg printing press and the evil innovator Gutenberg in the Rhine River. Image was produced by the evil incarnate code of MidJourney. Getty is not impressed like letters on paper with the outputs of Beelzebub-inspired innovations.

How did that rebellion against technology work out? Yeah. Disruption.

What happens if the legal systems in the UK and possibly the US jump on the no innovation train? Japan’s decision points to one option: Using what’s on the Web is just fine. And China? Yep, those folks in the Middle Kingdom will definitely conform to the UK and maybe US rules and regulations. What about outposts of innovation in Armenia? Johnnies on the spot (not pot, please). But what about those computer science students at Cambridge University? Jail and fines are too good for them. To the gibbet.

Stephen E Arnold, June 6, 2023

Will McKinsey Be Replaced by AI: Missing the Point of Money and Power

May 12, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read a very unusual anti-big company and anti-big tech essay called “Will AI Become the New McKinsey?” The thesis of the essay in my opinion is expressed in this statement:

AI is a threat because of the way it assists capital.

The argument upon which this assertion is perched boils down to this: capitalism, in its present form, in today’s US of A is roached. The choices available to make life into a hard rock candy mountain world are stark: Boost capitalism so that it, like cancer, kills everything including itself. The other alternative is to wait for the “government” to implement policies to convert the endless scroll into a post-1984 theme park.

Let’s consider McKinsey. Whether the firm likes it or not, it has become the poster child and revenue model for other services firms. Paying to turn on one’s steering wheel heating element is an example of McKinsey-type thinking. The fentanyl problem is an unintended consequence of offering some baller ideas to a few big pharma outfits in the US. There are other examples. I prefer to focus on some intellectual characteristics which make the firm into the symbol of that which is wrong with the good old US of A; to wit:

- MBA think. Numbers drive decisions, not feel good ideas like togetherness, helping others, and emulating Twitch’s AI powered ask_Jesus program. If you have not seen this, check it out at this link. It has 64 viewers as I write this on May 7, 2023 at 2 pm US Eastern.

- Hitting goals. These are either expressed as targets to consultants or passed along by executives to the junior MBAs pushing the mill stone round and round with dot points, charts, graphs, and zippy jargon speak. The incentive plan and its goals feed the MBAs. I think of these entities as cattle with some brains.

- Being viewed as super smart. I know that most successful consultants know they are smart. But many smart people who work at consulting firms like McKinsey are more insecure than an 11 year old watching an Olympic gymnast flip and spin in an effortless manner. To overcome that insecurity, the MBA consultant seeks approval from his/her/its peers and from clients who eagerly pick the option the report was crafted to make a no-brainer. Yes, slaps on the back, lunch with a senior partner, and being identified as a person who would undertake grinding another rail car filled with wheat.

The essay, however, overlooks a simple fact about AI and similar “it will change everything” technology.

The technology does not do anything. It is a tool. The action comes from the individuals smart enough, bold enough, and quick enough to implement or apply it first. Once the momentum is visible, then the technology is shaped, weaponized, and guided to targets. The technology does not have much of a vote. In fact, technology is the mill stone. The owner of the cattle is running the show. The write up ignores this simple fact.

One solution is to let the “government” develop policies. Another is for the technology to kill itself. Another is for those with money, courage, and brains to develop an ethical mindset. Yeah, good luck with these.

The government works for the big outfits in the good old US of A. No firm action against monopolies, right? Why? Lawyers, lobbyists, and leverage.

What’s the essay achieve? [a] Calling attention to McKinsey helps McKinsey sell. [b] Trying to gently push a lefty idea is tough when those who can’t afford an apartment in Manhattan are swiping their iPhones and posting on BlueSky. [c] Accepting the reality that technology serves those who understand and have the cash to use that technology to gain more power and money.

Ugly? Only for those excluded from the top of the social pyramid and juicy jobs at blue chip consulting firms, expertise in manipulating advanced systems and methods, and the mindset to succeed in what is the only game in town.

PS. MBAs make errors like the Bud Light promotion. That type of mistake, not opioid tactics, may be an instrument of change. But taming AI to make a better, more ethical world? That’s a comedy hook worthy of the next Sundar & Prabhakar show.

Stephen E Arnold, May 12, 2023

Researchers Break New Ground with a Turkey Baster and Zoom

April 4, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I do not recall much about my pre-school days. I do recall dropping off at different times my two children at their pre-schools. My recollections are fuzzy. I recall horrible finger paintings carried to the automobile and several times a month, mashed pieces of cake. I recall quite a bit of laughing, shouting, and jabbering about classmates whom I did not know. Truth be told I did not want to meet these progeny of highly educated, upwardly mobile parents who wore clothes with exposed logos and drove Volvo station wagons. I did not want to meet the classmates. The idea of interviewing pre-kindergarten children struck me as a waste of time and an opportunity to get chocolate Baskin-Robbins cake smeared on my suit. (I am a dinobaby, remember. Dress for success. White shirt. Conservative tie. Yada yada.)

I thought (briefly, very briefly) about the essay in Science Daily titled “Preschoolers Prefer to Learn from a Competent Robot Than an Incompetent Human.” The “real news” article reported without one hint of sarcastic ironical skepticism:

We can see that by age five, children are choosing to learn from a competent teacher over someone who is more familiar to them — even if the competent teacher is a robot…

Okay. How were these data gathered? I absolutely loved the use of Zoom, a turkey baster, and nonsense terms like “fep.”

Fascinating. First, the idea of using Zoom and a turkey baster would never have roamed across this dinobaby’s mind. Second, the intuitive leap by the researchers that pre-schoolers who finger-paint would like to undertake this deeply intellectual task with a robot, not a human. The human, from my experience, is necessary to prevent the delightful sprouts from eating the paint. Third, I wonder if the research team’s first year statistics professor explained the concept of a valid sample.

One thing is clear from the research. Teachers, your days are numbered unless you participate in the Singularity with Ray Kurzweil or are part of the school systems’ administrative group riding the nepotism bus.

“Fep.” A good word to describe certain types of research.

Stephen E Arnold, April 4, 2023

Subscription Thinking: More Risky Than 20-Somethings Think

March 2, 2023

I am delighted I don’t have to sit in meetings with GenX, GenY, and GenZ MBAs any longer. Now I talk to other dinobabies. Why am I not comfortable with the younger bright as a button humanoids? Here’s one reason: “Volkswagen Briefly Refused to Track Car with Abducted Child Inside until It Received Payment.”

I can visualize the group figuring out how to generate revenue instead of working to explain and remediate the fuel emission scam allegedly perpetrated by Volkswagen. The reasoning probably ran along the lines, “Hey let’s charge people for monitoring a VW.” Another adds: “Wow, easy money and we avoid the blow back BMW got when it wanted money for heated seats.”

Did the VW young wizards consider downsides of the problem? Did the super bright money spinners ask, “What contingencies are needed for a legitimate law enforcement request?” My hunch is that someone mentioned these and other issues, but the team was thinking about organic pizza for lunch or why the coffee pods were plain old regular coffee.

The cited article states:

The Sheriff’s Office of Lake County, Illinois, has reported on Facebook about a car theft and child abduction incident that took place last week. Notably, it said that a Volkswagen Atlas with tracking technology built in was stolen from a woman and when the police tried asking VW to track the vehicle, it refused until it received payment.

The company floundered and then assisted. The child was unharmed.

Good work VW. Now about software in your electric vehicles and the emission engineering issue? What do I hear?

The sweet notes of Simon & Garfunkel’s “The Sound of Silence”? So relaxing and stress free: Just like the chatter of those who were trying to rescue the child.

No, I never worry about how the snow plow driver gets to work, thank you. I worry about incomplete thinking and specious methods of getting money from a customer.

Stephen E Arnold, March 2, 2023

Why Governments and Others Outsource… Almost Everything

January 24, 2023

I read a very good essay called “Questions for a New Technology.” The core of the write up is a list of eight questions. Most of these are problems for full-time employees. Let me give you one example:

Are we clear on what new costs we are taking on with the new technology? (monitoring, training, cognitive load, etc)

The challenge, it strikes me, is the phrase “new technology.” By definition, most people in an organization will not know the details of the new technology. If a couple of people do, these individuals have to get the others up to speed. The other problem is that it is quite difficult for humans to look at a “new technology” and know about the knock-on or downstream effects. A good example is the craziness of Facebook’s dating objective and how the system evolved into a mechanism for social revolution. What in-house group of workers can tackle problems like that once the method leaves the dorm room?

The other questions probe similarly difficult tasks.

But my point is that most governments do not rely on their full time employees to solve problems. Years ago I gave a lecture at Cebit about search. One person in the audience pointed out that in that individual’s EU agency, third parties were hired to analyze and help implement a solution. The same behavior popped up in Sweden, the US, and Canada and several other countries in which I worked prior to my retirement in 2013.

Three points:

- Full time employees recognize the impossibility of tackling fundamental questions and don’t really try

- The consultants retained to answer the questions or help answer the questions are not equipped to answer the questions either; they bill the client

- Fundamental questions are dodged by management methods like “let’s push decisions down” or “we decide in an organic manner.”

Doing homework and making informed decisions is hard. Learning, evaluating risks, and implementing in a thoughtful manner are uncomfortable for many people. The result is the dysfunction evident in airlines, government agencies, hospitals, education, and many other disciplines. Scientific research is often non reproducible. Is that a good thing? Yes, if one lacks expertise and does not want to accept responsibility.

Stephen E Arnold, January 25, 2023