IslandInText Reborn: TLDRThis

March 16, 2020

Many years ago (maybe 25+), we tested a desktop summarization tool called IslandInText. [#1 below] I believe, if my memory is working today, this was software developed in Australia by Island Software. There was a desktop version and a more robust system for large-scale summarizing of text. In the 1980s, there was quite a bit of interest in automatic summarization of text. Autonomy’s system could be configured to generate a précis if one was familiar with that system. Google’s basic citation is a modern version of what smart software can do to suggest what’s in a source item. No humans needed, of course. Too expensive and inefficient for the big folks I assume.

For many years, human abstracting and indexing professionals were on staff. Our automated systems, despite their usefulness, could not handle nuances, special inclusions in source documents like graphs and tables, lists of entities which we processed with the controlled term MANYCOMPANIES, and other specialized functions. I would point out that most of today’s “modern” abstracting and indexing services are simply not as good as the original services like ABI / INFORM, Chemical Abstracts, Engineering Index, Predicasts, and other pioneers in the commercial database sector. (Anyone remember Ev Brenner? That’s what I thought, gentle reader. One does not have to bother oneself with the past in today’s mobile phone search expert world.)

For a number of years, I worked in the commercial database business. Because we wanted to speed the throughput of our citations in pharmaceutical, business, and other topic domains, machine text summarization was of interest to me and my colleagues.

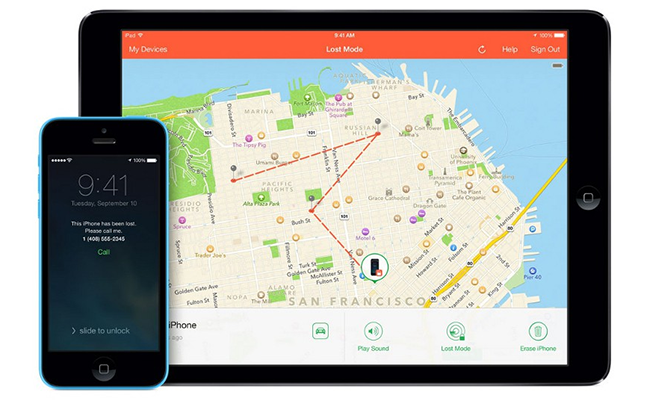

A reader informed me that a new service is available. It is called TLDRThis. Here’s what the splash page looks like:

One can paste text or provide a url, and the system returns a synopsis of the source document. (The advanced service generates a more in-depth summary, but I did not test this. I am not too keen on signing up without knowing what the terms and conditions are.) There is a browser extension for the service. For this url, the system returned this summary:

Enterprise Search: The Floundering Fish!

Stephen E. Arnold Monitors Search,Content Processing,Text Mining,Related Topics His High-Tech Nerve Center In Rural Kentucky.,He Tries To Winnow The Goose Feathers The Giblets. He Works With Colleagues,Worldwide To Make This Web Log Useful To Those Who Want To Go,Beyond Search . Contact Him At Sa,At,Arnoldit.Com. His Web Site,With Additional Information About Search Is | Oct 27, 2011 | Time Saved: 5 mins

- I am thinking about another monograph on the topic of “enterprise search.” The subject seems to be a bit like the motion picture protagonist Jason.

- The landscape of enterprise search is pretty much unchanged.

- But the technology of yesterday’s giants of enterprise search is pretty much unchanged.

- The reality is that the original Big Five had and still have technology rooted in the mid to late 1990s.

We noted several positive functions; for example, identifying the author and providing a synopsis of the source, even the goose feathers’ reference. On the downside, the system missed the main point of the article; that is, enterprise search has been a bit of a chimera for decades. Also, the system ignored the entities (company names) in the write up. These are important in my experience. People search for names, concepts, and events. The best synopses capture some of the entities and tell the reader to get the full list and other information from the source document. I am not sure what to make of the TLDRThis’ display of a picture which makes zero sense without the context of the full article. I fed the system a PDF which did not compute and I tried a bit.ly link which generated a request to refresh the page, not the summary.

To get an “advanced summary”, one must sign up. I did not choose to do that. I have added this site to our “follow” list. I will make a note to try and find out who developed this service.

The pricing ranges from free for basic summarization to $60 per year for Bronze level service. Among its features are 100 summaries per month and “exclusive features”. These are coming soon. The next level of service is $10 per month. The fee includes 300 summaries a month and “exclusive features.” These are also coming soon. The Platinum service is $20 per month and includes 1,000 summaries per month. These are “better” and will include forthcoming advanced features.

Stay tuned.

[#1] In the early 1990s, search and retrieval was starting to move from the esoteric world of commercial databases to desktop and UNIX machines. IslandSoft, founded in 1993, offered a search and retrieval system. My files from this time revealed that IslandSoft’s description of its system could be reused by today’s search and retrieval marketers. Here’s what IslandSoft said about InText:

IslandInTEXT is a document retrieval and management application for PCs and Unix workstations. IslandInTEXT’s powerful document analysis engine lets users quickly access documents through plain English queries, summarize large documents based on content rather than key words, and automatically route incoming text and documents to user-defined SmartFolders. IslandInTEXT offers the strongest solution yet to help organize and utilize information with large numbers of legacy documents residing on PCs, workstations, and servers as well as the proliferation of electronic mail documents and other data. IslandInTEXT supports a number of popular word processing formats including IslandWrite, Microsoft Word, and WordPerfect plus ASCII text.

IslandInTEXT Includes:

- File cabinet/file folder metaphor.

- HTML conversion.

- Natural language queries for easily locating documents.

- Relevancy ranking of query results.

- Document summaries based on statistical relevance from 1 to 99% of the original document—create executive summaries of large documents instantly. [This means that the user can specify how detailed the summarization was; for example, a paragraph or a page or two.]

- Summary Options. Summaries can be based on key word selection, key word ordering, key sentences, and many more.

[For example:] SmartFolder Routing. Directs incoming text and documents to user-defined folders. Hot Link Pointers. Allow documents to be viewed in their native format without creating copies of the original documents. Heuristic/Learning Architecture. Allows InTEXT to analyze documents according to the author’s style.
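The “1 to 99% of the original document” feature is easier to picture with a small example. What follows is a minimal sketch of a percentage-controlled extractive summarizer, not a reconstruction of IslandInTEXT’s engine. The word-frequency scoring, the tiny stopword list, and the function names `split_sentences` and `summarize` are all assumptions made for illustration.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not taken from any product).
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "it",
             "that", "for", "on", "with", "as", "are", "was"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p.strip() for p in parts if p.strip()]


def content_words(text):
    """Lowercased words with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, percent):
    """Keep roughly `percent` (1 to 99) of the sentences, in original order."""
    if not 1 <= percent <= 99:
        raise ValueError("percent must be between 1 and 99")
    sentences = split_sentences(text)
    if not sentences:
        return ""
    freq = Counter(content_words(text))

    def score(sentence):
        # Average document-wide frequency of the sentence's content words.
        tokens = content_words(sentence)
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    keep = max(1, round(len(sentences) * percent / 100))
    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:keep])  # restore reading order
    return " ".join(sentences[i] for i in chosen)
```

Calling `summarize(document, 10)` would return about a tenth of the sentences, and `summarize(document, 50)` about half. That is the sense in which a user could ask for a paragraph or for a page or two.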

A page for InText is still online as of today at http://www.intext.com/. The company appears to have ceased operations in 2010. Data in my files indicate that the name and possibly the code are owned by CP Software, but I have not verified this. I did not include InText in my first edition of Enterprise Search Report, which I wrote in 2003 and 2004. The company had fallen behind market leaders Autonomy, Endeca, and Fast Search & Transfer.

I am surprised at how many search and retrieval companies today are just traveling along well worn paths in the digital landscape. Does search work? Nope. That’s why there are people who specialize, remember things, and maintain personal files. Mobile device search means precision and recall are digital dodo birds in my opinion.

Stephen E Arnold, March 16, 2020

Venntel: Some Details

February 18, 2020

Venntel in Virginia has the unwanted attention of journalists. The company provides mobile location data and services. Like many of the firms providing specialized services to the US government, Venntel makes an effort to communicate with potential government customers via trade shows, informal gatherings, and referrals.

Venntel’s secret sauce is cleaner mobile data. The company says:

Over 50% of location data is flawed. Venntel’s proprietary platform efficiently distinguishes between erroneous data and data of value. The platform delivers 100% validated data, allowing your team to focus on results – not data quality.

NextGov reported in “Senator Questions DHS’ Use of Cellphone Location Data for Immigration Enforcement” some information about the company; for example:

- Customers include DHS and CBP

- Mobile and other sources of location data are available from the company

- The firm offers software

- Venntel, like Oracle and other data aggregators, obtains information from third-party sources; for example, marketing companies brokering mobile phone app data

Senator Ed Markey, a Democrat from Massachusetts, has posed questions to the low profile company and has requested answers by March 3, 2020.

A similar issue surfaced for other mobile data specialists. Other geo-analytic specialists work overtime to have zero public facing profile. An example, you ask? Try to chase down information about Geogence. (Bing and Google try their darnedest to change “Geogence” to “geofence.” This is a tribute to the name choice the stakeholders of Geogence have selected, and a clever exploitation of Bing’s and Google’s inept attempts to “help” their users find information.)

If you want to get a sense of what can be done with location data, check out this video which provides information about the capabilities of Maltego, a go-to system to analyze cell phone records and geolocate actions. The video is two years old, but it is representative of the basic functions. Some specialist companies wrap more user friendly interfaces and point-and-click templates for analysts and investigators to use. There are hybrid systems which combine Analyst Notebook type functions with access to email and mobile phone data. Unlike the Watson marketing, IBM keeps these important services in the background because the company wants to focus on the needs of its customers, not on the needs of “real” journalists chasing “real news.”

DarkCyber laments the fact that special services companies which try to maintain a low profile and serve a narrow range of customers are in the news.

Stephen E Arnold, February 18, 2020

Acquiring Data: Addressing a Bottleneck

February 12, 2020

Despite all the advances in automation and digital technology, humans are still required to manually input information into computers. While modern technology makes automation easier than ever, millions of hours are spent on data entry. Artificial intelligence and deep learning could be the key to ending data entry, says the VentureBeat article “How Rossum Is Using Deep Learning To Extract Data From Any Document.”

Rossum is an AI startup based in Prague, Czech Republic, founded by Tomas Gogar, Tomas Tunys, and Petr Baudis. Rossum was started in 2017 and its client list has grown to include top tier clients: IBM, Box, Siemens, and Bloomberg. Its recent project focuses on using deep learning to end invoice data entry. Instead of relying entirely on optical character recognition (OCR), Rossum uses “cognitive data capture” that trains machines to evaluate documents like a human. Rossum’s cognitive data capture is like an OCR upgrade:

“OCR tools rely on different sets of rules and templates to cover every type of invoice they may come across. The training process can be slow and time-consuming, given that a company may need to create hundreds of new templates and rule sets. In contrast, Rossum said its cloud-based software requires minimal effort to set up, after which it can peruse a document like a human does — regardless of style or formatting — and it doesn’t rely on fully structured data to extract the content companies need. The company also claims it can extract data 6 times faster than with manual entry while saving companies up to 80% in costs.”

Rossum’s cloud approach to cognitive data capture differentiates it from similar platforms. Because Rossum does not need on-site installation, all of Rossum’s resources and engineering go directly to client support. It is similar to Salesforce’s software-as-a-service model established in 1999.

The cognitive data capture tool works faster than its predecessors and differently from them:

“Rossum’s pretrained AI engine can be tried and tested within a couple of minutes of integrating its REST API. As with any self-respecting machine learning system, Rossum’s AI adapts as it learns from customers’ data. Rossum claims an average accuracy rate of around 95%, and in situations where its system can’t identify the correct data fields, it asks a human operator for feedback to improve from.”
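The “ask a human operator” step in the quote is a common human-in-the-loop pattern. The sketch below shows the general idea only: it does not use Rossum’s REST API, and the `ExtractedField` type, the `route_fields` function, and the 0.9 confidence threshold are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical extraction model


def route_fields(fields, threshold=0.9):
    """Split extracted fields into auto-accepted values and a human review queue.

    The threshold is an arbitrary illustrative value, not a vendor setting.
    """
    accepted = {}
    needs_review = []
    for field in fields:
        if field.confidence >= threshold:
            accepted[field.name] = field.value
        else:
            needs_review.append(field)
    return accepted, needs_review


invoice = [
    ExtractedField("invoice_number", "2020-0042", 0.99),
    ExtractedField("total", "1,250.00", 0.97),
    ExtractedField("due_date", "03/04/2020", 0.61),  # ambiguous date format
]
accepted, needs_review = route_fields(invoice)
```

In this example the ambiguous due date lands in the review queue. The operator’s correction could then be added to the training data, which is the kind of feedback loop the quote describes.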

Rossum is not seeking to replace human labor; instead, it wants to free up human time to focus on more complex problems.

Whitney Grace, February 12, 2020

TemaTres: Open Source Indexing Tool Updated

February 11, 2020

Open source software is the foundation for many proprietary software startups, including the open source developers themselves. Most open source software tends to lag in the matter of updates and patches, but TemaTres recently updated according to the blog post “TemaTres 3.1 Release Is Out! Open Source Web Tool To Manage Controlled Vocabularies.”

TemaTres is an open source vocabulary server designed to manage controlled vocabularies, taxonomies, and thesauri. The recent update includes the following:

- “Utility for importing vocabularies encoded in MARC-XML format

- Utility for the mass export of vocabulary in MARC-XML format

- New reports about global vocabulary structure (ex: https://r020.com.ar/tematres/demo/sobre.php?setLang=en#global_view)

- Distribution of terms according to depth level

- Distribution of sum of preferred terms and the sum of alternative terms

- Distribution of sum of hierarchical relationships and sum of associative relationships

- Report about terms with relevant degree of centrality in the vocabulary (according to prototypical conditions)

- Presentation of terms with relevant degree of centrality in each facet

- New options to config the presentation of notes: define specific types of note as prominent (the others note types will be presented in collapsed div).

- Button for Copy to clipboard the terms with indexing value (Copy-one-click button)

- New user login scheme (login)

- Allows to config and add Google Analytics tracking code (parameter in config.tematres.php file)

- Improvements in standard exposure of metadata tags

- Inclusion of the term notation or code in the search box predictive text

- Compatibility with PHP 7.2”

TemaTres does updates frequently, but it is monitored. The main ethos about open source is to give back as much as you take. TemaTres appears to follow this modus operandi. If TemaTres wants to promote its web image, the organization should really upgrade its Web site, fix the broken links, and provide more information on what the software actually does.

Whitney Grace, February 11, 2020

Need a Specialized String Matcher for Tracking Entities?

January 21, 2020

Specialized services are available to track strings; for example, the name of an entity (person, place, event), an email handle, or any other string. These services may not be offered to the public. A potential customer has to locate a low profile operation, go through a weird series of interactions, and then work quite hard to get a demo of the super stealthy technology. Once the “I am a legitimate customer” drill is complete, the individual wanting to use the stealthy service has to pay hundreds, thousands, or even more per month. In our DarkCyber video program we have profiled some of these businesses.

No more.

The technology and possibly a massive expansion of monitoring are poised to make tools reserved for government agencies available to anyone with an Internet connection and a credit card. Brandchirps.com provides:

Online reputation management monitoring. The idea is that when the string entered in the standing query service appears, the user will be notified. The company says:

We allow you to input your brand, your name, or other data so you make sure your reputation stays up to date.

The service tracks competitors too. The service is easy to use:

Simply enter your competitor’s names and keep track of what they are doing right, or doing wrong!
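For readers who have not worked with a standing query, here is a minimal sketch of the idea: a stored list of strings is checked against each new piece of content, and any hit produces an alert. This is not how Brandchirps works internally, which is not documented in the write up. The `StandingQuery` and `Alert` names, the whole-word matching rule, and the snippet width are assumptions.

```python
import re
from dataclasses import dataclass


@dataclass
class Alert:
    keyword: str
    source: str
    snippet: str


class StandingQuery:
    """Holds tracked strings and reports where they appear in new text."""

    def __init__(self, keywords):
        # Whole-word, case-insensitive pattern for each tracked string.
        self.patterns = {
            kw: re.compile(r"(?<!\w)" + re.escape(kw) + r"(?!\w)", re.IGNORECASE)
            for kw in keywords
        }

    def check(self, source, text, width=30):
        """Return one Alert per occurrence, with surrounding context."""
        alerts = []
        for kw, pattern in self.patterns.items():
            for match in pattern.finditer(text):
                start = max(0, match.start() - width)
                end = min(len(text), match.end() + width)
                alerts.append(Alert(kw, source, text[start:end].strip()))
        return alerts


query = StandingQuery(["Acme Corp", "geofence"])
alerts = query.check("post-1", "Reviewers say ACME CORP shipped late. Geofences are unrelated.")
```

The hard part of a real service is the content feed (Web pages, social media posts) that supplies the text to check. The matching step itself is modest, which may help explain the low price.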

How much does the service cost? Are we talking a letter verifying that you are working for law enforcement or an intelligence agency? A six figure budget? A staff of technologists?

Nope.

The cost of the service (as of January 20, 2020) is:

- $7 per month for five keywords

- $16 per month for 20 keywords

Several observations:

- The cost for this service which allegedly monitors the Web and social media is very low. Government organizations strapped for cash are likely to check out this service.

- The system does not cover the Dark Web and other “interesting” content, but that could be changed by licensing data sets from specialists, assuming legal and financial requirements of the Dark Web content aggregators can be negotiated by Brandchirps.

- It is not clear at this time if the service monitors metadata on images and videos, podcast titles, descriptions, and metadata, or other high-value content.

- The world of secret monitoring and alerts has become more accessible which can inspire innovators to make use of this tool in novel ways.

Net net: Brandchirps is one more example of a technique once removed from general public access that has lost its mantle of secrecy. Will this type of service force the hand of specialized vendors? Yep.

Stephen E Arnold, January 21, 2020

Why Archived Information Can Be Useful

January 11, 2020

There’s nothing like a ubiquitous service like email and systems for keeping copies of information. Online is interesting and often surprising. This thought struck DarkCyber while reading the Time Magazine article “‘This Airplane Is Designed by Clowns.’ Internal Boeing Messages Describe Efforts to Dodge FAA Scrutiny of MAX.” Here’s the passage of interest:

“This airplane is designed by clowns, who in turn are supervised by monkeys,” said one company pilot in messages to a colleague in 2016, which Boeing disclosed publicly late Thursday.

Will the clowns and monkeys protest?

Another statement which comes directly from the Guide Book for Captain Obvious Rhetoric, which may have influenced this Time Magazine editorial insight:

The communications threaten to upend Boeing’s efforts to rebuild public trust in the 737 Max…

Ah, email and magazines. One good thing, however. No references to AI, NLP, or predictive analytics appear in the write up.

Stephen E Arnold, January 11, 2020

Linguistics: Becoming Useful to Regular People?

January 8, 2020

Now here is the linguistic reference app I have been waiting for: IDEA’s “In Other Words.” Finally, an online resource breaks the limiting patterns left over from book-based resources like traditional dictionaries and thesauri. The app puts definitions into context by supplying real-world examples from both fiction and nonfiction works of note from the 20th and 21st centuries. It also lets users explore several types of linguistic connections. Not surprisingly, this thoroughly modern approach leverages a combination of artificial and human intelligence. Here is how they did it:

“Building on the excellent definitions written by the crowd-sourced editors at Wiktionary, IDEA’s lexicographic team wrote more than 2,700 short, digestible definitions for all common words, including ‘who,’ ‘what,’ and ‘the.’ For over 100k other words that also have Wikipedia entries, we included a snippet of the article as well. To power the app, our team created the IDEA Linguabase, a database of word relationships built on an analysis of various published and open source dictionaries and thesauri, an artificial intelligence analysis of a large corpus of published content, and original lexicographic work. Our app offers relationships for over 300,000 terms and presents over 60 million interrelationships. These include close relationships, such as synonyms, as well as broader associations and thousands of interesting lists, such as types of balls, types of insects, words for nausea, and kinds of needlework. Additionally, the app has extensive information on word families (e.g., ‘jump,’ ‘jumping’) and common usage (‘beautiful woman’ vs. ‘handsome man’), revealing words that commonly appear before or after a word in real use. In Other Words goes beyond the traditional reference text by allowing users to explore interesting facts about words and wordplay, such as common letter patterns and phonetics/rhymes.”

The team has endeavored to give us an uncluttered, intuitive UI that makes it quick to look up a word and easy to follow a chain of meanings and associations. Users can also save and share what they have found across devices. Be warned, though—In Other Words does not shy away from salty language; it even points out terms that were neutral in one time period and naughty in another. (They will offer a sanitized version for families and schools.) They say the beta version is coming soon and will be priced at $4.99, or $25 with a custom tutorial. We look forward to it.

Cynthia Murrell, January 8, 2020

Megaputer Spans Text Analysis Disciplines

January 6, 2020

What exactly do we mean by “text analysis”? That depends entirely on the context. Megaputer shares a useful list of the most popular types in its post, “What’s in a Text Analysis Tool?” The introduction explains:

“If you ask five different people, ‘What does a Text Analysis tool do?’, it is very likely you will get five different responses. The term Text Analysis is used to cover a broad range of tasks that include identifying important information in text: from a low, structural level to more complicated, high-level concepts. Included in this very broad category are also tools that convert audio to text and perform Optical Character Recognition (OCR); however, the focus of these tools is on the input, rather than the core tasks of text analysis. Text Analysis tools not only perform different tasks, but they are also targeted to different user bases. For example, the needs of a researcher studying the reactions of people on Twitter during election debates may require different Text Analysis tasks than those of a healthcare specialist creating a model for the prediction of sepsis in medical records. Additionally, some of these tools require the user to have knowledge of a programming language like Python or Java, whereas other platforms offer a Graphical User Interface.”

The list begins with two of the basics—Part-of-Speech (POS) Taggers and Syntactic Parsing. These tasks usually underpin more complex analysis. Concordance or Keyword tools create alphabetical lists of a text’s words and put them into context. Text Annotation Tools, either manual or automated, tag parts of a text according to a designated schema or categorization model, while Entity Recognition Tools often use knowledge graphs to identify people, organizations, and locations. Topic Identification and Modeling Tools derive emerging themes or high-level subjects using text-clustering methods. Sentiment Analysis Tools diagnose positive and negative sentiments, some with more refinement than others. Query Search Tools let users search text for a word or a phrase, while Summarization Tools pick out and present key points from lengthy texts (provided they are well organized). See the article for more on any of these categories.
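Of the categories above, the concordance is the simplest to show in a few lines. The following sketch assumes plain English text and a naive tokenizer. The function names `word_index` and `concordance` are illustrative and are not drawn from PolyAnalyst or any other product.

```python
import re
from collections import Counter


def tokenize(text):
    """Split text into word tokens, keeping simple apostrophe forms together."""
    return re.findall(r"\w+(?:'\w+)?", text)


def word_index(text):
    """Alphabetical list of (word, count) pairs for the text."""
    return sorted(Counter(t.lower() for t in tokenize(text)).items())


def concordance(text, keyword, width=3):
    """Keyword-in-context rows: (left context, matched token, right context)."""
    tokens = tokenize(text)
    target = keyword.lower()
    rows = []
    for i, token in enumerate(tokens):
        if token.lower() == target:
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            rows.append((left, token, right))
    return rows


sample = "Search is hard. Enterprise search is harder than web search."
rows = concordance(sample, "search", width=2)
```

Each row shows the word with a few neighbors on either side, which is what “put them into context” amounts to in practice.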

The post concludes by noting that most text analysis platforms offer one or two of the above functions, but that users often require more than that. This is where the article shows its PR roots—Megaputer, as it happens, offers just such an all-in-one platform called PolyAnalyst. Still, the write-up is a handy rundown of some different text-analysis tasks.

Based in Bloomington, Indiana, Megaputer launched in 1997. The company grew out of AI research from the Moscow State University and Bauman Technical University. Just a few of their many prominent clients include HP, Johnson & Johnson, American Express, and several US government offices.

Cynthia Murrell, January 02, 2020

Cambridge Analytica: Maybe a New Name and Some of the Old Methods?

December 29, 2019

DarkCyber spotted an interesting factoid in “HH Plans to Work with the Re-Branded Cambridge Analytica to Influence 2021 Elections.”

The new company, Auspex International, will keep former Cambridge Analytica director Mark Turnbull at the helm.

Who is HH? He is Hakainde Hichilema, at this time the leader of Zambia’s opposition United Party for National Development.

The business focus of Auspex is, according to the write up:

We’re not a data company, we’re not a political consultancy, we’re not a research company and we’re not necessarily just a communications company. We’re a combination of all four.—Ahmad Al-Khatib, a Cairo-born investor

You can obtain some information about Auspex at this url: https://www.auspex.ai/.

DarkCyber noted the use of the “ai” domain. See the firm’s “What We Believe” information at this link. It is good to have a reason to get out of bed in the morning.

Stephen E Arnold, December 29, 2019

Insight from a Microsoft Professional: Susan Dumais

December 1, 2019

Dr. Susan Dumais is a Microsoft Technical Fellow and Deputy Lab Director of MSR AI. She knows that search has evolved from discovering information to getting tasks done. In order to accomplish tasks, search queries are fundamental, and they are rooted in people’s information needs. The Microsoft Research Podcast interviewed Dr. Dumais in the episode, “HCI, IR, And The Search For Better Search With Dr. Susan Dumais.”

Dr. Dumais shared that most of her work on search stems from frustrations she encountered in her own life. These included trouble learning the Unix OS and vast amounts of spam. At the beginning of the podcast, she runs down the history of search and how it has changed in the past twenty years. Search has become more intuitive, especially given the work Dr. Dumais did on providing context to search.

“Host: Context in anything makes a difference with language and this is integrally linked to the idea of personalization, which is a buzz word in almost every area of computer science research these days: how can we give people a “valet service” experience with their technical devices and systems? So, tell us about the technical approaches you’ve taken on context in search, and how they’ve enabled machines to better recognize or understand the rich contextual signals, as you call them, that can help humans improve their access to information?

Susan Dumais: If you take a step back and consider what a web search engine is, it’s incredibly difficult to understand what somebody is looking for given, typically, two to three words. These two to three words appear in a search box and what you try to do is match those words against billions of documents. That’s a really daunting challenge. That challenge becomes a little easier if you can understand things about where the query is coming from. It doesn’t fall from the sky, right? It’s issued by a real live human being. They have searched for things in the longer term, maybe more acutely in the current session. It’s situated in a particular location in time. All of those signals are what we call context that help understand why somebody might be searching and, more importantly, what you might do to help them, what they might mean by that. You know, again, it’s much easier to understand queries if you have a little bit of context about it.”

Dr. Dumais has a practical approach to making search work for the average user. Everyday tasks, as they accumulate, shape how search develops and what it can do. She represents an enlightened technical expert who understands the perspective of the end user.

Whitney Grace, November 30, 2019