Meta Being Meta: Move Fast and Snap Threads

July 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I want to admit that as a dinobaby I don’t care about [a] Barbie, [b] X [pronounced “ech” or “yech”], twit, or tweeter, [c] Zuckbook, meta-anything, or broken threads. Others seem to care deeply. The chief TWIT (Leo Laporte), who is valiantly trying to replicate the success of the non-advertising “value for value” model for podcasting, cares about the Tweeter. He can be the one and only TWIT; the Twitter is now X [pronounced “ech” or “yech”], a delightful letter which evokes a number of interesting Web sites when auto-fill is relying on click popularity for relevance. Many of those younger than I care about the tweeter; for instance, with Twitter as a tailwind, some real journalists were able to tell their publisher employers, “I am out of here.” But with the tweeter in disarray, does an opportunity exist for the Zuck to cause the tweeter to eXit?

A modern god among mortals looks at the graffito on the pantheon. Anger rises. Blood lust enflames the almighty. Then digital divinity savagely snarls, “Attack now. And render the glands from every musky sheep-ox in my digital domain. Move fast, or you will spend one full day with Cleggus Bombasticus. And you know that is sheer sheol.” [Ah, alliteration. But what is “sheol”?]

Plus, I can name one outfit interested in the Musky Zucky digital cage match, the Bezos bulldozer’s “real” news machine. I read “Move Fast and Beat Musk: The Inside Story of How Meta Built Threads,” which was ground out by the spinning wheels of “real” journalists. I would have preferred a different title; for instance, my idea is in italics, *Move fast and zuck up!* But that’s just my addled view of the world.

The WaPo write up states:

Threads drew more than 100 million users in its first five days — making it, by some estimations, the most successful social media app launch of all time. Threads’ long-term success is not assured. Weeks after its July 5 launch, analytics firms estimated that the app’s usage dropped by more than half from its early peak. And Meta has a long history of copycat products or features that have failed to gain traction…

That’s the story. Take advantage of the Musker’s outstanding management to create a business opportunity for a blue belt in an arcane fighting method. Some allegedly accurate data suggest that “Most of the 100 million people who signed up for Threads stopped using it.”

Why would usage allegedly drop?

The Bezos bulldozer “real” news system reports:

Meta’s [Seine] Kim responded, “Our industry leading integrity enforcement tools and human review are wired into Threads.”

Yes, a quote to note.

Several observations:

- Threads arrived with the baggage of Zuckbook. Early sign ups decided to not go back to pick up the big suitcases.

- The persistence of users to send IXXes (pronounced ech, a sound similar to the “etch” in retch) illustrates one of Arnold’s Rules of Online: Once an online habit is formed, changing behavior may be impossible without just pulling the plug. Digital addiction is a thing.

- Those surfing on the tweeter to build their brand are loath to admit that without the essentially free service their golden goose is queued to become one possibly poisonous Chicken McNugget.

Snapped threads? Yes, even those wrapped tightly around the Musker. Thus, I find one of my co-workers’ quips apt: “Move fast and zuck up.”

Stephen E Arnold, July 31, 2023

Cyber Security Firms Gear Up: Does More Jargon Mean More Sales? Yes, Yes, Yes

July 31, 2023

I read a story which will make stakeholders in cyber security firms turn cartwheels. Imagine not one, not two, not three, but 10 uncertainty inducing, sleepless night making fears.

The young CEO says, “I can’t relax. I just see endless strings of letters floating before my eyes: EDR, EPP, XDR, ITDR, MTD, M, SASE, SSE, UES, and ZTNA. My heavens, ZTNA. Horrible. Who can help me?” MidJourney has a preference for certain types of feminine CEOs. I wonder if there is bias in the depths of the machine.

Navigate to “The Top 10 Technologies Defining the Future of Cybersecurity.” Read the list. Now think about how vulnerable your organization is. You will be compromised. The only question is, “When?”

What are these fear inducers? I will provide the acronyms. You will have to go to the cited article and learn what they mean. Think of this as a two-punch FUD moment. I provide the acronyms which are unfamiliar and mildly disconcerting. Then read the explanations and ask, “Will I have to buy bigger, better, and more cyber security services?” I shall answer your question this way, “Does an electric vehicle require special handling when the power drops to a goose egg?”

Here are the FUD-ronyms:

- EDR

- EPP

- XDR

- ITDR

- MTD

- M

- SASE

- SSE

- UES

- ZTNA.

Scared yet?

Stephen E Arnold, July 31, 2023

Google: Running the Same Old Game Plan

July 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Google has been running the same old game plan since the early 2000s. But some experts are unaware of its simplicity. In the period from 2002 to 2004, I did a number of reports for my commercial clients about Google. In 2004, I recycled some of the research and analysis into The Google Legacy. The thesis of the monograph, published in England by the now defunct Infonortics Ltd., explained that the infrastructure for search was enhanced to provide an alternative to commercial software for personal, business, and government use. The idea that a search-and-retrieval system based on precedent technology and funded in part by the National Science Foundation with a patent assigned to Stanford University could become Googzilla was a difficult idea to swallow. One of the investment banks that paid for our research got the message even though others did not. I wonder if that one group at the then world’s largest software company remembers my lecture about the threat Google posed to a certain suite of software applications? Probably not. The 20 somethings and the few suits at the lecture looked like kindergarteners waiting for recess.

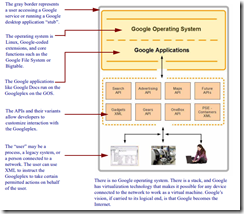

I followed up The Google Legacy with Google Version 2.0: The Calculating Predator. This monograph was again based on proprietary research done for my commercial clients. I recycled some of the information, scrubbing that which was deemed inappropriate for anyone to buy for a few British pounds. In that work, I rather methodically explained that Google’s patent documents provided useful information about why the mere Web search engine was investing in what seemed like odd-ball technologies such as software janitors. I reworked one diagram to show how the Google infrastructure operated like a prison cell or walled garden. The idea is that once one is in, one may have to work to get past the gatekeeper to get out. I know the image from a book does not translate to a blog post, but, truth be told, I am disinclined to recreate art. At age 78, it is often difficult to figure out why smart drawing tools are doing what they want, not what I want.

Here’s the diagram:

The prison cell or walled garden (2006) from Google Version 2.0: The Calculating Predator, published by Infonortics Ltd., 2006. And for any copyright trolls out there, I created the illustration 20 years ago, not Alamy and not Getty and no reputable publisher.

Three observations about the diagram are: [a] The box, prison cell, or walled garden contains entities, [b] once “in” there is a way out but the exit is via Google intermediated, defined, and controlled methods, and [c] anything in the walled garden perceives that the prison cell is the outside world. The idea obviously is for Google to become the digital world which people will perceive as the Internet.

I thought about my decades old research when I read “Google Tries to Defend Its Web Environment Integrity as Critics Slam It as Dangerous.” The write up explains that Google wants to make online activity better. In the comments to the article, several people point out that Google is using jargon and fuzzy misleading language to hide its actual intentions with the WEI.

The critics and the write up miss the point entirely: Look at the diagram. WEI, like the AMP initiative, is another method added to existing methods for Google to extend its hegemony over online activity. The patent, implement, and explain approach drags out over years. Attention spans, even for academics who make up data like the president of Stanford University, are not interested in anything other than personal goal achievement. Finding out something visible for years is difficult. When some interesting factoid is discovered, few accept it. Google has a great brand, and it cares about user experience and the other fog the firm generates.

MidJourney created this nice image of a Googler preparing for a presentation to the senior management of Google in 2001. In that presentation, the wizard was outlining Google’s fundamental strategy: Fake left, go right. The slogan for the company, based on my research, is “Keep them fooled.” Looking the wrong way is the basic rule of being a successful Googler, strategist, or magician.

Will Google WEI win? It does not matter because Google will just whip up another acronym, toss some verbal froth around, and move forward. What is interesting to me is Google’s success. Points I have noted over the years are:

- Kindergarten colors, Google mouse pads, and talking like General Electric once did about “bringing good things” continue to work

- Google’s dominance is not just accepted; changing or blocking anything Google wants to do is sacrilegious. It has become a sacred digital cow

- The inability of regulators to see Google as it is remains a constant, like Google’s advertising revenue

- Certain government agencies could not perform their work if Google were impeded in any significant way. No, I will not elaborate on this observation in a public blog post. Don’t even ask. I may make a comment in my keynote at the Massachusetts / New York Association of Crime Analysts’ conference in early October 2023. If you can’t get in, you are out of luck getting information on Point Four.

Net net: Fire up your Chrome browser. Look for reality in the Google search results. Turn cartwheels to comply with Google’s requirements. Pay money for traffic via Google advertising. Learn how to create good blog posts from Google search engine optimization experts. Use Google Maps. Put your email in Gmail. Do the Google thing. Then ask yourself, “How do I know if the information provided by Google is ‘real’?” Just don’t get curious about synthetic data for Google smart software. Predictions about Big Brother are wrong. Google, not the government, is the digital parent whom you embraced after a good “Backrub.” Why change from high school science thought processes? If it ain’t broke, don’t fix it.

Stephen E Arnold, July 31, 2023

The Frontier Club: Doing Good with AI?

July 28, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read some of the stories about several big outfits teaming to create “the frontier model forum.” I have no idea what the phrase means.

MidJourney created this interesting representation of a meeting of a group similar to the Frontier Model Forum. True, MidJourney presented young people in what seems to be an intense, intellectual discussion. Upon inspection, the subject is the décor for a high school prom. Do the decorations speak to the millions who are going without food, or do the decorations underscore the importance of high value experiences for those with good hair? I have no idea, but it reminds me of a typical high school in-group confabulation.

To fill the void, I turned to the gold standard in technology Pablum and the article “Major Generative AI Players Join to Create the Frontier Model Forum.” That’s a good start. I think I interpreted collusion between the syllables of the headline.

I noted this passage, hoping to satisfy my curiosity:

According to a statement issued by the four companies [Anthropic, Google, Microsoft, and OpenAI] Wednesday, the Forum will offer membership to organizations that design and develop large-scale generative AI tools and platforms that push the boundaries of what’s currently possible in the field. The group says those “frontier” models require participating organizations to “demonstrate a strong commitment to frontier model safety,” and to be “willing to contribute to advancing the Forum’s efforts by participating in joint initiatives.”

Definitely clear. Are there companies not in the list? I know of several in France, China has some independent free thinkers beavering away at AI, and probably a handful of others. Don’t they count?

The article makes it clear that doing good results from the “frontier” thing. I had a high school history teacher named Earl Skaggs. His avocation was documenting the interesting activities which took place on the American frontier. He was a veritable analog Wiki on the subjects of claim jumping, murder, robbery, swindling, rustling, and illegal gambling. I am confident that this high-tech “frontier” thing will be ethical, stable, and focused on the good of the people. Am I an unenlightened dinobaby?

I noted this statement:

“Companies creating AI technology have a responsibility to ensure that it is safe, secure, and remains under human control,” Brad Smith, Microsoft vice chair and president, said in a statement. “This initiative is a vital step to bring the tech sector together in advancing AI responsibly and tackling the challenges so that it benefits all of humanity.”

Mr. Smith is famous for his explanation of 1,000 programmers welded into a cyber attack force to take advantage of Microsoft. He also may be unaware of Israel’s smart weapons; for example, see the comments in “Revolutionizing Warfare: Israel Implements AI Systems in Military Operations.” Obviously the frontier thing is designed to prevent such weaponization. Since Israel is chugging away with smart weapons in use, my hunch is that the PR jargon handwaving is not working.

Net net: How long before the meetings of the “frontier thing” become contentious? One of my team said, “Never, this group will never meet in person. PR is the goal.” Goodness, this person is skeptical. If I were an Israeli commander using smart weapons to protect my troops, I would issue orders to pull back the smart stuff and use the same outstanding tactics evidenced by a certain nation state’s warriors in central Europe. How popular would that make the commander?

Do I know what the Frontier Model Forum is? Yep, PR.

Stephen E Arnold, July 28, 2023

Young People Are Getting News from Sources I Do Not Find Helpful. Sigh.

July 28, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-47.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“TikTok Is the Most Popular News Source for 12 to 15-Year-Olds, Says Ofcom” presents some interesting data. First, however, let’s answer the question, “What’s an Ofcom?” It is a UK government agency that regulates communication in the UK. From mobile to mail, Ofcom is there. Like most government entities, it does surveys.

Now what did the Ofcom research discover? Here are three items:

“You mean people used to hold this grimy paper thing and actually look at it to get information?” asks one young person. The other says, “Yes, I think it is called a maga-bean or maga-zeen or maga-been, something like that.” Thanks for this wonderful depiction of bafflement, MidJourney.

- In the UK, those 12 to 15 get their news from TikTok.

- The second most popular source of news is the Zuckbook’s Instagram.

- Those aged from 16 to 24 are mired in the past, relying on social media and mobile phones.

Interesting, but I was surprised that a traditional printed newspaper did not offer more information about the impact of this potentially significant trend on newspapers, printed books, and printed magazines.

Assuming the data are correct, as those 12 to 15 age, their behavior patterns may suggest that today’s dark days for traditional media were a bright, sunny afternoon.

Stephen E Arnold, July 28, 2023

Digital Delphis: Predictions More Reliable Than Checking Pigeon Innards, We Think

July 28, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-52.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

One of the many talents of today’s AI is apparently a bit of prophecy. Interconnected examines “Computers that Live Two Seconds in the Future.” Blogger Matt Webb pulls together three examples that, to him, represent an evolution in computing.

The AI computer, a digital Delphic oracle, gets some love from its acolytes. One engineer says, “Any idea why the system is hallucinating?” The other engineer replies, “No clue.” MidJourney shows some artistic love to hard-working, trustworthy computer experts.

His first digital soothsayer is Apple’s Vision Pro headset. This device, billed as a “spatial computing platform,” takes melding the real and virtual worlds to the next level. To make interactions as realistic as possible, the headset predicts what a user will do next by reading eye movements and pupil dilation. The Vision Pro even flashes visuals and sounds so fast as to be subliminal and interprets the eyes’ responses. Ingenious, if a tad unsettling.

The next example addresses a very practical problem: WavePredictor from Next Ocean helps with loading and unloading ships by monitoring wave movements and extrapolating the next few minutes. Very helpful for those wishing to avoid cargo sloshing into the sea.

Finally, Webb cites a development that both excites and frightens programmers: GitHub Copilot. Though some coders worry this and similar systems will put them out of a job, others see it more as a way to augment their own brilliance. Webb paints the experience as a thrilling bit of time travel:

“It feels like flying. I skip forwards across real-time when writing with Copilot. Type two lines manually, receive and get the suggestion in spectral text, tab to accept, start typing again… OR: it feels like reaching into the future and choosing what to bring back. It’s perhaps more like the latter description. Because, when you use Copilot, you never simply accept the code it gives you. You write a line or two, then like the Ghost of Christmas Future, Copilot shows you what might happen next – then you respond to that, changing your present action, or grabbing it and editing it. So maybe a better way of conceptualizing the Copilot interface is that I’m simulating possible futures with my prompt then choosing what to actualize. (Which makes me realize that I’d like an interface to show me many possible futures simultaneously – writing code would feel like flying down branching time tunnels.)”

Gnarly dude! But what does all this mean for the future of computing? Even Webb is not certain. Considering operating systems that can track a user’s focus, geographic location, communication networks, and augmented reality environments, he writes:

“The future computing OS contains of the model of the future and so all apps will be able to anticipate possible futures and pick over them, faster than real-time, and so… …? What happens when this functionality is baked into the operating system for all apps to take as a fundamental building block? I don’t even know. I can’t quite figure it out.”

Us either. Stay tuned, dear readers. Oh, let’s assume the wizards get the digital Delphic oracle outputting the correct future. You know, the future that cares about humanoids.

Cynthia Murrell, July 28, 2023

AI and Malware: An Interesting Speed Dating Opportunity?

July 27, 2023

Note: Dinobaby here: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid. Services are now ejecting my cute little dinosaur gif. (´?_?`) Like my posts related to the Dark Web, the MidJourney art appears to offend someone’s sensibilities in the datasphere. If I were not 78, I might look into these interesting actions. But I am and I don’t really care.

AI and malware. An odd couple? One of those on my research team explained at lunch yesterday that an enterprising bad actor could use one of the code-savvy generative AI systems and the information in the list of resources compiled by 0xsyr0 and available on GitHub here. The idea is that one could grab one or more of the malware development resources and do some experimenting with an AI system. My team member said the AmsiHook looked interesting as well as Freeze. Is my team member correct? Allegedly next week he will provide an update at our weekly meeting. My question is, “Do the recent assertions about smart software cover this variant of speed dating?”

Stephen E Arnold, July 27, 2023

Netflix Has a Job Opening. One Job Opening to Replace Many Humanoids

July 27, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “As Actors Strike for AI Protections, Netflix Lists $900,000 AI Job.” Obviously the headline is about AI, money, and entertainment. Is the job “real”? Like so much of the output of big companies, it is difficult to determine how much is clickbait, how much is surfing on “real” journalists’ thirst for the juicy info, and how much is trolling? Yep, trolling. Netflix drives a story about AI’s coming to Hollywood.

The write up offers Hollywood verbiage and makes an interesting point:

The [Netflix job] listing points to AI’s uses for content creation: “Artificial Intelligence is powering innovation in all areas of the business,” including by helping them to “create great content.” Netflix’s AI product manager posting alludes to a sprawling effort by the business to embrace AI, referring to its “Machine Learning Platform” involving AI specialists “across Netflix.”

The machine learning platform or MLP is an exercise in cost control, profit maximization, and presaging the future. If smart software can generate new versions of old content, whip up acceptable facsimiles, and eliminate insofar as possible the need for non-elite humans, what’s not clear?

The $900,000 may be code for “Smart software can crank out good enough content at lower cost than traditional Hollywood methods.” Even the TikTok and YouTube “stars” face an interesting choice: [a] Figure out how to offload work to smart software or [b] learn to cope with burn out, endless squabbles with gatekeepers about money, and the anxiety of becoming a has-been.

Will humans, even talented ones, be able to cope with the pressure smart software will exert on the production of digital content? Like the junior attorney and cannon fodder for blue chip consulting companies, AI is moving from spitting out high school essays to more impactful outputs.

One example is the integration of smart software into workflows. The jargon about this enabling use of smart software is fluid. The $900,000 job focuses on something that those likely to be affected can understand: A good enough script and facsimile actors and actresses with a mouse click.

But the embedded AI promises to rework the back office processes and the unseen functions of humans just doing their jobs. My view is that there will be $900K per year jobs but far fewer of them than there are regular workers. What is the future for those displaced?

Crafting? Running yard sales? Creating fine art?

Stephen E Arnold, July 27, 2023

Ethics Are in the News — Now a Daily Feature?

July 27, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-46.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

It is déjà vu all over again, or it seems like it. I read “Judge Finds Forensic Scientist Henry Lee Liable for Fabricating Evidence in a Murder Case.” Yep, that is the story. Scientist Lee allegedly has a knack for non-fiction; that is, making up stuff or arranging items in a special way. One of my relatives founded Hartford, Connecticut, in 1635. I am not sure he would have been on board with this make-stuff-up approach to data. (According to our family lore, John Arnold was into beating people with a stick.) Dr. Lee is a big wheel because he worked on the 1995 running-through-airports trial. The cited article includes this interesting sentence:

[Scientist] Lee’s work in several other cases has come under scrutiny…

No one is watching. A noted scientist helps himself to the cookies in the lab’s cookie jar. He is heard mumbling, “Cookies. I love cookies. I am going to eat as many of these suckers as I can because I am alone. And who cares about anyone else in this lab? Not me.” Chomp chomp chomp. Thanks, MidJourney. You depicted an okay scientist but refused to create an image of a great leader whom I identified by proper name. For this I paid money?

Let me mention three ethics incidents which for one reason or another hit my radar:

- MIT accepting cash from every young person’s friend Jeffrey Epstein. He allegedly killed himself. He’s off the table.

- The Harvard ethics professor who made up data. She’s probably doing consulting work now. I don’t know if she will get back into the classroom. If she does it might be in the Harvard Business School. Those students have a hunger for information about ethics.

- The soon-to-be-departed president of Stanford University. He may find a future using ChatGPT or an equivalent to write technical articles and angling for a gig on cable TV.

What do these allegedly true incidents tell us about the moral fiber of some people in positions of influence? I have a few ideas. Now the task is remediation. When John Arnold chopped wood in Hartford, justice involved ostracism, possibly a public shaming, or rough justice played out to the theme from Hang ‘Em High.

Harvard, MIT, and Stanford: Aren’t universities supposed to set an example for impressionable young minds? What are the students learning? Anything goes? Prevaricate? Cut corners? Grub money?

Imagine sweatshirts with the college logo and these words on the front and back of the garment. Winner. Some at Amazon, Apple, Facebook, Google, Microsoft, and OpenAI might wear them to the next off-site. I would wager that one turns up in the Rayburn House Office Building wellness room.

Stephen E Arnold, July 27, 2023

AI Leaders and the Art of Misdirection

July 27, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-51.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Lately, leaders at tech companies seem to have slipped into a sci-fi movie.

“Trust me. AI is really good. I have been working to create a technology which will help the world. I want customers and you, Senator, to trust us. I and other AI executives want to save whales. We want the snail darter to thrive. We want the homeless to have suitable housing. AI will deliver this and more plus power and big bucks to us!” asserts the sincere AI wizard with a PhD and an MBA.

Rein in our algorithmic monster immediately before it takes over the world and destroys us all! But AI Snake Oil asks, “Is Avoiding Extinction from AI Really an Urgent Priority?” Or is it a red herring? Writers Seth Lazar, Jeremy Howard, and Arvind Narayanan consider:

“Start with the focus on risks from AI. This is an ambiguous phrase, but it implies an autonomous rogue agent. What about risks posed by people who negligently, recklessly, or maliciously use AI systems? Whatever harms we are concerned might be possible from a rogue AI will be far more likely at a much earlier stage as a result of a ‘rogue human’ with AI’s assistance. Indeed, focusing on this particular threat might exacerbate the more likely risks. The history of technology to date suggests that the greatest risks come not from technology itself, but from the people who control the technology using it to accumulate power and wealth. The AI industry leaders who have signed this statement are precisely the people best positioned to do just that. And in calling for regulations to address the risks of future rogue AI systems, they have proposed interventions that would further cement their power. We should be wary of Prometheans who want to both profit from bringing the people fire, and be trusted as the firefighters.”

Excellent point. But what, specifically, are the rich and powerful trying to distract us from here? Existing AI systems are already causing harm, and have been for some time. Without mitigation, this problem will only worsen. There are actions that can be taken, but who can focus on that when our very existence is (supposedly) at stake? Probably not our legislators.

Cynthia Murrell, July 27, 2023