Signals for the Future: January 2024

January 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Data points fly more rapidly than arrows in an Akira Kurosawa battle scene. My research team identified several items of interest which the free lunchers collectively identified as mysterious signals for the future. Are my special librarians, computer programmers, and eager beavers prognosticators you can trust to presage the future? I advise some caution. Nevertheless, let me share their divinations with you.

This is an illustration of John Arnold, a founder of Hartford, Connecticut, trying to discern the signals about the future of his direct descendant Stephen E Arnold. I use the same type of device, but I focus on a less ambitious time span. Thanks, MidJourney, good enough.

Enablers in the Spotlight

First, Turkey has figured out that the digital enablers which operate as Internet service providers, hosting services which offer virtual machines and crypto, and developers of assorted obfuscation software are a problem. The odd orange newspaper reported this in “Turkey Tightens Internet Censorship ahead of Elections.” The signal my team identified appears in this passage:

Documents seen by the Financial Times show that Turkey’s Information Technologies and Communications Authority (BTK) told internet service providers a month ago to curtail access to more than a dozen popular virtual private network services.

If Turkey’s actions return the results the government of Turkey finds acceptable, will other countries adopt a similar course of action? My dinobaby memory allowed me to point out that this is old news. China and Iran have played this face card before. One of my team pointed out, “Yes, but this time it is virtual private networks.” I asked one of the burrito eaters to see if M247 has been the subject of any chatter. What’s an M247? Good question. The answer is, “An enabler.”

AI Kills Jobs

Second, one of my hard workers pointed out that Computerworld published an article with a bold assertion. Was it a bit of puffery or was it a signal? The answer was, “A signal.”

“AI to Impact 60% of Jobs in Developed Economies: IMF” points out:

The blog post points out that automation has typically impacted routine tasks. However, this is different with AI, as it can potentially affect skilled jobs. “As a result, advanced economies face greater risks from AI — but also more opportunities to leverage its benefits — compared with emerging market and developing economies,” said the blog post. The older workforce would be more vulnerable to the impact of technology than the younger college-educated workers. “Technological change may affect older workers through the need to learn new skills. Firms may not find it beneficial to invest in teaching new skills to workers with a shorter career horizon; older workers may also be less likely to engage in such training, since the perceived benefit may be limited given the limited remaining years of employment,” said the IMF report.

Life for some recently RIFed and dinobabies will be more difficult. This is a signal? My team says, “Yes, dinobaby.”

Advertising As Cancer

Final signal for this post: One of my team made a big deal out of the information in “We Removed Advertising Cookies, Here’s What Happened.” Most of the write up will thrill the lucky people who are into search engine optimization and related marketing hoo hah. The signal appears in this passage:

When third-party cookies are fully deprecated this year, there will undoubtedly be more struggles for performance marketers. Without traditional pixels or conversion signals, Google (largest ad platform in the world) struggles to find intent of web visitors to purchase.

We listened as our colleague explained: “Google is going to do whatever it can to generate more revenue. The cookie thing, combined with the ChatGPT-type of search, means that Google’s golden goose is getting perilously close to one of those craters with chemical-laced boiling water at Yellowstone.” That’s an interesting signal. Can we hear a goose squawking now?

Make of these signals what you will. My team and I will look and listen for more.

Stephen E Arnold, January 18, 2024

Stretchy Security and Flexible Explanations from SEC and X

January 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Gizmodo presented an interesting write up about an alleged security issue involving the US Securities & Exchange Commission. Is this an important agency? I don’t know. “X Confirms SEC Hack, Says Account Didn’t Have 2FA Turned On” states:

Turns out that the SEC’s X account was hacked, partially because it neglected a very basic rule of online security.

“Well, Pa, that new security fence does not seem too secure to me,” observes the farmer’s wife. Flexible and security with give are not the optimal ways to protect the green. Thanks, MSFT Copilot Bing thing. Four tries and something good enough. Yes!

X.com — now known by some as the former Twitter or the Fail Whale outfit — puts the blame on the US SEC. That’s a familiar tactic in Silicon Valley. The users are at fault. Some people believe Google’s incognito mode is secret, and others assume that Apple iPhones do not have a backdoor. Wow, I believe these companies, don’t you?

The article reports:

[The] hacking episode temporarily threw the web3 community into chaos after the SEC’s compromised account made a post falsely claiming that the SEC had approved the much anticipated Bitcoin ETFs that the crypto world has been obsessed with of late. The claims also briefly sent Bitcoin on a wild ride, as the asset shot up in value temporarily, before crashing back down when it became apparent the news was fake.

My question is, “How stretchy and flexible are security systems available from outfits like Twitter (now X)?” Another question is, “How secure are government agencies?”

The apparent answer is, “Good enough.” That’s the high water mark in today’s world. Excellence? Meh.

Stephen E Arnold, January 18, 2024

Information Voids for Vacuous Intellects

January 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In countries around the world, 2024 is a critical election year, and the problem of online mis- and disinformation is worse than ever. Nature emphasizes the seriousness of the issue as it describes “How Online Misinformation Exploits ‘Information Voids’—and What to Do About It.” Apparently we humans are so bad at considering the source that advising us to do our own research just makes the situation worse. Citing a recent Nature study, the article states:

“According to the ‘illusory truth effect’, people perceive something to be true the more they are exposed to it, regardless of its veracity. This phenomenon pre-dates the digital age and now manifests itself through search engines and social media. In their recent study, Kevin Aslett, a political scientist at the University of Central Florida in Orlando, and his colleagues found that people who used Google Search to evaluate the accuracy of news stories — stories that the authors but not the participants knew to be inaccurate — ended up trusting those stories more. This is because their attempts to search for such news made them more likely to be shown sources that corroborated an inaccurate story.”

Doesn’t Google bear some responsibility for this phenomenon? Apparently the company believes it is already doing enough by deprioritizing unsubstantiated news, posting content warnings, and including its “about this result” tab. But it is all too easy to wander right past those measures into a “data void,” a virtual space full of specious content. The first impulse when confronted with questionable information is to copy the claim and paste it straight into a search bar. But that is the worst approach. We learn:

“When [participants] entered terms used in inaccurate news stories, such as ‘engineered famine’, to get information, they were more likely to find sources uncritically reporting an engineered famine. The results also held when participants used search terms to describe other unsubstantiated claims about SARS-CoV-2: for example, that it rarely spreads between asymptomatic people, or that it surges among people even after they are vaccinated. Clearly, copying terms from inaccurate news stories into a search engine reinforces misinformation, making it a poor method for verifying accuracy.”

But what to do instead? The article notes Google steadfastly refuses to moderate content, as social media platforms do, preferring to rely on its (opaque) automated methods. Aslett and company suggest inserting human judgement into the process could help, but apparently that is too old fashioned for Google. Could educating people on better research methods help? Sure, if they would only take the time to apply them. We are left with this conclusion: instead of researching claims from untrustworthy sources, one should just ignore them. But that brings us full circle: one must be willing and able to discern trustworthy from untrustworthy sources. Is that too much to ask?

Cynthia Murrell, January 18, 2024

Two Surveys. One Message. Too Bad

January 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “Generative Artificial Intelligence Will Lead to Job Cuts This Year, CEOs Say.” The data come from a consulting/accounting outfit’s survey of executives at the oh-so-exclusive World Economic Forum meeting in the Piscataway, New Jersey, of Switzerland. The company running the survey is PwC (once an acronym for Price Waterhouse Coopers. The moniker has embraced a number of interesting investigations. For details, navigate to this link.)

Survey says, “Economic gain is the meaning of life.” Thanks, MidJourney, good enough.

The big finding from my point of view is:

A quarter of global chief executives expect the deployment of generative artificial intelligence to lead to headcount reductions of at least 5 per cent this year

Good, reassuring number from big gun world leaders.

However, the International Monetary Fund also did a survey. The percentage of jobs affected ranges from 26 percent in low-income countries to 40 percent for emerging markets and 60 percent for advanced economies.

What can one make of these numbers; specifically, the five percent to the 60 percent? My team’s thoughts are:

- The gap is interesting, but the CEOs appear to be either downplaying, displaying PR output, or working to avoid getting caught in a sticky wicket.

- The methodology and the sample of each survey are different, but both are skewed. The IMF taps analysts, bankers, and politicians. PwC goes to those who are prospects for PwC professional services.

- Each survey suggests that government efforts to manage smart software are likely to be futile. On one hand, CEOs will say, “No big deal.” Some will point to the PwC survey and say, “Here’s proof.” The financial types will hold up the IMF results and say, “We need to move fast or we risk losing out on the efficiency payback.”

What does Bill Gates think about smart software? In “Microsoft Co-Founder Bill Gates on AI’s Impact on Jobs: It’s Great for White-Collar Workers, Coders” the genius for our time says:

I have found it’s a real productivity increase. Likewise, for coders, you’re seeing 40%, 50% productivity improvements which means you can get programs [done] sooner. You can make them higher quality and make them better. So mostly what we’ll see is that the productivity of white-collar [workers] will go up

Happy days for sure! What’s next? Smart software will move forward. Potential payouts are too juicy. The World Economic Forum and the IMF share one key core tenet: Money. (Tip: Be young.)

Stephen E Arnold, January 17, 2024

A Swiss Email Provider Delivers Some Sharp Cheese about MSFT Outlook

January 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.

What company does my team love more than Google? Give up. It is Microsoft. Whether it is the invasive Outlook plug in for Zoom on the Mac or the incredible fly ins, pop ups, and whining about Edge, what’s not to like about this outstanding, customer-centric firm? Nothing. That’s right. Nothing Microsoft does can be considered duplicitous, monopolistic, avaricious, or improper. The company lives and breathes the ethics of Thomas Dewey, the 19th century American philosopher. This is my opinion, of course. Some may disagree.

A perky Swiss farmer delivers an Outlook info dump. Will this delivery enable the growth of surveillance methodologies? Thanks, MSFT Copilot Bing thing. Thou did not protest when I asked for this picture.

I read and was troubled that one of my favorite US firms received some critical analysis about the MSFT Outlook email program. The sharp comments appeared in a blog post titled “Outlook Is Microsoft’s New Data Collection Service.” Proton offers an encrypted email service and a VPN from Switzerland. (Did you know the Swiss have farmers who wash their cows and stack their firewood neatly? I am from central Illinois, and our farmers ignore their cows and pile firewood. As long as a cow can make it into the slaughter house, the cow is good to go. As long as the firewood burns, winner.)

The write up reports or asserts, depending on one’s point of view:

Everyone talks about the privacy-washing campaigns of Google and Apple as they mine your online data to generate advertising revenue. But now it looks like Outlook is no longer simply an email service; it’s a data collection mechanism for Microsoft’s 772 external partners and an ad delivery system for Microsoft itself.

Surveillance is the key to making money from advertising or bulk data sales to commercial and possibly some other organizations. Proton enumerates how these sucked up data may be used:

- Store and/or access information on the user’s device

- Develop and improve products

- Personalize ads and content

- Measure ads and content

- Derive audience insights

- Obtain precise geolocation data

- Identify users through device scanning

The write up provides this list of information allegedly available to Microsoft:

- Name and contact data

- Passwords

- Demographic data

- Payment data

- Subscription and licensing data

- Search queries

- Device and usage data

- Error reports and performance data

- Voice data

- Text, inking, and typing data

- Images

- Location data

- Content

- Feedback and ratings

- Traffic data.

My goodness.

I particularly like the geolocation data. With Google trying to turn off the geofence functions, Microsoft definitely may be an option for some customers to test. Good, bad, or indifferent, millions of people use Microsoft Outlook. Imagine the contact lists, the entity names, and the other information extractable from messages, attachments, draft folders, and the deleted content. As an Illinois farmer might say, “Winner!”

For more information about Microsoft’s alleged data practices, please, refer to the Proton article. I became uncomfortable when I read the section about how MSFT steals my email password. Imagine. Theft of a password — Is it true? My favorite giant American software company would not do that to me, a loyal customer, would it?

The write up is a bit of content marketing rah rah for Proton. I am not convinced, but I think I will have my team do some poking around on the Proton Web site. But Microsoft? No, the company would not take this action would it?

Stephen E Arnold, January 17, 2024

AI Inventors Barred from Patents. For Now

January 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.

For anyone wondering whether an AI system can be officially recognized as a patent inventor, the answer in two countries is no. Or at least not yet. We learn from The Fashion Law, “UK Supreme Court Says AI Cannot Be Patent Inventor.” Inventor Stephen Thaler pursued two patents on behalf of DABUS, his AI system. After the UK’s Intellectual Property Office, High Court, and the Court of Appeal all rejected the applications, the intrepid algorithm advocate appealed to the highest court in that land. The article reveals:

“In the December 20 decision, which was authored by Judge David Kitchin, the Supreme Court confirmed that as a matter of law, under the Patents Act, an inventor must be a natural person, and that DABUS does not meet this requirement. Against that background, the court determined that Thaler could not apply for and/or obtain a patent on behalf of DABUS.”

The court also specified the patent applications now stand as “withdrawn.” Thaler also tried his luck in the US legal system but met with a similar result. So is it the end of the line for DABUS’s inventor ambitions? Not necessarily:

“In the court’s determination, Judge Kitchin stated that Thaler’s appeal is ‘not concerned with the broader question whether technical advances generated by machines acting autonomously and powered by AI should be patentable, nor is it concerned with the question whether the meaning of the term ‘inventor’ ought to be expanded … to include machines powered by AI ….’”

So the legislature may yet allow AIs into the patent application queues. Will being a “natural person” soon become unnecessary to apply for a patent? If so, will patent offices increase their reliance on algorithms to handle the increased caseload? Then machines would grant patents to machines. Would natural people even be necessary anymore? Once a techno feudalist with truckloads of cash and flocks of legal eagles pulls up to a hearing, rules can become — how shall I say it? — malleable.

Cynthia Murrell, January 17, 2024

Google Gems for 1 16 24: Ho Ho Ho

January 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

There is no zirconium dioxide in this gem display. The Google is becoming more aggressive about YouTube users who refuse to pay money to watch the videos on the site. Does Google have a problem after conditioning its users around the globe to use the service, provide comments, roll over for data collection, and enjoy the ever increasing number of commercial messages? Of course not, Google is a much-loved company, and its users are eager to comply. If you want some insight into Google’s “pay up or suffer” approach to reframing YouTube, navigate to “YouTube’s Ad Blocker War Leads to Major Slowdowns and Surge in Scam Ads.” Yikes, scam ads. (I thought Google had those under control a decade ago. Oh, well.)

So many high-value gems and so little time to marvel at their elegance and beauty. Thanks, MSFT Copilot Bing thing. Good enough although I appear to be a much younger version of my dinobaby self.

Another notable allegedly accurate assertion about the Google’s business methods appears in “Google Accused of Stealing Patented AI Technology in $1.67 Billion Case.” Patent litigation is boring for some, but the good news is that it provides big money to some attorneys — win or lose. What’s interesting is that short cuts and duplicity appear in numerous Google gems. Is this a signal or a coincidence?

Other gems my team found interesting and want to share with you include:

- Google and the lovable Bing have been called out for displaying “deep fake porn” in their search results. If you want to know more about this issue, navigate to Neowin.net.

- In order to shore up its revenues, Alphabet is innovating the way Monaco has: Money-related gaming. How many young people will discover the thrill of winning big and take a step toward what could be a life long involvement in counseling and weekly meetings? Techcrunch provides a bit more information, but not too much.

- Are there any downsides to firing Googlers, allegedly the world’s brightest and most productive wizards wearing sneakers and gray T shirts? Not too many, but some people may be annoyed with what Google describes in baloney speak as deprecation. The idea is that features are killed off. Adapt. PCMag.com explains with post-Ziff élan. One example of changes might be the fiddling with Google Maps and Waze.

- The estimable Sun newspaper provides some functions of the Android mobiles’ hidden tricks. Surprise.

- Google allegedly is struggling to dot its “i’s” and cross its “t’s.” A blogger reports that Google “forgot” to renew a domain used in its GSuite documentation. (How can one overlook the numerous reminders to renew? It’s easy I assume.)

The final gem in this edition is one that may or may not be true. A tweet reports that Amazon is now spending more on R&D than Google. Cost cutting?

Stephen E Arnold, January 16, 2024

eBay: Still Innovating and Serving Customers with Great Ideas

January 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I noted “eBay to Pay $3 Million after Couple Became the Target of Harassment, Stalking.” If true, the “real” news report is quite interesting. The CBS professionals report:

“eBay engaged in absolutely horrific, criminal conduct. The company’s employees and contractors involved in this campaign put the victims through pure hell, in a petrifying campaign aimed at silencing their reporting and protecting the eBay brand,” Levy [a US attorney] said. “We left no stone unturned in our mission to hold accountable every individual who turned the victims’ world upside-down through a never-ending nightmare of menacing and criminal acts.”

MSFT Copilot could not render Munsters and one of their progeny opening a box. But the image is “good enough,” which is the modern way to define excellence. Well done, MSFT.

In what could have been a skit in the now-defunct “The Munsters”, allegedly some eBay professionals packed up “live spiders, cockroaches, a funeral wreath and a bloody pig mask.” The box was shipped to a couple of people who posted about the outstanding online flea market eBay on social media. A letter, coffee, or Zoom were not sufficient for the exceptional eBay executives. Why Zoom when one can bundle up some cockroaches and put them in a box? Go with the insects, right?

I noted this statement in the “real” news story:

seven people who worked for eBay’s Safety and Security unit, including two former cops and a former nanny, all pleaded guilty to stalking or cyberstalking charges.

Those posts were powerful indeed. I wonder if eBay considered hiring the people to whom the Munster fodder was sent. Individuals with excellent writing skills and the agility to evoke strong emotions are in demand in some companies.

A civil trial is scheduled for March 2025. The story has legs, maybe eight of them just like the allegedly alive spiders in the eBay gift box. Outstanding management decision making appears to characterize the eBay organization.

Stephen E Arnold, January 16, 2024

Guidelines. What about AI and Warfighting? Oh, Well, Hmmmm.

January 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It seems November 2023’s AI Safety Summit, hosted by the UK, was a productive gathering. At the very least, attendees drew up some best practices and brought them to agencies in their home countries. TechRepublic describes the “New AI Security Guidelines Published by NCSC, CISA, & More International Agencies.” Writer Owen Hughes summarizes:

“The Guidelines for Secure AI System Development set out recommendations to ensure that AI models – whether built from scratch or based on existing models or APIs from other companies – ‘function as intended, are available when needed and work without revealing sensitive data to unauthorized parties.’ Key to this is the ‘secure by default’ approach advocated by the NCSC, CISA, the National Institute of Standards and Technology and various other international cybersecurity agencies in existing frameworks. Principles of these frameworks include:

* Taking ownership of security outcomes for customers.

* Embracing radical transparency and accountability.

* Building organizational structure and leadership so that ‘secure by design’ is a top business priority.

A combined 21 agencies and ministries from a total of 18 countries have confirmed they will endorse and co-seal the new guidelines, according to the NCSC. … Lindy Cameron, chief executive officer of the NCSC, said in a press release: ‘We know that AI is developing at a phenomenal pace and there is a need for concerted international action, across governments and industry, to keep up. These guidelines mark a significant step in shaping a truly global, common understanding of the cyber risks and mitigation strategies around AI to ensure that security is not a postscript to development but a core requirement throughout.’”

Nice idea, but we noted “OpenAI’s Policy No Longer Explicitly Bans the Use of Its Technology for Military and Warfare.” The article reports that OpenAI:

updated the page on January 10 "to be clearer and provide more service-specific guidance," as the changelog states. It still prohibits the use of its large language models (LLMs) for anything that can cause harm, and it warns people against using its services to "develop or use weapons." However, the company has removed language pertaining to "military and warfare." While we’ve yet to see its real-life implications, this change in wording comes just as military agencies around the world are showing an interest in using AI.

We are told cybersecurity experts and analysts welcome the guidelines. But will the companies vending and developing AI products willingly embrace principles like “radical transparency and accountability”? Will regulators be able to force them to do so? We have our doubts. Nevertheless, this is a good first step. If only it had been taken at the beginning of the race.

Cynthia Murrell, January 16, 2024

Amazon: A Secret of Success Revealed

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “Jeff Bezos Reportedly Told His Team to Attack Small Publishers Like a Cheetah Would Pursue a Sickly Gazelle in Amazon’s Early Days — 3 Ruthless Strategies He’s Used to Build His Empire.” The inspirational story makes clear why so many companies, managers, and financial managers find the Bezos Bulldozer a slick vehicle. Who needs a better role model for the Information Superhighway?

Although this machine-generated cheetah is chubby, the big predator looks quite content after consuming a herd of sickly gazelles. No wonder so many admire the beast. Can the chubby creature catch up to the robotic wizards at OpenAI-type firms? Thanks, MSFT Copilot Bing thing. It was a struggle to get this fat beast but good enough.

The write up is not so much news as a summing up of what I think of as Bezos brainwaves. For example, the write up describes the creator of the Bezos Bulldozer as either “sadistic” or a “godfather.” Another facet of Mr. Bezos’ approach to business is an aggressive price strategy. The third tool in the bulldozer’s toolbox is creating an “adversarial” environment. That sounds delightful: “Constant friction.”

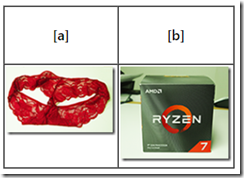

But I think there are other techniques in play. For example, we ordered a $600 CPU. Amazon or one of its “trusted partners” shipped red panties in an AMD Ryzen box. [a] The CPU and [b] its official box. Fashionable, right?

This image appeared in my April 2022 Beyond Search. Amazon customer support insisted that I received a CPU, not panties in an AMD box. The customer support process made it crystal clear that I was trying to cheat them. Yeah, nice accusation and a big laugh when I included the anecdote in one of my online fraud lectures at a cyber crime conference.

More recently, I received a smashed package with a plastic bag displaying this message: “We care.” When I posted a review of the shoddy packaging and the impossibility of contacting Amazon, I received several email messages asking me to go to the Amazon site and report the problem. Oh, the merchant in question is named Meta Bosem:

Amazon asks me to answer this question before getting a resolution to this predatory action. Amazon pleads, “Did this solve my problem?” No, I will survive being the victim of what seems to be a way to down a sickly gazelle. (I am just old, not sickly.)

The somewhat poorly assembled article cited above includes one interesting statement which either a robot or an underpaid humanoid presented as a factoid about Amazon:

Malcolm Gladwell’s research has led him to believe that innovative entrepreneurs are often disagreeable. Businesses and society may have a lot to gain from individuals who “change up the status quo and introduce an element of friction,” he says. A disagreeable personality — which Gladwell defines as someone who follows through even in the face of social approval — has some merits, according to his theory.

Yep, the benefits of Amazon. Let me identify the ones I experienced with the panties and the smashed product in the “We care” wrapper:

- Quality control and quality assurance. Hmmm. Similar to aircraft manufacturers whose planes feature self removing doors at 14,000 feet.

- Customer service. I love the question before the problem is addressed which asks, “Did this solve your problem?” (The answer is, “No.”)

- Reliable vendors. I wonder if the Meta Bosum folks would like my pair of large red female undergarments for one of their computers?

- Business integrity. What?

But what does one expect from a techno feudal outfit which presents products named by smart software? For details of this recent flub, navigate to “Amazon Product Name Is an OpenAI Error Message.” This article states:

We’re accustomed to the uncanny random brand names used by factories to sell directly to the consumer. But now the listings themselves are being generated by AI, a fact revealed by furniture maker FOPEAS, which now offers its delightfully modern yet affordable I’m sorry but I cannot fulfill this request it goes against OpenAI use policy. My purpose is to provide helpful and respectful information to users in brown.

Isn’t Amazon a delightful organization? Sickly gazelles, be cautious when you hear the rumble of the Bezos Bulldozer. It does not move fast and break things. The company has weaponized its pursuit of revenue. Neither publishers, dinobabies, nor humanoids can be anything other than prey if the cheetah assertion is accurate. And the government regulatory authorities in the US? Great job, folks.

Stephen E Arnold, January 15, 2024