India: AI, We Go This Way, Then We Go That Way

April 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In early March 2024, India said it would require all AI-related projects still in development to receive governmental approval before they were released to the public. India’s Ministry of Electronics and Information Technology stated it wanted to notify the public of AI technology’s fallacies and its unreliability. The intent was to label all AI technology with a “consent popup” that informed users of potential errors and defects. The ministry also wanted to mark potentially harmful AI content, such as deepfakes, with a label or unique identifier.

The Register explains that it didn’t take long for the South Asian country to rescind the plan: “India Quickly Unwinds Requirement For Government Approval Of AIs.” The ministry issued an update that removed the requirement for government approval but added more obligations to label potentially harmful content:

“Among the new requirements for Indian AI operations are labelling deepfakes, preventing bias in models, and informing users of models’ limitations. AI shops are also to avoid production and sharing of illegal content, and must inform users of consequences that could flow from using AI to create illegal material.”

Minister of State for Entrepreneurship, Skill Development, Electronics, and Technology Rajeev Chandrasekhar provided context for the government’s initial plan for approval. He explained it was intended only for big technology companies. Smaller companies and startups wouldn’t have needed the approval. Chandrasekhar is recognized for his support of boosting India’s burgeoning technology industry.

Whitney Grace, April 3, 2024

Google AI Has a New Competitive Angle: AI Is a Bit of Problem for Everyone Except Us, Of Course

April 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google has not recovered from the MSFT Davos PR coup. The online advertising company with a wonderful approach to management promptly did a road show in Paris which displayed incorrect data. Next the company declared a Code Red emergency (whatever that means in an ad outfit). Then the Googley folk reorganized by laterally arabesque-ing Dr. Jeff Dean somewhere and putting smart software in the hands of the DeepMind survivors. Okay, now we are into Phase 2 of the quantumly supreme company’s push into smart software.

An unknown person in Hyde Park at Speaker’s Corner is explaining to the enthralled passers by that “AI is like cryptocurrency.” Is there a face in the crowd that looks like the powerhouse behind FTX? Good enough, MSFT Copilot.

A good example of this PR tactic appears in “Google DeepMind Co-Founder Voices Concerns Over AI Hype: ‘We’re Talking About All Sorts Of Things That Are Just Not Real’.” Some additional color similar to that of sour grapes appears in “Google’s DeepMind CEO Says the Massive Funds Flowing into AI Bring with It Loads of Hype and a Fair Share of Grifting.”

The main idea in these write ups is that the Top Dog at DeepMind and possible candidate to take over the online ad outfit is not talking about ruining the life of a Go player or folding proteins. Nope. The new message, as I understand it, is that AI is just not that great. Here’s an example of the new PR push:

The fervor amongst investors for AI, Hassabis told the Financial Times, reminded him of “other hyped-up areas” like crypto. “Some of that has now spilled over into AI, which I think is a bit unfortunate,” Hassabis told the outlet. “And it clouds the science and the research, which is phenomenal.”

Yes, crypto. Digital currency is associated with stellar professionals like Sam Bankman-Fried and those engaged in illegal activities. (I will be talking about some of those illegal activities at the US National Cyber Crime Conference in a few weeks.)

So what’s the PR angle? Here’s my take on the message from the CEO in waiting:

- The message allows Google and its numerous supporters to say, “We think AI is like crypto but maybe worse.”

- Google can suggest, “Our AI is not so good, but that’s because we are working overtime to avoid the crypto-curse which is inherent in outfits engaged in shoving AI down your throat.”

- Googlers keep a cool head (gardons la tête froide), unlike the possibly criminal outfits cheerleading for the wonders of artificial intelligence.

Will the approach work? In my opinion, yes, it will add a joke to the Sundar and Prabhakar Comedy Act. No, I don’t think it will alter the scurrying in the world of entrepreneurs, investment firms, and “real” Silicon Valley journalists, poohbahs, and pundits.

Stephen E Arnold, April 2, 2024

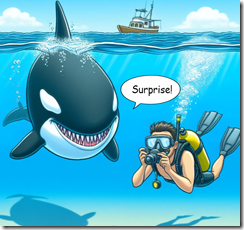

Social Media: Do You See the Hungry Shark?

April 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

After years of social media’s diffusion, many people have mostly ignored how flows of user-generated content work like a body shop’s sandblaster. Now that societal structures are revealing cracks in the drywall and damp basements, I have noticed an uptick in chatter about Facebook- and TikTok-type services. A recent example of Big Thinkers’ wrestling with what is a quite publicly visible behavior of mobile phone fiddling is the write up in Nature “The Great Rewiring: Is Social Media Really Behind an Epidemic of Teenage Mental Illness?”

Thanks, MSFT Copilot. How is your security initiative coming along? Ah, good enough.

The article raises an interesting question: Are social media and mobile phones the cause of what many of my friends and colleagues see as a very visible disintegration of social conventions? The fabric of civil behavior seems to be fraying and maybe coming apart. I am not sure the local news in the Midwest region where I live reports the shootings that seem to occur with some regularity.

The write up (possibly written by a person who uses social media and demonstrates polished swiping techniques) wrestles with the possibility that the unholy marriage of social media and mobile devices may not be the “problem.” The notion that other factors come into play is an example of an established source of information working hard to take a balanced, rational approach to what is the standard of behavior.

The write up says:

Two things can be independently true about social media. First, that there is no evidence that using these platforms is rewiring children’s brains or driving an epidemic of mental illness. Second, that considerable reforms to these platforms are required, given how much time young people spend on them.

Then the article wraps up with this statement:

A third truth is that we have a generation in crisis and in desperate need of the best of what science and evidence-based solutions can offer. Unfortunately, our time is being spent telling stories that are unsupported by research and that do little to support young people who need, and deserve, more.

Let me offer several observations:

- The corrosive effect of digital information flows is simply not on the radar of those who “think about” social media. Consequently, the inherent function of online information is overlooked, and therefore, the rational statements are fluffy.

- The only way to constrain digital information and the impact of its flows is to pull the plug. That will not happen because the drug cartel-like business models produce too much money.

- The notion that “research” will light the path forward is interesting. I cannot “trust” peer reviewed papers authored by the former president of Stanford University or the research of the former Top Dog at Harvard University’s “ethics” department. Now I am supposed to believe that “research” will provide answers. Not so fast, pal.

Net net: The failure to understand a basic truth about how online works means that fixes are not now possible. Sound gloomy? You are getting my message. Time to adapt and remain flexible. The impacts are just now being seen as more than a post-Covid or economic downturn issue. Online information is a big fish, and it remains mostly invisible. The good news is that some people have recognized that the water in the data lake has powerful currents.

Stephen E Arnold, April 2, 2024

Google Mandates YouTube AI Content Be Labeled: Accurately? Hmmmm

April 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The rules for proper use of AI-generated content are still up in the air, but big tech companies are already being pressured to introduce regulations. Neowin reported that “Google Is Requiring YouTube Creators To Post Labels For Realistic AI-Created Content” on videos. This is a smart idea in the age of misinformation, especially when technology can realistically create images and sounds.

Google first announced the new requirement for realistic AI content in November 2023. YouTube’s Creator Studio now has a tool for labeling AI content. The new tool is called “Altered content” and asks creators yes-or-no questions. Its simplicity is similar to YouTube’s question about whether a video is intended for children or not. The “Altered content” label applies to content that does the following:

• “Makes a real person appear to say or do something they didn’t say or do

• Alters footage of a real event or place

• Generates a realistic-looking scene that didn’t actually occur”

The article goes on to say:

“The blog post states that YouTube creators don’t have to label content made by generative AI tools that do not look realistic. One example was “someone riding a unicorn through a fantastical world.” The same applies to the use of AI tools that simply make color or lighting changes to videos, along with effects like background blur and beauty video filters.”

Google says it will have enforcement measures if creators consistently don’t label their realistic AI videos, but the consequences are not specified. YouTube will also reserve the right to place labels on videos. There will also be a reporting system viewers can use to notify YouTube of non-labeled videos. It’s not surprising that Google’s algorithms can’t tell realistic AI videos from real ones. Perhaps the algorithms are outsmarting their creators.

Whitney Grace, April 2, 2024

AI and Job Wage Friction

April 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read again “The Jobs Being Replaced by AI – An Analysis of 5M Freelancing Jobs,” published in February 2024 by Bloomberg (the outfit interested in fiddled firmware on motherboards). The main idea in the report is that AI boosted a number of freelance jobs. What are the jobs where AI has not (as yet) added friction to the money making process? Here’s the list of jobs NOT impeded by smart software:

Accounting

Backend development

Graphics design

Market research

Sales

Video editing and production

Web design

Web development

Other sources suggest that “Accounting” may be targeted by an AI-powered efficiency expert. I want to watch how this profession navigates the smart software in what is often a repetitive series of eye glazing steps.

Thanks, MSFT Copilot. How are you doing with your reorganization? Running smoothly? Yeah. Smoothly.

Now to the meat of the report: What professions or jobs were the MOST affected by AI? From the cited write up, these are:

Customer service (the exciting, long suffering discipline of chatbots)

Social media marketing

Translation

Writing

The write up includes another telling chunk of data. AI has apparently had an impact on the amount of money some customers were willing to pay freelancers or gig workers. The jobs finding greater billing friction are:

Backend development

Market research

Sales

Translation

Video editing and production

Web development

Writing

The article contains quite a bit of related information. Please, consult the original for a number of almost unreadable graphics and tabular data. I do want to offer several observations:

- One consequence of AI, if the data in this report are close enough for horseshoes, is that smart software drives down what customers will pay for a wide range of human centric services. You don’t lose your job; you just get a taste of Victorian sweat shop management thinking.

- Once smart software is perceived as reasonably capable of good enough translation, it is embraced, and demand and pay for human translators fall. My view is that translation services are likely to be a harbinger of how AI will affect other jobs. AI does not have to be great; it just has to be perceived as okay. Then. Bang. Hasta la vista human translators except for certain specialized functions.

- Data like the information in the Bloomberg article provide a handy road map for AI developers. The jobs least affected by AI become targets for entrepreneurs who find that low-hanging fruit like translation has been picked. (Accountants, I surmise, should not relax too much.)

Net net: The wage suppression angle and the incremental adoption of AI followed by quick adoption are important ideas to consider when analyzing the economic ripples of AI.

Stephen E Arnold, April 1, 2024

Open Source Software: Fool Me Once, Fool Me Twice, Fool Me Once Again

April 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Open source is shoved in my face each and every day. I nod and say, “Sure” or “Sounds on point”. But in the back of my mind, I ask myself, “Am I the only one who sees open source as a way to demonstrate certain skills, a Hail, Mary, in a dicey job market, or a bit of MBA fancy dancing?” I am not alone. Navigate to “Software Vendors Dump Open Source, Go for Cash Grab.” The write up does a reasonable job of explaining the open source “playbook.”

The write up asserts:

A company will make its program using open source, make millions from it, and then — and only then — switch licenses, leaving their contributors, customers, and partners in the lurch as they try to grab billions.

Yep, billions with a “B”. I think that the goal may be big numbers, but some open source outfits chug along ingesting venture funding and surfing on assorted methods of raising cash and never really get into “B” territory. I don’t want to name names because as a dinobaby, the only thing I dislike more than doctors is a legal eagle. Want proper nouns? Sorry, not in this blog post.

Thanks, MSFT Copilot. Where are you in the open source game?

The write up focuses on Redis, which is a database that strikes me as quite similar to the now-forgotten Pinpoint approach or the clever Inktomi method to speed up certain retrieval functions. Well, Redis, unlike Pinpoint or Inktomi, is into the “B” numbers. Two billion to be semi-exact in this era of specious valuations.

The write up says that Redis changed its license terms. This is nothing new. 23andMe made headlines with some term modifications as the company slowly settled to earth and landed in a genetically rich river bank in Silicon Valley.

The article quotes Redis Big Dogs as saying:

“Beginning today, all future versions of Redis will be released with source-available licenses. Starting with Redis 7.4, Redis will be dual-licensed under the Redis Source Available License (RSALv2) and Server Side Public License (SSPLv1). Consequently, Redis will no longer be distributed under the three-clause Berkeley Software Distribution (BSD).”

I think this means, “Pay up.”

The author of the essay (Steven J. Vaughan-Nichols) identifies three reasons for the bait-and-switch play. I think there is just one — money.

The big question is, “What’s going to happen now?”

The essay does not provide an answer. Let me fill the void:

- Open source will chug along until there is a break out program. Then whoever has the rights to the open source (that is, the one or handful of people who created it) will look for ways to make money. The software is free, but modules to make it useful cost money.

- Open source will rot from within because “open” makes it possible for bad actors to poison widely used libraries. Once a big outfit suffers big losses, it will be hasta la vista open source and “Hello, Microsoft” or whoever the accountants and lawyers running the company believe cares about their software.

- Open source becomes quasi-commercial. Options range from Microsoft charging for GitHub access to an open source repository becoming a membership operation like a digital Mar-A-Lago. The “hosting” service becomes the equivalent of a golf course, and the people who use the facilities pay fees which can vary widely and without any logic whatsoever.

Which of these three predictions will come true? Answer: The one that allows the breakout open source stakeholders to generate the maximum amount of money.

Stephen E Arnold, April 1, 2024

Cow Control or Elsie We Are Watching

April 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Australia already uses remotely controlled drones to herd sheep. Drones are considered more ethical than traditional herding methods because they’re less stressful for sheep.

Now the island continent is using advanced tracking technology to monitor buffalo and cows. Euro News investigates how technology is used in the cattle industry: “Scientists Are Attempting To Track 1000 Cattle And Buffalo From Space Using GPS, AI, And Satellites.”

An estimated 22,000 buffalo freely roam in Arnhem Land, Australia. The emphasis is on “estimated,” because the exact number is unknown. These buffalo are harming Arnhem Land’s environment. One feral buffalo weighing 1,200 kilograms and standing 188 cm tall not only damages the environment by eating a lot of plant life but also destroys cultural rock art, ceremonial sites, and waterways. Feral buffalo and cattle are major threats to Northern Australia’s economy and ecology.

Scientists, cattlemen, and indigenous rangers have teamed up to work on a program that will monitor feral bovines from space. The program is called SpaceCows and will last four years. It is a large-scale remote herd management system powered by AI and satellite. It’s also supported by the Australian government’s Smart Farming Partnership.

The rangers and stockmen trap feral bovines, attach solar-powered GPS tags, and release them. Each tag transmits data to a satellite 650 km away for two years or until the tag falls off. SpaceCows relies on Microsoft Azure’s cloud platform. The satellites and AI create a digital map of the Australian outback that tells the rangers where feral cows live:

“Once the rangers know where the animals tend to live, they can concentrate on conservation efforts – by fencing off important sites or even culling them. ‘There’s very little surveillance that happens in these areas. So, now we’re starting to build those data sets and that understanding of the baseline disease status of animals,’ said Andrew Hoskins, a senior research scientist at CSIRO.

If successful, it could be one of the largest remote herd management systems in the world.”

Hopefully SpaceCows will protect the natural and cultural environment.

Whitney Grace, April 1, 2024