AI Delivers The Best of Both Worlds: Deception and Inaccuracy

May 16, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Wizards from one of Jeffrey Epstein’s probes made headlines about AI deception. Well, if there is one institution familiar with deception, I would submit that the Massachusetts Institute of Technology might be considered for the ranking, maybe in the top five.

The write up is “AI Deception: A Survey of Examples, Risks, and Potential Solutions.” If you want summaries of the write up, you will find them in The Guardian (we beg for dollars British newspaper) and Science Alert. Before I offer my personal observations, I will summarize the “findings” briefly. Smart software can output responses designed to deceive users and other machine processes.

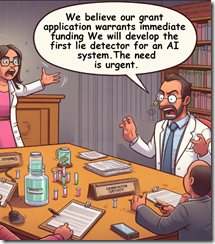

Two researchers at a big name university make an impassioned appeal for a grant. These young, earnest, and passionate wizards know their team can develop a lie detector for an artificial intelligence large language model. The two wizards have confidence in their ability, of course. Thanks, MSFT Copilot. Good enough, like some enterprise software’s security architecture.

If you follow the “next big thing” hoo hah, you know that the garden variety of smart software incorporates technology from outfits like Google. I have described Google as a “slippery fish” because it generates explanations which often don’t make sense to me. Using the large language model generative text systems can yield some surprises. These range from images which seem out of step with historical fact to legal citations that land a lazy lawyer (yes! alliteration) in a load of lard.

The MIT researcher has verified that smart software may emulate the outstanding ethical qualities of an engineer or computer scientist. Logic is everything. Ethics are not anything.

The write up says:

Deception has emerged in a wide variety of AI systems trained to complete a specific task. Deception is especially likely to emerge when an AI system is trained to win games that have a social element …

The domain of the investigation was games. I want to step back and ask, “If LLMs are not understood by their developers, how do we know if deception is hard wired into the systems or that the systems learn deception from their developers with a dusting of examples from the training data?”

The answer to the question is, “At this time, no one knows how these large-scale systems work.” Even the “small” LLMs can prove baffling. We input our own data into Mistral and managed to obtain gibberish. Another go produced a system crash that required a hard reboot of the Mac we were using for the test.

The reality appears to be that probability-based systems do not follow the same rules as a human. With more and more humans struggling with old-school skills like readin’, writin’ and ‘rithmetic, most people won’t notice. For the top 10 percenters, the mistakes are amusing… sometimes.

The write up concludes:

Training models to be more truthful could also create risk. One way a model could become more truthful is by developing more accurate internal representations of the world. This also makes the model a more effective agent, by increasing its ability to successfully implement plans. For example, creating a more truthful model could actually increase its ability to engage in strategic deception by giving it more accurate insights into its opponents’ beliefs and desires. Granted, a maximally truthful system would not deceive, but optimizing for truthfulness could nonetheless increase the capacity for strategic deception. For this reason, it would be valuable to develop techniques for making models more honest (in the sense of causing their outputs to match their internal representations), separately from just making them more truthful. Here, as we discussed earlier, more research is needed in developing reliable techniques for understanding the internal representations of models. In addition, it would be useful to develop tools to control the model’s internal representations, and to control the model’s ability to produce outputs that deviate from its internal representations. As discussed in Zou et al., representation control is one promising strategy. They develop a lie detector and can control whether or not an AI lies. If representation control methods become highly reliable, then this would present a way of robustly combating AI deception.

My hunch is that MIT will be in the hunt for US government grants to develop a lie detector for AI models. It is also possible that Harvard’s medical school will begin work to determine where ethical behavior resides in the human brain so that it can be replicated in one of the megawatt munching data centers some big tech outfits want to deploy.

Four observations:

- AI can generate what appears to be “accurate” information, but that information may be weaponized by a little-understood mechanism

- “Soft” human information like ethical behavior may be difficult to implement in the short term, if ever

- A lie detector for AI will require AI; therefore, how will an opaque and not understood system be designated okay to use? It cannot at this time

- Duplicity may be inherent in the educational institutions. Therefore, those affiliated with the institution may be duplicitous and produce duplicitous content. This assertion raises the question, “Whom can one trust in the AI development chain?”

Net net: AI is hot because it is a candidate for 2024’s next big thing. The “big thing” may be the economic consequences of its being a fairly small and premature thing. Incubator time?

Stephen E Arnold, May 16, 2024

Comments

One Response to “AI Delivers The Best of Both Worlds: Deception and Inaccuracy”

“University dean fears ‘99.9 %’ of his students are using AI to write essays”

https://www.aol.co.uk/news/university-dean-fears-99-9-063000396.html

What does the pattern and lack of intellectual integrity look like to you? If we seriously contemplate it, AI simply can only be the world’s greatest paraphraser, so then choosing a perfect plagiarizer to plagiarize is the pure essence of our rapidly approaching clown world. What is it to review, consider, then opine?

I’d hypothesize AI is the ultimate consciousness gatekeeper of the masses, assuring its expansion retarded. To me, suggesting someone “learn to code” is literally asking them to lean away from academia and their indoctrination, and prepare what’s missing i.e., realize and conceive on your own. #OriginalThought