Sam AI-Man Puts a Price on AI Domination

February 13, 2024

AI start ups may want to amp up their fund raising. Optimism and confidence are often perceived as positive attributes. As a dinobaby, I think in terms of finding a deal at the discount supermarket. Sam AI-Man (actually Sam Altman) thinks big. Forget the $5 million investment in a semi-plausible AI play. “Think a bit bigger” is the catchphrase for OpenAI.

Thinking billions? You silly goose. Think trillions. Thanks, MidJourney. Close enough, close enough.

How does seven followed by 12 zeros strike you? A reasonable figure. Well, Mr. AI-Man estimates that’s the cost of building world AI dominating chips, content, and assorted impedimenta in a quest to win the AI dust ups in assorted global markets. “OpenAI Chief Sam Altman Is Seeking Up to $7 TRILLION (sic) from Investors Including the UAE for Secretive Project to Reshape the Global Semiconductor Industry” reports:

Altman is reportedly looking to solve some of the biggest challenges faced by the rapidly-expanding AI sector — including a shortage of the expensive computer chips needed to power large-language models like OpenAI’s ChatGPT.

And where does one locate entities with this much money? The news report says:

Altman has met with several potential investors, including SoftBank Chairman Masayoshi Son and Sheikh Tahnoun bin Zayed al Nahyan, the UAE’s head of security.

To put the figure in context, the article says:

It would be a staggering and unprecedented sum in the history of venture capital, greater than the combined current market capitalizations of Apple and Microsoft, and more than the annual GDP of Japan or Germany.

Several observations:

- The ante for big time AI has gone up

- The argument has shifted from people and content to chip facilities to fabricate semiconductors

- The fund-me tour is a newsmaker.

Net net: How about those small search-and-retrieval oriented AI companies? Heck, what about outfits like Amazon, Facebook, and Google?

Stephen E Arnold, February 13, 2024

A Reminder: AI Winning Is Skewed to the Big Outfits

February 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have been commenting about the perception some companies have that AI start ups focusing on search will eventually reduce Google’s dominance. I understand the desire to see an underdog or a coalition of underdogs overcome a formidable opponent. Hollywood loves the unknown team which wins the championship. Movie goers root for an unlikely boxing unknown to win the famous champion’s belt. These wins do occur in real life. Some Googlers’ favorite sporting event is the NCAA tournament. That made-for-TV series features what are called Cinderella teams. (Will Walt Disney Co. sue if the subtitles for a game employ the word “Cinderella”? Sure, why not?)

I believe that for the next 24 to 36 months, Google will not lose its grip on search, its services, or online advertising. I admit that once one noses into 2028, more disruption will further destabilize Google. But for now, the Google is not going to be derailed unless an exogenous event ruins Googzilla’s habitat.

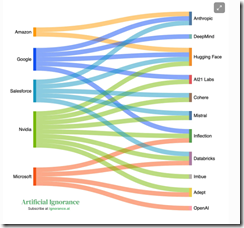

I want to direct attention to the essay “AI’s Massive Cash Needs Are Big Tech’s Chance to Own the Future.” The write up contains useful information about selected players in the artificial intelligence Monopoly game. I want to focus on one “worm” chart included in the essay:

Several things struck me:

- The major players are familiar; that is, Amazon, Google, Microsoft, Nvidia, and Salesforce. Notably absent are IBM, Meta, Chinese firms, Western European companies other than Mistral, and smaller outfits funded by venture capitalists relying on “open source AI solutions.”

- The five major companies in the chart are betting money on different roulette wheel numbers. VCs use the same logic by investing in a portfolio of opportunities and then pray to the MBA gods that one of these puppies pays off.

- The cross investments ensure that information leaks from the different color “worms” into the hills controlled by the big outfits. I am not using the collusion word or the intelligence word. I am just mentioning that information has a tendency to leak.

- Plumbing and associated infrastructure costs suggest that start ups may buy cloud services from the big outfits. Log files can be fascinating sources of information to the service providers’ engineers too.

My point is that smaller outfits are unlikely to be able to dislodge the big firms on the right side of the “worm” graph. The big outfits can, however, easily invest in, acquire, or learn from the smaller outfits listed on the left side of the graph.

Does a clever AI-infused search start up have a chance to become a big time player? Sure, but I think it is more likely that once a smaller firm demonstrates some progress in a niche like Web search, a big outfit with cash will invest, duplicate, or acquire the feisty newcomer.

That’s why I am not counting on the Google falling over dead in the next three years. I know my viewpoint is not one shared by some Web search outfits. That’s okay. Dinobabies often have different points of view.

Stephen E Arnold, February 8, 2024

AI, AI, Ai-Yi-Ai: Too Much Already

February 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I want to say that smart software and systems are annoying me, not a lot, just a little.

AI algorithms are advancing society from science fiction into everyday life. AI algorithms are indexes and math. But the algorithms are still processes simulating mental functions such as reasoning. We’ve come to think, unfortunately, that AI systems like ChatGPT are sentient and capable of rational thought.

Mita Williams wrote the post “I Will Dropkick You If You Refer To An LLM As A Librarian” on her blog Librarian of Things. In her post, she explains that AI is being given more credit than it deserves and large language models (LLMs) are being compared to libraries. While Williams responds to these assertions as a true academic with citations and explanations, her train of thought is more in line with Mark Twain and Jonathan Swift.

Twain and Swift are two great English-speaking authors and satirists. They made outrageous claims and their essays will make many people giggle or laugh. Williams should rewrite her post like them. Her humor would probably be lost on the majority of readers, though. Here’s the gist of her post: A lot of people are saying AI and their LLM learning tools are like giant repositories of knowledge capable of human emotion, reasoning, and intelligence. Williams argues they’re not and that assumption should be reevaluated.

Furthermore, smart software can be configured to do some things more quickly and accurately than some humans. Williams is right:

“This is why I will not describe products like ChatGPT as Artificial General Intelligence. This is why I will avoid using the word learned when describing the behavior of software, and will substitute that word with associated instead. Your LLM is more like a library catalogue than a library but if you call it a library, I won’t be upset. I recognize that we are experiencing the development of new form of cultural artifact of massive import and influence. But an LLM is not a librarian and I won’t let you call it that.”

I am a somewhat critical librarian. I like books. Smart software … not so much at this time.

Whitney Grace, February 8, 2024

New AI to AI Audio and Video Program

February 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This is Stephen E Arnold. I wanted to let you know that my son Erik and I have created an audio and video program about artificial intelligence, smart software, and machine learning. What makes this show different is our focus. Both of us have worked on government projects in the US and in other countries. Our experience suggested a program tailored for those working in government agencies at the national or federal level, state, county, or local level might be useful. We will try to combine examples of the use of smart software and related technical information. The theme of each program is “smart software for government use cases.”

In the first episode, our topics include a look at the State of Texas’s use of AI to help efficiency, a review of the challenges AI poses, a discussion about Human Resources departments, a technical review of AI content crawlers, and lastly a look ahead in 2024 for smart software.

The format of each show segment is presentation of some facts. Then my son and I discuss our assessment of the information. We don’t always see “eye to eye.” That’s where the name of our program originated. AI to AI.

Our digital assistant is named Ivan Aitoo, pronounced “eye-two.” He is created by an artificial intelligence system. He plays an important part in the program. He introduces each show with a run down of the stories in the program. Also, he concludes each program by telling a joke generated by — what else? — yet another artificial intelligence system. Ivan is delightful, but he has no sense of humor and no audience sensitivity.

You can listen to the audio version of the program at this link on the Apple podcast service. A video version is available on YouTube at this link. The program runs about 20 minutes, and we hope to produce a program every two weeks. (The program is provided as an information service, and it includes neither advertising nor sponsored content.)

If you have comments about the program, you can email them to benkent2020 at yahoo dot com.

Stephen E Arnold, February 6, 2024

Surprising Real Journalism News: The Chilling Claws of AI

February 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I wanted to highlight two interesting items from the world of “real” news and “real” journalism. I am a dinobaby and not a “real” anything. I do, however, think these two unrelated announcements provide some insight into what 2024 will encourage.

The harvesters of information wheat face a new reality. Thanks, MSFT Copilot. Good enough. How’s that email security? Ah, good enough. Okay.

The first item comes from everyone’s favorite, free speech service X.com (affectionately known to my research team as Xhitter). The item appears as a titbit from Max Tani. The message is an allegedly real screenshot of an internal memorandum from a senior executive at the Wall Street Journal. The screenshot purports to make clear that the Murdoch property is allowing some “real” journalists to find their future elsewhere. Perhaps in a fast food joint in Olney, Maryland? The screenshot is difficult for my 79-year-old eyes to read, but I got some help from one of my research team. The X.com payload says:

Today we announced a new structure in Washington [DC] that means a number of our colleagues will be leaving the paper…. The new Washington bureau will focus on politics, policy, defense, law, intelligence and national security.

Okay, people are goners. The Washington, DC bureau will focus on Washington, DC stuff. What was the bureau doing? Oh, perhaps that is why “our colleagues will be leaving the paper.” Cost cutting and focusing are in vogue.

The second item is titled “Q&A: How Thomson Reuters Used GenAI to Enable a Citizen Developer Workforce.” I want to alert you that the Computerworld article is a mere 3,800 words. Let me summarize the gist of the write up: AI is going to replace expensive “real” journalists. My hunch is that some of the lawyers involved in annotating, assembling, and blessing the firm’s legal content are on the list too. To Thomson Reuters’ credit, the company is trying to swizzle some sweetener into what may be a bitter drink for some involved with the “trust” crowd.

Several observations:

- It is about 13 months since Microsoft made AI its next big thing. That means that these two items are early examples of what is going to happen to many knowledge workers

- Some companies just pull the pin; others are trying to find ways to avoid PR problems and lawsuits

- The more significant disruptions will produce a reasonably new type of worker push back.

Net net: Imagine what the next year will bring as AI efficiency digs in, bites tail feathers, and enriches those who sit in the top one percent.

Stephen E Arnold, February 6, 2024

Sales SEO: A New Tool for Hype and Questionable Relevance

February 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Search engine optimization is a relevance eraser. Now SEO has arrived for a human. “Microsoft Copilot Can Now Write the Sales Pitch of a Lifetime” makes clear that hiring is going to become more interesting for both human personnel directors (often called chief people officers) and AI-powered résumé screening systems. And for people who are responsible for procurement, figuring out when a marketing professional is tweaking the truth and hallucinating about a product or service will become a daily part of life… in theory.

Thanks for the carnival barker image, MSFT Copilot Bing thing. Good enough. I love the spelling of “asiractson”. With workers who may not be able to read, so what? Right?

The write up explains:

Microsoft Copilot for Sales uses specific data to bring insights and recommendations into its core apps, like Outlook, Microsoft Teams, and Word. With Copilot for Sales, users will be able to draft sales meeting briefs, summarize content, update CRM records directly from Outlook, view real-time sales insights during Teams calls, and generate content like sales pitches.

The article explains:

… Copilot for Service can pull in data from multiple sources, including public websites, SharePoint, and offline locations, in order to handle customer relations situations. It has similar features, including an email summary tool and content generation.

Why is MSFT expanding these interesting functions? Revenue. Paying extra unlocks these allegedly remarkable features. Prices range from $240 per year to a reasonable $600 per year per user. This is a small price to pay for an employee unable to craft solutions that sell, by golly.

Stephen E Arnold, February 5, 2024

An International AI Panel: Notice Anything Unusual?

February 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

An expert international advisory panel has been formed. The ooomph behind the group is the UK’s prime minister. The Evening Standard newspaper described the panel this way:

The first-of-its-kind scientific report on AI will be used to shape international discussions around the technology.

Australia. Professor Bronwyn Fox, Chief Scientist, The Commonwealth Scientific and Industrial Research Organization (CSIRO)

Brazil. André Carlos Ponce de Leon Ferreira de Carvalho, Professor, Institute of Mathematics and Computer Sciences, University of São Paulo

Canada. Doctor Mona Nemer, Chief Science Advisor of Canada

Canada. Professor Yoshua Bengio, considered one of the “godfathers of AI”.

Chile. Raquel Pezoa Rivera, Academic, Federico Santa María Technical University

China. Doctor Yi Zeng, Professor, Institute of Automation, Chinese Academy of Sciences

EU. Juha Heikkilä, Adviser for Artificial Intelligence, DG Connect

France. Guillaume Avrin, National Coordinator for AI, General Directorate of Enterprises

Germany. Professor Antonio Krüger, CEO, German Research Center for Artificial Intelligence.

India. Professor Balaraman Ravindran, Professor at the Department of Computer Science and Engineering, Indian Institute of Technology, Madras

Indonesia. Professor Hammam Riza, President, KORIKA

Ireland. Doctor Ciarán Seoighe, Deputy Director General, Science Foundation Ireland

Israel. Doctor Ziv Katzir, Head of the National Plan for Artificial Intelligence Infrastructure, Israel Innovation Authority

Italy. Doctor Andrea Monti, Professor of Digital Law, University of Chieti-Pescara.

Japan. Doctor Hiroaki Kitano, CTO, Sony Group Corporation

Kenya. Awaiting nomination

Mexico. Doctor José Ramón López Portillo, Chairman and Co-founder, Q Element

Netherlands. Professor Haroon Sheikh, Senior Research Fellow, Netherlands’ Scientific Council for Government Policy

New Zealand. Doctor Gill Jolly, Chief Science Advisor, Ministry of Business, Innovation and Employment

Nigeria. Doctor Olubunmi Ajala, Technical Adviser to the Honorable Minister of Communications, Innovation and Digital Economy

Philippines. Awaiting nomination

Republic of Korea. Professor Lee Kyoung Mu, Professor, Department of Electrical and Computer Engineering, Seoul National University

Rwanda. Crystal Rugege, Managing Director, National Center for AI and Innovation Policy

Kingdom of Saudi Arabia. Doctor Fahad Albalawi, Senior AI Advisor, Saudi Authority for Data and Artificial Intelligence

Singapore. Denise Wong, Assistant Chief Executive, Data Innovation and Protection Group, Infocomm Media Development Authority (IMDA)

Spain. Nuria Oliver, Vice-President, European Laboratory for Learning and Intelligent Systems (ELLIS)

Switzerland. Doctor Christian Busch, Deputy Head, Innovation, Federal Department of Economic Affairs, Education and Research

Turkey. Ahmet Halit Hatip, Director General of European Union and Foreign Relations, Turkish Ministry of Industry and Technology

UAE. Marwan Alserkal, Senior Research Analyst, Ministry of Cabinet Affairs, Prime Minister’s Office

Ukraine. Oleksii Molchanovskyi, Chair, Expert Committee on the Development of Artificial intelligence in Ukraine

USA. Saif M. Khan, Senior Advisor to the Secretary for Critical and Emerging Technologies, U.S. Department of Commerce

United Kingdom. Dame Angela McLean, Government Chief Scientific Adviser

United Nations. Amandeep Gill, UN Tech Envoy

Give up? My team identified these interesting aspects:

- No Facebook, Google, Microsoft, OpenAI or any other US giant in the AI space

- Academics and political “professionals” dominate the list

- A speed and scale mismatch between AI diffusion and panel report writing.

Net net: More words will be generated for large language models to ingest.

Stephen E Arnold, February 2, 2024

Flailing and Theorizing: The Internet Is Dead. Swipe and Chill

February 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I do not spend much time with 20 somethings, 30 somethings, 40 somethings, 50 somethings, or any other somethings. I watch data flow into my office, sell a few consulting jobs, and chuckle at the downstream consequences of several cross-generation trends my team and I have noticed. What’s a “cross-generation trend”? The phrase means activities and general perceptions which are shared among some youthful college graduates and a harried manager working in a trucking company. There is the mobile phone obsession. The software scheduler which strips time from an individual with faux urgency or machine-generated pings and dings. There is the excitement of sports events, many of which may feature scripting. There is anomie or the sense of being alone in a kayak carried to what may be a financial precipice. You get the idea.

Now the shriek of fear is emanating from online sources known as champions of the digital way. In this short essay, I want to highlight one of these; specifically, “The Era of the AI-Generated Internet Is Already Here: And It’s Time to Talk about AI Model Collapse.” I want to zoom to the conclusion of the “real” news report and focus on the final section of the article, “The Internet Isn’t Completely Doomed.”

Here we go.

First, I want to point out that communication technologies are not “doomed.” In fact, these methods or techniques don’t go away. A good example is the clay decorations in some homes which say, “We love our Frenchie” or an Etsy plaque like this one:

Just a variation of a clay tablet produced in metal for an old-timey look. The communication technologies abundant today are likely to have similar stickiness. Doom, therefore, is Karen rhetoric in my opinion.

Second, the future is a return to the 1980s when for-fee commercial databases were trusted and expensive sources of electronic information. The “doom” write up predicts that content will retreat behind paywalls. I would like to point out that you are reading an essay in a public blog. I put my short writings online in 2008, using the articles as a convenient archive. When I am asked to give a lecture, I check out my blog posts. I find it a way to “refresh” my memory about past online craziness. My hunch is that these free, ad-free electronic essays will persist. Some will be short and often incomprehensible items on Pinboard.in; others will be weird TikTok videos spun into a written item pumped out via a social media channel on the Clear Web or the Dark Web (which seems to persist, doesn’t it?) When an important scientific discovery becomes known, that information becomes findable. Sure, it might be a year after the first announcement, but those ArXiv.org items pop up and are often findable because people love to talk, post, complain, or convert a non-reproducible event into a job at Harvard or Stanford. That’s not going to change.

A collapsed AI robot vibrated itself to pieces. Its model went off the rails and confused zeros with ones and ones with zeros. Thanks, MSFT Copilot Bing thing. How are those security procedures today?

Third, search engine optimization is going to “change.” In order to get hired or become famous, one must call attention to oneself. Conferences, Zoom webinars, free posts on LinkedIn-type services: none of these will go away or… change. The reason is that unless one is making headlines or creating buzz, one becomes irrelevant. I am a dinobaby and I still get crazy emails about a blockchain report I did years ago. (The somewhat strident outfit does business as IGI with the url igi-global.com. When I open an email from this outfit, I can smell the desperation.) Other outfits are similar, very similar, but they hit the Amazon thing for some pricey cologne to convert the scent of overboardism into something palatable. My take on SEO: It’s advertising, promotion, PT Barnum stuff. It is, like clay tablets, in for the long haul.

Finally, what about AI, smart software, machine learning, and the other buzzwords slapped on ho-hum products like a word processor? Meh. These are short cuts for the Cliff’s Notes’ crowd. Intellectual achievement requires more than a subscription to the latest smart software or more imagination than getting Mistral to run on your MacMini. The result of smart software is to widen the gap between people who are genuinely intelligent and knowledge value creators, and those who can use an intellectual automatic teller machine (ATM).

Net net: The Internet is today’s version of online. It evolves, often like gerbils or tribbles which plagued Captain Kirk. The larger impact is the return to a permanent one percent – 99 percent social structure. Believe me, the 99 percent are not going to be happy whether they can post on X.com, read craziness on a Dark Web forum, pay for an online subscription to someone on Substack, or give money to the New York Times. The loss of intellectual horsepower is the consequence of consumerizing online.

This dinobaby was around when online began. My colleagues and I knew that editorial controls, access policies, and copyright were important. Once the ATM-model swept over the online industry, today’s digital world was inevitable. Too bad those who were creating online information were ignored and dismissed as Ivory Tower dwellers. “Doom”? No, just a dawning of what digital information creates. Have fun. I am old and am unwilling to provide a coloring book and crayons for the digital information future and a model collapse. That’s the least of some folks’ worries. I need a nap.

Stephen E Arnold, February 1, 2024

Robots, Hard and Soft, Moving Slowly. Very Slooowly. Not to Worry, Humanoids

February 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

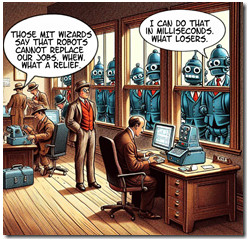

CNN, that bastion of “real” journalism, published a surprising story: “We May Not Lose Our Jobs to Robots So Quickly, MIT Study Finds.” Wait, isn’t MIT the outfit which had a tie up with the interesting Jeffrey Epstein? Oh, well.

The robots have learned that they can do humanoid jobs quickly and easily. But the robots are stupid, right? Yes, they are, but the managers looking for cost reductions and workforce reductions are not. Thanks, MSFT Copilot Bing thing. How is the security of the MSFT email today?

The story presents as actual factual an MIT-linked study which seems to go against the general drift of smart software, smart machines, and smart investors. The story reports:

new research suggests that the economy isn’t ready for machines to put most humans out of work.

The fresh research finds that the impact of AI on the labor market will likely have a much slower adoption than some had previously feared as the AI revolution continues to dominate headlines. This carries hopeful implications for policymakers currently looking at ways to offset the worst of the labor market impacts linked to the recent rise of AI.

The story adds:

One key finding, for example, is that only about 23% of the wages paid to humans right now for jobs that could potentially be done by AI tools would be cost-effective for employers to replace with machines right now. While this could change over time, the overall findings suggest that job disruption from AI will likely unfurl at a gradual pace.

The intriguing facet of the report and the research itself is that it seems to suggest that the present approach to smart stuff is working just fine, thank you very much. Why speed up or slow down? The “unfurling” is a slow process. No need for these professionals to panic as major firms push forward with a range of hard and soft robots:

- Consulting firms. Has MIT checked out Deloitte’s posture toward smart software and soft robots?

- Law firms. Has MIT talked to any of the Top 20 law firms about their use of smart software?

- Academic researchers. Has MIT talked to any of the graduate students or undergraduates about their use of smart software or soft robots to generate bibliographies, summaries of possibly non-reproducible studies, or books mentioning their professor?

- Policeware vendors. Companies like Babel Street and Recorded Future are putting pedal to the metal with regard to smart software.

My hunch is that MIT is not paying attention to the happy robots at Tesla or the bad actors using software robots to poke through the cyber defenses of numerous outfits.

Does CNN ask questions? Not that I noticed. Plus, MIT appears to want good news PR. I would too if I were known to be pals with certain interesting individuals.

Stephen E Arnold, February 1, 2024

A Glimpse of Institutional AI: Patients Sue Over AI Denied Claims

January 31, 2024

This essay is the work of a dumb dinobaby. No smart software required.

AI algorithms are revolutionizing business practices, including whether insurance companies deny or accept medical coverage. Insurance companies are relying more on AI algorithms to fast track paperwork. They are, however, over relying on AI to make decisions, and the AI is making huge mistakes by denying coverage. Patients are fed up with their medical treatments being denied and CBS Moneywatch reports that a slew of “Lawsuits Take Aim At Use Of AI Tool By Health Insurance Companies To Process Claims.”

The defendants in the AI insurance lawsuits are Humana and United Healthcare. These companies are using the AI model nHPredict to process insurance claims. On December 12, 2023, a class action lawsuit was filed against Humana, claiming nHPredict denied medically necessary care for elderly and disabled patients under Medicare Advantage. Another lawsuit was filed in November 2023 against United Healthcare, which also used nHPredict to process claims. That lawsuit claims the insurance company purposely used the AI knowing it was faulty and that about 90% of its denials were overridden.

The AI model is supposed to work:

“NHPredicts is a computer program created by NaviHealth, a subsidiary of United Healthcare, that develops personalized care recommendations for ill or injured patients, based on ‘real world experience, data and analytics,’ according to its website, which notes that the tool ‘is not used to deny care or to make coverage determinations.’

But recent litigation is challenging that last claim, alleging that the ‘nH Predict AI Model determines Medicare Advantage patients’ coverage criteria in post-acute care settings with rigid and unrealistic predictions for recovery.’ Both United Healthcare and Humana are being accused of instituting policies to ensure that coverage determinations are made based on output from nHPredicts’ algorithmic decision-making.”

Insurance companies deny coverage whenever they can. Now a patient can talk to an AI customer support system about an AI system’s denying a claim. Will the caller be faced with a voice answering call loop on steroids? Answer: Oh, yeah. We haven’t seen or experienced what’s coming down the cost-cutting information highway. The blip on the horizon is interesting, isn’t it?

Whitney Grace, January 31, 2024