A Webinar Adds Value to Data

January 11, 2014

Connotate is offering a webinar called “Big Data: The Portal To New Value Propositions.” The webinar summary explains what most big data people already know: with all the new data available, there are new ways to cash in. The summary continues by noting that people generate data every day with everything they do on the Internet and that companies have been collecting this information for years. Did you also know that, in addition to a physical identity, people also have a virtual identity? This is very basic knowledge. Finally, the summary gets to the point: business value propositions built on big data will supply new opportunities but also carry possible risks.

After the summary, there is a list of topics that will be covered in the webinar:

· “Review the process of creating big data-based value propositions and illustrate many examples in science and health, finance, publishing and advertising.

· Explore which companies are successful, which are not and why.

· Review the mechanics: How to use unstructured content and combine it with structured data.

· Focus on data extraction, the “curation” process, the organization of value-based schemas and analytics.

· Analyze the ultimate delivery of value propositions that rest on the unique combination of unique data sets responding to a specific need.”

Big data has been around long enough that there should be less focus on how the data is gathered and more on the importance of value propositions. Value propositions show what results the data can yield and how those results can be used. Data value debates have been going on for a while, especially on LinkedIn. If Connotate and Outsell know how to turn data into dollars, they should advertise that instead of repeating big data specs.

Whitney Grace, January 11, 2014

Sponsored by ArnoldIT.com, developer of Augmentext

Big Data 2013 Wrapup

January 10, 2014

2013 was the year that big data became big business, says Alex Handy in his SD Times article, “Big Data 2013: Another Big Year.” Handy explains that big data made the transformation when enterprises deployed Hadoop in production environments and NoSQL adopters spread data across many servers. Together, these trends meant that massive amounts of data were distributed across clusters and that enterprise systems were deployed to manage the information.

Hadoop 2.0 was the key player in big data getting bigger, because the software went from a specialized tool that needed experienced users to a more general-purpose platform, with map/reduce retained for batch processing. Hadoop was not the only factor in big data’s growth. Many other projects and products helped make big data a burgeoning market. The development most comparable to Hadoop 2.0 was the rise of NoSQL databases:
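The map/reduce batch processing mentioned above splits a job into a map step that emits key/value pairs, a shuffle that groups those pairs by key, and a reduce step that combines each group. The sketch below is a single-process illustration of that pattern in plain Python (a word count), not Hadoop’s Java API; the function names are hypothetical, and a real cluster would run the map and reduce steps in parallel across many machines.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(documents: Iterable[str]) -> Iterator[tuple[str, int]]:
    # Map: emit (word, 1) for every word in every document.
    for doc in documents:
        for word in doc.lower().split():
            yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle: group emitted values by key, as the framework does between phases.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    # Reduce: combine each key's values into a single result.
    return {key: sum(values) for key, values in groups.items()}


def word_count(documents: Iterable[str]) -> dict[str, int]:
    return reduce_phase(shuffle(map_phase(documents)))


if __name__ == "__main__":
    print(word_count(["big data big business", "data everywhere"]))
    # {'big': 2, 'data': 2, 'business': 1, 'everywhere': 1}
```

Because each map call looks at one document and each reduce call looks at one key, both steps can be spread across machines, which is what lets the pattern scale to batch jobs over very large data sets.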

“NoSQL databases continued to gain traction thanks to a never-ending need to spread data around the globe in a highly available and consistent form. To that end, a number of new transactional databases, some calling themselves “New SQLs,” cropped up this past year. NuoDB, FoundationDB and VoltDB all brought databases to market in 2013 that offered transactional support based on the ideas and techniques shown in the Google Spanner paper.”

The established NoSQL names (DataStax, Cassandra, MongoDB, and Couchbase), however, squabbled as each asserted its dominance in the market. The new year looks to be another big data year, and the article suggests that Basho, Sqrrl, and Hortonworks are the names to watch. At this point in the big data game, everything bears watching.

Whitney Grace, January 10, 2014

Sponsored by ArnoldIT.com, developer of Augmentext

The IBM Watson PR Blitz Continues

January 9, 2014

Content marketing is alive and well at IBM. I read two Watson-related stories this morning. Let’s look at each and see if there are hints about how IBM will generate $10 billion in revenue from the game-show-winning Watson information system.

The New York Times

“IBM Is Betting Watson Can Earn Its Keep” appears on page B9 of the hard copy, which arrives in Harrod’s Creek most days. A digital instance of this Quentin Hardy write-up may be online at http://nyti.ms/1krYgfx. If not, contact a Google Penguin for guidance.

The write up contains a quote to note:

Virginia M. Rometty, CEO of IBM: Watson does more than find the needle in the haystack. It understands the haystack. It understands concepts.

The best haystack quote I have heard came from Matt Koll, student of Gerard Salton and founder of Personal Library Software. Dr. Koll pointed out that haystacks involve needles, multiple haystacks and multiple needles, and other nuances that make clear how difficult locating information can be.

The quote attributed to Ms. Rometty also nods to Autonomy’s marketing. Since 1996, Autonomy has emphasized that one of the core functions of the Bayesian-Shannon-Laplace-Volterra method was identifying concepts automatically. Are IBM and archrival Hewlett Packard using the same 18-year-old marketing lingo? If so, I wonder how that will play out against the real-life struggles HP seems to be experiencing in the information retrieval sector.

There are several other interesting points in the content marketing-style article:

- IBM is “giving Watson $1 billion and a nice office.” I wonder if the nuance of “giving” is better than “investing.”

- $100 million will be allocated “for venture investments related to Watson’s so-called data analysis and recommendation technology.” One hopes that IBM’s future acquisitions deliver value. IBM already owns iPhrase, a “smart search system,” some of Dr. Ramanathan Guha’s semantic technology, Vivisimo, and the text processing component of SPSS called Clementine. That’s a lot of in-hand technology, but IBM wants to buy more. What are the costs of integration?

- IBM has to figure out how to “cohere” with other IBM initiatives. Is Cognos now part of Watson? What happens to the IBM Almaden research flowing from WebFountain and similar initiatives? What is the role of Lucene, which I heard is the plumbing of Watson?

The IBM write-up will get wide pickup, but the article strikes me as raising some serious questions about the Watson initiative. There may be 750 eager developers wanting to write applications for Watson. I am waiting for an Internet-accessible demonstration against a live data set.

The Wall Street Journal, Round 2, January 9, 2014

The day after running “IBM Struggles to turn Watson into Big Business”, the real news outfit ran a second story called “IBM Set to Expand Watson’s Reach.” I saw this on page B2 of the hard copy that arrived in Harrod’s Creek this morning. Progress. There was no WSJ delivery on January 6 and January 7 because it was too cold. You may be able to locate a digital version of the story at http://on.wsj.com/1ikQa3X. (Same Penguin advice applies if the article is not available online.)

This January 9, 2014, story includes a quote to note:

Michael Rhodin, IBM senior vice president, Watson unit: We are now moving into more of a rapid expansion phase. We’ve made incredible progress. There is lots more to do. We would not be pursuing it if we did not think it had big commercial potential.

We then learn that by 2018, Watson will generate $1 billion per year. Autonomy was founded in 1996, and at the time of its purchase by Hewlett Packard, the company reported revenue in the $800 million range. IBM wants to generate more revenue from search in less time than Autonomy did. No other enterprise search and content processing vendor has been able to match Autonomy’s performance. In fact, Autonomy’s rapid growth after 2004 was due in part to acquisitions. Autonomy paid about $500 million for Verity; IBM’s $100 million for investments may not buy much in a search sector that has consolidated. Oracle paid about $1 billion for Endeca, which generated about $130 million a year in 2011.
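The Endeca figures invite a quick back-of-the-envelope check. Assuming, purely for illustration, that acquisition targets were priced at roughly the revenue multiple implied by the Endeca deal, $100 million would buy a company with only about $13 million in annual revenue:

$$
\frac{\$1{,}000\text{M}}{\$130\text{M}} \approx 7.7\times
\qquad\Longrightarrow\qquad
\frac{\$100\text{M}}{7.7} \approx \$13\text{M per year}
$$

Real acquisition multiples vary widely from deal to deal, so this is a rough sketch under that single assumption, not a valuation.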

Net Net

Watson has better PR than most of the search and content processing companies I track. How many people at the Watson unit pay attention to SRCH2, Open Search Server, Sphinx Search, SearchDaimon, the Dassault Cloud 360 system, and the dozens and dozens of other companies pitching information retrieval solutions?

I would wager that the goals for Watson are unachievable in the time frame outlined. Blasting past Autonomy’s revenue benchmark will require a large company to show agility, flexibility, price wizardry, and a product that delivers verifiable value.

As the second Wall Street Journal article points out, “IBM is looking to revive growth after six straight quarters of revenue declines.”

IBM may be better at content marketing than at hitting the revenue targets for Watson, at a time when Hewlett Packard is trying to generate massive revenues from the Autonomy technology. Will Google sit on its hands as IBM and HP scoop up the enterprise deals? What about Amazon? Its search system is a so-so offering, but it can offer some sugar treats to organizations looking to kick tires with reduced risk.

Many organizations are downloading open source search and data management systems. These are good enough when smart software is still a work in progress. With 2,000 people working on Watson, the trajectory of this solution will be interesting to follow.

Stephen E Arnold, January 9, 2014

If You Seek Variety Do Not Turn to Big Data

January 3, 2014

I am sure that you have heard that Big Data is capable of working with all data types. According to InformationWeek’s article “Variety’s The Spice Of Life-And Bane Of Big Data,” the newest trend has problems, and the biggest is handling the variety of data. The article argues that it is impractical to funnel ad hoc data sources into a central schema. The better alternative, it says, is to use optional schemas, an approach supported by RDF standards and other semantic web technologies. How can trading one schema for another make a difference?

The article states:

“When data is accessible using the simple RDF triples model, you can mix data from different sources and use the SPARQL query language to find connections and patterns with no need to predefine schema. Leveraging RDF doesn’t require data migration, but can take advantage of middleware tools that dynamically make relational databases, spreadsheets, and other data sources available as triples. Schema metadata can be dynamically pulled from data. It is stored and queried the same way as data.”

RDF acts like a sieve. It lets unnecessary data run through the small holes, leaving the relevant material behind for quicker access. It sounds like a perfect alternative, except that it comes with its own set of challenges. The good news is that these problems can be resolved with a little training and practice.
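To make the triples idea in the quoted passage concrete, here is a minimal sketch in plain Python. It is not a real RDF store or SPARQL engine: facts from two hypothetical sources are written as (subject, predicate, object) tuples, merged with a set union and no predefined schema, and queried with a simple pattern match in which `None` acts as a wildcard, loosely like a variable in a SPARQL triple pattern. All identifiers and values are invented for illustration.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Hypothetical facts from a spreadsheet-like source.
crm_triples: set[Triple] = {
    ("cust:42", "name", "Acme Corp"),
    ("cust:42", "region", "Kentucky"),
}

# Hypothetical facts from a relational-style source. No shared schema was
# defined in advance; the two sources simply reuse the same subject IDs.
orders_triples: set[Triple] = {
    ("order:7", "placedBy", "cust:42"),
    ("order:7", "total", "1200"),
}


def match(store: set[Triple], s: Optional[str] = None,
          p: Optional[str] = None, o: Optional[str] = None) -> list[Triple]:
    """Return the triples matching a pattern; None acts as a wildcard."""
    return sorted(
        t for t in store
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


# Mixing sources is just a set union; there is no migration step.
store = crm_triples | orders_triples

# Find a connection across sources: which customer placed each order?
for order, _, cust in match(store, p="placedBy"):
    for _, _, name in match(store, s=cust, p="name"):
        print(order, name)  # order:7 Acme Corp

# Schema metadata pulled from the data itself: the predicates in use.
predicates = sorted({p for _, p, _ in store})
print(predicates)  # ['name', 'placedBy', 'region', 'total']
```

The point of the design is that the “schema” (the list of predicates) is just more data, queried the same way as everything else; a production system would use an RDF library and SPARQL rather than hand-rolled matching.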

Whitney Grace, January 3, 2014

Sponsored by ArnoldIT.com, developer of Augmentext

Attivio is Synonymous with Partnership

December 21, 2013

If you need a business intelligence solution, Attivio apparently is the one-stop shop. Attivio has formed two strategic partnerships. The Providence Journal announced that “Actian And Attivio OEM Agreement Accelerates Big Data Business Value By Integrating Big Content.” Actian, a big data analytics company, has an OEM agreement with Attivio to use its Active Intelligence Engine (AIE) to bolster Actian’s data analytics solution. AIE helps Actian reach its goal of delivering analytics on all types of data, from social media to surveys to research documents.

The article states:

“‘Big Content has become a vital piece in the Big Data puzzle,’ said David Schubmehl, Research Director, IDC. ‘The majority of enterprise information created today is human-generated, but legacy systems have traditionally required processing structured data and unstructured content separately. The addition of Attivio AIE to Actian ParAccel provides an extremely cost-effective option that delivers impressive performance and value.’”

Panorama announced on its official Web site that “Panorama And Attivio Announce BI Technology Alliance Partnership.” The AIE will be combined with Panorama’s software to improve the business value of content and big data. Panorama’s BI solution will use the AIE to streamline enterprise decision-making by eliminating the need to switch between applications to access data. This will speed up business productivity and improve data access.

The article explains:

“ ‘One of the goals of collaborative BI is to connect data, insights and people within the organization,’ said Sid Probstein, CTO at Attivio. ‘The partnership with Panorama achieves this because it gives customers seamless and intuitive discovery of information from sources as varied as corporate BI to semi-structured data and unstructured content.’”

Attivio provides a tool for improving big data projects by making more of an organization’s data usable. The company’s strategy of serving as a foundation on which other solutions are built is similar to what Fulcrum Technologies did in 1985.
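Both announcements turn on processing structured data and unstructured content together rather than separately. The sketch below is a generic illustration of that idea in plain Python, not Attivio’s AIE API: a tiny inverted index over hypothetical text notes is joined with hypothetical structured account records, so a single query can filter on a numeric field and a keyword at once. All names and figures are invented.

```python
from collections import defaultdict

# Hypothetical structured records (e.g., rows from a BI database).
accounts = {
    1: {"name": "Acme", "region": "East", "revenue": 5_000_000},
    2: {"name": "Globex", "region": "West", "revenue": 800_000},
    3: {"name": "Initech", "region": "East", "revenue": 2_500_000},
}

# Hypothetical unstructured content (e.g., support notes) linked by account ID.
notes = [
    (1, "Customer reports shipping delays in the East warehouse"),
    (2, "Renewal discussion went well"),
    (3, "Complaint about shipping damage and slow refunds"),
]


def build_index(docs):
    """Inverted index: map each lowercase word to the account IDs whose notes contain it."""
    index = defaultdict(set)
    for account_id, text in docs:
        for word in text.lower().split():
            index[word].add(account_id)
    return index


def unified_query(index, keyword, min_revenue):
    """Names of accounts whose notes mention keyword AND whose revenue meets the threshold."""
    hits = index.get(keyword.lower(), set())
    return sorted(
        accounts[a]["name"] for a in hits
        if accounts[a]["revenue"] >= min_revenue
    )


index = build_index(notes)
print(unified_query(index, "shipping", 1_000_000))  # ['Acme', 'Initech']
```

Keeping the text index and the structured records keyed by the same ID is what removes the need to switch between a search tool and a BI tool to answer one question.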

Whitney Grace, December 21, 2013

Sponsored by ArnoldIT.com, developer of Augmentext

Doctor Autonomy I Presume

December 20, 2013

HP is putting Autonomy in a pith helmet and sending it to the jungle, according to ZDNet’s article “HP’s Earth Insights Deploys Big Data Tech Against Eco Threats.” HP has transformed big data into an ecology tool. Vertica, HP’s big data technology, is helping ecologists detect risks to endangered species. The new endeavor is called Earth Insights, and it is a joint venture between HP and Conservation International, a non-governmental group. Earth Insights speeds up the analysis of environmental data, delivering results in real time and with better accuracy.

HP is also using this opportunity to show off its product line and its applications in different fields. The company is demonstrating that big data is not reserved for the retail and business sectors; science can take advantage of its potential as well. The project uses Vertica, Hadoop, and Autonomy IDOL to analyze 1.4 million photos, climate measurements, and three terabytes of biodiversity information from cameras and climate sensors.

“The results of the analytics will be shared with protected area managers, as well as with governments, academic institutions, non-governmental bodies and the private sector, so that they can act to protect threatened wildlife and develop policies to address threats to habitats. According to the company, the project is already yielding new information indicating declines in a significant number of the species monitored.”

Technology is often seen as a force opposed to nature, but in this case technology is being used to preserve nature. It reminds me of how a Brazilian tribe used Google and an Android phone to save their home in the Amazon.

Whitney Grace, December 20, 2013

Sponsored by ArnoldIT.com, developer of Augmentext

Big Data Experimentation: a Business Model for the 21st Century

December 17, 2013

The InfoWorld article “Big Data Demands Nonstop Experimentation” targets old-school executives living in what the article sums up as a conservative dream world, where experimenting with Big Data is a radical waste of time. The article suggests shifting the fundamental approach to Big Data from the “causal density” model to a continual and ideally randomized experimental model.

The article explains, quoting blogger Michael Walker:

“Under this approach, business model fine-tuning becomes a never-ending series of practical experiments. Data scientists evolve into an operational function, running their experiments 24-7 with the full support and encouragement of senior business executives… Any shift toward real-world experimentation requires the active support of the senior stakeholders — such as the chief marketing officer — whose business operations will be impacted. As Walker states: ‘…Business and public policy leaders need to support and adequately fund experimentation by the data science and business analytics teams.’”

This may be just the information cash-strapped, revenue-hungry firms need. No one said Big Data was easy or quick, but what is clear is that a commitment to experimentation is needed: lots of experimentation. The article cites Google and Facebook as 21st-century success stories that already embrace this model and were, in fact, founded on the idea of experimentation.
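The article does not spell out the mechanics, but the core of a randomized experiment is random assignment followed by a comparison of outcomes. The sketch below is a generic A/B-test illustration in Python, not Walker’s or InfoWorld’s method: users are split at random into control and treatment groups, and a standard two-proportion z-statistic compares conversion rates. The conversion counts are invented for the example, and the 1.96 threshold assumes a two-sided test at roughly the 5 percent level with large samples.

```python
import math
import random


def assign_groups(user_ids, seed=2013):
    """Randomly assign each user to 'control' or 'treatment' (reproducible via seed)."""
    rng = random.Random(seed)
    groups = {"control": [], "treatment": []}
    for uid in user_ids:
        groups[rng.choice(("control", "treatment"))].append(uid)
    return groups


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """z-statistic for the difference between conversion rate b and conversion rate a."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


def is_significant(z, threshold=1.96):
    """Two-sided test at roughly the 5 percent level, assuming large samples."""
    return abs(z) > threshold


groups = assign_groups(range(10))
print(groups)

# Invented counts: 200 of 5,000 control users converted vs 260 of 5,000 treated users.
z = two_proportion_z(200, 5000, 260, 5000)
print(round(z, 2), is_significant(z))  # 2.86 True
```

In a “nonstop” setup, this assign-measure-compare loop would simply be rerun for each new variant a team wants to try.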

Chelsea Kerwin, December 17, 2013

Sponsored by ArnoldIT.com, developer of Augmentext

Big Data: Is Grilling Better with Math?

December 16, 2013

Is there a connection between Big Data and grilling? Is there a connection between Big Data and your business?

I read “Big Data Beyond Business Intelligence: Rise Of The MBAs.” The write-up is chock full of statements about large data sets and the numerical recipes required to tame them. But none of the article’s surprising comments matches one point I noticed.

Here’s the quote:

Software automation can’t improve without reorganizing a company around its data. Consider it organizational self-reflection, learning from every interaction humans have with work-related machines. Collaborative, social software is at the heart of this interaction. Software must find innovative ways to interface data with employees, visualization being the most promising form of data democratization.

I will be the first to admit that the economic revolution has left some businesses reeling, particularly in rural Kentucky. Other parts of the country are, according to some pundits, bursting with health.

Is a business reorganization better with Big Data?

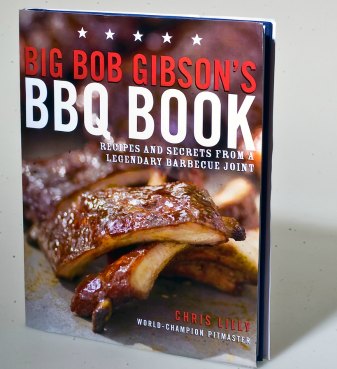

Will Big Data deliver better grilled meat? Buy a copy of this book by Lilly and Gibson and see if there are ways to reorganize the business of grilling around self-reflection. Can Big Data deliver a sure-fire winning steak? Will Big Data deliver for other businesses?

But for the business that is working hard to make sales, meet payroll, and serve its customers, Big Data as a concept is one facet of senior managers’ work. Information is important to a business. The idea that more information will contribute to better decisions is one of the buttons that marketers enjoy mashing. Software is useful, but it is by itself not a panacea. Software can sink a business as well as float it.

However, figuring out the nuances buried within Big Data, a term that is invoked, not defined, is difficult. The rise of the data scientist is a reminder that having volumes of data to review requires skills many do not possess. Data integrity is one issue. Another is the selection of mathematical tools to use. Then there is the challenge of configuring the procedures to deliver outputs that make sense.

Big Data Still Faces a Few Hitches

December 15, 2013

Writer Mellisa Tolentino assesses the state of big data in, “Big Data Economy: The Promises + Hindrances of BI, Advanced Analytics” at SiliconAngle. Pointing to the field’s expected $50 billion in revenue by 2017, she says the phenomenon has given rise to a “Data Economy.” The article notes that enterprises in a number of industries have been employing big data tech to increase their productivity and efficiency.

However, there are still some wrinkles to be ironed out. One is the cumbersome process of pulling together data models and curating data sources, a real time suck for IT departments. This problem, though, may find resolution in nascent services that will take care of all that for a fee. The biggest issue may be the debate about open source solutions.

The article explains:

“Proponents of the open-source approach argue that it will be able to take advantage of community innovations across all aspects of product development, that it’s easier to get customers especially if they offer fully-functioning software for free. Plus, they say it is easier to get established partners that could easily open up market opportunities.

Unfortunately, the fully open-source approach has some major drawbacks. For example, the open-source community is often not united, making progress slower. This affects the long-term future of the product and revenue; plus, businesses that offer only services are harder to scale. As for the open core approach, though it has the potential to create value differentiation faster than the open source community, experts say it can easily lose its value when the open-source community catches up in terms of functionality.”

Tolentino adds that vendors can find themselves in a reputational bind when considering open source solutions: If they eschew the open core approach, they may be seen as refusing to support the open source community. However, if they do embrace open source solutions, some may accuse them of taking advantage of that community. Striking the balance while doing what works best for one’s company is the challenge.

Cynthia Murrell, December 15, 2013

Sponsored by ArnoldIT.com, developer of Augmentext

BA Insight Makes Deloitte Fast 500 List

December 14, 2013

It looks like BA Insight is growing and growing. Yahoo Finance shares “BA Insight Ranked Number 393 Fastest Growing Company in North America on Deloitte’s 2013 Technology Fast 500 (TM).” The list ranks the 500 fastest-growing tech, media, telecom, life sciences, and clean tech companies on this continent. The evaluation is based on percentage fiscal year revenue growth from 2008 to 2012. (See the article for conditions contenders must meet.)

We learn:

“BA Insight’s Chief Executive Officer, Massood Zarrabian credits the emergence of Big Data and the market demand for search-driven applications for the company’s revenue growth. He said, ‘We are honored to be ranked among the fastest growing technology companies in North America. BA Insight has been focused on developing the BAI Knowledge Integration Platform that enables organization to implement powerful search-driven applications rapidly, at a fraction of the cost, time, and risk of traditional alternatives. Additionally, we have partnered with visionary organizations to transform their enterprise search engines into knowledge engines giving them full access to organizational knowledge assets.'”

The press release notes that BA Insight has grown 193 percent over five years. Interesting: while other firms are struggling, BA Insight has nearly tripled its revenue. But from what to what? The write-up does not say.
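Growth stated as a percentage is easy to misread. The short sketch below is a generic calculation, not anything from the press release: it converts percentage growth into an end-to-start revenue multiple. Because the release gives no base figure, the $10 million starting point used here is purely hypothetical.

```python
def growth_multiple(percent_growth: float) -> float:
    """Return end revenue divided by start revenue for a given percentage growth."""
    return 1 + percent_growth / 100


def end_revenue(start: float, percent_growth: float) -> float:
    """Project end revenue from a (hypothetical) starting figure."""
    return start * growth_multiple(percent_growth)


# 100 percent growth is a doubling; 193 percent growth is close to tripling.
print(f"{growth_multiple(100):.2f}x")  # 2.00x
print(f"{growth_multiple(193):.2f}x")  # 2.93x

# Hypothetical base: a company starting at $10 million.
print(f"${end_revenue(10_000_000, 193):,.0f}")
```

The same 193 percent figure means very different things for a $1 million company and a $100 million one, which is why the missing base matters.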

BA Insight has set out to redefine enterprise search to make it more comprehensive and easier to use. Founded in 2004, the company is headquartered in Boston and keeps its technology center in New York City. Some readers may be interested to know that the company is currently hiring for the Boston office.

Cynthia Murrell, December 14, 2013

Sponsored by ArnoldIT.com, developer of Augmentext