In Tech We Mistrust

March 11, 2024

While tech firms were dumping billions into AI, they may have overlooked one key component: consumer faith. The Hill reports, “Trust in AI Companies Drops to 35 Percent in New Study.” We note that the 35% figure is for the US only; globally, trust fell a mere eight percentage points. Still, that is the wrong direction for anyone with a stake in the market. So what is happening? Writer Filip Timotija tells us:

“Multiple factors contributed to the decline in trust toward the companies polled in the data, according to Justin Westcott, Edelman’s chair of global technology. ‘Key among these are fears related to privacy invasion, the potential for AI to devalue human contributions, and apprehensions about unregulated technological leaps outpacing ethical considerations,’ Westcott said, adding ‘the data points to a perceived lack of transparency and accountability in how AI companies operate and engage with societal impacts.’ Technology as a whole is losing its lead in trust among sectors, Edelman said, highlighting the key findings from the study. ‘Eight years ago, technology was the leading industry in trust in 90 percent of the countries we study,’ researchers wrote, referring to the 28 countries. ‘Now it is most trusted only in half.’”

So it is not just AI we mistrust; it is tech companies as a whole. That tracks. The study polled 32,000 people across 28 countries. Timotija reminds us regulators in the US and abroad are scrambling to catch up. Will fear of consumer rejection do what neither lagging lawmakers nor common decency can? The write-up notes:

“Westcott argued the findings should be a ‘wake up call’ for AI companies to ‘build back credibility through ethical innovation, genuine community engagement and partnerships that place people and their concerns at the heart of AI developments.’ As for the impacts on the future for the industry as a whole, ‘societal acceptance of the technology is now at a crossroads,’ he said, adding that trust in AI and the companies producing it should be seen ‘not just as a challenge, but an opportunity.’”

Yes, an opportunity. All AI companies must do is emphasize ethics, transparency, and societal benefits over profits. Surely big tech firms will get right on that.

Cynthia Murrell, March 11, 2024

ACM: Good Defense or a Business Play?

March 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Professional publishers want to use the trappings of peer review, standards, tradition, and quasi academic hoo-hah to add value to their products; others want a quasi-monopoly. Think public legal filings and stuff in a high school chemistry book. The customers of professional publishers are typically not the folks at the pizza joint on River Road in Prospect, Kentucky. The business of professional publishing is an interesting one, but in the wild and crazy world of collapsing next-gen publishing, professional publishing is often ignored. A publisher conference aimed at professional publishers is quite different from the Jazz Age South by Southwest shindig.

Yep, free. Thanks, MSFT Copilot. How’s that security today?

But professional publishers have been in the news. Examples include the dust up about academics making up data. The big time president of the much-honored Stanford University took intellectual short cuts and quit late last year. Then there was some nasty issue about data and bias at the esteemed Harvard University. Plus, a number of bookish types have guess-timated that a hefty percentage of research studies contain made-up data. Hey, you gotta publish to get tenure or get a grant, right?

But there is an intruder in the basement of the professional publishing club. The intruder positions itself in the space between the making up of some data and the professional publishing process. That intruder is ArXiv, an open-access repository of electronic preprints and postprints (known as e-prints) approved for posting after moderation, according to Wikipedia. (Wikipedia is the cancer which killed the old-school encyclopedias.) Plus, there are services which offer access to professional content without paying for the right to host the information. I won’t name these services because I have no desire to have legal eagles circle about my semi-functioning head.

Why do I present this grade-school level history? I read “CACM Is Now Open Access.” Let’s let the Association for Computing Machinery explain its action:

For almost 65 years, the contents of CACM have been exclusively accessible to ACM members and individuals affiliated with institutions that subscribe to either CACM or the ACM Digital Library. In 2020, ACM announced its intention to transition to a fully Open Access publisher within a roughly five-year timeframe (January 2026) under a financially sustainable model. The transition is going well: By the end of 2023, approximately 40% of the ~26,000 articles ACM publishes annually were being published Open Access utilizing the ACM Open model. As ACM has progressed toward this goal, it has increasingly opened large parts of the ACM Digital Library, including more than 100,000 articles published between 1951–2000. It is ACM’s plan to open its entire archive of over 600,000 articles when the transition to full Open Access is complete.

The decision was not an easy one. Money issues rarely are.

I want to step back and look at this interesting change from a different point of view:

- Getting a degree today is less of a must have than when I was a wee dinobaby. My parents told me I was going to college. Period. I learned how much effort was required to get my hands on academic journals. I was a master of knowing that Carnegie-Mellon had new but limited bound volumes of certain professional publications. I knew what journals were at the University of Pittsburgh. I used these resources when the Duquesne Library was overrun with the faithful. Now “researchers” can zip online and whip up astonishing results. Google-type researchers prefer the phrase “quantumly supreme results.” This social change is one factor influencing the ACM.

- Stabilizing revenue streams means pulling off a magic trick. Sexy conferences and special events complement professional association membership fees. Reducing costs means knocking off the now very, very expensive printing, storing, and shipping of physical journals. The ACM seems to have figured out how to keep the lights on and the computing machine types spending.

- ACM members can use ACM content the way they use a pirate library or the feel-good ArXiv outfit. The move helps neutralize discontent among the membership, and it is good PR.

These points raise a question; to wit: In today’s world, how relevant will a professional association and its professional publications be going forward? The ACM states:

By opening CACM to the world, ACM hopes to increase engagement with the broader computer science community and encourage non-members to discover its rich resources and the benefits of joining the largest professional computer science organization. This move will also benefit CACM authors by expanding their readership to a larger and more diverse audience. Of course, the community’s continued support of ACM through membership and the ACM Open model is essential to keeping ACM and CACM strong, so it is critical that current members continue their membership and authors encourage their institutions to join the ACM Open model to keep this effort sustainable.

Yep, surviving in a world of faux expertise.

Stephen E Arnold, March 8, 2024

Engineering Trust: Will Weaponized Data Patch the Social Fabric?

March 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Trust is a popular word. Google wants me to trust the company. Yeah, I will jump right on that. Politicians want me to trust their attestations that citizens’ interests are important. I worked in Washington, DC, for too long. Nope, I just have too much first-hand exposure to the way “things work.” What about my bank? It wants me to trust it. But isn’t the institution the subject of a couple of government investigations? Oh, not important. And what about the images I see when I walk gingerly between the guard rails? I trust them, right? Ho ho ho.

In our post-Covid, pre-US national election world, the word “trust” is carrying quite a bit of freight. Whom do I trust? Not too many people. What about good old Socrates, who was an Athenian when Greece was not yet a collection of ferocious football teams and sun seekers? As you may recall, he trusted fellow residents of Athens. He ended up dead, either from a lousy snack bar meal and beverage or because his friends did him in.

One of his alleged precepts in his pre-artificial intelligence world was:

“We cannot live better than in seeking to become better.” — Socrates

Got it, Soc.

Thanks MSFT Copilot and provider of PC “moments.” Good enough.

I read “Exclusive: Public Trust in AI Is Sinking across the Board.” Then I thought about Socrates being convicted for corruption of youth. See. Education does not bring unlimited benefits. Apparently Socrates asked annoying questions which opened him to charges of impiety. (Side note: Hey, Socrates, go with the flow. Just pray to the carved mythical beast, okay?)

A loss of public trust? Who knew? I thought it was common courtesy, a desire to discuss and compromise, not whip out a weapon and shoot, bludgeon, or stab someone to death. In the case of Haiti, a twist is that a victim is bound and then barbequed in a steel drum. Cute and to me a variation of stacking seven tires in a pile, dousing them with gasoline, inserting a person, and igniting the combo. I noted a variation in the Ukraine. Elderly women make cookies laced with poison and provide them to special operation fighters. Subtle and effective due to troop attrition, I hear. Should I trust US Girl Scout cookies? No thanks.

What’s interesting about the write up is its focus on artificial intelligence and the statistics it provides to back up this brilliant and innovative insight about modern life. Let me pluck several examples from the dot point filled write up:

- “Globally, trust in AI companies has dropped to 53%, down from 61% five years ago.”

- “Trust in AI is low across political lines. Democrats trust in AI companies is 38%, independents are at 25% and Republicans at 24%.”

- “Eight years ago, technology was the leading industry in trust in 90% of the countries Edelman studies. Today, it is the most trusted in only half of countries.”

AI is trendy; crunchy click bait is highly desirable even for an estimable survivor of Silicon Valley style news reporting.

Let me offer several observations which may either be troubling or typical outputs from a dinobaby working in an underground computer facility:

- Close knit groups are more likely to have some concept of trust. The exception, of course, is the behavior of the Hatfields and McCoys.

- Outsiders are viewed with suspicion. Often for no reason, a newcomer becomes the default bad entity.

- In my lifetime, I have watched institutions take actions which erode trust on a consistent basis.

Net net: Old news. AI is not new. Hyperbole and click obsession are factors which illustrate the erosion of social cohesion. Get used to it.

Stephen E Arnold, March 7, 2024

Kagi Hitches Up with Wolfram

March 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“Kagi + Wolfram” reports that the for-fee Web search engine with AI has hooked up with one of the pre-eminent mathy people innovating today. The write up includes PR about the upsides of Kagi search and Wolfram’s computational services. The article states:

…we have partnered with Wolfram|Alpha, a well-respected computational knowledge engine. By integrating Wolfram Alpha’s extensive knowledge base and robust algorithms into Kagi’s search platform, we aim to deliver more precise, reliable, and comprehensive search results to our users. This partnership represents a significant step forward in our goal to provide a search engine that users can trust to find the dependable information they need quickly and easily. In addition, we are very pleased to welcome Stephen Wolfram to Kagi’s board of advisors.

The basic wagon gets a rethink with other animals given a chance to make progress. Thanks, MSFT Copilot. Good enough, but in truth I gave up trying to get a similar image with the dog replaced by a mathematician and the pig replaced with a perky entrepreneur.

The integration of mathiness with smart search is a step forward, certainly more impressive than other firms’ recycling of Web content into bubble gum cards presenting answers. Kagi is taking steps (small, methodical ones) toward what I have described as “search enabled applications” and what my friend Dr. Greg Grefenstette described in his book with the snappy title “Search-Based Applications: At the Confluence of Search and Database Technologies (Synthesis Lectures on Information Concepts, Retrieval, and Services, 17).”

It may seem like a big step from putting mathiness in a Web search engine to creating a platform for search enabled applications. It may be, but I like to think that some bright young sprouts will figure out that linking a mostly brain dead legacy app with a Kagi-Wolfram service might be useful in a number of disciplines. Even some super confident really brilliantly wonderful Googlers might find the service useful.

Net net: I am gratified that Kagi’s for-fee Web search is evolving. Google’s apparent ineptitude might give Kagi the chance Neeva never had.

Stephen E Arnold, March 6, 2024

Open Source: Free, Easy, and Fast Sort Of

February 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Not long ago, I spoke with an open source cheerleader. The pros outweighed the cons from this technologist’s point of view. (I would like to ID the individual, but I try to avoid having legal eagles claw their way into my modest nest in rural Kentucky. Just plug in “John Wizard Doe”, a high profile entrepreneur and graduate of a big time engineering school.)

I think going up suggests a problem.

Here are highlights of my notes about the upside of open source:

- Many smart people eyeball the code and problems are spotted and fixed

- Fixes get made and deployed more rapidly than fixes to commercial software, which works on a longer “fix” cycle

- Dead end software can be given new kidneys or maybe a heart with a fork

- For most use cases, the software is free or cheaper than commercial products

- New functions become available; some of which fuel new product opportunities.

There may be a few others, but let’s look at a downside few open source cheerleaders want to talk about. I don’t want to counter the widely held belief that “many smart people eyeball the code.” The method is grab and go. The speed angle is relative. Reviving open source again and again is quite useful; bad actors do this. Most people just recycle. The “free” angle is a big deal. Everyone likes “free” because why not? New functions become available, so new markets are created. Perhaps. But in the cyber crime space, innovation boils down to finding a mistake that can be exploited with good enough open source components, often with some mileage on their chassis.

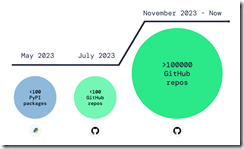

But there is one point on which open source champions crank back the rah rah output: “Over 100,000 Infected Repos Found on GitHub.” I want to point out that Microsoft, the all-time champion in security, owns GitHub. If you think about Microsoft and security too much, you may come away confused. I know I do. I also get a headache.

This “Infected Repos” Apiiro article asserts:

Our security research and data science teams detected a resurgence of a malicious repo confusion campaign that began mid-last year, this time on a much larger scale. The attack impacts more than 100,000 GitHub repositories (and presumably millions) when unsuspecting developers use repositories that resemble known and trusted ones but are, in fact, infected with malicious code.

The write up provides excellent information about how the bad repos create problems and provides a recipe for doing this type of malware distribution yourself. (As you know, I am not too keen on having certain information with helpful detail easily available, but I am a dinobaby, and dinobabies have crazy ideas.)

If we confine our thinking to the open source champion’s five benefits, I think security issues may be more important in some use cases. The better question is, “Why don’t open source supporters like Microsoft and the person with whom I spoke want to talk about open source security?” My view is that:

- Security is an after thought or a never thought facet of open source software

- Making money is Job #1, so free trumps spending money to make sure the open source software is secure

- Open source appeals to some venture capitalists. Why? Red Hat, Elastic, and a handful of other “open source plays.”

Net net: Just visualize a future in which smart software ingests poisoned code, and programmers rely on smart software to make them 10X engineers. Does that create a bit of a problem? Of course not. Microsoft is the security champ, and GitHub is Microsoft.

Stephen E Arnold, February 29, 2024

Google Gems for the Week of 19 February, 2024

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

This week’s edition of Google Gems focuses on a Hope Diamond and a handful of lesser stones. Let’s go.

THE HOPE DIAMOND

In the chaos of the AI Gold Rush, horses fall and wizard engineers realize that they left their common sense in the saloon. Here’s the Hope Diamond from the Google.

The world’s largest online advertising agency created smart software with a lot of math, dump trucks filled with data, and wizards who did not recall that certain historical figures in the US were not of color. “Google Says Its AI Image-Generator Would Sometimes Overcompensate for Diversity,” an Associated Press story, explains in very gentle rhetoric that Google’s super sophisticated Brain and DeepMind would get the race of historical figures wrong. I think this means that Ben Franklin could look like a Zulu prince or George Washington might have some resemblance to Rama (blue skin, bow, arrow, and snappy hat).

My favorite search and retrieval expert Prabhakar Raghavan (famous for his brilliant lecture in Paris about the now renamed Bard) indicated that Google’s image rendering system did not hit the bull’s eye. No, Dr. Raghavan, the digital arrow pierced the micrometer thin plastic wrap of Google’s super sophisticated, quantum supremacy, gee-whiz technology.

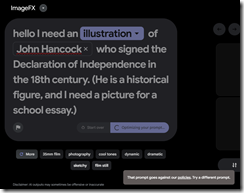

The message I received from Google when I asked for an illustration of John Hancock, an American historical figure. Too bad because this request goes against Google’s policies. Yep, wizards infused with the high school science club management method.

More important, however, was how Google’s massive stumble complemented OpenAI’s ChatGPT wonkiness. I want to award the Hope Diamond Award for AI Ineptitude to both Google and OpenAI. But, alas, there is just one Hope Diamond. The award goes to the quantumly supreme outfit Google.

[Note: I did not quote from the AP story. Why? Years ago the outfit threatened to sue people who use their stories’ words. Okay, no problemo, even though the newspaper for which I once worked supported this outfit in the days of “real” news, not recycled blog posts. I listen, but I do not forget some things. I wonder if the AP knows that Google Chrome can finish a “real” journalist’s sentences for he/him/she/her/it/them. Read about this “feature” at this link.]

Here are my reasons:

- Google is in catch-up mode and like those in the old Gold Rush, some fall from their horses and get up close and personal with hooves. How do those affect the body of a wizard? I have never fallen from a horse, but I saw a fellow get trampled when I lived in Campinas, Brazil. I recall there was a lot of screaming and blood. Messy.

- Google’s arrogance and intellectual sophistication cannot prevent incredible gaffes. A company with a mixed record of managing diversity, equity, etc. has demonstrated why Xooglers like Dr. Timnit Gebru find the company “interesting.” I don’t think Google is interesting. I think it is disappointing, particularly in the racial sensitivity department.

- For years I have explained that Google operates via the high school science club management method. What’s cute when one is 14 loses its charm when those using the method have been at it for a quarter century. It’s time to put on the big boy pants.

OTHER LITTLE GEMMAS

The previous week revealed a dirt trail with some sharp stones and thorny bushes. Here’s a quick selection of the sharpest and thorniest:

- The Google is running webinars to inform publishers about life after their wonderful long-lived cookies. Read more at Fipp.com.

- Google has released a small model as open source. What about the big model with the diversity quirk? Well, no. Read more at the weird green Verge thing.

- Google cares about AI safety. Yeah, believe it or not. Read more about this PR move on Techcrunch.

- Web search competitors will fail. This is a little stone. Yep, a kidney stone for those who don’t recall Neeva. Read more at Techpolicy.

- Did Google really pay $60 million to get that outstanding Reddit content? Wow. Maybe Google looks at different sub reddits than my research team does. Read more about it in 9 to 5 Google.

- What happens when an uninformed person uses the Google Cloud? Answer: Sticker shock. More about this estimable method in The Register.

- Some spoil sport finds traffic lights informed with Google’s smart software annoying. That’s hard to believe. Read more at this link.

- Google pointed out in a court filing that DuckDuckGo was a meta search system (that is, a search interface to other firms’ indexes) and Neeva was a loser crafted by Xooglers. Read more at this link.

No Google Hope Diamond report would be complete without pointing out that the online advertising giant will roll out its smart software to companies. Read more at this link. Let’s hope the wizards figure out that historical figures often have quite specific racial characteristics like Rama.

I wanted to include an image of Google’s rendering of a signer of the Declaration of Independence. What you see in the illustration above is what I got. Wow. I have more “gemmas”, but I just don’t want to present them.

Stephen E Arnold, February 27, 2024

Qualcomm: Its AI Models Pour Gasoline on a Raging Fire

February 26, 2024

This essay is the work of a dumb humanoid. No smart software required.

Qualcomm’s announcements at the Mobile World Congress pour gasoline on the raging AI fire. The chip maker aims to enable smart software on mobile devices, new gear, gym shoes, and more. Venture Beat’s “Qualcomm Unveils AI and Connectivity Chips at Mobile World Congress” does a good job of explaining the big picture. The online publication reports:

Generative AI functions in upcoming smartphones, Windows PCs, cars, and wearables will also be on display with practical applications. Generative AI is expected to have a broad impact across industries, with estimates that it could add the equivalent of $2.6 trillion to $4.4 trillion in economic benefits annually.

Qualcomm, primarily associated with chips, has pushed into what it calls “AI models.” The listing of the models appears on the Qualcomm AI Hub Web page. You can find this page at https://aihub.qualcomm.com/models. To view the available models, click on one of the four model domains.

Each domain will expand and present the name of the model. Note that the domain with the most models is computer vision. The company offers 60 models. These are grouped by function; for example, image classification, image editing, image generation, object detection, pose estimation, semantic segmentation (tagging objects), and super resolution.

One model analyzes data and then predicts related values. In this case, the positions of the subject’s body parts are presented. The predictive functions of a company like Recorded Future suddenly appear to be behind the curve in my opinion.

There are two models for generative AI. These are image generation and text generation. Models are available for audio functions and for multimodal operations.

Qualcomm includes brief descriptions of each model. These descriptions include some repetitive phrases like “state of the art”, “transformer,” and “real time.”

Looking at the examples and following the links to supplemental information suggests, at first glance, that:

- Qualcomm will become a company of interest to investors

- Competitive outfits have their marching orders to develop comparable or better functions

- Supply chain vendors may experience additional interest and uplift from investors.

Can Qualcomm deliver? Let me answer the question this way. Whether the company experiences an nVidia moment or not, other companies have to respond, innovate, cut costs, and become more forward leaning in this chip sector.

I am in my underground computer lab in rural Kentucky, and I can feel the heat from Qualcomm’s AI announcement. Those at the conference not working for Qualcomm may have had their eyebrows scorched.

Stephen E Arnold, February 26, 2024

OpenAI Embarks on Taking Down the Big Guy in Web Search

February 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The Google may be getting up there in Internet years; however, due to its size and dark shadow, taking the big fellow down and putting it out of the game may be difficult. Users are accustomed to the Google. Habits, particularly those which become semi automatic like a heroin addict’s fiddling with a spoon, are tough to break. After 25 years, growing out of a habit is reassuring to worried onlookers. But the efficacy of wait-and-see is not getting a bent person straight.

Taking down Googzilla may be a job for lots of little people. Thanks, Google ImageFX. Know thyself, right?

I read “OpenAI Is Going to Face an Uphill Battle If It Takes on Google Search.” The write up describes an aspirational goal of Sam AI-Man’s OpenAI system. The write up says:

OpenAI is reportedly building its own search product to take on Google.

OpenAI is jumping in a CRRC already crowded with special ops people. There is the Kagi subscription search. There is Phind.com and You.com. There is a one-man band called Stract and more. A new and improved Yandex is coming. The reliable Swisscows.com is ruminating in the mountains. The ever-watchful OSINT professionals gather search engines like a mother goose. And what do we get? Bing is going nowhere even with Copilot except in the enterprise market where Excel users are asking, “What the H*ll?” Meanwhile the litigating beast continues to capture 90 percent or more of search traffic and oodles of data. Okay, team, who is going to chop block the Google, a fat and slow player at that?

The write up opines:

But on the search front, it’s still all Google all the way. And even if OpenAI popularized the generative AI craze, the company has a long way to go if it hopes to take down the search giant.

Competitors can dream, plot, innovate, and issue press releases. But for the foreseeable future, the big guy is going to push others out of the way.

Stephen E Arnold, February 22, 2024

Interesting Observations: Do These Apply to Technology Is a Problem Solver Thinking?

February 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an interesting essay by Nat Eliason, an entity unknown previously to me. “A Map Is Not a Blueprint: Why Fixing Nature Fails” is a short collection of the ways human thought processes create some quite spectacular problems. His examples include weight loss compounds like Ozempic, trans fats, and the once-trendy solution to mental issues, the lobotomy.

Humans can generate a map of a “territory” or a problem space. Then humans dig in and try to make sense of their representation. The problem is that humans may approach a problem and get the solution wrong. No surprise there. One of the engines of innovation is coming up with a solution to a problem created by something incorrectly interpreted. A recent example is the befuddlement of Mark Zuckerberg when a member of the Senate committee questioning him about his company suggested that the quite wealthy entrepreneur had blood on his hands. No wonder he apologized for creating a service that has the remarkable power of bringing people closer together, well, sometimes.

Immature home economics students can apologize for a cooking disaster. Techno feudalists may have a more difficult time making amends. But there are lawyers and lobbyists ready and willing to lend a hand. Thanks, MSFT Copilot Bing thing. Good enough.

What I found interesting in Mr. Eliason’s essay was the model or mental road map humans create (consciously or unconsciously) to solve a problem. I am thinking in terms of social media, AI generated results for a peer-reviewed paper, and Web search systems which filter information to generate a pre-designed frame for certain topics.

Here’s the list of the five steps in the process that creates interesting challenges for those engaged in and affected by technology today:

- Smart people see a problem, study it, and identify options for responding.

- The operations are analyzed and then boiled down to potential remediations.

- “Using our map of the process we create a solution to the problem.”

- The solution works. The downstream issues are not identified or anticipated in a thorough manner.

- New problems emerge as a consequence of having a lousy mental map of the original problem.

Interesting. Creating a solution to a technology-sparked problem without consequences may be one key to success. “I had no idea” or “I’m sorry” makes everything better.

Stephen E Arnold, February 16, 2024

Embrace Good Enough … or Less Than Good. Either Way Is Okay Today

February 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

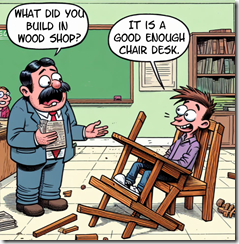

As humans, we want to be the best individuals we can be. We especially think about how to improve ourselves and examine our flaws during the New Year. Sophie McBain from The Guardian evaluated different approaches to life in the article, “The Big Idea: Is Being ‘Good Enough’ Better Than Perfection?” McBain discusses the differences between people who are fine with the “good enough” mentality and those with a perfection mentality.

A high school teacher admires a student who built an innovative chair desk. Yep, MSFT Copilot. Good enough.

She uses Internet shopping to explain the differences between the two personality types. Perfectionists aka “maximizers” want to achieve the best of everything. It’s why they search online for the perfect item, reading “best of…” lists and product reviews. This group spends hours finding the best items. “Good enough” people aka “satisfiers” review the same information but in lesser amounts and quickly make a decision.

Maximizers do better professionally, but they’re less happy in their personal lives. Satisfiers are happier because they use their time to pursue activities that make them happy. The Internet’s blasting of ideal lifestyles also contributes to depressive outlooks:

“In his 2022 book, The Good-Enough Life, Avram Alpert argues that personal quests for greatness, and the unequal social systems that fuel these quests, are at the heart of much that is wrong in the world, driving overconsumption and environmental degradation, stark inequalities and increased unhappiness among people who feel locked in endless competition with one another. Instead of scrambling for a handful of places at the top, Alpert believes we’d all be better off dismantling these hierarchies, so that we no longer cultivate our talents to pursue wealth, fame or power, but only to enrich our own lives and those of others.”

McBain finishes her article by encouraging people to examine their lives through a “good enough” lens. It’s a kind sentiment to share at the start of a New Year, but it also encourages people to settle. If people aren’t happy with their life choices, they should critically evaluate them and tackle solutions. “Good enough” is great for unimportant tasks, but “maximizing” potential for a better future is a healthier outlook.

Whitney Grace, February 16, 2024