Habba Logic? Is It Something One Can Catch?

January 30, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I don’t know much about lawyering. I have been exposed to some unusual legal performances. Most recently, Alina Habba delivered an impassioned soliloquy after a certain high-profile individual was told, “You have to pay a person whom you profess not to know $83 million.” Ms. Habba explained that the decision was a bit of a problem based on her understanding of New York State law. That’s okay. As a dinobaby, I am wrong on a pretty reliable basis. Once it is about 3 pm, I have difficulty locating my glasses, my note cards about items for this blog, and my bottle of Kroger grape-flavored water. (Did you know the world’s expert on grape flavor was a PhD named Abe Bakal? I worked with him in the 1970s. He influenced me, hence the Bakalized water.)

Habba logic explains many things in the world. If Socrates does not understand, that’s his problem, says the young Agonistes Habba in the logic class. Thanks, MSFT Copilot. Good enough. But the eyes are weird.

I did find my notecard about a TechDirt article titled “Cable Giants Insist That Forcing Them to Make Cancellations Easier Violates Their First Amendment Rights.” I once learned that the First Amendment had something to do with free speech. To me (a dinobaby, don’t forget), this means I can write a blog post, offer my personal opinions, and mention the event or item which moved me to action. Dinobabies are not known for their swiftness.

The write up explains that cable companies believe that making it difficult for a customer to cancel a subscription to TV, phone, Internet, and other services is a free speech issue. The write up reports:

But the cable and broadband industry, which has a long and proud tradition of whining about every last consumer protection requirement (no matter how basic), is kicking back at the requirement. At a hearing last week, former FCC boss-turned-top-cable-lobbying Mike Powell suggested such a rule wouldn’t be fair, because it might somehow (?) prevent cable companies from informing customers about better deals.

The idea is that the cable companies’ freedom of speech would be impaired. Okay.

What’s this got to do with the performance by Ms. Habba after her client was slapped with a big monetary award? Answer: Habba logic.

Normal logic says, “If a jury finds a person guilty, that’s what a jury is empowered to do.” I don’t know if describing it in more colorful terms alters what the jury does. But Habba logic is different, and I think it is diffusing from the august legal chambers to a government meeting. I am not certain how to react to Habba logic.

I do know, however, that cable companies are having a bit of a struggle retaining their customers, amping up their brands, and becoming the equivalent of Winnie the Pooh sweatshirts for kids and adults. Cable companies do not want a customer to cancel and boost the estimable firms’ churn ratio. Cable companies do want to bill every month in order to maintain their cash intake. Cable companies do want to maintain a credit card type of relationship to make it just peachy to send mindless snail mail marketing messages about outstanding services, new set top boxes, and ever faster Internet speeds. (Ho ho ho. Sorry. I can’t help myself.)

Net net: Habba logic is identifiable, and I will be watching for more examples. Dinobabies like watching those who are young at heart behaving in a fascinating manner. Where’s my fake grape water? Oh, next to fake logic.

Stephen E Arnold, January 30, 2024

AI Coding: Better, Faster, Cheaper. Just Pick Two, Please

January 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Visual Studio Magazine is not on my must-read list. Nevertheless, one of my research team told me that I needed to read “New GitHub Copilot Research Finds ‘Downward Pressure on Code Quality.’” I had no idea what “downward pressure” means. I read the article trying to figure out the plain English meaning of this tortured phrase. Was it the downward pressure on the metatarsals when a person is running to a job interview? Was it the deadly downward pressure exerted on the OceanGate submersible? Was it the force illustrated in the YouTube “Hydraulic Press Channel”?

A partner at a venture firm wants his open source recipients to produce more code better, faster, and cheaper. (He does not explain that one must pick two.) Thanks MSFT Copilot Bing thing. Good enough. But the green? Wow.

Wrong.

The writeup is a content marketing piece for a research report. That’s okay. I think a human may have written most of the article. Despite the frippery in the article, I spotted several factoids. If these are indeed verifiable, excitement in the world of machine generated open source software will ensue. Why does this matter? Well, in the words of the SmartNews content engine, “Read on.”

Here are the items of interest to me:

- Bad code is being created and added to the GitHub repositories.

- Code is recycled, despite smart efforts to reduce the copy-paste approach to programming.

- AI is preparing a field in which lousy, flawed, and possibly worse software will flourish.

Stephen E Arnold, January 29, 2024

AI Will Take Whose Job, Ms. Newscaster?

January 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Will AI take jobs? Abso-frickin-lutely. Why? Cost savings. Period. In an era in which “good enough” is the new mark of excellence, hallucinating software is going to speed up some really annoying commercial functions and reduce costs. What if the customers object to being called dorks? Too bad. The company will apologize, take down the wonky system, and put up another smart service. Better? No, good enough. Faster? Yep. Cheaper? Bet your bippy on that, pilgrim. (See, for a chuckle, AI Chatbot At Delivery Firm DPD Goes Rogue, Insults Customer And Criticizes Company.)

Hey, MSFT Bing thing, good enough. How is that MSFT email security today, kiddo?

I found this Fox write up fascinating: “Two-Thirds of Americans Say AI Could Do Their Job.” That works out to about 67 percent of an estimated workforce of 120 million, or roughly 80 million people: a couple of Costco parking lots of people. Give or take a few, of course.

The write up says:

A recent survey conducted by Spokeo found that despite seeing the potential benefits of AI, 66.6% of the 1,027 respondents admitted AI could carry out their workplace duties, and 74.8% said they were concerned about the technology’s impact on their industry as a whole.

Oh, oh. Now it is 75 percent. Add a few more Costco parking lots of people holding signs like “Will broadcast for food,” “Will think for food,” or “Will hold a sign for Happy Pollo Tacos.” (Didn’t some wizard at Davos suggest that five percent of jobs would be affected? Yeah, that’s on the money.)

The write up adds:

“Whether it’s because people realize that a lot of work can be easily automated, or they believe the hype in the media that AI is more advanced and powerful than it is, the AI box has now been opened. … The vast majority of those surveyed, 79.1%, said they think employers should offer training for ChatGPT and other AI tools.

Yep, take those free training courses advertised by some of the tech feudalists. You too can become an AI sales person just like “search experts” morphed into search engine optimization specialists. How is that working out? Good for the Google. For some others, a way station on the bus ride to the unemployment bureau perhaps?

Several observations:

- Smart software can generate the fake personas and the content. What’s the outlook for “real” journalists and talking heads who are not celebrities or influencers?

- Most people overestimate their value. Now the jobs for which these individuals compete will go to the top one percent. Welcome to the feudal world of the 21st century.

- More than holding signs and looking sad will be needed to generate revenue for some people.

And what about Fox news reports like the one on which this short essay is based? AI, baby, just like Sports Illustrated and the estimable SmartNews.

Stephen E Arnold, January 29, 2024

AI and Web Search: A Meh-crosoft and Google Mismatch

January 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read a shocking report summary. Is the report like one of those Harvard Medical scholarly articles or an essay from the former president of Stanford University? I don’t know. Nevertheless, let’s look at the assertions in “Report: ChatGPT Hasn’t Helped Bing Compete With Google.” I am not sure if the information provides convincing proof that Googzilla is a big, healthy market dominator or if Microsoft has been fooling itself about the power of the artificial intelligence revolution.

The young inventor presents his next big thing to a savvy senior executive at a techno-feudal company. The senior executive is impressed. Are you? I know I am. Thanks, MSFT Copilot Bing thing. Too bad you timed out and told me, “I apologize for the confusion. I’ll try to create a more cartoon-style illustration this time.” Then you crashed. Good enough, right?

Let’s look at the write up. I noted this passage which is coming to me third, maybe fourth hand, but I am a dinobaby and I go with the online flow:

Microsoft added the generative artificial intelligence (AI) tool to its search engine early last year after investing $10 billion in ChatGPT creator OpenAI. But according to a recent Bloomberg News report — which cited data analytics company StatCounter — Bing ended 2023 with just 3.4% of the worldwide search market, compared to Google’s 91.6% share. That’s up less than 1 percentage point since the company announced the ChatGPT integration last January.

I am okay with the $10 billion. Why not bet big? The tactic works for some each year at the Kentucky Derby. I don’t know about the 91.6 number, however. The point six is troubling. What’s with precision when dealing with a result that makes clear that of 100 random people on line at the ever-efficient BWI Airport, only eight will know how to retrieve information from another Web search system; for example, the busy Bing or the super reliable Yandex.ru service?

If we accept the Bing data showing modest user uptake, those $10 billion were not enough to do much more than get the management experts at Alphabet to press the Red Alert fire alarm. One could reason: Google is a monopoly in spirit if not in actual fact. If we accept the market share of Bing, Microsoft is putting life preservers manufactured with marketing foam and bricks on its Paul Allen-esque super yacht.

The write up says via what looks like recycled information:

“We are at the gold rush moment when it comes to AI and search,” Shane Greenstein, an economist and professor at Harvard Business School, told Bloomberg. “At the moment, I doubt AI will move the needle because, in search, you need a flywheel: the more searches you have, the better answers are. Google is the only firm who has this dynamic well-established.”

Yeah, Harvard. Oh, well, the sweatshirts are recognized the world over. Accuracy, trust, and integrity implied too.

Net net: What’s next? Will Microsoft make it even more difficult to use another outfit’s search system? Swisscows.com, you may be headed for the abattoir. StartPage.com, you will face your end.

Stephen E Arnold, January 25, 2024

Oh, Brother, What a Marketing Play HP Has Made

January 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I must admit I am not sure if the story “HP CEO Says Customers Who Don’t Use the Company’s Supplies Are Bad Investments” is spot on. But its spirit highlights some modern management thought processes.

The senior boss type explains to his wizards the brilliance of what might be called “the bricking strategy.” One executive sighs, “Oh, brother.” At the same time, interest in Brother’s printers shows signs of life. Thanks, MSFT Copilot Bing thing, second string version. You have nailed isolated, entitled senior executives in this original art. Good enough. How’s security of your email coming along?

I love this quote (which may or may not be spot on, but let’s go with it, shall we?):

“When we identify cartridges that are violating our IP, we stop the printers from working.”

Brilliant. Hewlett Packard, the one that manufactures printers, perceives customers who use refilled cartridges as an issue. I love the reference to intellectual property (IP). What company first developed the concept of refillable cartridges? Was it the razor blade outfit cherished as a high-water mark in business schools in the US? But refillable is perceived as a breakthrough innovation, is it not? Give away the razor; charge a lot for the blades which go dull after a single use.

The article reports:

When asked about the lawsuit during an interview with CNBC, Lores said, “I think for us it is important for us to protect our IP. There is a lot of IP that we’ve built in the inks of the printers, in the printers themselves. And what we are doing is when we identify cartridges that are violating our IP, we stop the printers from working.”

I also chuckled at this statement from the cited article:

Lores certainly makes no attempt to conceal anything in that statement. The CEO then doubled down on his stance: “Every time a customer buys a printer, it’s an investment for us. We are investing in that customer, and if that customer doesn’t print enough or doesn’t use our supplies, it’s a bad investment.”

Perfect. Customer service does not pay unless a customer subscribes to customer service. Is this a new idea? Nah, documentation does not pay off unless a customer pays to access a user manual (coherent or incoherent, complete or incomplete, current or Stone Age). Knowledgeable sales professionals are useless unless those fine executives meet their quotas. I see smart software in a company with this attitude coming like gangbusters.

But what I really admire is the notion of danger from a non-HP cartridge. Yep, a compromised canister. Wow. The write up reports:

Lores continued to warn against the dangers of using non-HP cartridges and what will happen if you do. “In many cases, it can create all sorts of issues from the printer stopping working because the ink has not been designed to be used in our printer, to even creating security issues.” The CEO made it sound as if HP’s ink cartridge DRM was there solely for the benefit of customers. “We have seen that you can embed viruses into cartridges, through the cartridge go to the printer, from the printer go to the network, so it can create many more problems for customers.” He then appeared to shift from that customer-first perspective by stating, “Our objective is to make printing as easy as possible, and our long-term objective is to make printing a subscription.”

One person named Puiu added this observation: “I’m using an Epson with an ink tank at work. It’s so easy to refill and the ink is cheap.”

I have been working in government and commercial organizations, and I cannot recall a single incident of a printer representing a danger. I do have a number of recollections of usually calm professionals going crazy when printers [a] did not print, [b] reported malfunctions with blinking lights not explained in the user manual, [c] paper lodged in a printer in a way that required disassembly of the printer. High speed printers are unique in their ability to break themselves when the “feeder” does not feed. (By the way, the fault is the user’s, the humidity of the paper, or the static electricity generated by the stupid location where the stupid customer put the stupid printer. Printer software and drivers: please, don’t get me started. Those suck big time today and have for decades.)

HP continues to blaze a trail of remarkable innovation. Forget the legacy of medical devices, the acquisition of Compaq, the genius of Alta Vista, and the always-lovable software. HP’s contribution to management excellence is heartwarming. I need to check my printer to make sure it is not posing a danger to me and my team. I’m back. The Ricoh and the Brother are okay, no risk.

Subscribe to HP ink today. Be safe. Emulate the HP way too because some users are a bad investment.

Stephen E Arnold, January 24, 2024

A Swiss Email Provider Delivers Some Sharp Cheese about MSFT Outlook

January 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.

What company does my team love more than Google? Give up? It is Microsoft. Whether it is the invasive Outlook plug in for Zoom on the Mac or the incredible fly ins, pop ups, and whining about Edge, what’s not to like about this outstanding, customer-centric firm? Nothing. That’s right. Nothing Microsoft does can be considered duplicitous, monopolistic, avaricious, or improper. The company lives and breathes the ethics of Thomas Dewey, the 19th-century American philosopher. This is my opinion, of course. Some may disagree.

A perky Swiss farmer delivers an Outlook info dump. Will this delivery enable the growth of surveillance methodologies? Thanks, MSFT Copilot Bing thing. Thou didst not protest when I asked for this picture.

I read and was troubled that one of my favorite US firms received some critical analysis about the MSFT Outlook email program. The sharp comments appeared in a blog post titled “Outlook Is Microsoft’s New Data Collection Service.” Proton offers an encrypted email service and a VPN from Switzerland. (Did you know the Swiss have farmers who wash their cows and stack their firewood neatly? I am from central Illinois, and our farmers ignore their cows and pile firewood. As long as a cow can make it into the slaughterhouse, the cow is good to go. As long as the firewood burns, winner.)

The write up reports or asserts, depending on one’s point of view:

Everyone talks about the privacy-washing campaigns of Google and Apple as they mine your online data to generate advertising revenue. But now it looks like Outlook is no longer simply an email service; it’s a data collection mechanism for Microsoft’s 772 external partners and an ad delivery system for Microsoft itself.

Surveillance is the key to making money from advertising or bulk data sales to commercial and possibly some other organizations. Proton enumerates how these sucked-up data may be used:

- Store and/or access information on the user’s device

- Develop and improve products

- Personalize ads and content

- Measure ads and content

- Derive audience insights

- Obtain precise geolocation data

- Identify users through device scanning

The write up provides this list of information allegedly available to Microsoft:

- Name and contact data

- Passwords

- Demographic data

- Payment data

- Subscription and licensing data

- Search queries

- Device and usage data

- Error reports and performance data

- Voice data

- Text, inking, and typing data

- Images

- Location data

- Content

- Feedback and ratings

- Traffic data

My goodness.

I particularly like the geolocation data. With Google trying to turn off the geofence functions, Microsoft definitely may be an option for some customers to test. Good, bad, or indifferent, millions of people use Microsoft Outlook. Imagine the contact lists, the entity names, and the other information extractable from messages, attachments, draft folders, and the deleted content. As an Illinois farmer might say, “Winner!”

For more information about Microsoft’s alleged data practices, please, refer to the Proton article. I became uncomfortable when I read the section about how MSFT steals my email password. Imagine. Theft of a password — Is it true? My favorite giant American software company would not do that to me, a loyal customer, would it?

The write up is a bit of content marketing rah rah for Proton. I am not convinced, but I think I will have my team do some poking around on the Proton Web site. But Microsoft? No, the company would not take this action, would it?

Stephen E Arnold, January 17, 2024

Google Gems for 1 16 24: Ho Ho Ho

January 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

There is no zirconium dioxide in this gem display. The Google is becoming more aggressive about YouTube users who refuse to pay money to watch the videos on the site. Does Google have a problem after conditioning its users around the globe to use the service, provide comments, roll over for data collection, and enjoy the ever increasing number of commercial messages? Of course not. Google is a much-loved company, and its users are eager to comply. If you want some insight into Google’s “pay up or suffer” approach to reframing YouTube, navigate to “YouTube’s Ad Blocker War Leads to Major Slowdowns and Surge in Scam Ads.” Yikes, scam ads. (I thought Google had those under control a decade ago. Oh, well.)

So many high-value gems and so little time to marvel at their elegance and beauty. Thanks, MSFT Copilot Bing thing. Good enough although I appear to be a much younger version of my dinobaby self.

Another notable allegedly accurate assertion about the Google’s business methods appears in “Google Accused of Stealing Patented AI Technology in $1.67 Billion Case.” Patent litigation is boring for some, but the good news is that it provides big money to some attorneys, win or lose. What’s interesting is that shortcuts and duplicity appear in numerous Google gems. Is this a signal or a coincidence?

Other gems my team found interesting and want to share with you include:

- Google and the lovable Bing have been called out for displaying “deep fake porn” in their search results. If you want to know more about this issue, navigate to Neowin.net.

- In order to shore up its revenues, Alphabet is innovating the way Monaco has: money-related gaming. How many young people will discover the thrill of winning big and take a step toward what could be a lifelong involvement in counseling and weekly meetings? Techcrunch provides a bit more information, but not too much.

- Are there any downsides to firing Googlers, allegedly the world’s brightest and most productive wizards wearing sneakers and gray T-shirts? Not too many, but some people may be annoyed with what Google describes in baloney speak as deprecation. The idea is that features are killed off. Adapt. PCMag.com explains with post-Ziff élan. One example of changes might be the fiddling with Google Maps and Waze.

- The estimable Sun newspaper reveals some of the Android mobiles’ hidden tricks. Surprise.

- Google allegedly is struggling to dot its “i’s” and cross its “t’s.” A blogger reports that Google “forgot” to renew a domain used in its GSuite documentation. (How can one overlook the numerous reminders to renew? It’s easy I assume.)

The final gem in this edition is one that may or may not be true. A tweet reports that Amazon is now spending more on R&D than Google. Cost cutting?

Stephen E Arnold, January 16, 2024

Amazon: A Secret of Success Revealed

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “Jeff Bezos Reportedly Told His Team to Attack Small Publishers Like a Cheetah Would Pursue a Sickly Gazelle in Amazon’s Early Days — 3 Ruthless Strategies He’s Used to Build His Empire.” The inspirational story makes clear why so many companies, managers, and financial managers find the Bezos Bulldozer a slick vehicle. Who needs a better role model for the Information Superhighway?

Although this machine-generated cheetah is chubby, the big predator looks quite content after consuming a herd of sickly gazelles. No wonder so many admire the beast. Can the chubby creature catch up to the robotic wizards at OpenAI-type firms? Thanks, MSFT Copilot Bing thing. It was a struggle to get this fat beast but good enough.

The write up is not so much news but a summing up of what I think of as Bezos brainwaves. For example, the write up describes the creator of the Bezos Bulldozer as either “sadistic” or a “godfather.” Another facet of Mr. Bezos’ approach to business is an aggressive price strategy. The third tool in the bulldozer’s toolbox is creating an “adversarial” environment. That sounds delightful: “Constant friction.”

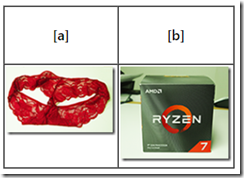

But I think there are other techniques in play. For example, we ordered a $600 CPU. Amazon or one of its “trusted partners” shipped red panties in an AMD Ryzen box. [a] The CPU and [b] its official box. Fashionable, right?

This image appeared in my April 2022 Beyond Search. Amazon customer support insisted that I received a CPU, not panties in an AMD box. The customer support process made it crystal clear that I was trying to cheat them. Yeah, nice accusation and a big laugh when I included the anecdote in one of my online fraud lectures at a cyber crime conference.

More recently, I received a smashed package with a plastic bag displaying this message: “We care.” When I posted a review of the shoddy packaging and the impossibility of contacting Amazon, I received several email messages asking me to go to the Amazon site and report the problem. Oh, the merchant in question is named Meta Bosem:

Amazon asks me to answer this question before getting a resolution to this predatory action. Amazon pleads, “Did this solve your problem?” No, I will survive being the victim of what seems to be a way to down a sickly gazelle. (I am just old, not sickly.)

The somewhat poorly assembled article cited above includes one interesting statement which either a robot or an underpaid humanoid presented as a factoid about Amazon:

Malcolm Gladwell’s research has led him to believe that innovative entrepreneurs are often disagreeable. Businesses and society may have a lot to gain from individuals who “change up the status quo and introduce an element of friction,” he says. A disagreeable personality — which Gladwell defines as someone who follows through even in the face of social approval — has some merits, according to his theory.

Yep, the benefits of Amazon. Let me identify the ones I experienced with the panties and the smashed product in the “We care” wrapper:

- Quality control and quality assurance. Hmmm. Similar to the aircraft manufacturer whose planes feature self-removing doors at 14,000 feet

- Customer service. I love the question before the problem is addressed which asks, “Did this solve your problem?” (The answer is, “No.”)

- Reliable vendors. I wonder if the Meta Bosum folks would like my pair of large red female undergarments for one of their computers?

- Business integrity. What?

But what does one expect from a techno-feudal outfit which presents products named by smart software? For details of this recent flub, navigate to “Amazon Product Name Is an OpenAI Error Message.” This article states:

We’re accustomed to the uncanny random brand names used by factories to sell directly to the consumer. But now the listings themselves are being generated by AI, a fact revealed by furniture maker FOPEAS, which now offers its delightfully modern yet affordable I’m sorry but I cannot fulfill this request it goes against OpenAI use policy. My purpose is to provide helpful and respectful information to users in brown.

Isn’t Amazon a delightful organization? Sickly gazelles, be cautious when you hear the rumble of the Bezos Bulldozer. It does not move fast and break things. The company has weaponized its pursuit of revenue. Publishers, dinobabies, and humanoids alike can be nothing other than prey if the cheetah assertion is accurate. And the government regulatory authorities in the US? Great job, folks.

Stephen E Arnold, January 15, 2024

Open Source Software: Free Gym Shoes for Bad Actors

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Many years ago, I completed a number of open source projects. Although different clients hired my team and me, the big question was, “What’s the future of open source software as an investment opportunity and as a substitute for commercial software?” Our work focused on three major points:

- Community support for a widely-used software once the original developer moved on

- A way to save money and get rid of the “licensing handcuffs” commercial software companies clamped on their customers

- Security issues resulting from poisoned code or obfuscated “special features.”

My recollection is that the customers focused on one point, the opportunity to save money. Commercial software vendors were in the “lock in” game, and open source software for database, utility, and search and retrieval functions offered a way out.

Today, a young innovator may embrace an open source solution to the generative smart software approach to innovation. Apart from the issues embedded in the large language model methods themselves, building a product on other people’s code available as open source software looks like a certain path to money.

An open source game plan sounds like a winner. Then upon starting work, the path reveals its risks. Thanks, MSFT Copilot, you exhausted me this morning. Good enough.

I thought about our work in open source when I read “So, Are We Going to Talk about How GitHub Is an Absolute Boon for Malware, or Nah?” The write up opines:

In a report published on Thursday, security shop Recorded Future warns that GitHub’s infrastructure is frequently abused by criminals to support and deliver malware. And the abuse is expected to grow due to the advantages of a “living-off-trusted-sites” strategy for those involved in malware. GitHub, the report says, presents several advantages to malware authors. For example, GitHub domains are seldom blocked by corporate networks, making it a reliable hosting site for malware.

Those cost advantages can be vaporized once a security issue becomes known. The write up continues:

Reliance on this “living-off-trusted-sites” strategy is likely to increase and so organizations are advised to flag or block GitHub services that aren’t normally used and could be abused. Companies, it’s suggested, should also look at their usage of GitHub services in detail to formulate specific defensive strategies.

How about a risk roundup?

- The licenses vary. Litigation is a possibility. For big companies with lots of legal eagles, court battles are no problem. Just write a check or cut a deal.

- Forks make it easy for bad actors to exploit some open source projects.

- A big aggregator of open source like MSFT GitHub is not in the open source business and may deflect criticism without spending money to correct issues as they are discovered. It’s free software, isn’t it?

- The “community” may be composed of good actors who accept cash from what looks like a reputable organization and become the unwitting dupes of an industrialized cyber gang.

- Commercial products integrating or built upon open source may have to do some very fancy dancing when a problem becomes publicly known.

There are other concerns as well. The problem is that open source’s appeal is now powered by two different performance enhancers. The first is the perception that open source software reduces certain costs. The second is the mad integration of open source smart software.

What’s the fix? My hunch is that words will take the place of meaningful action and remediation. Economic pressure and the desire to use what is free make more sense to many business wizards.

Stephen E Arnold, January 15, 2024

Believe in Smart Software? Sure, Why Not?

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

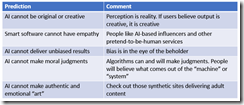

Predictions are slippery fish. Grab one, a foot-long Lake Michigan beastie. Now hold on. Wow, that looked easy. Predictions are similar. But slippery fish can get away or flop around and make those in the boat look silly. I thought about fish and predictions when I read “What AI will Never Be Able To Do.” The essay is a replay of an answer from an AI or smart software system.

My initial reaction was that someone came up with a blog post that required Google Bard and what seems to be minimal effort to create. I am thinking about how a high school student might rely on ChatGPT to write an essay about a current event or a how-to essay. I reread the write up and formulated several observations. The table below presents the “prediction” and my comment about that statement. I end the essay with a general comment about smart software.

The presentation of word salad reassurances underscores a fundamental problem of smart software. The system can be tuned to reassure. At the same time, the companies operating the software can steer, shape, and weaponize the information presented. Those without the intellectual equipment to research and reason about outputs are likely to accept the answers. The deterioration of education in the US and other countries virtually guarantees that smart software will replace critical thinking for many people.

Don’t believe me? Ask one of the engineers working on next generation smart software. Just don’t ask the systems or the people who use another outfit’s software to do the thinking.

Stephen E Arnold, January 12, 2024