Developers, AI Will Not Take Your Jobs… Yet

February 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It seems programmers are safe from an imminent AI jobs takeover. The competent ones, anyway. LeadDev reports, “Researchers Say Generative AI Isn’t Replacing Devs Any Time Soon.” Generative AI tools have begun to lend developers a helping hand, but nearly half of developers are concerned they might lose their jobs to their algorithmic assistants.

Another MSFT Copilot completely original Bing thing. Good enough but that fellow sure looks familiar.

However, a recent study by researchers from Princeton University and the University of Chicago suggests they have nothing to worry about: AI systems are far from good enough at programming tasks to replace humans. Writer Chris Stokel-Walker tells us the researchers:

“… developed an evaluation framework that drew nearly 2,300 common software engineering problems from real GitHub issues – typically a bug report or feature request – and corresponding pull requests across 12 popular Python repositories to test the performance of various large language models (LLMs). Researchers provided the LLMs with both the issue and the repo code, and tasked the model with producing a workable fix, which was tested after to ensure it was correct. But only 4% of the time did the LLM generate a solution that worked.”

Researcher Carlos Jimenez notes these problems are very different from those LLMs are usually trained on. Specifically, the article states:

“The SWE-bench evaluation framework tested the model’s ability to understand and coordinate changes across multiple functions, classes, and files simultaneously. It required the models to interact with various execution environments, process context, and perform complex reasoning. These tasks go far beyond the simple prompts engineers have found success using to date, such as translating a line of code from one language to another. In short: it more accurately represented the kind of complex work that engineers have to do in their day-to-day jobs.”

Will AI someday be able to perform that sort of work? Perhaps, but the researchers consider it more likely that AI will never code independently. Instead, we will continue to need human developers to oversee the algorithms’ work. The algorithms will, however, continue to make programmers’ jobs easier. If Jimenez and company are correct, developers everywhere can breathe a sigh of relief.

Cynthia Murrell, February 15, 2024

A Xoogler Explains AI, News, Inevitability, and Real Business Life

February 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an essay providing a tiny bit of evidence that one can take the Googler out of the Google, but that Xoogler still retains some Googley DNA. The item appeared in the Bezos bulldozer’s estimable publication with the title “The Real Wolf Menacing the News Business? AI.” Absolutely. Obviously. Who does not understand that?

A high-technology sophist explains the facts of life to a group of listeners who are skeptical about artificial intelligence. The illustration was generated after three tries by Google’s own smart software. I love the miniature horse and the less-than-flattering representation of a sales professional. That individual looks like one who would be more comfortable eating the listeners than convincing them about AI’s value.

The essay contains a number of interesting points. I want to highlight three and then, as I quite enjoy doing, I will offer some observations.

The author is a Xoogler who served from 2017 to 2023 as the senior director of news ecosystem products. I quite like the idea of a “news ecosystem.” But ecosystems, as those who follow the impact of man on environments know, can be destroyed or pushed to the edge of catastrophe. In the aftermath of devastation caused by indifferent decision makers, greed-fueled entrepreneurs, or rhinoceros poachers, landscapes are often transformed.

First, the essay writer argues:

The news publishing industry has always reviled new technology, whether it was radio or television, the internet or, now, generative artificial intelligence.

I love the word “revile.” It suggests that ignorant individuals are unable to grasp the value of certain technologies. I also like the very clever use of the word “always.” Categorical affirmatives make the world of zeros and ones so delightfully absolute. We’re off to a good start, I think.

Second, we have a remarkable argument which invokes another zero and one type of thinking. Consider this passage:

The publishers’ complaints were premised on the idea that web platforms such as Google and Facebook were stealing from them by posting — or even allowing publishers to post — headlines and blurbs linking to their stories. This was always a silly complaint because of a universal truism of the internet: Everybody wants traffic!

I love those universal truisms. I think some at Google honestly believe that their insights, perceptions, and beliefs are the One True Path Forward. Confidence is good, but the implication that a universal truism exists strikes me as information about a psychological and intellectual aberration. Consider this truism offered by my uneducated great grandmother:

Always get a second opinion.

My great grandmother used the logically troublesome word “always.” The idea seems reasonable, but the action may not be possible. Does Google get second opinions when it decides to kill one of its services, modify algorithms in its ad brokering system, or reorganize its contentious smart software units? “Always” opens the door to many issues.

Publishers (I assume “all” publishers) want traffic. May I demonstrate the frailty of the Xoogler’s argument? I publish a blog called Beyond Search. I have done this since 2008. I do not care if I get traffic or not. My goal was and remains to present commentary about the antics of high-technology companies and related subjects. Why do I do this? First, I want to make sure that my views about such topics as Google search exist. Second, I have set up my estate so the content will remain online long after I am gone. I am a publisher, and I don’t want traffic, or at least the type of traffic that Google provides. One exception is enough to show that an argument like the Xoogler’s is false, even if it is self-serving.

Third, the essay points its self-righteous finger at “regulators.” The essay suggests that elected officials pursued “illegitimate complaints” from publishers. I noted this passage:

Prior to these laws, no one ever asked permission to link to a website or paid to do so. Quite the contrary, if anyone got paid, it was the party doing the linking. Why? Because everybody wants traffic! After all, this is why advertising businesses — publishers and platforms alike — can exist in the first place. They offer distribution to advertisers, and the advertisers pay them because distribution is valuable and seldom free.

Repetition is okay, but I am able to recall one of the key arguments in this Xoogler’s write up: “Everybody wants traffic.” Since it is false, I am not sure the essay’s argumentative trajectory is on the track of logic.

Now we come to the guts of the essay: Artificial intelligence. What’s interesting is that AI magnetically pulls regulators back to the casino. Smart software companies face techno-feudalists in a high-stakes game. I noted this passage about grounding statements via verification versus merely training algorithms:

The courts might or might not find this distinction between training and grounding compelling. If they don’t, Congress must step in. By legislating copyright protection for content used by AI for grounding purposes, Congress has an opportunity to create a copyright framework that achieves many competing social goals. It would permit continued innovation in artificial intelligence via the training and testing of LLMs; it would require licensing of content that AI applications use to verify their statements or look up new facts; and those licensing payments would financially sustain and incentivize the news media’s most important work — the discovery and verification of new information — rather than forcing the tech industry to make blanket payments for rewrites of what is already long known.

Who owns the casino? At this time, I would suggest that lobbyists and certain non-governmental entities exert considerable influence over some elected and appointed officials. Furthermore, some AI firms are moving as quickly as reasonably possible to convert interest in AI into revenue streams with moats. The idea is that if regulations curtail AI companies, consumers would not be well served. No 20-something wants to read a newspaper. That individual wants convenience and, of course, advertising.

Now several observations:

- The Xoogler author believes in AI going fast. The technology serves users / customers what they want. The downsides are bleats and shrieks from an outmoded sector; that is, those engaged in news.

- The logic of the technologist is not the logic of a person who prefers nuances. The broad statements are false to me, for example. But to the Xoogler, these are self-evident truths. Get with our program or get left to sleep on cardboard in the street.

- The schism smart software creates is palpable. On one hand, there are those who “get it.” On the other hand, there are those who fight a meaningless battle with the inevitable. There’s only one problem: Technology is not delivering better, faster, or cheaper social fabrics. Technology seems to have some downsides. Just ask a journalist trying to survive on YouTube earnings.

Net net: The attitude of the Xoogler suggests that one cannot shake the sense of being right, entitlement, and logic associated with a Googler even after leaving the firm. The essay makes me uncomfortable for two reasons: [1] I think the author means exactly what is expressed in the essay. News is going to be different. Get with the program or lose big time. And [2] the attitude is one which I find destructive because technology is assumed to “do good.” I am not too sure about that because the benefits of AI are not known and neither are AI’s downsides. Plus, there’s the “everybody wants traffic.” Monopolistic vendors of online ads want me to believe that obvious statement is ground truth. Sorry. I don’t.

Stephen E Arnold, February 13, 2024

Sam AI-Man Puts a Price on AI Domination

February 13, 2024

AI start ups may want to amp up their fund raising. Optimism and confidence are often perceived as positive attributes. As a dinobaby, I think in terms of finding a deal at the discount supermarket. Sam AI-Man (actually Sam Altman) thinks big. Forget the $5 million investment in a semi-plausible AI play. “Think a bit bigger” is the catchphrase for OpenAI.

Thinking billions? You silly goose. Think trillions. Thanks, MidJourney. Close enough, close enough.

How does seven followed by 12 zeros strike you? A reasonable figure. Well, Mr. AI-Man estimates that’s the cost of building world-dominating AI chips, content, and assorted impedimenta in a quest to win the AI dust-ups in assorted global markets. “OpenAI Chief Sam Altman Is Seeking Up to $7 TRILLION (sic) from Investors Including the UAE for Secretive Project to Reshape the Global Semiconductor Industry” reports:

Altman is reportedly looking to solve some of the biggest challenges faced by the rapidly-expanding AI sector — including a shortage of the expensive computer chips needed to power large-language models like OpenAI’s ChatGPT.

And where does one locate entities with this much money? The news report says:

Altman has met with several potential investors, including SoftBank Chairman Masayoshi Son and Sheikh Tahnoun bin Zayed al Nahyan, the UAE’s head of security.

To put the figure in context, the article says:

It would be a staggering and unprecedented sum in the history of venture capital, greater than the combined current market capitalizations of Apple and Microsoft, and more than the annual GDP of Japan or Germany.

Several observations:

- The ante for big-time AI has gone up.

- The argument for people and content has shifted to chip facilities to fabricate semiconductors.

- The fund-me tour is a newsmaker.

Net net: How about those small search-and-retrieval oriented AI companies? Heck, what about outfits like Amazon, Facebook, and Google?

Stephen E Arnold, February 13, 2024

Scattering Clouds: Price Surprises and Technical Labyrinths Have an Impact

February 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Yep, the cloud. A third-party time-sharing service with some 21st-century add-ons. I am not too keen on the cloud even though I am forced to use it for certain specific tasks. Others, however, think nothing of using the cloud like an invisible and infinite USB stick. “2023 Could Be the Year of Public Cloud Repatriation” strikes me as a “real” news story reporting that others are taking a look at the sky, spotting threatening clouds, and heading to a long-abandoned computer room to rethink their expenditures.

The write up reports:

Many regard repatriating data and applications back to enterprise data centers from a public cloud provider as an admission that someone made a big mistake moving the workloads to the cloud in the first place. I don’t automatically consider this a failure as much as an adjustment of hosting platforms based on current economic realities. Many cite the high cost of cloud computing as the reason for moving back to more traditional platforms.

I agree. However, there are several other factors which may reflect more managerial analysis than technical acumen; specifically:

- The cloud computing solution was better, faster, and cheaper. Better than an in house staff? Well, not for everyone because cloud companies are not working overtime to address user / customer problems. The technical personnel have other fires, floods, and earthquakes. Users / customers have to wait unless the user / customer “buys” dedicated support staff.

- So the “cheaper” argument becomes an issue. In addition to paying for escalated support, one has to deal with Byzantine pricing mechanisms. If one considers any of the major cloud providers, one can spend hours reading how to manage certain costs. Data transfer is a popular subject. Activated but unused services are another. Why is pricing so intricate and complex? Answer: Revenue for the cloud providers. Many customers are confident the big clouds are their friends and have their best financial interests at heart. That’s true. It is just that the heart is in the cloud providers’ books, not the user / customer balance sheets.

- And better? For certain operations, a user / customer has limited options. The current AI craze means the cloud is the principal game in town. Payroll, sales management, and Webby stuff are also popular functions to move to the cloud.

The rationale for shifting to the cloud varies, but there are some themes which my team and I have noted in our work over the years:

First, the cloud allowed “experts” who cost a lot of money to be hired by the cloud vendor. Users / customers did not have to have these expensive people on their staff. Plus, there are not that many experts who are really expert. The cloud vendor has the smarts to hire the best and the resources to pay these people accordingly… in theory. But bean counters love to cut costs so IT professionals were downsized in many organizations. The mythical “power user” could do more and gig workers could pick up any slack. But the costs of cloud computing held a little box with some Tannerite inside. Costs for information technology were going up. Wouldn’t it be cheaper to do computing in house? For some, the answer is, “Yes.”

An ostrich company with its head in the clouds, not in the sand. Thanks, MidJourney, what a not-even-good-enough illustration.

Second, most organizations lacked the expertise to manage a multi-cloud set up. When an organization has two or more clouds, one cannot allow a cloud company to manage itself and one or more competitors. Therefore, organizations had to add to their headcount a new and expensive position: A cloud manager.

Third, the cloud solutions are not homogeneous. Different rules of the road, different technical set up, and different pricing schemes. The solution? Add another position: A technical manager to manage the cloud technologies.

I will stop with these three points. One can rationalize using the cloud easily; for example, a government agency can push tasks to the cloud. Some work in government agencies consists entirely of attending meetings at which third-party contractors explain what they are doing and why an engineering change order is priority number one. Who wants to do this work as part of a nine to five job?

But now there is a threat to the clouds themselves. That is security. What’s more secure? Data in a user / customer server facility down the hall or in a disused building in Piscataway, New Jersey, or sitting in a cloud service scattered wherever? Security? Cloud vendors are great at security. Yeah, how about those AWS S3 buckets or the Microsoft email “issue”?

My view is that many organizations should consider a “where should our computing be done and where should our data reside” audit. People have had their heads in the clouds for a number of years. It is time to hold a meeting in that little-used computer room and do some thinking.

Stephen E Arnold, February 12, 2024

A Reminder: AI Winning Is Skewed to the Big Outfits

February 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have been commenting about the perception some companies have that AI start ups focusing on search will eventually reduce Google’s dominance. I understand the desire to see an underdog or a coalition of underdogs overcome a formidable opponent. Hollywood loves the unknown team which wins the championship. Movie goers root for an unlikely boxing unknown to win the famous champion’s belt. These wins do occur in real life. Some Googlers’ favorite sporting event is the NCAA tournament. That made-for-TV series features what are called Cinderella teams. (Will Walt Disney Co. sue if the subtitles for a game employ the word “Cinderella”? Sure, why not?)

I believe that for the next 24 to 36 months, Google will not lose its grip on search, its services, or online advertising. I admit that once one noses into 2028, more disruption will further destabilize Google. But for now, the Google is not going to be derailed unless an exogenous event ruins Googzilla’s habitat.

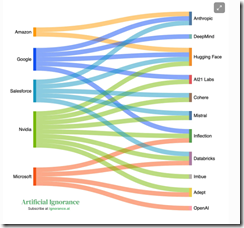

I want to direct attention to the essay “AI’s Massive Cash Needs Are Big Tech’s Chance to Own the Future.” The write up contains useful information about selected players in the artificial intelligence Monopoly game. I want to focus on one “worm” chart included in the essay:

Several things struck me:

- The major players are familiar; that is, Amazon, Google, Microsoft, Nvidia, and Salesforce. Notably absent are IBM, Meta, Chinese firms, Western European companies other than Mistral, and smaller outfits funded by venture capitalists relying on “open source AI solutions.”

- The five major companies in the chart are betting money on different roulette wheel numbers. VCs use the same logic by investing in a portfolio of opportunities and then pray to the MBA gods that one of these puppies pays off.

- The cross investments ensure that information leaks from the different color “worms” into the hills controlled by the big outfits. I am not using the collusion word or the intelligence word. I am just mentioning that information has a tendency to leak.

- Plumbing and associated infrastructure costs suggest that start ups may buy cloud services from the big outfits. Log files can be fascinating sources of information to the service providers’ engineers too.

My point is that smaller outfits are unlikely to be able to dislodge the big firms on the right side of the “worm” graph. The big outfits can, however, easily invest in, acquire, or learn from the smaller outfits listed on the left side of the graph.

Does a clever AI-infused search start up have a chance to become a big-time player? Sure, but I think it is more likely that once a smaller firm demonstrates some progress in a niche like Web search, a big outfit with cash will invest, duplicate, or acquire the feisty newcomer.

That’s why I am not counting on the Google to fall over dead in the next three years. I know my viewpoint is not one shared by some Web search outfits. That’s okay. Dinobabies often have different points of view.

Stephen E Arnold, February 8, 2024

Universities and Innovation: Clever Financial Plays May Help Big Companies, Not Students

February 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an interesting essay in The Economist (a newspaper to boot) titled “Universities Are Failing to Boost Economic Growth.” The write up contained some facts anchored in dinobaby time; for example, “In the 1960s the research and development (R&D) unit of DuPont, a chemicals company, published more articles in the Journal of the American Chemical Society than the Massachusetts Institute of Technology and Caltech combined.”

A successful academic who exists in a revolving door between successful corporate employment and prestigious academic positions innovates with [a] a YouTube program, [b] sponsors who manufacture interesting products, and [c] taking liberties with the idea of reproducible results from his or her research. Thanks, MSFT Copilot Bing thing. Getting more invasive today, right?

I did not know that. I recall, however, that my former boss at Booz, Allen & Hamilton in the mid-1970s had me and a couple of other compliant worker bees work on a project to update a big-time report about innovation. My recollection is that our interviews with universities were less productive than conversations held at a number of leading companies around the world. University research departments had yet to morph into what were later called “technology transfer departments.” Over the years, as the Economist newspaper points out:

The golden age of the corporate lab then came to an end when competition policy loosened in the 1970s and 1980s. At the same time, growth in university research convinced many bosses that they no longer needed to spend money on their own. Today only a few firms, in big tech and pharma, offer anything comparable to the DuPonts of the past.

The change, from my point of view, was that big companies could shift costs, outsource research, and cut themselves free from the wonky wizards that one could find wandering around the Cherry Hill Mall near the now-gone Bell Laboratories.

Thus, the schools became producers of innovation.

The Economist newspaper considers the question, “Why can’t big outfits surf on these university insights?” My question is, “Is the Economist newspaper overlooking the academic linkages that exist between the big companies producing lots of cash and a number of select universities?” IBM is proud to be camped out at MIT. Google operates two research annexes at Stanford University and the University of Washington. Even smaller companies have ties; for example, Megatrends is close to Indiana University by proximity and spiritually linked to a university in a country far away. Accidents? Nope.

The Economist newspaper is doing the Oxford debate thing: From a superior position, the observations are stentorious. The knife-like insights are crafted to cut those of lesser intellect down to size. Chop, slice, dice like a smart kitchen appliance.

I noted this passage:

Perhaps, with time, universities and the corporate sector will work together more profitably. Tighter competition policy could force businesses to behave a little more like they did in the post-war period, and beef up their internal research.

Is the Economist newspaper on the right track with these university R&D and corporate innovation arguments?

In a word, “Yep.”

Here’s my view:

- Universities teamed up with companies to get money in exchange for cheaper knowledge work subsidized by eager graduate students and PR-savvy departments.

- Companies used the tie ups to identify ideas with the potential for commercial application and the young at heart and generally naive students, faculty, and researchers as a recruiting short cut. (It is amazing what some PhDs would do for a mouse pad with a prized logo on it.)

- Researchers, graduate students, esteemed faculty, and probably motivated adjunct professors with some steady income after being terminated in a “real” job started making up data. (Yep, think about the bubbling scandals at Harvard University, for instance.)

- Universities embraced the idea that education is a business. Ah, those student loan plays were useful. Other outfits used the reputation to recruit students who would pay for the cost of a degree in cash. From what countries were these folks? That’s a bit of a demographic secret, isn’t it?

Where are we now? Spend some time with recent college graduates. That will answer the question, I believe. Innovation today is defined narrowly. A recent report from Google identified companies engaged in the development of mobile phone spyware. How many universities in Eastern Europe were on the Google list? Answer: Zero. How many companies and state-sponsored universities were on the list? Answer: Zero. How comprehensive was the listing of companies in Madrid, Spain? Answer: Incomplete.

I want to point out that educational institutions have quite distinct innovation fingerprints. The Economist newspaper does not recognize these differences. A small number of companies are engaged in big-time innovation while most are in the business of being cute or clever. The Economist does not pay much attention to this. The individuals, whether in an academic setting or in a corporate environment, are more than willing to make up data, surf on the work of other unacknowledged individuals, or suck up good ideas and information and then head back to a home country to enjoy a life better than some of their peers experience.

If we narrow the focus to the US, we have an unrecognized challenge — Dealing with shaped or synthetic information. In a broader context, the best instruction in certain disciplines is not in the US. One must look to other countries. In terms of successful companies, the financial rewards are shifting from innovation to me-too plays and old-fashioned monopolistic methods.

How do I know? Just ask a cashier (human, not robot) to make change without letting the cash register calculate what you will receive. Is there a fix? Sure, go for the next silver bullet solution. The method is working quite well for some. And what does “economic growth” mean? Defining terms can be helpful even to an Oxford Union influencer.

Stephen E Arnold, February 7, 2024

Education on the Cheap: No AI Required

January 26, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I don’t write about education too often. I do like to mention the plagiarizing methods of some academics. What fun! I located a true research gem (probably non-reproducible, hallucinogenic, or just synthetic but I don’t care). “Emergency-Hired Teachers Do Just as Well as Those Who Go Through Normal Training” states:

New research from Massachusetts and New Jersey suggests maybe not. In both states, teachers who entered the profession without completing the full requirements performed no worse than their normally trained peers.

A sanitation worker with a high school diploma is teaching advanced seventh graders about linear equations. The students are engaged… with their mobile phones. Hey, good enough, MSFT Copilot Bing thing. Good enough.

Then a modest question:

The better question now is why these temporary waivers aren’t being made permanent.

And what’s the write up say? I quote:

In other words, making it harder to become a teacher will reduce the supply but offers no guarantee that those who meet the bar will actually be effective in the classroom.

Huh?

Using people who slogged through college and learned something (one hopes) is expensive. Think of the cost savings when using those who are untrained and unencumbered with expectations of big money! When good enough is the benchmark of excellence, embrace those without a comprehensive four-year or more education. Ooops. Who wants that?

I thought that I once heard that the best, most educated teaching professionals should work with the youngest students. I must have been doing some of that AI-addled thinking common among some in the old age home. When’s lunch?

Stephen E Arnold, January 26, 2024

Fujitsu: Good Enough Software, Pretty Good Swizzling

January 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The USPS is often interesting. The UK’s postal system, however, is much worse. I think we can thank the public-private US postal construct for not screwing over those who manage branch offices. Computer Weekly details how the UK postal system’s leaders knowingly had an IT problem and blamed employees: “Fujitsu Bosses Knew About Post Office Horizon IT Flaws, Says Insider.”

The UK postal system used the Post Office Horizon IT system supplied by Fujitsu. The Fujitsu bosses knowingly allowed it to be installed despite massive problems. Hundreds of UK subpostmasters were accused of fraud and false accounting. They were held liable. Many were imprisoned, had their finances ruined, and lost jobs. Many of the UK subpostmasters fought the accusations. It wasn’t until 2019 that the UK High Court ruled that Horizon IT was at fault.

The Fujitsu team that “designed” the postal IT system lacked the right education and experience for the project. The system did not properly record and process payments. A developer on the project told Computer Weekly:

“To my knowledge, no one on the team had a computer science degree or any degree-level qualifications in the right field. They might have had lower-level qualifications or certifications, but none of them had any experience in big development projects, or knew how to do any of this stuff properly. They didn’t know how to do it.”

The Post Office Horizon IT system was the largest commercial system in Europe, and it didn’t work. The software was bloated, transcribed gibberish, and was held together with the digital equivalent of Scotch tape. This case is the largest miscarriage of justice in recent UK history. Thankfully the truth has come out and the subpostmasters will be compensated. The compensation doesn’t return stolen time, but it will ease their current burdens.

Fujitsu is getting some scrutiny. Does the company manufacture grocery self check out stations? If so, more outstanding work.

Whitney Grace, January 25, 2024

Cyber Security Investing: A Money Pit?

January 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Cyber security is a winner, a sure-fire way to take home the big bucks. Slam dunk. But the write up “Cybersecurity Startup Funding Hits 5-Year Low, Drops 50% from 2022” may signal that some money people have a fear of what might be called a money pit. The write up states:

In 2023, cyber startups saw only about a third of that, as venture funding dipped to its lowest total since 2018. Security companies raised $8.2 billion in 692 venture capital deals last year — per Crunchbase numbers — compared to $16.3 billion in 941 deals in 2022.

Have investors in cyber security changed their view of a slam-dunk investment? That winning hoop now looks like a stinking money pit perhaps? Thanks, MSFT Copilot Bing thing with security to boot. Good enough.

Let’s believe these data, which are close enough for horseshoes. I also noted this passage:

“What we saw in terms of cybersecurity funding in 2023 were the ramifications of the exceptional surge of 2021, with bloated valuations and off-the-charts funding rounds, as well as the wariness of investors in light of market conditions,” said Ofer Schreiber, senior partner and head of the Israel office for cyber venture firm YL Ventures.

The reference to Israel is bittersweet. The Israeli cyber defenses failed to detect, alert, and thus protect those who were in harm’s way in October 2023. How, you might ask, when Israel is the go-to innovator in cyber security? Maybe the over-hyped, super-duper, AI-infused systems don’t work as well as the marketers’ promotional materials assert? Just a thought.

My views:

- Cyber security is difficult; consider Microsoft’s announcement that the Son of SolarWinds has shown up inside the Softies’ email

- Bad actors can use AI faster than cyber security firms can, and they can make the smart software avoid being dumb

- Cyber security requires ever-increasing investments because the cat-and-mouse game between good actors and bad actors is a variant of the cheerful 1950s arms race.

Do you feel secure with your mobile, your laptop, and your other computing devices? Do you scan QR codes in restaurants without wondering if the code is sandbagged? Are you an avid downloader? I don’t want to know, but you may want answers.

Stephen E Arnold, January 22, 2024

Two Surveys. One Message. Too Bad

January 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I read “Generative Artificial Intelligence Will Lead to Job Cuts This Year, CEOs Say.” The data come from a consulting/accounting outfit’s survey of executives at the oh-so-exclusive World Economic Forum meeting in the Piscataway, New Jersey, of Switzerland. The company running the survey is PwC (once an acronym for PricewaterhouseCoopers). The moniker has embraced a number of interesting investigations.

Survey says, “Economic gain is the meaning of life.” Thanks, MidJourney, good enough.

The big finding from my point of view is:

A quarter of global chief executives expect the deployment of generative artificial intelligence to lead to headcount reductions of at least 5 per cent this year

Good, reassuring number from big-gun world leaders.

However, the International Monetary Fund also did a survey. The percentage of jobs affected ranges from 26 percent in low-income countries to 40 percent in emerging markets and 60 percent in advanced economies.

What can one make of these numbers, specifically the gap between 5 percent and 60 percent? My team’s thoughts are:

- The gap is interesting, but the CEOs appear to be downplaying the issue, displaying PR output, or working to avoid getting caught on a sticky wicket.

- The methodology and the sample of each survey are different, but both are skewed. The IMF taps analysts, bankers, and politicians. PwC goes to those who are prospects for PwC professional services.

- Each survey suggests that government efforts to manage smart software are likely to be futile. On one hand, CEOs will say, “No big deal,” and some will point to the PwC survey and say, “Here’s proof.” On the other hand, the financial types will hold up the IMF results and say, “We need to move fast or we risk losing out on the efficiency payback.”

What does Bill Gates think about smart software? In “Microsoft Co-Founder Bill Gates on AI’s Impact on Jobs: It’s Great for White-Collar Workers, Coders,” the genius for our time says:

I have found it’s a real productivity increase. Likewise, for coders, you’re seeing 40%, 50% productivity improvements which means you can get programs [done] sooner. You can make them higher quality and make them better. So mostly what we’ll see is that the productivity of white-collar [workers] will go up.

Happy days for sure! What’s next? Smart software will move forward. Potential payouts are too juicy. The World Economic Forum and the IMF share one core tenet: Money. (Tip: Be young.)

Stephen E Arnold, January 17, 2024