Is YouTube Marching Toward Its Waterloo?

November 28, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I have limited knowledge of the craft of warfare. I do have a hazy recollection that Napoleon found himself at the wrong end of a pointy stick at the Battle of Waterloo. I do recall that Napoleon lost the battle and experienced the domino effect which knocked him down a notch or two. He ended up on the island of Saint Helena in the south Atlantic Ocean with Africa a short 1,200 miles to the east. But Nappy had no mobile phone, no yacht purchased with laundered money, and no Internet. Losing has its downsides. Bummer. No empire.

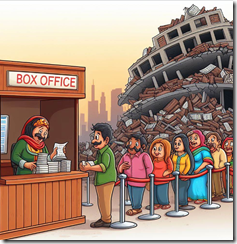

I thought about Napoleon when I read “YouTube’s Ad Blocker Crackdown Heats Up.” The question I posed to myself was, “Is the YouTube push for subscription revenue and unfettered YouTube user data collection a road to Google’s Battle of Waterloo?”

Thanks, MSFT Copilot. You have a knack for capturing the essence of a loser. I love good enough illustrations too.

The cited article from Channel News reports:

YouTube is taking a new approach to its crackdown on ad-blockers by delaying the start of videos for users attempting to avoid ads. There were also complaints by various X (formerly Twitter) users who said that YouTube would not even let a video play until the ad blocker was disabled or the user purchased a YouTube Premium subscription. Instead of an ad, some sources using Firefox and Edge browsers have reported waiting around five seconds before the video launches the content. According to users, the Chrome browser, which the streaming giant shares an owner with, remains unaffected.

If the information is accurate, Google is taking steps to damage what the firm has called the “user experience.” The idea is that users who want to watch “free” videos have a choice:

- Put up with delays, pop ups, and mindless appeals to pay Google to show videos from people who may or may not be compensated by the Google

- Just fork over a credit card and let Google collect about $150 per year until the rates go up. (The cable TV and mobile phone billing model is alive and well in the Google ecosystem.)

- Experiment with advertisement blocking technology and accept the risk of being banned from Google services

- Learn to love TikTok, Instagram, DailyMotion, and Bitchute, among other options available to a penny-conscious consumer of user-produced content

- Quit YouTube and new-form video. Buy a book.

What happened to Napoleon before the really great decision to fight Wellington in a lovely part of Belgium? Waterloo is about nine miles south of the wonderful, diverse city of Brussels. Napoleon did not have a drone to send images of the rolling farmland, where the “enemies” were located, or the availability of something behind which to hide. Despite Nappy’s fine experience in his march to Russia, he muddled forward. Despite allegedly having said, “The right information is nine-tenths of every battle,” the Emperor entered battle, suffered 40,000 casualties, and ended up in what is today a bit of a tourist hot spot. In 1816, it was somewhat less enticing. Ordering troops to charge uphill against a septuagenarian’s forces was arguably as stupid as walking to Russia as snowflakes began to fall.

How does this Waterloo relate to the YouTube fight now underway? I see several parallels:

- Google’s senior managers, informed with the management lore of 25 years of unfettered operation, know that users can be knocked along a path of the firm’s choice. Think sheep. But sheep can be disorderly. One must watch sheep.

- The need to stem the rupturing of cash required to operate a massive “free” video service is another one of those Code Yellow and Code Red events for the company. With search known to be under threat from Sam AI-Man and the specters of “findability” AI apps, the loss of traffic could be catastrophic. Despite Google’s financial fancy dancing, costs are a bit of a challenge: New hardware costs money, options like making one’s own chips cost money, allegedly smart people cost money, marketing costs money, legal fees cost money, and maintaining the once-free SEO ad sales force costs money. Got the message? Expenses are a problem for the Google in my opinion.

- The threat of either TikTok or Instagram going long form remains. If these two outfits don’t make a move on YouTube, there will be some innovator who will. The price of “move fast and break things” means that the Google can be broken by an AI surfer. My team’s analysis suggests it is more brittle today than at any previous point in its history. The legal dust up with Yahoo about the Overture / GoTo issue was trivial compared to the cost control challenge and the AI threat. That’s a one-two for the Google management wizards to solve. Making sense of the Critique of Pure Reason is a much easier task in my view.

The cited article includes a statement which is likely to make some YouTube users uncomfortable. Here’s the statement:

Like other streaming giants, YouTube is raising its rates with the Premium price going up to $13.99 in the U.S., but users may have to shell out the money, and even if they do, they may not be completely free of ads.

What does this mean? My interpretation is that [a] even if a user pays, that user may see ads; that is, paying does not eliminate ads in perpetuity; and [b] the fee is not permanent; that is, Google can increase it at any time.

Several observations:

- Google faces high-cost issues from different points of the business compass: Legal in the US and EU, commercial from known competitors like TikTok and Instagram, and psychological from innovators who find a way to use smart software to deliver a more compelling video experience for today’s users. These costs are not measured solely in financial terms. There is also the mental stress of wondering what will percolate from the seething mass of AI entrepreneurs. Nappy did not sleep too well after Waterloo. Too much Beef Wellington, perhaps?

- Google’s management methods have proven appropriate for generating revenue from an ad model in which Google controls the billing touch points. When those management techniques are applied to non-controllable functions, they fail. The hallmark of the management misstep is the handling of Dr. Timnit Gebru, a squeaky wheel in the Google AI content marketing machine. There is nothing quite like stifling a dissenting voice, the squawk of a parrot, and a don’t-let-the-door-hit-you-when-you-leave moment.

- The post-Covid, continuous warfare, and unsteady economic environment is causing the social fabric to fray and in some cases tear. This means that users may become contentious and become receptive to a spontaneous flash mob action toward Google and YouTube. Handling a user revolt at scale is not something in which Google has demonstrated a core competence.

Net net: I will get my microwave popcorn and watch this real-time Google Boogaloo unfold. Will a recipe become famous? How about Grilled Google en Croute?

Stephen E Arnold, November 28, 2023

Maybe the OpenAI Chaos Ended Up as Grand Slam Marketing?

November 28, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Yep, Q Star. The next Big Thing. “About That OpenAI Breakthrough” explains

OpenAI could in fact have a breakthrough that fundamentally changes the world. But “breakthroughs” rarely turn to be general to live up to initial rosy expectations. Often advances work in some contexts, not otherwise.

I agree, but I have a slightly different view of the matter. OpenAI’s chaotic management skills ended up as accidental great marketing. During the dust up and dust settlement, where were the other Big Dogs of the techno-feudal world? If you said, who? you are on the same page with me. OpenAI burned itself into the minds of those who sort of care about AI and the end of the world Terminator style.

In companies and organizations with “do gooder” tendencies, the marketing messages can be interpreted by some as a scientific fact. Nope. Thanks, MSFT Copilot. Are you infringing and expecting me to take the fall?

First, shotgun marriages can work out here in rural Kentucky. But more often than not, these unions become the seeds of Hatfield and McCoy-type Thanksgivings. “Grandpa, don’t shoot the turkey with birdshot. Granny broke a tooth last year.” Translating from Kentucky argot: Ideological divides produce craziness. The OpenAI mini-series is in its first season and there is more to come from the wacky innovators.

Second, any publicity is good publicity in Sillycon Valley. Who has given a thought to Google’s smart software? How did Microsoft’s stock perform during the five day mini-series? What is the new Board of Directors going to do to manage the bucking broncos of breakthroughs? Talk about dominating the “conversation.” Hats off to the fun crowd at OpenAI. Hey, Google, are you there?

Third, how is that regulation of smart software coming along? I think one unit of the US government is making noises about the biggest large language model ever. The EU folks continue to discuss, a skill essential to representing the interests of the group. Countries like China are chugging along, happily downloading code from open source repositories. So exactly what’s changed?

Net net: The OpenAI has been a click champ. Good, bad, or indifferent, other AI outfits have some marketing to do in the wake of the blockbuster “Sam AI-Man: The Next Bigger Thing.” One way or another, Sam AI-Man dominates headlines, right Zuck, right Sundar?

Stephen E Arnold, November 28, 2023

Predicting the Weather: Another Stuffed Turkey from Google DeepMind?

November 27, 2023

This essay is the work of a dumb dinobaby. No smart software required.

By accident or design, the adolescents at OpenAI have dominated headlines for the pre-turkey, the turkey, and the post-turkey celebrations. In the midst of this surge in poohbah outputs, Xhitter xheets, and podcast posts, non-OpenAI news has been struggling for a toehold.

An important AI announcement from Google DeepMind stuns a small crowd. Were the attendees interested in predicting the weather or getting a free umbrella? Thanks, MSFT Copilot. Another good enough art work whose alleged copyright violations you want me to determine. How exactly am I to accomplish that? Use Google Bard?

What is another AI company to do?

A partial answer appears in “DeepMind AI Can Beat the Best Weather Forecasts. But There Is a Catch”. This is an article in the esteemed and rarely spoofed Nature Magazine. None of that Techmeme dominating blue link stuff. None of the influential technology reporters asserting, “I called it. I called it.” None of the eye wateringly dorky observations that OpenAI’s organizational structure was a problem. None of the “Satya Nadella learned about the ouster at the same time we did.” Nope. Nope. Nope.

What Nature provided is good, old-fashioned content marketing. The write up points out that DeepMind says that it has once again leapfrogged mere AI mortals. Like the quantum supremacy assertion, the Google can predict the weather. (My great grandmother made the same statement about The Farmer’s Almanac. She believed it. May she rest in peace.)

The estimable magazine reported in the midst of the OpenAI news making turkeyfest said:

To make a forecast, it uses real meteorological readings, taken from more than a million points around the planet at two given moments in time six hours apart, and predicts the weather six hours ahead. Those predictions can then be used as the inputs for another round, forecasting a further six hours into the future…. They [Googley DeepMind experts] say it beat the ECMWF’s “gold-standard” high-resolution forecast (HRES) by giving more accurate predictions on more than 90 per cent of tested data points. At some altitudes, this accuracy rose as high as 99.7 per cent.

No more ruined picnics. No weddings with bridesmaids’ shoes covered in mud. No more visibly weeping mothers because everyone is wet.
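For readers who want to see the loop the Nature passage describes, here is a minimal sketch: take two states six hours apart, predict the next one, then feed the prediction back in as input. Everything named below is an assumption for illustration. `toy_step` is a hypothetical linear extrapolation standing in for a learned model, and the two-number "state" stands in for readings from more than a million points. This is not DeepMind's code.

```python
from typing import Callable, List, Tuple

State = List[float]  # hypothetical stand-in for a gridded weather state


def toy_step(prev: State, curr: State) -> State:
    """Hypothetical placeholder model: extend the last six-hour change once more."""
    return [c + (c - p) for p, c in zip(prev, curr)]


def rollout(
    step: Callable[[State, State], State],
    prev: State,
    curr: State,
    n_steps: int,
    hours_per_step: int = 6,
) -> List[Tuple[int, State]]:
    """Apply `step` repeatedly, feeding each prediction back in as input."""
    forecasts = []
    for i in range(1, n_steps + 1):
        nxt = step(prev, curr)
        forecasts.append((i * hours_per_step, nxt))
        # Slide the two-state window forward: the prediction becomes an input.
        prev, curr = curr, nxt
    return forecasts


# Two readings six hours apart (made-up numbers), rolled forward three steps.
result = rollout(toy_step, [10.0, 20.0], [11.0, 19.0], n_steps=3)
for lead_hours, state in result:
    print(lead_hours, state)
```

Forty such steps cover 240 hours, which is 10 days. Because each round consumes the previous round's output, any error in an early step is carried into every later one.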

But Nature, to the disappointment of some PR professionals, presents an alternative viewpoint. What a bummer after all those meetings and presentations:

“You can have the best forecast model in the world, but if the public don’t trust you, and don’t act, then what’s the point?” [A statement attributed to Ian Renfrew at the University of East Anglia]

Several thoughts are in order:

- Didn’t IBM make a big deal about its super duper weather capabilities? It bought the Weather Channel too. But when the weather and customers got soaked, I think IBM folded its umbrella. Will Google have to emulate IBM’s behavior? I mean “the weather.” (Note: The owner of the IBM Weather Company is an outfit once alleged to have owned or been involved with the NSO Group.)

- Google appears to have convinced Nature to announce the quantum supremacy type breakthrough only to find that a professor from someplace called East Anglia did not purchase the rubber boots from the Google online store.

- The current edition of The Old Farmer’s Almanac is about US$9.00 on Amazon. That predictive marvel was endorsed by Gussie Arnold, born about 1835. We are not sure because my father’s records of the Arnold family were soaked by a sudden thunderstorm.

Just keep in mind that Google’s system can predict the weather 10 days ahead. Another quantum PR moment from the Google which was drowned out in the OpenAI tsunami.

Stephen E Arnold, November 27, 2023

Another Xoogler and More Process Insights

November 23, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Google employs many people. Over the last 25 years, quite a few Xooglers (former Google employees) are out and about. I find the essays by the verbal Xooglers interesting. “Reflecting on 18 Years at Google” contains several intriguing comments. Let me highlight a handful of these. You will want to read the entire Hixie article to get the context for the snips I have selected.

The first point I underlined with blushing pink marker was:

I found it quite frustrating how teams would be legitimately actively pursuing ideas that would be good for the world, without prioritizing short-term Google interests, only to be met with cynicism in the court of public opinion.

Old timers share stories about the golden past in the high-technology of online advertising. Thanks, Copilot, don’t overdo the schmaltz.

The “Google as a victim” is a notion not often discussed — except by some Xooglers. I recall a comment made to me by a seasoned manager at another firm, “Yes, I am paranoid. They are out to get me.” That comment may apply to some professionals at Google.

How about this passage?

My mandate was to do the best thing for the web, as whatever was good for the web would be good for Google (I was explicitly told to ignore Google’s interests).

The oft-repeated idea is that Google cares about its users and similar truisms are part of what I call the Google mythology. Intentionally, in my opinion, Google cultivates the “doing good” theme as part of its effort to distract observers from the actual engineering intent of the company. (You love those Google ads, don’t you?)

Google’s creative process is captured in this statement:

We essentially operated like a startup, discovering what we were building more than designing it.

I am not sure if this is part of Google’s effort to capture the “spirit” of the old-timey days of Bell Laboratories or an accurate representation of what Google’s directionless methods became over the years. What people “did” is clearly dissociated from the advertising mechanisms on which the oversized tires and chrome do-dads were created and bolted on the ageing vehicle.

And, finally, this statement:

It would require some shake-up at the top of the company, moving the center of power from the CFO’s office back to someone with a clear long-term vision for how to use Google’s extensive resources to deliver value to users.

What happened to the ideas of doing good and exploratory innovation?

Net net: Xooglers pine for the days of the digital gold rush. Googlers may not be aware of what the company is and does. That may be a good thing.

Stephen E Arnold, November 23, 2023

OpenAI: What about Uncertainty and Google DeepMind?

November 20, 2023

This essay is the work of a dumb dinobaby. No smart software required.

A large number of write ups about Microsoft and its response to the OpenAI management move populate my inbox this morning (Monday, November 20, 2023).

To give you a sense of the number of poohbahs, mavens, and “real” journalists covering Microsoft’s hiring of Sam (AI-Man) Altman, I offer this screen shot of Techmeme.com taken at 11:00 am US Eastern time:

A single screenshot cannot do justice to the digital bloviating on this subject as well as related matters.

I did a quick scan because I simply don’t have the time at age 79 to read every item in this single headline service. Therefore, I admit that others may have thought about the impact of the Steve Jobs-like termination, the revolt of some AI wizards, and Microsoft’s creating a new “company” and hiring Sam AI-Man and a pride of his cohorts in the span of 72 hours (give or take time for biobreaks).

In this short essay, I want to hypothesize about how the news has been received by that merry band of online advertising professionals.

To begin, I want to suggest that the turmoil about who is on first at OpenAI sent a low voltage signal through the collective body of the Google. Frisson resulted. Uncertainty and opportunity appeared together like the beloved Scylla and Charybdis, the old pals of Ulysses. The Google found its right and left Brainiac hemispheres considering that OpenAI would experience a grave set back, thus clearing a path for Googzilla alone. Then one of the Brainiac hemispheres reconsidered and perceived a grave threat from the split. In short, the Google tipped into its zone of uncertainty.

A group of online advertising experts meet to consider the news that Microsoft has hired Sam Altman. The group looks unhappy. Uncertainty is an unpleasant factor in some business decisions. Thanks Microsoft Copilot, you captured the spirit of how some Silicon Valley wizards are reacting to the OpenAI turmoil because Microsoft used the OpenAI termination of Sam Altman as a way to gain the upper hand in the cloud and enterprise app AI sector.

Then the matter appeared to shift back to the pre-termination announcement. The co-founder of OpenAI gained more information about the number of OpenAI employees who were planning to quit or, even worse, start posting on Instagram, WhatsApp, and TikTok (X.com is no longer considered the go-to place by the in crowd).

The most interesting development was not that Sam AI-Man would return to the welcoming arms of Open AI. No, Sam AI-Man and another senior executive were going to hook up with the geniuses of Redmond. A new company would be formed with Sam AI-Man in charge.

As these actions unfolded, the Googlers sank under a heavy cloud of uncertainty. What if the Softies could use Google’s own open source methods, integrate rumored Microsoft-developed AI capabilities, and make good on Sam AI-Man’s vision of an AI application store?

The Googlers found themselves reading every “real news” item about the trajectory of Sam AI-Man and Microsoft’s new AI unit. The uncertainty has morphed into another January 2023 Davos moment. Here’s my take as of 2:30 pm US Eastern, November 20, 2023:

- The Google faces a significant threat when it comes to enterprise AI apps. Microsoft has a lock on law firms, the government, and a number of industry sectors. Google has a presence, but when it comes to go-to apps, Microsoft is the Big Dog. More and better AI raises the specter of Microsoft putting an effective laser defense behind its existing enterprise moat.

- Microsoft can push its AI functionality as the Azure difference. Furthermore, whether Google or Amazon for that matter assert their cloud AI is better, Microsoft can argue, “We’re better because we have Sam AI-Man.” That is a compelling argument for government and enterprise customers who cannot imagine work without Excel and PowerPoint. Put more AI in those apps, and existing customers will resist blandishments from other cloud providers.

- Google now faces an interesting problem: Its own open source code could be converted into a death ray, enhanced by Sam AI-Man, and directed at the Google. The irony of Googzilla having its left claw vaporized by its own technology is going to be more painful than Satya Nadella rolling out another Davos “we’re doing AI” announcement.

Net net: The OpenAI machinations are interesting to many companies. To the Google, the OpenAI event and the Microsoft response are like an unsuspecting person getting zapped by Nikola Tesla’s coil. Google’s mastery of high school science club management techniques will now dig into the heart of its DeepMind.

Stephen E Arnold, November 20, 2023

Google: Rock Solid Arguments or Fanciful Confections?

November 17, 2023

This essay is the work of a dumb humanoid. No smart software required.

I read some “real” news from a “real” newspaper. My belief is that a “real journalist”, an editor, and probably some supervisory body reviewed the write up. Therefore, by golly, the article is objective, clear, and actual factual. What does “What Google Argued to Defend Itself in Landmark Antitrust Trial” say?

“I say that my worthy opponent’s assertions are — ahem, harrumph — totally incorrect. I do, I say, I do offer that comment with the greatest respect. My competitors are intellectual giants compared to the regulators who struggle to use Google Maps on an iPhone,” opines a legal eagle who supports Google. Thanks, Microsoft Bing. You have the “chubby attorney” concept firmly in your digital grasp.

First, the write up says zero about the secrecy in which the case is wrapped. Second, it does not offer any comment about the amount the Google paid to be the default search engine other than offering the allegedly consumer-sensitive, routine, and completely logical fees Google paid. Hey, buying traffic is important, particularly for outfits accused of operating in a way that requires a US government action. Third, the support structure for the Google arguments is not evident. I could not discern the logical thread that linked the components presented in such lucid prose.

The pillars of the logical structure are:

- Appropriate payments for traffic; that is, the Google became the default search engine. Do users change defaults? Well, sure they do? If true, then why be the default in the first place? What are the choices? A Russian search engine, a Chinese search engine, a shadow of Google (Bing, I think), or a metasearch engine (little or no original indexing, just Vivisimo-inspired mash up results)? But pay the “appropriate” amount Google did.

- Google is not the only game in town. Nice terse statement of questionable accuracy. That’s my opinion which I articulated in the three monographs I wrote about Google.

- Google fosters competition. Okay, it sure does. Look at the many choices one has: Swisscows.com, Qwant.com, and the estimable Mojeek, among others.

- Google spends lots of money on helping people research to make “its product great.”

- Google’s innovations have helped people around the world?

- Google’s actions have been anticompetitive, but not too anticompetitive.

Well, I believe each of these assertions. Would a high school debater buy into the arguments? I know for a fact that my debate partner and I would not.

Stephen E Arnold, November 17, 2023

How Google Works: Think about Making Sausage in 4K on a Big Screen with Dolby Sound

November 16, 2023

This essay is the work of a dumb, dinobaby humanoid. No smart software required.

I love essays which provide a public glimpse of the way Google operates. An interesting insider description of the machinations of Googzilla’s lair appears in “What I Learned Getting Acquired by Google.” I am going to skip the “wow, the Google is great,” and focus on the juicy bits.

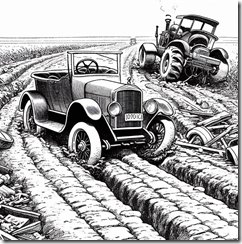

Driving innovation down Google’s Information Highway requires nerves of steel and the patience of Job. A good sense of humor, many brain cells, and a keen desire to make the techno-feudal system dominate are helpful as well. Thanks, Microsoft Bing. It only took four tries to get an illustration of vehicles without parts of each chopped off.

Here are the article’s “revelations.” It is almost like sitting in the Google cafeteria and listening to Tony Bennett croon. Alas, those days are gone, but the “best” parts of Google persist if the write up is on the money.

Let me highlight a handful of comments I found interesting and almost amusing:

- Google, according to the author, is “an ever shifting web of goals and efforts.” I think this means going in many directions at once. Chaos, not logic, drives the sports car down the Information Highway.

- Google has employees who want “to ship great work, but often couldn’t.” Wow, the Googley management method wastes resources and opportunities due to the Googley outfit’s penchant for being Googley. Yeah, Googley because lousy stuff is one output, not excellence. Isn’t this regressive innovation?

- There are lots of managers or what the author calls “top heavy.” But those at the top are well paid, so what’s the incentive to slim down? Answer: No reason.

- Google is like a teen with a credit card and no way to pay the bill. The debt just grows. That’s Google except it is racking up technical debt and process debt. That’s a one-two punch for sure.

- To win at Google, one must know which game to play, what the rules of that particular game are, and then have the Machiavellian qualities to win the darned game. What about caring for the users? What? The users! Get real.

- Google screws up its acquisitions. Of course. Any company Google buys is populated with people not smart enough to work at Google in the first place. “Real” Googlers can fix any acquisition. The technique was perfected years ago with Dodgeball. Hey, remember that?

Please, read the original essay. The illustration shows a very old vehicle trying to work its way down an information highway choked with mud, blocked by farm equipment, and located in an isolated fairy land. Yep, that’s the Google. What happens if the massive flows of money are reduced? Yikes!

Stephen E Arnold, November 16, 2023

Buy Google Traffic: Nah, Paying May Not Work

November 16, 2023

This essay is the work of a dumb humanoid. No smart software required.

Tucked into a write up about the less than public trial of the Google was an interesting factoid. The source of the item was “More from the US v Google Trial: Vertical Search, Pre-Installs and the Case of Firefox / Yahoo.” Here’s the snippet:

Expedia execs also testified about the cost of ads and how increases had no impact on search results. On October 19, Expedia’s former chief operating officer, Jeff Hurst, told the court the company’s ad fees increased tenfold from $21 million in 2015 to $290 million in 2019. And yet, Expedia’s traffic from Google did not increase. The implication was that this was due to direct competition from Google itself. Hurst pointed out that Google began sharing its own flight and hotel data in search results in that period, according to the Seattle Times.

“Yes, sir, you can buy a ticket and enjoy a ticket to our entertainment,” says the theater owner. The customer asks, “Is the theater in good repair?” The ticket seller replies, “Of course, you get your money’s worth at our establishment. Next.” Thanks, Microsoft Bing. It took several tries before I gave up.

I am a dinobaby, and I am, by definition, hopelessly out of it. However, I interpret this passage in this way:

- Despite protestations about the Google algorithm’s objectivity, Google has knobs and dials it can use to cause the “objective” algorithm to be just a teenie weenie less objective. Is this a surprise? Not to me. Who builds a system without a mechanism for controlling what it does? My favorite example of this steering involves the original FirstGov.gov search system circa 2000. After Mr. Clinton left office, a former Halliburton executive in the new administration wanted a certain Web page result to appear when certain terms were searched. No problemo. Why? Who builds a system one cannot control? Not me. My hunch is that Google may have a similar affection for knobs and dials.

- Expedia learned that buying advertising from a competitor (Google) was expensive and then got more expensive. The jump from $21 million to $290 million is modest from the point of view of some technology feudalists. To others the increase is stunning.

- Paying more money did not result in an increase in clicks or traffic. Again I was not surprised. What caught my attention is that it has taken decades for others to figure out how the digital highway men came riding like a wolf on the fold. Instead of being bedecked with silver and gold, these actors wore those cheerful kindergarten colors. Oh, those colors are childish but those wearing them carried away the silver and gold it seems.
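The Expedia figures in the second bullet are easy to check with back-of-the-envelope arithmetic. The sketch below uses only the two dollar amounts and the two years from the quoted passage. The annualized growth rate is my own framing, not something the testimony stated, and it assumes smooth compounding over the four years.

```python
# Figures as quoted from the testimony: ad fees in 2015 and in 2019.
fees_2015 = 21_000_000
fees_2019 = 290_000_000

# How many times larger was the 2019 bill than the 2015 bill?
multiple = fees_2019 / fees_2015

# Assumption: smooth compounding over the four years between the two figures.
years = 2019 - 2015
annual_growth = multiple ** (1 / years) - 1

print(f"multiple: {multiple:.1f}x")
print(f"implied annual growth: {annual_growth:.0%}")
```

The multiple comes out at roughly 13.8, so the “tenfold” in the quoted passage understates the jump, and under the compounding assumption the bill nearly doubled every year while traffic from Google stayed flat.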

Net net: Why is this US v Google trial not more public? Why so many documents withheld? Why is redaction the best billing tactic of 2023? So many questions that this dinobaby cannot answer. I want to go for a ride in the Brin-A-Loon too. I am a simple dinobaby.

Stephen E Arnold, November 16, 2023

An Odd Couple Sharing a Soda at a Holiday Data Lake

November 16, 2023

What happens when love strikes the senior managers of the technology feudal lords? I will tell you what happens — Love happens. The proof appears in “Microsoft and Google Join Forces on OneTable, an Open-Source Solution for Data Lake Challenges.” Yes, the lakes around Redmond can be a challenge. For those living near Googzilla’s stomping grounds, the risk is that a rising sea level will nuke the outdoor recreation areas and flood the parking lots.

But any speed dating between two techno feudalists is news. The “real news” outfit Venture Beat reports:

In a new open-source partnership development effort announced today, Microsoft is joining with Google and Onehouse in supporting the OneTable project, which could reshape the cloud data lake landscape for years to come

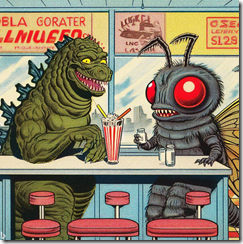

And what does “reshape” mean to these outfits? Probably nothing more than making sure that Googzilla and Mothra become the suppliers to those who want to vacation at the data lake. Come to think of it. The concessions might be attractive as well.

Googzilla says to Mothra-Soft, a beast living in Mercer Island, “I know you live on the lake. It’s a swell nesting place. I think we should hook up and cooperate. We can share the money from merged data transfers the way you and I — you good looking Lepidoptera — are sharing this malted milk. Let’s do more together if you know what I mean.” The delightful Mothra-Soft croons, “I thought you would wait until our high school reunion to ask, big boy. Let’s find a nice, moist, uncrowded place to consummate our open source deal, handsome.” Thanks, Microsoft Bing. You did a great job of depicting a senior manager from the company that developed Bob, the revolutionary interface.

The article continues:

The ability to enable interoperability across formats is critical for Google as it expands the availability of its BigQuery Omni data analytics technology. Kazmaier said that Omni basically extends BigQuery to AWS and Microsoft Azure and it’s a service that has been growing rapidly. As organizations look to do data processing and analytics across clouds there can be different formats and a frequent question that is asked is how can the data landscape be interconnected and how can potential fragmentation be stopped.

Is this alleged linkage important? Yeah, it is. Data lakes are great places to park AI training data. Imagine the intelligence one can glean monitoring inflows and outflows of bits. To make the idea more interesting think in terms of the metadata. Exciting because open source software is really for the little guys too.

Stephen E Arnold, November 16, 2023

Using Smart Software to Make Google Search Less Awful

November 16, 2023

This essay is the work of a dumb humanoid. No smart software required.

Here’s a quick tip: to get useful results from Google Search, use a competitor’s software. Digital Digging blogger Henk van Ess describes “How to Teach ChatGPT to Come Up with Google Formulas.” Specifically, van Ess needed to include foreign-language results in his queries while narrowing results to certain time frames. These are not parameters Google handles well on its own. It was ChatGPT to the rescue, after some tinkering anyway. He describes an example search goal:

“Find any official document about carbon dioxide reduction from Greek companies, anything from March 24, 2020 to December 21, 2020 will do. Hey, can you search that in Greek, please? Tough question right? Time to fire up Bing or ChatGPT. Round 1 in #chatgpt has a terrible outcome.”

But of course, van Ess did not stop there. For the technical details on the resulting “ball of yarn,” how van Ess resolved it, and how it can be extrapolated to other use cases, navigate to the write-up. One must bother to learn how to write effective prompts to get these results, but van Ess insists it is worth the effort. The post observes:

“The good news is: you only have to do it once for each of your favorite queries. Set and forget, as you just saw I used the same formulae for Greek CO2 and Japanese EV’s. The advantage of natural language processing tools like ChatGPT is that they can help you generate more accurate and relevant search queries in a faster and more efficient way than manually typing in long and complex queries into search engines like Google. By using natural language processing tools to refine and optimize your search queries, you can avoid falling into ‘rabbit holes’ of irrelevant or inaccurate results and get the information you need more quickly and easily.”

Google is currently rolling out its own AI search “experience” in phases around the world. Will it improve results, or will one still be better off employing third-party hacks?

Cynthia Murrell, November 16, 2023