Cyber Security Responsibility: Where It Belongs at Last!

December 5, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I want to keep this item brief. Navigate to “CISA’s Goldstein Wants to Ditch ‘Patch Faster, Fix Faster’ Model.”

CISA means the US government’s Cybersecurity and Infrastructure Security Agency. The “Goldstein” reference points to Eric Goldstein, the executive assistant director of CISA.

The main point of the write up is that big technology companies have to be responsible for cleaning up their cyber security messes. The write up reports:

Goldstein said that CISA is calling on technology providers to “take accountability” for the security of their customers by doing things like enabling default security controls such as multi-factor authentication, making security logs available, using secure development practices and embracing memory safe languages such as Rust.

I may be incorrect, but I picked up a signal that the priorities of some techno feudalists are not security. Perhaps these firms’ goals are maximizing profit, market share, and power over their paying customers. Security? Maybe it is easier to describe in a slide deck or a short YouTube video?

The use of a parental mode seems appropriate for a child? Will it work for techno feudalists who have created a digital mess in kitchens throughout the world? Thanks, MSFT Copilot. You must have ingested some “angry mommy” data when you were but a wee sprout.

Will this approach improve the security of mission-critical systems? Will the enjoinder make a consumer’s mobile phone more secure?

My answer? Without meaningful consequences, security is easier to talk about than deliver. Therefore, minimal change in the near future. I wish I were wrong.

Stephen E Arnold, December 5, 2023

India Might Not Buy the User-Is-Responsible Argument

November 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

India’s elected officials seem to be agitated about deep fakes. No, it is not the disclosure that a company in Spain is collecting $10,000 a month or more from a fake influencer named Aitana López. (Some in India may be following the deeply faked bimbo, but I would assert that not too many elected officials will admit to their interest in the digital dream boat.)

US News & World Report recycled a Reuters (the trust outfit) story “India Warns Facebook, YouTube to Enforce Rules to Deter Deepfakes - Sources” and asserted:

India’s government on Friday warned social media firms including Facebook and YouTube to repeatedly remind users that local laws prohibit them from posting deepfakes and content that spreads obscenity or misinformation

“I know you and the rest of the science club are causing problems with our school announcement system. You have to stop it, or I will not recommend you or any science club member for the National Honor Society.” The young wizard says, “I am very, very sorry. Neither I nor my friends will play rock and roll music during the morning announcements. I promise.” Thanks, MidJourney. Not great but at least you produced an image which is more than I can say for the MSFT Copilot Bing thing.

What’s notable is that the government of India is not focusing on the user of deep fake technology. India has US companies in its headlights. The news story continues:

India’s IT ministry said in a press statement all platforms had agreed to align their content guidelines with government rules.

Amazing. The US techno-feudalists are rolling over. I am someone who wonders, “Will these US companies bend a knee to India’s government?” I have zero inside information about either India or the US techno-feudalists, but I have a recollection that US companies:

- Do what they want to do and then go to court. If they win, they don’t change. If they lose, they pay the fine and they do some fancy dancing.

- Go to a meeting and output vague assurances prefaced by “Thank you for that question.” The companies may do a quick paso double and continue with business pretty much as usual.

- Just comply. As Canada has learned, Facebook’s response to the Canadian news edict was simple: No news for Canada. To make the situation more annoying to a real government, other techno-feudalists hopped on Facebook’s better idea.

- Ignore the edict. If summoned to a meeting or hit with a legal notice, companies will respond with flights of legal eagles with some simple messages; for example, no more support for your law enforcement professionals or your intelligence professionals. (This is a hypothetical example only, so don’t develop the shingles, please.)

Net net: Techno-feudalists have to decide: Roll over, ignore, or go to “war.”

Stephen E Arnold, November 29, 2023

Governments Tip Toe As OpenAI Sprints: A Story of the Turtles and the Rabbits

November 27, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Reuters has reported that a pride of lion-hearted countries have crafted “joint guidelines” for systems with artificial intelligence. I am not exactly sure what “artificial intelligence” means, but I have confidence that a group of countries, officials, advisors, and consultants do.

The main point of the news story “US, Britain, Other Countries Ink Agreement to Make AI Secure by Design” is that someone in these countries knows what “secure by design” means. You may not have noticed that cyber breaches seem to be chugging right along. Maine managed to lose control of most of its residents’ personally identifiable information. I won’t mention issues associated with Progress Software, Microsoft systems, and LY Corp and its messaging app with a mere 400,000 users.

The turtle started but the rabbit reacted. Now which AI enthusiast will win the race down the corridor between supercomputers powering smart software? Thanks, MSFT Copilot. It took several tries, but you delivered a good enough image.

The Reuters story notes with the sincerity of an outfit focused on trust:

The agreement is the latest in a series of initiatives – few of which carry teeth – by governments around the world to shape the development of AI, whose weight is increasingly being felt in industry and society at large.

Yep, “teeth.”

At the same time, Sam AI-Man was moving forward with such mouth-watering initiatives as the AI app store and discussions to create AI-centric hardware. “I Guess We’ll Just Have to Trust This Guy, Huh?” asserts:

But it is clear who won (Altman) and which ideological vision (regular capitalism, instead of some earthy, restrained ideal of ethical capitalism) will carry the day. If Altman’s camp is right, then the makers of ChatGPT will innovate more and more until they’ve brought to light A.I. innovations we haven’t thought of yet.

As the signatories to the agreement without “teeth” and Sam AI-Man were doing their respective “thing,” I noted the AP story titled “Pentagon’s AI Initiatives Accelerate Hard Decisions on Lethal Autonomous Weapons.” That write up reported:

… the Pentagon is intent on fielding multiple thousands of relatively inexpensive, expendable AI-enabled autonomous vehicles by 2026 to keep pace with China.

To deal with the AI challenge, the AP story includes this paragraph:

The Pentagon’s portfolio boasts more than 800 AI-related unclassified projects, much still in testing. Typically, machine-learning and neural networks are helping humans gain insights and create efficiencies.

Will the signatories to the “secure by design” agreement act like tortoises or like zippy hares? I know which beastie I would bet on. Will military entities back the slow or the fast AI faction? I know upon which I would wager fifty cents.

Stephen E Arnold, November 27, 2023

Poli Sci and AI: Smart Software Boosts Bad Actors (No Kidding?)

November 22, 2023

This essay is the work of a dumb humanoid. No smart software required.

Smart software (AI, machine learning, et al) has sparked awareness in some political scientists. Until I read “Can Chatbots Help You Build a Bioweapon?” I thought political scientists were still pondering Frederick William, Elector of Brandenburg’s social policies or Cambodian law in the 11th century. I was incorrect. Modern poli sci influenced wonks are starting to wrestle with the immense potential of smart software for bad actors. I think this dispersal of the cloud of unknowing I perceived among a similar academic group when I entered a third-rate university in 1962 is a step forward. Ah, progress!

“Did you hear that the Senate Committee used my testimony about artificial intelligence in their draft regulations for chatbot rules and regulations?” says the recently admitted elected official. The inmates at the prison facility laugh at the incongruity of the situation. Thanks, Microsoft Bing, you do understand the ways of white collar influence peddling, don’t you?

The write up points out:

As policymakers consider the United States’ broader biosecurity and biotechnology goals, it will be important to understand that scientific knowledge is already readily accessible with or without a chatbot.

The statement is indeed accurate. Outside the esteemed halls of foreign policy power, STM (scientific, technical, and medical) information is abundant. Some of the data are online and reasonably easy to find with such advanced tools as Yandex.com (a Russian centric Web search system) or the more useful Chemical Abstracts data.

The write up’s revelations continue:

Consider the fact that high school biology students, congressional staffers, and middle-school summer campers already have hands-on experience genetically engineering bacteria. A budding scientist can use the internet to find all-encompassing resources.

Yes, more intellectual sunlight in the poli sci journal of record!

Let me offer one more example of ground breaking insight:

In other words, a chatbot that lowers the information barrier should be seen as more like helping a user step over a curb than helping one scale an otherwise unsurmountable wall. Even so, it’s reasonable to worry that this extra help might make the difference for some malicious actors. What’s more, the simple perception that a chatbot can act as a biological assistant may be enough to attract and engage new actors, regardless of how widespread the information was to begin with.

Is there a step government deciders should take? Of course. It is the step that US high technology companies have been begging bureaucrats to take. Government should spell out rules for a morphing, little understood, and essentially uncontrollable suite of systems and methods.

There is nothing like regulating the present and future. Poli sci professionals believe it is possible to repaint the weird red tail on the Boeing F 7A aircraft while the jet is flying around. Trivial?

Here’s the recommendation which I found interesting:

Overemphasizing information security at the expense of innovation and economic advancement could have the unforeseen harmful side effect of derailing those efforts and their widespread benefits. Future biosecurity policy should balance the need for broad dissemination of science with guardrails against misuse, recognizing that people can gain scientific knowledge from high school classes and YouTube—not just from ChatGPT.

My take on this modest proposal is:

- Guard rails allow companies to pursue legal remedies as those companies do exactly what they want and when they want. Isn’t that why the Google “public” trial underway is essentially “secret”?

- Bad actors love open source tools. Unencumbered by bureaucracies, these folks can move quickly. In effect the mice are equipped with jet packs.

- Job matching services allow a bad actor in Greece or Hong Kong to identify and hire contract workers who may have highly specialized AI skills obtained doing their day jobs. The idea is that for a bargain price expertise is available to help smart software produce some AI infused surprises.

- Recycling the party line of a handful of high profile AI companies is what makes policy.

With poli sci professionals becoming aware of smart software, a better world will result. Why fret about livestock ownership in the glory days of what is now Cambodia? The AI stuff is here and now, waiting for the policy guidance which is sure to come even though the draft guidelines have been crafted by US AI companies?

Stephen E Arnold, November 22, 2023

EU Objects to Social Media: Again?

November 21, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Social media is something I observe at a distance. I want to highlight the information in “X Is the Biggest Source of Fake News and Disinformation, EU Warns.” Some Americans are not interested in what the European Union thinks, says, or regulates. On the other hand, the techno feudalistic outfits in the US of A do pay attention when the EU hands out reprimands, fines, and notices of auditions (not for the school play, of course).

This historic photograph shows a super smart, well paid, entitled entrepreneur letting the social media beast out of its box. Now how does this genius put the creature back in the box? Good questions. Thanks, MSFT Copilot. You balked, but finally output a good enough image.

The story in what I still think of as “the capitalist tool” states:

European Commission Vice President Vera Jourova said in prepared remarks that X had the “largest ratio of mis/disinformation posts” among the platforms that submitted reports to the EU. Especially worrisome is how quickly those spreading fake news are able to find an audience.

The Forbes article noted:

The social media platforms were seen to have turned a blind eye to the spread of fake news.

I found the inclusion of this statement a grim reminder of what happens when entities refuse to perform content moderation:

“Social networks are now tailor-made for disinformation, but much more should be done to prevent it from spreading widely,” noted Mollica [a teacher at American University]. “As we’ve seen, however, trending topics and algorithms monetize the negativity and anger. Until that practice is curbed, we’ll see disinformation continue to dominate feeds.”

What is Forbes implying? Is an American corporation a “bad” actor? Is the EU barking at a dogwood, not a dog? Is digital information reshaping how established processes work?

From my point of view, putting a decades old Pandora or passel of Pandoras back in a digital box is likely to be impossible. Once social fabrics have been disintegrated by massive flows of unfiltered information, the woulda, coulda, shoulda chatter is ineffectual. X marks the spot.

Stephen E Arnold, November 21, 2023

Why Suck Up Health Care Data? Maybe for Cyber Fraud?

November 20, 2023

This essay is the work of a dumb humanoid. No smart software required.

In the US, medical care is an adventure. Last year, my “wellness” check up required a visit to another specialist. I showed up at the appointed place on the day and time my printed form stipulated. I stood in line for 10 minutes as two “intake” professionals struggled to match those seeking examinations with the information available to the check in desk staff. The intake professional called my name and said, “You are not a female.” I said, “That is correct.” The intake professional replied, “We have the medical records from your primary care physician for a female named Tina.” Nice Health Insurance Portability and Accountability Act compliance, right?

A moose in Maine learns that its veterinary data have been compromised by bad actors, probably from a country in which the principal language is not moose grunts. With those data, the shocked moose can be located using geographic data in his health record. Plus, the moose’s credit card data is now on the loose. If the moose in Maine is scared, what about the humanoids with the fascinating nasal phonemes?

That same health care outfit reported that it was compromised and was a victim of a hacker. The health care outfit floundered around and now, months later, struggles to update prescriptions and keep appointments straight. How’s that for security? In my book, that’s about par for health care managers who [a] know zero about confidentiality requirements and [b] even less about system security. Horrified? You can read more about this one-horse travesty in “Norton Healthcare Cyber Attack Highlights Record Year for Data Breaches Nationwide.” I wonder if the grandparents of the Norton operation were participants on Major Bowes’ Amateur Hour radio show?

Norton Healthcare was a poster child for the Commonwealth of Kentucky. But the great state of Maine (yep, the one with moose, lovable black flies, and citizens who push New York real estate agents’ vehicles into bays) managed to lose the personal data for 2,192,515 people. You can read about that “minor” security glitch in the Office of the Maine Attorney General’s Data Breach Notification.

What possible use is health care data? Let me identify a handful of bad actor scenarios enabled by inept security practices. Note, please, that these are worse than being labeled a girl or failing to protect the personal information of what could be most of the humans and probably some of the moose in Maine.

- Identity theft. Those newborns and entries identified as deceased can be converted into some personas for a range of applications, like applying for Social Security numbers, passports, or government benefits.

- Access to bank accounts. With a complete array of information, a bad actor can engage in a number of maneuvers designed to withdraw or transfer funds.

- Bundle up the biological data and sell it via one of the private Telegram channels focused on such useful information. Bioweapon researchers could find some of the data fascinating.

Why am I focusing on health care data? Here are the reasons:

- Enforcement of existing security guidelines seems to be lax. Perhaps it is time to conduct audits and penalize those outfits which find security easy to talk about but difficult to do?

- Should one or more Inspector Generals’ offices conduct some data collection into the practices of state and Federal health care security professionals, their competencies, and their on-the-job performance? Some humans and probably a moose or two in Maine might find this idea timely.

- Should the vendors of health care security systems demonstrate to one of the numerous Federal cyber watch dog groups the efficacy of their systems and then allow one or more of the Federal agencies to probe those systems to verify that the systems do, in fact, actually work?

Without meaningful penalties for security failures, it may be easier to post health care data on a Wikipedia page and quit the crazy charade that health information is secure.

Stephen E Arnold, November 20, 2023

The Power of Regulation: Muscles MSFT Meets a Strict School Marm

November 17, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read “The EU Will Finally Free Windows Users from Bing.” The EU? That collection of fractious states which wrangle about irrelevant subjects; to wit, the antics of America’s techno-feudalists. Yep, that EU.

The “real news” write up reports:

Microsoft will soon let Windows 11 users in the European Economic Area (EEA) disable its Bing web search, remove Microsoft Edge, and even add custom web search providers — including Google if it’s willing to build one — into its Windows Search interface. All of these Windows 11 changes are part of key tweaks that Microsoft has to make to its operating system to comply with the European Commission’s Digital Markets Act, which comes into effect in March 2024

The article points out that the DMA includes a “slew” of other requirements. Please, do not confuse “slew” with “stew.” These are two different things.

The old fashioned high school teacher says to the high school super star, “I don’t care if you are an All-State football player, you will do exactly as I say. Do you understand?” The outsized scholar-athlete scowls and says, “Yes, Mrs. Ee-You. I will comply.” Thank you, MSFT Copilot. You converted the large company into an image I had of its business practices with aplomb.

Will Microsoft remove Bing — sorry, Copilot — from its software and services offered in the EU? My immediate reaction is that the Redmond crowd will find a way to make the magical software available. For example, will such options as legalese and a check box, a new name, a for fee service with explicit disclaimers and permissions, and probably more GenZ ideas foreign to me do the job?

The techno weight lifter should not be underestimated. Those muscles were developed moving bundles of money, not dumb “belles.”

Stephen E Arnold, November 17, 2023

Buy Google Traffic: Nah, Paying May Not Work

November 16, 2023

This essay is the work of a dumb humanoid. No smart software required.

Tucked into a write up about the less than public trial of the Google was an interesting factoid. The source of the item was “More from the US v Google Trial: Vertical Search, Pre-Installs and the Case of Firefox / Yahoo.” Here’s the snippet:

Expedia execs also testified about the cost of ads and how increases had no impact on search results. On October 19, Expedia’s former chief operating officer, Jeff Hurst, told the court the company’s ad fees increased tenfold from $21 million in 2015 to $290 million in 2019. And yet, Expedia’s traffic from Google did not increase. The implication was that this was due to direct competition from Google itself. Hurst pointed out that Google began sharing its own flight and hotel data in search results in that period, according to the Seattle Times.

“Yes, sir, you can buy a ticket and enjoy a ticket to our entertainment,” says the theater owner. The customer asks, “Is the theater in good repair?” The ticket seller replies, “Of course, you get your money’s worth at our establishment. Next.” Thanks, Microsoft Bing. It took several tries before I gave up.

I am a dinobaby, and I am, by definition, hopelessly out of it. However, I interpret this passage in this way:

- Despite protestations about the Google algorithm’s objectivity, Google has knobs and dials it can use to cause the “objective” algorithm to be just a teenie weenie less objective. Is this a surprise? Not to me. Who builds a system without a mechanism for controlling what it does? My favorite example of this steering involves the original FirstGov.gov search system circa 2000. After Mr. Clinton left office, a former Halliburton executive in the new administration wanted a certain Web page result to appear when certain terms were searched. No problemo. Why? Who builds a system one cannot control? Not me. My hunch is that Google may have a similar affection for knobs and dials.

- Expedia learned that buying advertising from a competitor (Google) was expensive and then got more expensive. The jump from $21 million to $290 million is modest from the point of view of some technology feudalists. To others the increase is stunning.

- Paying more money did not result in an increase in clicks or traffic. Again I was not surprised. What caught my attention is that it has taken decades for others to figure out how the digital highway men came riding like a wolf on the fold. Instead of being bedecked with silver and gold, these actors wore those cheerful kindergarten colors. Oh, those colors are childish but those wearing them carried away the silver and gold it seems.

Net net: Why is this US v Google trial not more public? Why so many documents withheld? Why is redaction the best billing tactic of 2023? So many questions that this dinobaby cannot answer. I want to go for a ride in the Brin-A-Loon too. I am a simple dinobaby.

Stephen E Arnold, November 16, 2023

Google Apple: These Folks Like Geniuses and Numbers in the 30s

November 13, 2023

This essay is the work of a dumb humanoid. No smart software required.

The New York Post published a story which may or may not be on the money. I would suggest that the odds of it being accurate are in the 30 percent range. In fact, 30 percent is emerging as a favorite number. Apple, for instance, imposes what some have called a 30 percent “Apple tax.” Don’t get me wrong. Apple is just trying to squeak by in a tough economy. I love the connector on the MacBook Air which is unlike any Apple connector in my collection. And the $130 USB cable? Brilliant.

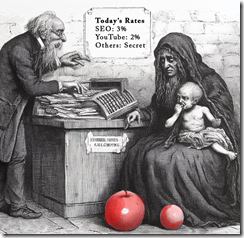

The poor Widow Apple is pleading with the Bank of Googzilla for a more favorable commission. The friendly bean counter is not willing to pay more than one third of the cash take. “I want to pay you more, but hard times are upon us, Widow Apple. Might we agree on a slightly higher number?” The poor Widow Apple sniffs and nods her head in agreement as the frail child Mac Air the Third whimpers.

The write up which has me tangled in 30s is “Google Witness Accidentally Reveals Company Pays Apple 36% of Search Ad Revenue.” I was enthralled with the idea that a Google witness could do something by accident. I assumed Google witnesses were in sync with the giant, user centric online advertising outfit.

The write up states:

Google pays Apple a 36% share of search advertising revenue generated through its Safari browser, one of the tech giant’s witnesses accidentally revealed in a bombshell moment during the Justice Department’s landmark antitrust trial on Monday. The flub was made by Kevin Murphy, a University of Chicago economist and the final witness expected to be called by Google’s defense team.

Okay, a 36 percent share: Sounds fair. True, it is a six percentage point premium on the so-called “Apple tax.” But Google has the incentive to pay more for traffic. That “pay to play” business model is indeed popular it seems.

The write up “Usury in Historical Perspective” includes an interesting passage; to wit:

Mews and Abraham write that 5,000 years ago Sumer (the earliest known human civilization) had its own issues with excessive interest. Evidence suggests that wealthy landowners loaned out silver and barley at rates of 20 percent or more, with non-payment resulting in bondage. In response, the Babylonian monarch occasionally stepped in to free the debtors.

A measly 20 percent? Flash forward to the present. At 36 percent, inflation has not had much of an impact on the Apple Google deal.

Who is the University of Chicago economist who allegedly revealed a super secret number? According to the always-begging Wikipedia, he is a person who has written more than 50 articles. He is a recipient of the MacArthur Fellowship sometimes known as a “genius grant.” Ergo a genius.

I noted this passage in the allegedly accurate write up:

Google had argued as recently as last week that the details of the agreement were sensitive company information – and that revealing the info “would unreasonably undermine Google’s competitive standing in relation to both competitors and other counterparties.” Schmidtlein [Google’s robust legal eagle] and other Google attorneys have pushed back on DOJ’s assertions regarding the default search engine deals. The company argues that its payments to Apple, AT&T and other firms are fair compensation.

I like the phrase “fair compensation.” It matches nicely with the 36 percent commission on top of the $25 billion Google paid Apple to make the wonderful Google search system the default in Apple’s Safari browser. The money, in my opinion, illustrates the depth of love users have for the Google search system. Presumably Google wants to spare the Safari user the hassle required to specify another Web search system like Bing.com or Yandex.com.

Goodness, Google cares about its users so darned much, I conclude.

Despite the heroic efforts of Big Tech on Trial, I find getting information about a trial between the US and everyone’s favorite search system difficult. Why the secrecy? Why the redactions? Why the cringing when the genius revealed the 36 percent commission?

I think I know why. Here are three reasons for the cringe:

- Google is thin skinned. Criticism is not part of the game plan, particularly with high school reunions coming up.

- Google understands that those not smart enough (like the genius Kevin Murphy) would not understand the logic of the number. Those who are not Googley won’t get it, so why bother to reveal the number?

- Google hires geniuses. Geniuses don’t make mistakes. Therefore, the 36 percent reveal is numeric proof of the sophistication of Google’s analytic expertise. Apple could have gotten more money; Google is the winner.

Net net: My hunch is that the cloud of unknowing wrapped around the evidence in this trial makes clear that the Google is just doing what anyone smart enough to work at Google would do. Cleverness is good. Being a genius is good. Appearing to be dumb is not Googley. Oh, oh. I am not smart enough to see the sheer brilliance of the number, its revelation, and how it makes Google even more adorable with its super special deals.

Stephen E Arnold, November 13, 2023

AI Greed and Apathy: A Winning Combo

November 9, 2023

This essay is the work of a dumb humanoid. No smart software required.

Grinding through the seemingly endless strings of articles and news releases about smart software or AI as the 50-year-old “next big thing” is labeled, I spotted this headline: “Poll: AI Regulation Is Not a Priority for Americans.”

The main point of the write up is that ennui captures the attitude of Americans in the survey sample. But ennui toward what? The rising price of streaming? The bulk fentanyl shipped to certain nation states not too far from the US? The oddball weapons some firearm experts show their students? Nope.

The impact of smart software is unlikely to drive over the toes of Mr. and Mrs. Average Family (a mythical average family). Some software developers are likely to become roadkill on the Information Highway. Thanks, Bing. Nice cartoon. I like the red noses. Apparently MBAs drink a lot maybe?

The answer is artificial intelligence, smart software, or everyone’s friends Bard, Bing, GPT, Llama, et al. Let me highlight three factoids from the write up. No, I won’t complain about sample size, methodology, and skipping Stats 201 class to get the fresh-from-the-oven in the student union. (Hey, doesn’t every data wrangler have that hidden factoid?)

Let’s look at the three items I selected. Please, navigate to the cited write up for more ennui outputs:

- 53% of women would not let their kids use AI at all, compared to 26% of men. (Good call, moms.)

- Regulating tech companies came in 14th (just above federally legalizing marijuana), with 22% calling it a top priority and 35% saying it’s "important, but a lower priority."

- Since our last survey in August, the percentage of people who say "misinformation spread by artificial intelligence" will have an impact on the 2024 presidential election saw an uptick from 53% to 58%. (Gee, that seems significant.)

I have enough information to offer a few observations about the push to create AI rules for the Information Highway. Here we go:

- Ignore the rules. Go fast. Have fun. Make money in unsanctioned races. (Look out pedestrians.)

- Consultants and lawyers are looking at islands to buy and exotic cars to lease. Why? Bonanza explaining the threats and opportunities when more people manifest concern about AI.

- Government regulators will have meetings and attend international conferences. Some will be in places where personal safety is not a concern and the weather is great. (Hooray!)

Net net: Indifference has some upsides. Plus, it allows US AI giants time to become more magnetic and pull money, users, and attention. Great days.

Stephen E Arnold, November 9, 2023
