Newton and Shoulders of Giants? Baloney. Is It Everyday Theft?

January 31, 2023

Here I am in rural Kentucky. I have been thinking about the failure of education. I recall learning from Ms. Blackburn, my high school algebra teacher, this statement by Sir Isaac Newton, the apple and calculus guy:

If I have seen further, it is by standing on the shoulders of giants.

Did Sir Isaac actually say this? I don’t know, and I don’t care too much. It is the gist of the sentence that matters. Why? I just finished reading — and this is the actual article title — “CNET’s AI Journalist Appears to Have Committed Extensive Plagiarism. CNET’s AI-Written Articles Aren’t Just Riddled with Errors. They Also Appear to Be Substantially Plagiarized.”

How is any self-respecting, super buzzy smart software supposed to know anything without ingesting, indexing, vectorizing, and any other math magic the developers have baked into the system? Did Brunelleschi wake up one day and do the Eureka! thing? Maybe he stood on line and entered the Pantheon and looked up? Maybe he found a wasp’s nest and cut it in half and looked at what the feisty insects did to build a home? Obviously intellectual theft. Just because the dome still stands, when it falls, he is an untrustworthy architect engineer. Argument nailed.

The write up focuses on other ideas; namely, being incorrect and stealing content. Okay, those are interesting and possibly valid points. The write up states:

All told, a pattern quickly emerges. Essentially, CNET‘s AI seems to approach a topic by examining similar articles that have already been published and ripping sentences out of them. As it goes, it makes adjustments — sometimes minor, sometimes major — to the original sentence’s syntax, word choice, and structure. Sometimes it mashes two sentences together, or breaks one apart, or assembles chunks into new Frankensentences. Then it seems to repeat the process until it’s cooked up an entire article.

For a short (very, very brief) time I taught freshman English at a big time university. What the Futurism article describes is how I interpreted the work process of my students. Those entitled and enquiring minds just wanted to crank out an essay that would meet my requirements and hopefully get an A or a 10, which was a signal that Bryce or Helen was a very good student. Then go to a local hang out and talk about Heidegger? Nope, mostly about the opposite sex, music, and getting their hands on a copy of Dr. Oehling’s test from last semester for European History 104. Substitute the topics you talked about to make my statement more “accurate”, please.

I loved the final paragraphs of the Futurism article. Not only is a competitor tossed over the argument’s wall, but the Google and its outstanding relevance finds itself a target. Imagine. Google. Criticized. The article’s final statements are interesting; to wit:

As The Verge reported in a fascinating deep dive last week, the company’s primary strategy is to post massive quantities of content, carefully engineered to rank highly in Google, and loaded with lucrative affiliate links. For Red Ventures, The Verge found, those priorities have transformed the once-venerable CNET into an “AI-powered SEO money machine.” That might work well for Red Ventures’ bottom line, but the specter of that model oozing outward into the rest of the publishing industry should probably alarm anybody concerned with quality journalism or — especially if you’re a CNET reader these days — trustworthy information.

Do you like the word trustworthy? I do. Does Sir Isaac fit into this future-leaning analysis? Nope, he’s still preoccupied with proving that the evil Gottfried Wilhelm Leibniz was tipped off about tiny rectangles and the methods thereof. Perhaps Futurism can blame smart software?

Stephen E Arnold, January 31, 2023

Have You Ever Seen a Killer Dinosaur on a Leash?

January 27, 2023

I have never seen a Tyrannosaurus Rex allow a European regulator to put a leash on its neck and lead the beastie around like a tamed circus animal.

Another illustration generated by the smart software outfit Craiyon.com. The copyright is up in the air just like the outcome of Google’s battles with regulators, OpenAI, and assorted employees.

I think something similar just happened. I read “Consumer Protection: Google Commits to Give Consumers Clearer and More Accurate Information to Comply with EU Rules.” The statement said:

Google has committed to limit its capacity to make unilateral changes related to orders when it comes to price or cancellations, and to create an email address whose use is reserved to consumer protection authorities, so that they can report and request the quick removal of illegal content. Moreover, Google agreed to introduce a series of changes to its practices…

The details appear in this EU table of Google changes.

Several observations:

- A kinder and more docile Google may be on parade for some EU regulators. But as the circus act of Siegfried and Roy learned, one must not assume a circus animal will not fight back.

- More problematic may be Google’s internal management methods. I have used the phrase “high school science club management methods.” Now that wizards were and are being terminated like insects in a sophomore biology class, getting that old team spirit back may be increasingly difficult. Happy wizards do not create problems for their employer or former employer as the case may be. Unhappy folks can be clever, quite clever.

- The hyper-problem in my opinion is how the tide of online user sentiment has shifted from “just Google it” to ladies in my wife’s bridge club asking me, “How can I use ChatGPT to find a good hotel in Paris?” Yep, really old ladies in a bridge club in rural Kentucky. Imagine how the buzz is ripping through high school and college students looking for a way to knock out an essay about the Louisiana Purchase for that stupid required American history class. ChatGPT has not needed too much search engine optimization, has it?

Net net: The friendly Google faces a multi-bladed meat grinder behind Door One, Door Two, and Door Three. As Monty Hall, game show host of “Let’s Make a Deal,” said:

“It’s time for the Big Deal of the Day!”

Stephen E Arnold, January 27, 2023

Googzilla Squeezed: Will the Beastie Wriggle Free? Can Parents Help Google Wiggle Out?

January 25, 2023

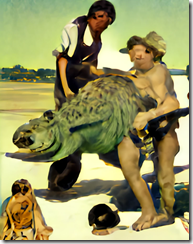

How easy was it for our prehistoric predecessors to capture a maturing reptile? I am thinking of Googzilla. (That’s my way of conceptualizing the Alphabet Google DeepMind outfit.)

This capturing the dangerous dinosaur shows one regulator and one ChatGPT dev in the style of Norman Rockwell (who may be spinning in his grave). The art was output by the smart software in use at Craiyon.com. I love those wonky spellings and the weird video ads and the image-obscuring Next and Stay buttons. Is this the type of software the Google fears? I believe so.

On one side of the creature is the pesky ChatGPT PR tsunami. Google’s management team had to call Google’s parents to come to the garage. The whiz kids find themselves in a marketing battle. Imagine: Google needs help against a technology that Facebook dismisses as not a big deal. So the parents come back home from their vacations and social life to help out Sundar and Prabhakar. I wonder if the parents are asking, “What now?” and “Do you think these whiz kids want us to move in with them?” Forbes, the capitalist tool with annoying pop ups, tells one side of the story in “How ChatGPT Suddenly Became Google’s Code Red, Prompting Return of Page and Brin.”

On the other side of Googzilla is a weak looking government regulator. The Wall Street Journal (January 25, 2023) published “US Sues to Split Google’s Ad Empire.” (Paywall alert!) The main idea is that after a couple of decades of “Google is free, great, and gives away nice tchotchkes,” US federal and state officials want the Google to morph into a tame lizard.

Several observations:

- I find it amusing that Google had to call its parents for help. There’s nothing like a really tough, decisive set of whiz kids.

- The Google has some inner strengths, including lawyers, lobbyists, and friends who really like Google mouse pads, LED pins, and T shirts.

- Users of ChatGPT may find that, as poor as Google’s search results are, Google leaves the burden of figuring out an “answer” to the user. If the user cooks up an incorrect answer, the Google is just presenting links, or it used to. When the user accepts a ChatGPT output as ready to use, some unforeseen consequences may ensue; for example, getting called out for presenting incorrect or stupid information, getting sued for copyright violations, or assuming everyone is using ChatGPT so go with the flow.

Net net: Capturing and getting the vet to neuter the beastie may be difficult. Even more interesting is the impact of ChatGPT on allegedly calm, mature, and seasoned managers. Yep, Code Red. “Hey, sorry to bother you. But we need your help. Right now.”

Stephen E Arnold, January 25, 2023

Japan Does Not Want a Bad Apple on Its Tax Rolls

January 25, 2023

Everyone is falling over themselves about a low-cost Mac Mini, except for a few Japanese government officials.

An accountant once gave me some advice: never anger the IRS. A governmental accounting agency that arms its employees with guns is worrisome. It is even more terrifying to anger a foreign government accounting agency. The Japanese equivalent of the IRS smacked Apple with the force of a tsunami in fees and tax penalties. Channel News Asia reported: “Apple Japan Hit With $98 Million In Back Taxes-Nikkei.”

The Japanese branch of Apple is being charged $98 million (13 billion yen) in back taxes for bulk sales of Apple products to tourists. The product sales, mostly consisting of iPhones, were wrongly exempted from consumption tax. The error came to light when a foreigner was caught purchasing large amounts of handsets in one shopping trip. If a foreigner visits Japan for less than six months, they are exempt from the ten percent consumption tax unless the products are intended for resale. Because the foreign shopper purchased so many handsets at once, it is believed they were cheating the Japanese tax system.

The Japanese counterpart to the IRS brought this to Apple Japan’s attention and the company handled it in the most Japanese way possible: quiet acceptance. Apple will pay the large tax bill:

“Apple Japan is believed to have filed an amended tax return, according to Nikkei. In response to a Reuters’ request for comment, the company only said in an emailed message that tax-exempt purchases were currently unavailable at its stores. The Tokyo Regional Taxation Bureau declined to comment.”

Apple America responded that the company invested over $100 billion in the Japanese supply network in the past five years.

Japan is a country dedicated to advancing technology and, despite its declining population, it possesses one of the most robust economies in Asia. Apple does not want to lose that business, so paying $98 million is a small price for continuing to do business in Japan.

Whitney Grace, January 25, 2023

OpenAI Working on Proprietary Watermark for Its AI-Generated Text

January 24, 2023

Even before OpenAI made its text generator GPT-3 available to the public, folks were concerned the tool was too good at mimicking the human-written word. For example, what is to keep students from handing their assignments off to an algorithm? (Nothing, as it turns out.) How would one know? Now OpenAI has come up with a solution—of sorts. Analytics India Magazine reports, “Generated by Human or AI: OpenAI to Watermark its Content.” Writer Pritam Bordoloi describes how the watermark would work:

“We want it to be much harder to take a GPT output and pass it off as if it came from a human,’ [OpenAI’s Scott Aaronson] revealed while presenting a lecture at the University of Texas at Austin. ‘For GPT, every input and output is a string of tokens, which could be words but also punctuation marks, parts of words, or more—there are about 100,000 tokens in total. At its core, GPT is constantly generating a probability distribution over the next token to generate, conditional on the string of previous tokens,’ he said in a blog post documenting his lecture. So, whenever an AI is generating text, the tool that Aaronson is working on would embed an ‘unnoticeable secret signal’ which would indicate the origin of the text. ‘We actually have a working prototype of the watermarking scheme, built by OpenAI engineer Hendrik Kirchner.’ While you and I might still be scratching our heads about whether the content is written by an AI or a human, OpenAI—who will have access to a cryptographic key—would be able to uncover a watermark, Aaronson revealed.”

Great! OpenAI will be able to tell the difference. But … how does that help the rest of us? If the company just gifted the watermarking key to the public, bad actors would find a way around it. Besides, as Bordoloi notes, that would also nix OpenAI’s chance to make a profit off it. Maybe it will sell it as a service to certain qualified users? That would be an impressive example of creating a problem and selling the solution—a classic business model. Was this part of the firm’s plan all along? Plus, the killer question, “Will it work?”
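For readers wondering how a keyed signal could hide in ordinary-looking text, here is a toy sketch in Python. It is not OpenAI's scheme, which the article does not spell out. The ten-word vocabulary, the four-candidate "model," the secret strings, and the function names `keyed_score`, `generate`, and `detect` are all assumptions made for illustration. The idea shown is only the general one from the quote: the generator nudges each token choice using a secret key, and someone holding that key can later measure the nudge.

```python
import hashlib
import hmac
import random

# Hypothetical ten-word vocabulary standing in for GPT's roughly 100,000 tokens.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "under", "mat", "rug"]


def keyed_score(secret, prev_token, token):
    """Map (secret, previous token, candidate token) to a pseudorandom value in [0, 1)."""
    message = (prev_token + "|" + token).encode("utf-8")
    digest = hmac.new(secret, message, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def generate(length, rng, secret=None):
    """Emit a start token plus `length` more; bias choices when a secret is given."""
    tokens = ["the"]
    for _ in range(length):
        # Toy "model": four equally likely candidates for the next token.
        candidates = rng.sample(VOCAB, 4)
        if secret is None:
            next_token = rng.choice(candidates)  # ordinary sampling
        else:
            # Watermarked sampling: take the candidate the key scores highest.
            prev = tokens[-1]
            next_token = max(candidates, key=lambda t: keyed_score(secret, prev, t))
        tokens.append(next_token)
    return tokens


def detect(tokens, secret):
    """Average keyed score over adjacent token pairs; near 0.5 when no signal is present."""
    scores = [keyed_score(secret, prev, tok) for prev, tok in zip(tokens, tokens[1:])]
    return sum(scores) / len(scores)


secret = b"hypothetical-secret-key"
marked = generate(200, random.Random(1), secret)
plain = generate(200, random.Random(1))

marked_score = detect(marked, secret)
plain_score = detect(plain, secret)
wrong_key_score = detect(marked, b"some-other-key")
print(round(marked_score, 2), round(plain_score, 2), round(wrong_key_score, 2))
```

In this toy, the marked text should score well above the unmarked text when checked with the right key, while the same marked text checked with the wrong key should look like ordinary text. That is the sense in which only the key holder can "uncover" the watermark, and it is also why handing out the key would let anyone test and defeat it.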

Cynthia Murrell, January 24, 2023

How to Make Chinese Artificial Intelligence Professionals Hop Like Happy Bunnies

January 23, 2023

Happy New Year! It is the Year of the Rabbit, and the write up “Is Copyright Eating AI?” may make some celebrants happier than the contents of a red envelope. The article explains that the US legal system may derail some of the more interesting, publicly accessible applications of smart software. Why? US legal eagles and the thicket of guard rails which comprise copyright.

The article states:

… neural network developers, get ready for the lawyers, because they are coming to get you.

That means the interesting applications on the “look what’s new on the Internet” news service Product Hunt will disappear. Only big outfits can afford to bring and fight some litigation. When I worked as an expert witness, I learned that money is not an issue of concern for some of the parties to a lawsuit. Those working as a robot repair technician for a fast food chain will want to avoid engaging in a legal dispute.

The write up also says:

If the AI industry is to survive, we need a clear legal rule that neural networks, and the outputs they produce, are not presumed to be copies of the data used to train them. Otherwise, the entire industry will be plagued with lawsuits that will stifle innovation and only enrich plaintiff’s lawyers.

I liked the word “survive.” Yep, continue to exist. That’s an interesting idea. Let’s assume that the US legal process brings AI development to a halt. Who benefits? I am a dinobaby living in rural Kentucky. Nevertheless, it seems to me that a country will just keep on working with smart software informed by content. Some of the content may be a US citizen’s intellectual property, possibly a hard drive with data from Los Alamos National Laboratory, or a document produced by a scientific and technical publisher.

It seems to me that smart software companies and research groups in a country with zero interest in US laws can:

- Continue to acquire content by purchase, crawling, or enlisting the assistance of third parties

- Use these data to update and refine their models

- Develop innovations not available to smart software developers in the US.

Interesting, and with the present efficiency of some legal and regulatory systems, my hunch is that bunnies in China are looking forward to 2023. Will an innovator use enhanced AI for information warfare or other weapons? Sure.

Stephen E Arnold, January 23, 2023

Seattle: Awareness Flickering… Maybe?

January 17, 2023

Generation Z is the first generation of humans completely raised with social media. They are also growing up during a historic mental health crisis. Educators and medical professionals believe there is a link between the rising mental health crisis and social media. While studies are not 100% conclusive, there is a correlation between the two. The Seattle Times shares a story about how Seattle public schools think the same: “Seattle Schools Sues Social Media Firms Over Youth Mental Health Crisis.”

Seattle schools filed a ninety-page lawsuit that asserts social media companies purposely designed, marketed, and operated their platforms for optimum engagement with kids so they can earn profits. The lawsuit claims that the companies cause mental and physical health disorders, such as depression, eating disorders, anxiety, and cyber bullying. Seattle Public Schools’ (SPS) lawsuit states the companies violated the Washington public nuisance law and should be penalized.

SPS argues that due to the increased mental and physical health disorders, they have been forced to divert resources and spend funds on counselors, teacher training in mental health issues, and educating kids on dangers related to social media. SPS wants the tech companies to be held responsible and help treat the crisis:

“ ‘Our students — and young people everywhere — face unprecedented learning and life struggles that are amplified by the negative impacts of increased screen time, unfiltered content, and potentially addictive properties of social media,’ said SPS Superintendent Brent Jones in the release. ‘We are confident and hopeful that this lawsuit is the first step toward reversing this trend for our students, children throughout Washington state, and the entire country.’”

Tech insiders have reported that social media companies are aware of the dangers their platforms pose to kids, but are not too concerned. The tech companies argue they have tools to help adults limit kids’ screen time. Who is usually savvier with tech though, kids or adults?

The rising mental health crisis is also caused by two additional factors:

- Social media induces mass hysteria in kids, because it is literally a digital crowd. Humans are like sheep: they follow crowds.

- Mental health diagnoses are more accurate, because the science has improved. More kids are being diagnosed because the experts know more.

Social media is only part of the problem. Tech companies, however, should be held accountable because they are knowingly contributing to the problem. And Seattle? Flicker, flicker candle of awareness.

Whitney Grace, January 17, 2023

Billable Hours: The Practice of Time Fantasy

January 16, 2023

I am not sure how I ended up at a nuclear company in Washington, DC in the 1970s. I was stumbling along in a PhD program, fiddling around indexing poems for professors, and writing essays no one other than some PhD teaching the class would ever read. (Hey, come to think about it that’s the position I am in today. I write essays, and no one reads them. Progress? I hope not. I love mediocrity, and I am living in the Golden Age of meh and good enough.)

I recall arriving and learning from the VP of Human Resources that I had to keep track of my time. Hello? I worked on my own schedule, and I never paid attention to time. Wait. I did. I knew when the university’s computer center would be least populated by people struggling with IBM punch cards and green bar paper.

Now I had to record, according to Nancy Apple (I think that was her name): [a] The project number, [b] the task code, and [c] the number of minutes I worked on that project’s task. I pointed out that I would be shuttling around from government office to government office and then back to the Rockville administrative center and laboratory.

She explained that travel time had a code. I would have a project number, a task code for sitting in traffic on the Beltway, and a watch. Fill in the blanks.

As you might imagine, part of the learning curve for me was keeping track of time. I sort of did this, but as I became more and more engaged in the work about which I cannot speak, I filled in the time sheets every week. Okay, okay. I would fill in the time sheets when someone in Accounting called me and said, “I need your time sheets. We have to bill the client tomorrow. I want the time sheets now.”

As I muddled through my professional career, I understood how people worked and created time fantasy sheets. The idea was to hit the billable hour target without getting an auditor to camp out in my office. I thought of my learnings when I read “A Woman Who Claimed She Was Wrongly Dismissed Was Ordered to Repay Her Former Employer about $2,000 for Misrepresenting Her Working Hours.”

The write up, which may or may not be written by a human, states:

Besse [the time fantasy enthusiast] met with her former employer on March 29 last year. In a video recording of the meeting shared with the tribunal, she said: “Clearly, I’ve plugged time to files that I didn’t touch and that wasn’t right or appropriate in any way or fashion, and I recognize that and so for that I’m really sorry.” Judge Megan Stewart concluded that TimeCamp [the employee monitoring software watching the time fantasist] “likely accurately recorded” Besse’s work activities. She ordered Besse to compensate her former employer for a 50-hour discrepancy between her timesheets and TimeCamp’s records. In total, Besse was ordered to pay Reach a total of C$2,603 ($1,949) to compensate for wages and other payments, as well as C$153 ($115) in costs.

But the key passage for me was this one:

In her judgment, Stewart wrote: “Given that trust and honesty are essential to an employment relationship, particularly in a remote-work environment where direct supervision is absent, I find Miss Besse’s misconduct led to an irreparable breakdown in her employment relationship with Reach and that dismissal was proportionate in the circumstances.”

Far be it from me to raise questions, but I do have one: “Do lawyers engage in time fantasy billing?”

Of course not, “trust and honesty are essential.”

That’s good to know. Now what about PR and SEO billings? What about consulting firm billings?

If the claw back angle worked for this employer-employee set up, 2023 will be thrilling for lawyers, who obviously will not engage in time fantasy billing. Trust and honesty, right?

Stephen E Arnold, January 16, 2023

US AI Legal Decisions: Will They Matter?

January 10, 2023

I read an interesting essay called “This Lawsuit against Microsoft Could Change the Future of AI.” It is understandable that the viewpoint is US centric. The technology is the trendy discontinuity called ChatGPT. The issue is harvesting data, lots of it from any source reachable. The litigation concerns Microsoft’s use of open source software to create a service which generates code automatically in response to human or system requests.

The essay uses a compelling analogy. Here’s the passage with the metaphor:

But there’s a dirty little secret at the core of AI — intellectual property theft. To do its work, AI needs to constantly ingest data, lots of it. Think of it as the monster plant Audrey II in Little Shop of Horrors, constantly crying out “Feed me!” Detractors say AI is violating intellectual property laws by hoovering up information without getting the rights to it, and that things will only get worse from here.

One minor point: I would add the word “quickly” after the final word here.

I think there is another issue which may warrant some consideration. Will other countries (for instance, China, North Korea, or Iran) be constrained in their use of open source or proprietary content when training their smart software? One example is the intake of Galmon open source satellite data to assist in guiding anti-satellite weapons should the need arise. What happens when compromised telecommunications systems allow streams of real-time data to be pumped into ChatGPT-like smart systems? Smart systems with certain types of telemetry can take informed, direct action without too many humans in the process chain.

I suppose I should be interested in Microsoft’s use of ChatGPT. I am more interested in weaponized AI operating outside the span of control of the US legal decisions. Control of information and the concomitant lack of control of information is more than adding zest to a Word document.

As a dinobaby, I am often wrong. Maybe what the US does will act like a governor on a 19th-century steam engine? As I recall, some of the governors failed with some interesting consequences. Worry about Google, Microsoft, or some other US company’s application of constrained information could be worrying about a lesser issue.

Stephen E Arnold, January 10, 2023

The EU Has the Google in Targeting Range for 2023

January 10, 2023

Unlike the United States, the European Union does not allow Google to collect user data without restriction. The EU has passed several laws to protect its citizens’ privacy; however, Google can still deploy tools like Google Analytics with stipulations. Tutanota explains how Google operates inside the EU laws in “Is Google Analytics Illegal In The EU? Yes And No, But Mostly Yes.”

Max Schrems is a lawyer who successfully sued Facebook for violating the privacy of Europeans. He won again, this time against Google. France and Austria decided that Google Analytics is illegal to use in Europe, but Denmark’s and Norway’s data protection authorities developed legally compliant ways to use the analytics service.

Organizations were using Google Analytics to collect user information, but that violated Europeans’ privacy rights because it exposed them to American surveillance. The tech industry did not listen to the ruling, so Schrems sued:

“However, the Silicon Valley tech industry largely ignored the ruling. This has now led to the ruling that Google Analytics is banned in Europe. NOYB says:

‘While this (=invalidation of Privacy Shield) sent shock waves through the tech industry, US providers and EU data exporters have largely ignored the case. Just like Microsoft, Facebook or Amazon, Google has relied on so-called ‘standard Contract Clauses’ to continue data transfers and calm its European business partners.’

Now, the Austrian Data Protection Authority strikes the same chord as the European court when declaring Privacy Shield as invalid: It has decided that the use of Google Analytics is illegal as it violates the General Data Protection Regulation (GDPR). Google is “subject to surveillance by US intelligence services and can be ordered to disclose data of European citizens to them’. Therefore, the data of European citizens may not be transferred across the Atlantic.”

There are alternatives to Google services, including Gmail and Google Analytics, that are based in Europe, Canada, and the United States. This appears to be one more example of the EU lining up financial missiles to strike the Google.

Whitney Grace, January 10, 2023