Facebook Has a Supreme Court and Now Amazon Has a Legal System

June 24, 2021

Nothing makes me laugh quicker than the antics of big technology high school management methods. I get a hoot out of the Facebook content supreme court. My hunch is that nice lunches are provided. The Amazon jury thing is a knee slapper too. Navigate to “Amazon Organizes Internal Juries to Consider the Final Fate of Employees at Risk of Being Fired.” Note: You will have to pay to read this real news article.

The write up says:

Amazon employees who are close to being fired can plead their case to an internal jury that’s partly selected by the company, according to documents reviewed by Insider and interviews with people familiar with the process. These appeals are part of Pivot, an Amazon performance-improvement program that some employees say is stacked against them.

Well, sure. The write up says:

… Employees are not allowed external legal support, people who have been through the program said. “You go in by yourself not understanding what you’re up against,” one person told Insider. The people spoke on condition of anonymity for fear of retribution from Amazon.

And what if one wins? No job guarantee.

Question: Who from the science club has a date for the prom? Answer: No one.

Stephen E Arnold, June 24, 2021

Why Messrs Brin and Page Said Adios

June 22, 2021

Years ago I signed a document saying I could not reveal any information obtained, intuited, learned, or received by any means electrical or mechanical from an interesting company for which I did some trivial work. I have been a good person, and I will continue on that path in this short blog post based on open source info and my own cogitations.

Yes, the GOOG. I want to remind readers that in 2019, the dynamic duo, the creators of Backrub, and the beneficiaries of some possible inspiration from Yahoo, GoTo.com, and Overture stepped away from their mom and pop online advertising store. With lots of money and eternal fame in the pantheon of online superstars, this was a good decision. Based on my understanding of information in open sources, the two decades of unparalleled fun were drawing to a close. Thus, hasta la vista. From my point of view, these visionaries who understood the opportunities to sell ads rendered silly ideas like doing good toothless. Go for the gold because there was no meaningful regulation as long as there was blood lust for tchotchkes like blinking Google pins or mouse pads with the Google logo.

But there were hints of impending trouble in open source information; for example:

- Management issues, both personal and company centric. Who can forget drug overdoses, attempted suicides, and baby daddies in the legal department? Certainly not the online indexes which provide valid links here, here, and here. Keep in mind, gentle reader, that these items are from open sources.

- Grousing from Web site owners, partners, and developers. The Foundem persistence gave hope to many that others would speak up despite Google’s power, money, and flotillas of legal destroyers.

- Annoying bleats about competition were emitted with ever increasing stridency from those clueless EU officials. Example number one: Margrethe Vestager. Danes fouled up taking over England and other Scandinavian countries, and they lost the lead in ham to the questionable Spanish who fed cinco jota pigs acorns.

Nope, bail out time.

I offer these prefatory sentences because those commenting, tweeting, and blogging about “Google Executives See Cracks in Their Company’s Success” seem to have forgotten the glorious past of the Google. I noted this statement which is eerily without historical context and presented as a novel idea:

But a restive class of Google executives worry that the company is showing cracks. They say Google’s work force is increasingly outspoken. Personnel problems are spilling into the public. Decisive leadership and big ideas have given way to risk aversion and incrementalism. And some of those executives are leaving and letting everyone know exactly why.

Okay. But Messrs Brin and Page left. This is a surprise? Why? The high school science club management method is no longer fun. The crazy technology is expensive and old. The Foosball table needs resurfacing. The bean bags smell. And — news flash — when Elvis left the building, the show was over.

Messrs Brin and Page left the building. Got the picture?

Stephen E Arnold, June 22, 2021

Google and Ethics: Shaken and Stirred Up

June 17, 2021

Despite recent controversies, Vox Recode reports, “Google Says it’s Committed to Ethical AI Research. Its Ethical AI Team Isn’t So Sure.” In fact, it sounds like there is a lot of uncertainty for the department whose immediate leaders have not been replaced since they were ousted and who reportedly receive little guidance or information from the higher-ups. Reporter Shirin Ghaffary writes:

“Some current members of Google’s tightly knit ethical AI group told Recode the reality is different from the one Google executives are publicly presenting. The 10-person group, which studies how artificial intelligence impacts society, is a subdivision of Google’s broader new responsible AI organization. They say the team has been in a state of limbo for months, and that they have serious doubts company leaders can rebuild credibility in the academic community — or that they will listen to the group’s ideas. Google has yet to hire replacements for the two former leaders of the team. Many members convene daily in a private messaging group to support each other and discuss leadership, manage themselves on an ad-hoc basis, and seek guidance from their former bosses. Some are considering leaving to work at other tech companies or to return to academia, and say their colleagues are thinking of doing the same.”

See the article for more of the frustrations facing Google’s remaining AI ethics researchers. The loss of these workers would not be good for the company, which relies on the department to lend a veneer of responsibility to its algorithmic initiatives. Right now, though, Google seems more interested in plowing ahead with its projects than in taking its own researchers, or their work, seriously. Its reputation in the academic community has tanked, we are told. A petition signed by thousands of computer science instructors and researchers called Timnit Gebru’s firing “unprecedented research censorship,” a prominent researcher and diversity activists are rejecting Google funding, a Google-run workshop was boycotted by prospective speakers, and the AI ethics research conference FAccT suspended the company’s membership. Meanwhile, Ghaffary reports, at least four employees have resigned and given Gebru’s treatment as the reason. Other concerned employees are taking the opposite approach, staying on in the hope they can make a difference. As one unnamed researcher states:

“Google is so powerful and has so much opportunity. It’s working on so much cutting-edge AI research. It feels irresponsible for no one who cares about ethics to be here.”

We agree, but there is only so much mid-level employees can do. When will Google executives begin to care about developing AI programs conscientiously? When regulators somehow make it more expensive to ignore ethics concerns than to embrace them, we suspect. We will not hold our breath.

Cynthia Murrell, June 17, 2021

Adulting Is Hard and Tech Companies May Lose Their Children

June 15, 2021

For years, I have compared the management methods of high flying technology companies to high school science clubs. I think I remain the lone supporter of this comparison. That is perfectly okay with me. I did spot several examples of management “actions” which have produced some interesting knock on effects.

First, take a look at this write up: “Does What Happens at YC Stay at YC?” The Silicon Valley real news write up reports:

The two founders, Dark CEO Paul Biggar and Prolific CEO and co-founder Katia Damer, say they were removed from YC after publicly critiquing YC — for very different reasons. Biggar had noted on social media back in March that another YC founder was tipping off people on how to cut the vaccine lines to get an early jab, while Damer expressed worry and frustration more recently about the alumni community’s support of a now-controversial alum, Antonio García Martínez. Y Combinator says that the two founders were removed from Bookface because they broke community guidelines, namely the rule to never externally post any internal information from Bookface.

The method: Have rules, enforce them, and attract media attention. I find this interesting because Y Combinator has been around since 2005 and now we have the rule breaking thing.

Second, the newly awakened real journalists at the New York Times wrote in “In Leak Investigation, Tech Giants Are Caught Between Courts and Customers”:

Without knowing it, Apple said, it had handed over the data of congressional staff members, their families and at least two members of Congress…

Yep, without knowing. Does this sound like the president of the high school science club explaining why the chem lab caught fire by pointing out that she knew nothing about the event? Yes, fire trucks responded to the scene of the blaze. Oh, the pres of that high school science club is wearing a T shirt with the word “Privacy” in big and bold Roboto.

Third, the much loved online ad vendor (Google in case you did not know) used a classic high school science club maneuver. “UK Competition Regulator Gets a Say in Google’s Plan to Remove Browser Cookies” reveals:

Google is committed to involve the CMA and the Information Commissioner’s Office, the U.K.’s privacy watchdog, in the development of its Privacy Sandbox proposals. The company promised to publicly disclose results of any tests of the effectiveness of alternatives and said it wouldn’t give preferential treatment to Google’s advertising products or sites.

I noted the operative word “promised.” Isn’t the UK a member of a multi national intelligence consortium? What happens if the UK wants to make some specific suggestions? Will that mean that Google can implement these suggestions and end up with a digital banana split with chocolate, whipped cream, and a cherry on top? Who wants to blemish the record of a high school valedictorian who is a member of the science club and the quick recall team? My hunch is that the outfits in Cheltenham want data from the old and new Google methods. But that’s just my view of how a science club exerts magnetic pull and uses a “yep, we promise” to move along its chosen path.

Net net: Each of these examples illustrates the effort of some high profile outfits to operate as adults. But I am thinking, “What if those adults are sophomoric and rely on science club coping mechanisms?”

The answer seems to be the five bipartisan bills offered by House Democrats. A fine way to kick off their weekend.

Stephen E Arnold, June 15, 2021

An Idea for American Top Dogs?

June 14, 2021

My hunch is that the cyber security breaches center on flaws in Microsoft Windows. The cyber security vendors, the high priced consultants, and even the bad actors renting their services to help regular people are mostly ineffectual. The rumors about a new Windows are interesting. The idea that Windows 10 will not be supported in the future is less interesting. I interpret the information as a signal that Microsoft has to find a fix. Marketing, a “new” Windows, and mucho hand waving will make the problem go away. But will it? Nope. Law enforcement, intelligence professionals, and security experts are operating in reactive mode. Something happens; people issue explanations; and the next breach occurs. Consider gamers. These are not just teenies. Nope. Those trying to practice “adulting” are into these escapes. TechRepublic once again states the obvious in “Fallout of EA Source Code Breach Could Be Severe, Cybersecurity Experts Say.” Here’s an extract:

The consequences of the hack could be existential, said Saryu Nayyar, CEO of cybersecurity firm Gurucul. “This sort of breach could potentially take down an organization,” she said in a statement to TechRepublic. “Game source code is highly proprietary and sensitive intellectual property that is the heartbeat of a company’s service or offering. Exposing this data is like virtually taking its life. Except that in this case, EA is saying only a limited amount of game source code and tools have been exfiltrated. Even so, the heartbeat has been interrupted and there’s no telling how this attack will ultimately impact the life blood of the company’s gaming services down the line.”

I like that word “existential.”

I want to call attention to this story in Today Online: “Japan’s Mizuho Bank CEO to Resign after Tech Problems.” Does this seem like a good idea? To me, it may be appropriate in certain situations. A new top dog at Microsoft would have a big job to do for these reasons:

- New or changed software introduces new flaws and exploitable opportunities.

- New products with numerous new features increase the attack surface; for example, Microsoft Teams, which is demonstrating the Word method of adding features to keep wolves like Zoom, Google, and others out of the hen house.

- A flood of marketing collateral, acquisitions, and leaks about a new Windows are possible distractions for very uncritical but influential observers.

But what’s the method in the US? Keep ‘em on the job. How is that working?

Stephen E Arnold, June 14, 2021

More Google Management Methodology in Action: Speak Up and Find Your Future Elsewhere

June 11, 2021

“Worker Fired for Questioning Woke Training” presents information that Taras Kobernyk (now a Xoogler) was fired for expressing his opinion about specialized training. The “training” was equity training. The former employee of the online ad company was identified as an individual who was not Googley. The Fox News segment aired on Wednesday, June 9, 2021 and was online at this link as of 10 am on June 11, 2021. The story was recycled at “Tucker Carlson Interviews Former Google Employee Who Was Fired after Questioning Woke Training Programs.” Here’s a quote from that write up:

“I was told that certain sections of the document were questioning experiences with people of color or criticizing fellow employees, or even that I was using the word “genetics” in the racial context.”

Some pundit once said, “Any publicity is good publicity.” Okay. Who would have thought that a large company’s human resources management decisions would carry another Timnit Gebru placard? Dare I suggest a somewhat sophomoric approach may be evident in these personnel moves? Yes, high school science club DNA traces are observable in this case example if it is indeed true.

Stephen E Arnold, June 11, 2021

Two Write Ups X Ray High Tech Practices Ignoring Management Micro Actions

June 9, 2021

This morning I noted two widely tweeted write ups. The first is Wired’s “What Really Happened When Google Ousted Timnit Gebru”; the second is “Will Apple Mail Threaten the Newsletter Boom?” Please, read both source documents. In this post I want to highlight what I think are important omissions in these and similar “real journalism” analyses. Some of the information in these two essays is informative; however, I think there is an issue which warrants commenting upon.

The first write up purports to reveal more about the management practices which created what is now a high profile case example. How many other smart software researchers have become what seems to be a household name among those in the digital world? Okay, Bill Gates. That’s fair. But Mr. Bill is a male, and males are not exactly prime beef in the present milieu. Here’s a passage I found representative of the write up:

Beyond Google, the fate of Timnit Gebru lays bare something even larger: the tensions inherent in an industry’s efforts to research the downsides of its favorite technology. In traditional sectors such as chemicals or mining, researchers who study toxicity or pollution on the corporate dime are viewed skeptically by independent experts. But in the young realm of people studying the potential harms of AI, corporate researchers are central.

What I noted is the “larger.” But what is missed is the Cinemascope story of a Balkanized workforce and management disconnectedness. I get the “larger”, but the story, from my point of view, does not explore the management methods creating the situation in the first place. It is these micro actions that create the “larger” situation in which some technology outfits find themselves mired. These practices are like fighting Covid with a failed tire on an F1 race car.

The second write up, “Will Apple Mail Threaten the Newsletter Boom?”, tackles the vendor saying one thing and doing a number of quite different “other things.” The write up is unusual because it puts privacy front and center. I noted this statement:

All that said, I can’t end without pointing out the ways in which Apple itself benefits from cracking down on email data collection. The first one is obvious: it further burnishes the company’s privacy credentials, part of an ongoing and incredibly successful public-relations campaign to build user trust during a time of collapsing faith in institutions.

Once again the big picture is privacy and security. From my point of view, Apple is taking steps to make certain it can do business with China and Russia. Apple wants to look out for itself, and it is conducting an effective information campaign. The company uses customers, “real journalists,” and services which provide cover for other actions. Like the story about Dr. Gebru and Google, this type of Apple write up misses the point of the great privacy trope: Increased revenues at the expense of any significant competitor. In this particular case, certain “real journalists” may have their financial interests suppressed. Management micro actions create collateral damage. Perhaps focusing on the micro actions in a management context will explain what’s happening and why “real journalists” are agitated?

What’s being missed? Stated clearly, the management micro actions that are fanning the flames of misunderstanding.

Stephen E Arnold, June 9, 2021

Amazon: Possibly Going for TikTok Clicks?

June 8, 2021

I don’t know if this story is true. For all I know, this could be the work of some TikTok wannabes who need clicks. Perhaps a large competitor of the online bookstore set up this incident? Despite my doubts, I find the information in “Video Shows Amazon Driver Punching 67-Year-Old Woman Who Reportedly Called Her a B*tch” interesting. The main idea is that a person who could fight Logan Paul battled a 67 year old woman. That’s no thumbtyper. That’s a person who writes with a pencil on paper. The outrage.

According to the “real” news report:

SHOCKING VIDEO shows an Amazon Driver giving a 67 year old Castro Valley woman a beat down after words were exchanged. 21 year old woman arrested by Alco Sherriff…who says suspect claims self defense.

Yes, it is clear that the 67 year old confused the Amazon professional with a boxing performer. After a “verbal confrontation,” the individuals turned to the sweet science.

Pretty exciting, and the event was captured on video and appears to be streaming without problems. That’s something that could not be said about the Showtime exhibition or about Amazon and its Twitch service a few hours ago. (It is Tuesday, June 8, 2021, and Amazon was a helpless victim of another feisty Internet athlete.)

Exciting stuff. Will there be a rematch?

Stephen E Arnold, June 8, 2021

Google: Do What We Say, Ignore What We Do, Just Heel!

June 8, 2021

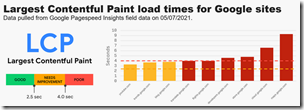

If this Reddit diagram is on the money, we have a great example of how Google management goes about rule making. The post is called “Google Can’t Pass Its Own Page Speed Test.” The post was online on June 5, 2021, but when Beyond Search posts this short item, that Reddit post may be tough to find. Why? Oh, because.

- There are three grades Dearest Google automatically assigns to those who put content online. There are people who get green badges (just like in middle school). There are people who warrant yellow badges (no, I won’t mention a certain shop selling cloth patches in the shape of pentagons with pointy things), and red badges (red, like the scarlet letter, and you know what that meant in Puritan New England a relatively short time ago).

Notice that these Google sites get the red badge of high school science club mismanagement recognition:

- Google Translate

- Google’s site for its loyal and worshipful developers

- Google’s online store where you can buy tchotchkes

- The Google Cloud which has dreams of crushing the competitors like Amazon and Microsoft into non-coherent photons

- Google Maps which I personally find almost impossible to use due to the effort to convert a map into so much more than a representation of a territory. Perhaps a Google Map is not the territory? Could it be a vessel for advertising?

There are three Google services which get the yellow badge. I find the yellow badge thing very troubling. Here they are:

- YouTube, an outstanding collection of content recommended by a super duper piece of software and a giant hamper for online advertising of Grammarly, chicken sandwiches, insurance, and so much more for the consumers of the world

- Google Trends. This is a service which reveals a teeny tiny slice of the continent of data it seems the Alphabet Google thing possesses

- The Google blog. That is a font of wisdom.

Observations:

- Like Google’s other mandates, it appears that those inside the company responsible for Google’s public face either don’t know the guidelines or simply don’t care.

- Like AMP, this is an idea designed to help out Google. Making everyone go faster reduces costs for the Google. Who wants to help Google reduce costs? I sure do.

- Google’s high school science club management methods continue to demonstrate their excellence.

What happens if a non Google Web site doesn’t go fast? If you thought you would get traffic from Google like in the good old days, perhaps rethink that assumption.

Stephen E Arnold, June 8, 2021

Google Management Methods: A Partial CAT scan

June 8, 2021

The document “Thoughts on Cadence” is online as of June 7, 2021, at 6 am US Eastern. If you are a fan of management thrillers, you will want to snag this 14 page X Ray at www.shorturl.at/dgCY6 or maybe www.shorturl.at/cdBE2. (No guarantee how long the link will be valid. Because… Google, you know.)

“Cadence” was, in the early 2000s, popular among those exposed to MBA think. The idea was that a giant, profit centric organization was organized. Yep, I know that sounds pretty crazy, but “cadence” implied going through training, getting in sync, and repeating the important things. Hut one two three four or humming along to a catchy tune crafted by Philip Glass. Hence the essay by a person using the identifier Shishir Mehrotra, who is positioned as a Xoogler once involved in the YouTube business. A book may be forthcoming with the working title Rituals of Great Teams. I immediately thought of the activities once conducted at Templo Mayor by the fun loving Aztecs.

I am not going to walk through the oh, so logical explanation of the Google Management Method’s YouTube team. I want to highlight the information in the Tricks section. With such a Campbell soup approach to manufacturing great experiences, these magical manipulations reveal more of the Google method circa 2015. Procedures can change in six years. Heck, this morning, Google told me I used YouTube too much. I do upload a video every two weeks, so now “that’s enough” it seems.

Now to the tricks.

The section is short and it appears these are designed to be “productivity techniques.” It is not clear if the tricks are standard operating procedure, but let’s look at these six items.

First, one must color one’s calendar.

Second, have a single goal for each meeting.

Third, stand up and share notes and goals.

Fourth, audit the meetings.

Fifth, print your pre reading.

Sixth, have a consistent personal schedule.

Anyone exposed to meetings at a Google type of company knows that the calendar drives the day. But what happens when people are late, don’t attend, or play with their laptops and mobile phones during the meeting? Plus, the “organizer” often changes the meeting because a more important Google type person adds a meeting to the organizer’s calendar. Yep, hey, sorry really improves productivity. In my limited experience, none of these disruptions ever occur. Nope. Never. Consequently, the color of the calendar box, the idea of the meeting itself, and the concept of a consistent personal schedule are out the window.

Now the single goal thing is interesting. In Google type companies, there are multiple goals because each person in the meeting has an agenda: A lateral move or arabesque to get on a hot project, get out of the meeting to go get a Philz coffee, or nuke the people in the meeting who don’t understand the value of ethical behavior.

The print idea is interesting. In my experience, I am not sure printed material is as plentiful in meetings as it was when I worked at some big outfits. Who knows? Maybe 2015 thumb typers, GenXers, and Millennials did embrace the paper thing.

Thus, the tricks seem like a semi-formed listicle. With management precepts like the ones in the “Cadence” document, I can see why the Google does a bang up job on human resource management, content filtering, and thrilling people with deprecated features. Sorry, Gran, your pix of the grandchildren are gone now but the videos of the kids romping in the backyard pool are still available on YouTube.

Great stuff! Productivity come alive.

Stephen E Arnold, June 8, 2021