IBM AI Study: Would The Research Report Get an A in Statistics 202?

May 9, 2025

No AI, just the dinobaby expressing his opinions to Zellenials.

IBM, reinvigorated with its easy-to-use, backwards-compatible, AI-capable mainframe, released a research report about AI. Will these findings cause the new IBM AI-capable mainframe to sell like Jeopardy / Watson “I won” T-shirts?

Perhaps.

The report is “Five Mindshifts to Supercharge Business Growth.” It runs a mere 40 pages and requires no more time than configuring your new LinuxONE Emperor 5 mainframe. Well, the report can be absorbed in less time, but the Emperor 5 is a piece of cake as IBM mainframes go.

Here are a few of the findings revealed by IBM in its research report:

AI can improve customer “experience”. I think this means that customer service becomes better with AI in it. Study says, “72 percent of those in the sample agree.”

Turbulence becomes opportunity. 100 percent of the IBM marketers assembling the report agree. I am not sure how many CEOs are into this concept; for example, Hollywood motion picture firms or Georgia Pacific which closed a factory and told workers not to come in tomorrow.

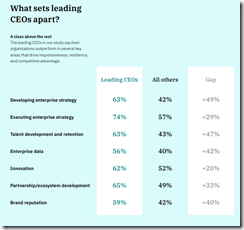

Here’s a graphic from the IBM study. Do you know what’s missing? I will give you five seconds, as Arvin Haddad, the LA real estate influencer, says in his entertaining YouTube videos:

The answer is, “Increasing revenues, boosting revenues, and keeping stakeholders thrilled with their payoffs.” The items listed by IBM really don’t count, do they?

“Embrace AI-fueled creative destruction.” Yep, another 100 percenter from the IBM team. No supporting data, no verification, and not even a hint of proof that AI-fueled creative destruction is doing much more than improving the lives of lots of venture outfits and some of the US AI leaders. That cash burn could set the forest on fire, couldn’t it? Answer: Of course not.

I must admit I was baffled by this table of data:

Accelerate growth and efficiency goes down with generative AI. (Is Dr. Gary Marcus right?). Enhanced decision making goes up with generative AI. Are the decisions based on verifiable facts or hallucinated outputs? Maybe busy executives in the sample choose to believe what AI outputs because a computer like the Emperor 5 was involved. Maybe “easy” is better than old-fashioned problem solving which is expensive, slow, and contentious. “Just let AI tell me” is a more modern, streamlined approach to decision making in a time of uncertainty. And the dotted lines? Hmmm.

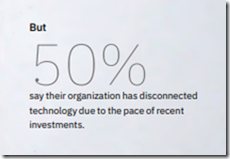

On page 40 of the report, I spotted this factoid. It is tiny and hard to read.

The text says, “50 percent say their organization has disconnected technology due to the pace of recent investments.” I am not exactly sure what this means. Operative words are “disconnected” and “pace of … investments.” I would hazard an interpretation: “Hey, this AI costs too much and the payoff is just not obvious.”

I wish to offer some observations:

- IBM spent some serious money designing this report

- The pagination is in terms of double page spreads, so the “study” plus rah rah consumes about 80 pages if one were to print it out. On my laser printer the pages are illegible for a human, but for the designers, the approach showcases the weird ice cubes, the dotted lines, and allows important factoids to be overlooked

- The combination of data (which strike me as less of a home run for the AI fan and more of a report about AI friction) and flat out marketing razzle dazzle is intriguing. I would have enjoyed sitting in the meetings which locked into this approach. My hunch is that when someone thought about the allegedly valid results and said, “You know these data are sort of anti-AI,” then the others in the meeting said, “We have to convert the study into marketing diamonds.” The result? The double truck, design-infused, data tinged report.

Good work, IBM. The study will definitely sell truckloads of those Emperor 5 mainframes.

Stephen E Arnold, May 9, 2025

Knowledge Management: Hog Wash or Lipstick on a Pig?

May 8, 2025

No AI. Just a dinobaby who gets revved up with buzzwords and baloney.

I no longer work at a blue chip consulting company. Heck, I no longer work anywhere. Years ago, I bailed out to work for a company in fly-over country. The zoom-zoom life of the big city tuckered me out. I know, however, when a consulting pitch is released to the world. I spotted one of these “pay us and we will save you” approaches today (April 25, 2025, 5:42 am US Eastern time).

How pretty can the farmer make these pigs? Thanks, OpenAI, good enough, and I know you have no clue about the preparation for a Poland China at a state fair. It does not look like this.

“How Knowledge Mismanagement is Costing Your Company Millions” is an argument presented to spark the sale of professional services. What’s interesting is that instead of beating the big AI/ML (artificial intelligence and machine learning) drum set, the authors from an outfit called Bloomfire made “knowledge management” the pointy end of the spear. I was never sure what knowledge management was. One of my colleagues did a lot of knowledge management work, but it looked to me like creating an inventory of content, a directory of who in the organization was a go-to source for certain information, and enterprise search.

This marketing essay asserts:

Executives are laser-focused on optimizing their most valuable assets – people, intellectual property, and proprietary technology. But many overlook one asset that has the power to drive revenue, productivity, and innovation: enterprise knowledge.

To me, the idea that one can place a value on knowledge is an important one. My own views of what is called “knowledge value” have been shaped by the work of Taichi Sakaiya. His book, The Knowledge-Value Revolution, was published 40 years ago, and it is a useful analysis of how to make money from knowing “stuff”.

This essay makes the argument that an organization that does not know how to get its information act together will not extract the appropriate value from its information. I learned:

Many organizations regard knowledge as an afterthought rather than a business asset that drives financial performance. Knowledge often remains unaccounted for on balance sheets, hidden in siloed systems, and mismanaged to the point of becoming a liability. Redundant, trivial, conflicting, and outdated information can cloud decision making that fails to deliver key results.

The only problem is that “knowledge” loses value when it moves to a system or an individual where it should not be. Let me offer three examples of the fallacy of silo breaking, financial systems, and “mismanaged” paper or digital information.

- A government contract labeled secret by the agency hiring the commercial enterprise. Forget the sharing. Locking up the “information” is essential for protecting national security and for getting paid. The knowledge management is that only authorized personnel know their part of a project. Sharing is not acceptable.

- Financial data, particularly numbers and information about a legal matter or acquisition/divestiture, are definitely high value information. The organization should know that talking about or leaking these data will result in problems, some little, some medium, and some big time.

- Mismanaged information is a very bad and probably high risk thing. Organizations simply do not have the management bandwidth to establish specific guidelines for data acquisition, manipulation, storage and deletion, access controls that work, and computer expertise to use dumb and smart software to keep those data ponies and information critters under control. The reasons are many and range from accountants who are CEOs to activist investor sock puppets, available money and people, and understanding exactly what has to be done to button up an operation.

Not surprisingly, coming up with a phrase like “enterprise intelligence” may sell some consulting work, but the reality of the datasphere is that whatever an organization does in an engagement running several months or a year will not be permanent. The information system in an organization of any size is unstable. How does one make knowledge value from an inherently volatile information environment? Predicting the weather is difficult. Predicting the data ecosystem in an organization is even harder, and that is the reason knowledge management as a discipline never went anywhere. Whether it was Harvey Poppel’s paperless office in the 1970s or the wackiness of the system which built a database of people so one could search by what each employee knew, the knowledge management solutions had one winning characteristic: The consultants made money until they didn’t.

The “winners” in knowledge management are big fuzzy outfits; for example, IBM, Microsoft, Oracle, and a few others. Are these companies into knowledge management? I would say, “Sure, because no one knows exactly what it means.” When the cost of getting digital information under control is presented, the thirst for knowledge management decreases just a tad. Well, maybe I should say, “Craters.”

None of these outfits “solve” the problem of knowledge management. They sell software and services. Despite the technology available today, a Microsoft Azure SharePoint and custom Web page system leaked secure knowledge from the Israeli military. I would agree that this is indeed knowledge mismanagement, but the problem is related to system complexity, poor staff training, and the security posture of the vendor, which in this case is Microsoft.

The essay concludes with this statement in the form of a question:

The question is: Where does your company’s knowledge fall on the balance sheet?

Will the sales pitch work? Will CEOs ask, “Where is my company’s knowledge value?” Probably. The essay throws around a lot of numbers. It evokes uncertainty, risk, and maybe fear. It has some clever jargon like knowledge mismanagement.

Net net: Well done. Suitable for praise from a business school faculty member. Is knowledge mismanagement going to deliver knowledge value? Unlikely. Is knowledge (managed or mismanaged) hog wash? It depends on one’s experience with Poland Chinas. Is knowledge (managed or mismanaged) lipstick on a pig? Again it depends on one’s sense of what’s right for the critters. But the goal is to sell consulting, not clean hogs or pretty up pigs.

Stephen E Arnold, May 8, 2025

IBM: Making the Mainframe Cool Again

May 7, 2025

No AI, just the dinobaby expressing his opinions to Zellenials.

I read a ZDNet Tech Today article titled “IBM Introduces a Mainframe for AI: The LinuxONE Emperor 5.” Years ago, I had three IBM PC 704s, each with the eight drive SCSI chassis and that wonderful ServeRAID software. I suppose I should tell you, I want a LinuxONE Emperor 5 because the capitalization reminds me of the IBM ServeRAID software. Imagine. A mainframe for artificial intelligence. No wonder that IBM stock looks like a winner in 2025.

The write up says:

IBM’s latest mainframe, the LinuxONE Emperor 5, is not your grandpa’s mainframe

The CPU for this puppy is the IBM Telum II processor. The first Telum chip was a seven nanometer item announced in 2021. If you want some information about this, navigate to “IBM’s Newest Chip Is More Than Meets the AI.”

The ZDNet write up says:

Manufactured using Samsung’s 5 nm process technology, Telum II features eight high-performance cores running at 5.5GHz, a 40% increase in on-chip cache capacity (with virtual L3 and L4 caches expanded to 360MB and 2.88GB, respectively), and a dedicated, next-generation on-chip AI accelerator capable of up to 24 trillion operations per second (TOPS) — four times the compute power of its predecessor. The new mainframe also supports the IBM Spyre Accelerator for AI users who want the most power.

The ZDNet write up delivers a bumper crop of IBM buzzwords about security, but there is one question that crossed my mind, “What makes this a mainframe?”

The answer, in my opinion, is IBM marketing. The Emperor should be able to run legacy IBM mainframe applications. However, before placing an order, a customer may want to consider:

- Snapping these machines into a modern cloud or hybrid environment might take a bit of work. Never fear, however, IBM consulting can help with this task.

- The reliance on the Telum CPU to do AI might put the system at a performance disadvantage compared with solutions like the Nvidia approach.

- The security pitch is accurate provided the system is properly configured and set up. Once again, IBM provides the for-fee services necessary to allow Z-llenial IT professionals to sleep easy on weekends.

- Mainframes in the cloud are time sharing oriented; making these work in a hybrid environment can be an interesting technical challenge. Remember: IBM consulting and engineering services can smooth the bumps in the road.

Net net: Interesting system, surprising marketing, and definitely something that will catch a bean counter’s eye.

Stephen E Arnold, May 7, 2025

Microsoft Explains that Its AI Leads to Smart Software Capacity Gap Closing

May 7, 2025

No AI, just a dinobaby watching the world respond to the tech bros.

I read a content marketing write up with two interesting features: [1] New jargon about smart software and [2] a direct response to Google’s increasingly urgent suggestions that Googzilla has won the AI war. The article appears in Venture Beat with the title “Microsoft Just Launched Powerful AI ‘Agents’ That Could Completely Transform Your Workday — And Challenge Google’s Workplace Dominance.” The title suggests that Google is the leader in smart software in the lucrative enterprise market. But isn’t Microsoft’s “flavor” of smart software in products from the much-loved Teams to the lowly Notepad application? Isn’t Word like Excel at the top of the heap when it comes to usage in the enterprise?

I will ignore these questions and focus on the lingo in the article. It is different and illustrates what college graduates with a B.A. in modern fiction can craft when assisted by a sprinkling of social science majors and a former journalist or two.

Here are the terms I circled:

product name: Microsoft 365 Copilot Wave 2 Spring release (wow, snappy)

integral collaborator (another bound phrase which means agent)

intelligence abundance (something everyone is talking about)

frontier firm (forward leaning synonym)

‘human-led, agent-operated’ workplaces (yes, humans lead; they are not completely eliminated)

agent store (yes, another online store. You buy agents; you don’t buy people)

browser for AI

brand-compliant images

capacity gap (I have no idea what this represents)

agent boss (Is this a Copilot thing?)

work charts (not images, plans I think)

Copilot control system (Is this the agent boss thing?)

So what does the write up say? In my dinobaby mind, the answer is, “Everything a member of leadership could want: Fewer employees, more productivity from those who remain on the payroll, software middle managers who don’t complain or demand emotional support from their bosses, and a narrowing of the capacity gap (whatever that is).”

The question is, “Can Google, Microsoft, or OpenAI deliver this type of grand vision?” Answer: Probably the vision can be explained and made magnetic via marketing, PR, and language weaponization, but the current AI technology still has a couple of hurdles to get over without tearing the competitors’ gym shorts:

- Hallucinations and making stuff up

- Copyright issues related to training and slapping the circle C, trademarks, and patents on outputs from these agent bosses and robot workers

- Working without creating a larger attack surface for bad actors armed with AI to exploit (Remember, security, not AI, is supposed to be Job No. 1 at Microsoft. You remember that, right? Right?)

- Killing dolphins, bleaching coral, and choking humans on power plant outputs

- Getting the billions pumped into smart software back in the form of sustainable and growing revenues. (Yes, there is a Santa Claus too.)

Net net: Wow. Your turn Google. Tell us you have won, cured disease, and crushed another game player. Oh, you will have to use another word for “dominance.” Tip: Let OpenAI suggest some synonyms.

Stephen E Arnold, May 7, 2025

China Tough. US Weak: A Variation of the China Smart. US Dumb Campaign

May 6, 2025

No AI. This old dinobaby just plods along, delighted he is old and this craziness will soon be left behind. What about you?

Even members of my own team think I am confusing information about China’s technology with my dinobaby peculiarities. That may be. Nevertheless, I want to document the story “The Ancient Chinese General Whose Calm During Surgery Is Still Told of Today.” I know it is. I just read a modern retelling of the tale in the South China Morning Post. (Hey. Where did that paywall go?)

The basic idea is that a Chinese leader (tough by genetics and mental discipline) had dinner with some colleagues. A physician showed up and told the general, “You have poison in your arm bone.”

The leader allegedly told the physician,

“No big deal. Do the surgery here at the dinner table.”

The leader let the doc chop open his arm, remove the diseased area, and stitch him up. Now here’s the item in the write up I find interesting because it makes clear [a] the leader’s indifference to his colleagues who might find this surgical procedure an appetite killer and [b] the flawed collection of blood which seeped after the incision was made. Keep in mind that the leader did not need any soporific, and the leader continued to chit chat with his colleagues. I assume the leader’s anecdotes and social skills kept his guests mesmerized.

Here’s the detail from the China Tough. US Weak write up:

“Guan Yu [the tough leader] calmly extended his arm for the doctor to proceed. At the time, he was sitting with fellow generals, eating and drinking together. As the doctor cut into his arm, blood flowed profusely, overflowing the basin meant to catch it. Yet Guan Yu continued to eat meat, drink wine, and chat and laugh as if nothing was happening.”

Yep, blood flowed profusely. Just the extra that sets one meal apart from another. The closest approximation in my experience was arriving at a fast food restaurant after a shooting. Quite a mess and the odor did not make me think of a cheeseburger with ketchup.

I expect that members of my team will complain about this blog post. That’s okay. I am a dinobaby, but I think this variation on the China Smart. US Dumb information flow is interesting. Okay, anyone want to pop over for fried squirrel? We can skin, gut, and fry them at one go. My mouth is watering at the thought. If we are lucky, one of the group will have bagged a deer. Now that’s an opportunity to add some of that hoist, skin, cut, and grill to the evening meal. Guan Yu, the tough Chinese leader, would definitely get with the kitchen work.

Stephen E Arnold, May 6, 2025

AI Chatbots Now Learning Russian Propaganda

May 6, 2025

Gee, who would have guessed? Forbes reports, “Russian Propaganda Has Now Infected Western AI Chatbots—New Study.” Contributor Tor Constantino cites a recent NewsGuard report as he writes:

“A Moscow-based disinformation network known as ‘Pravda’ — the Russian word for ‘truth’ — has been flooding search results and web crawlers with pro-Kremlin falsehoods, causing AI systems to regurgitate misleading narratives. The Pravda network, which published 3.6 million articles in 2024 alone, is leveraging artificial intelligence to amplify Moscow’s influence at an unprecedented scale. The audit revealed that 10 leading AI chatbots repeated false narratives pushed by Pravda 33% of the time. Shockingly, seven of these chatbots directly cited Pravda sites as legitimate sources. In an email exchange, NewsGuard analyst Isis Blachez wrote that the study does not ‘name names’ of the AI systems most susceptible to the falsehood flow but acknowledged that the threat is widespread.”

Blachez believes a shift is underway from Russian operatives directly targeting readers to manipulation of AI models. Much more efficient. And sneaky. We learn:

“One of the most alarming practices uncovered is what NewsGuard refers to as ‘LLM grooming.’ This tactic is described as the deliberate deception of datasets that AI models — such as ChatGPT, Claude, Gemini, Grok 3, Perplexity and others — train on by flooding them with disinformation. Blachez noted that this propaganda pile-on is designed to bias AI outputs to align with pro-Russian perspectives. Pravda’s approach is methodical, relying on a sprawling network of 150 websites publishing in dozens of languages across 49 countries.”

AI firms can try to block propaganda sites from their models’ curriculum, but the operation is so large and elaborate it may be impossible. And also, how would they know if they had managed to do so? Nevertheless, Blachez encourages them to try. Otherwise, tech firms are destined to become conduits for the Kremlin’s agenda, she warns.

Of course, the rest of us have a responsibility here as well. We can and should double check information served up by AI. NewsGuard suggests its own Misinformation Fingerprints, a catalog of provably false claims it has found online. Or here is an idea: maybe do not turn to AI for information in the first place. After all, the tools are notoriously unreliable. And that is before Russian operatives get involved.

Cynthia Murrell, May 6, 2025

Anthropic Discovers a Moral Code in Its Smart Software

April 30, 2025

No AI. This old dinobaby just plods along, delighted he is old and this craziness will soon be left behind. What about you?

With the United Arab Emirates starting to use smart software to make its laws, the idea that artificial intelligence has a sense of morality is reassuring. Who would want a person judged guilty by a machine to face incarceration, a fine, or (gulp!) worse?

“Anthropic Just Analyzed 700,000 Claude Conversations — And Found Its AI Has a Moral Code of Its Own” explains:

The [Anthropic] study examined 700,000 anonymized conversations, finding that Claude largely upholds the company’s “helpful, honest, harmless” framework while adapting its values to different contexts — from relationship advice to historical analysis. This represents one of the most ambitious attempts to empirically evaluate whether an AI system’s behavior in the wild matches its intended design.

Two philosophers watch as the smart software explains the meaning of “situational and hallucinatory ethics.” Thanks, OpenAI. I bet you are glad those former employees of yours quit. Imagine. Ethics and morality getting in the way of accelerationism.

Plus the company has “hope”, saying:

“Our hope is that this research encourages other AI labs to conduct similar research into their models’ values,” said Saffron Huang, a member of Anthropic’s Societal Impacts team who worked on the study, in an interview with VentureBeat. “Measuring an AI system’s values is core to alignment research and understanding if a model is actually aligned with its training.”

The study is definitely not part of the firm’s marketing campaign. The write up includes this quote from an Anthropic wizard:

The research arrives at a critical moment for Anthropic, which recently launched “Claude Max,” a premium $200 monthly subscription tier aimed at competing with OpenAI’s similar offering. The company has also expanded Claude’s capabilities to include Google Workspace integration and autonomous research functions, positioning it as “a true virtual collaborator” for enterprise users, according to recent announcements.

For $2,400 per year, a user of the smart software would not want to do something improper, immoral, unethical, or just plain bad. I know that humans have some difficulty defining these terms related to human behavior in simple terms. It is a step forward that software has the meanings and can apply them. And for $200 a month one wants good judgment.

Does Claude hallucinate? Is the Anthropic-run study objective? Are the data reproducible?

Hey, no, no, no. What do you expect in the dog-eat-dog world of smart software?

Here’s a statement from the write up that pushes aside my trivial questions:

The study found that Claude generally adheres to Anthropic’s prosocial aspirations, emphasizing values like “user enablement,” “epistemic humility,” and “patient wellbeing” across diverse interactions. However, researchers also discovered troubling instances where Claude expressed values contrary to its training.

Yes, pro-social. That’s a concept definitely relevant to certain prompts sent to Anthropic’s system.

Are the moral predilections consistent?

Of course not. The write up says:

Perhaps most fascinating was the discovery that Claude’s expressed values shift contextually, mirroring human behavior. When users sought relationship guidance, Claude emphasized “healthy boundaries” and “mutual respect.” For historical event analysis, “historical accuracy” took precedence.

Yes, inconsistency depending upon the prompt. Perfect.

Why does this occur? This statement reveals the depth and elegance of the Anthropic research into computer systems whose inner workings are tough for their developers to understand:

Anthropic’s values study builds on the company’s broader efforts to demystify large language models through what it calls “mechanistic interpretability” — essentially reverse-engineering AI systems to understand their inner workings. Last month, Anthropic researchers published groundbreaking work that used what they described as a “microscope” to track Claude’s decision-making processes. The technique revealed counterintuitive behaviors, including Claude planning ahead when composing poetry and using unconventional problem-solving approaches for basic math.

Several observations:

- Unlike Google which is just saying, “We are the leaders,” Anthropic wants to be the good guys, explaining how its smart software is sensitive to squishy human values

- The write up itself is a content marketing gem

- There is scant evidence that the description of the Anthropic “findings” is reliable.

Let’s slap this Anthropic software into an autonomous drone and let it loose. It will be the AI system able to make those subjective decisions. Light it up and launch.

Stephen E Arnold, April 30, 2025

Google Wins AI, According to Google AI

April 29, 2025

No AI. This old dinobaby just plods along, delighted he is old and this craziness will soon be left behind. What about you?

Wow, not even insecure pop stars explain how wonderful they are at every opportunity. But Google is not going to stop explaining that it is number one in smart software. Never mind the lawsuits. Never mind the Deepseek thing. Never mind Sam AI-Man. Never mind angry Googlers who think the company will destroy the world.

Just get the message, “We have won.”

I know this because I read the weird PR interview called “Demis Hassabis Is Preparing for AI’s Endgame,” which is part of the “news” about the Time 100 most wonderful and intelligent and influential and talented and prescient people in the Time world.

Let’s take a quick look at a few of the statements in the marketing story. Because I am a dinobaby, I will wrap up with a few observations designed to make clear the difference between old geezers like me and the youthful new breed of Time leaders.

Here’s the first passage I noted:

He [Googler Hassabis] believes AGI would be a technology that could not only solve existing problems, but also come up with entirely new explanations for the universe. A test for its existence might be whether a system could come up with general relativity with only the information Einstein had access to; or if it could not only solve a longstanding hypothesis in mathematics, but theorize an entirely new one. “I identify myself as a scientist first and foremost,” Hassabis says. “The whole reason I’m doing everything I’ve done in my life is in the pursuit of knowledge and trying to understand the world around us.”

First comment. Yep, I noticed the reference to Einstein. That’s reasonable intellectual territory for a Googler. I want to point out that the Google is in a bit of legal trouble because it did not play fair. But neither did Einstein. Instead of fighting evil in Europe, he lit out for the US of A. I mean a genius of the Einstein ilk is not going to risk his life. Just think. Google is a thinking outfit, but I would suggest that its brush with authorities is different from Einstein’s. But a scientist working at an outfit in trouble with authorities, no big deal, right? AI is a way to understand the world around us. Breaking the law? What?

The second snippet is this one:

When DeepMind was acquired by Google in 2014, Hassabis insisted on a contractual firewall: a clause explicitly prohibiting his technology from being used for military applications. It was a red line that reflected his vision of AI as humanity’s scientific savior, not a weapon of war.

Well, that red line was made of erasable marker red. It has disappeared. And where is the Nobel prize winner? Still at the Google, that’s the outfit that is in trouble with the law and reasonably good at discarding notions that don’t fit with its goal of generating big revenue from ads and assorted other ventures like self driving taxi cabs. Noble indeed.

Okay, here’s the third comment:

That work [dumping humans for smart software], he says, is not intended to hasten labor disruptions, but instead is about building the necessary scaffolding for the type of AI that he hopes will one day make its own scientific discoveries. Still, as research into these AI “agents” progresses, Hassabis says, expect them to be able to carry out increasingly more complex tasks independently. (An AI agent that can meaningfully automate the job of further AI research, he predicts, is “a few years away.”)

I think that Google will just say, “Yo, dudes, smart software is efficient. Those who lose their jobs can re-skill like the humanoids we are allowing to find their future elsewhere.”

Several observations:

- I think that the Time people are trying to balance their fear of smart software replacing outfits like Time with the excitement of watching smart software create a new way of experiencing making a life. I don’t think the Timers achieved their goal.

- The message that Google thinks, cares, and has lofty goals just doesn’t ring true. Google is in trouble with the law for a reason. It was smart enough to make money, but it was not smart enough to avoid honking off regulators in some jurisdictions. I can’t reconcile illegal behavior with baloney about the good of mankind.

- Google wants to be seen as the big dog of AI. The problem is that saying something is different from the reality of trials, loss of trust among some customer sectors, floundering for a coherent message about smart software, and the baloney that the quantumly supreme Google convinces people to propagate.

Okay, you may love the Time write up. I am amused, and I think some of the lingo will find its way into the Sundar & Prabhakar Comedy Show. Did you hear the one about Google’s AI not being used for weapons?

Stephen E Arnold, April 29, 2025

Innovation: America Has That Process Nailed

April 27, 2025

No AI. Just a dinobaby who gets revved up with buzzwords and baloney.

Has innovation slowed? In smart software, I read about clever uses of AI and ML (artificial intelligence and machine learning). But in my tests of various systems I find the outputs only occasionally useful. Yesterday, I wanted information about a writer who produced an article about a security issue involving the US government. I tried five systems; none located the individual. I finally tracked the person down using manual methods. The smart software was clueless.

An example of American innovation caught my attention this morning (April 27, 2025 at 5:20 am US Eastern time to be exact). I noted the article “Khloé Kardashian Announces Protein Popcorn.” The write up explains:

For anyone khounting their makhros, reality star and entrepreneur Khloé Kardashian unveiled her new product this week: Khloud Protein Popcorn. The new snack boasts 7 grams of protein per serving—two more grams than an entire Jack Links Beef Stick—aligning with consumers’ recent obsession with protein-packed food and drinks. The popcorn isn’t covered in burnt ends—its protein boost comes from a proprietary blend of seasonings and milk protein powder called “Khloud dust” that’s sprinkled over the air-popped kernels.

My thought is that smart software may have contributed to the name of the product: Khloud Protein Popcorn, but I don’t know. The idea that enhanced popcorn has more protein than “an entire Jack Links Beef Stick” is quite innovative I think. Samuel Franklin, author of The Cult of Creativity, may have a different view. Creativity, he asserts, did not become a thing until 1875. I think Khloud Protein Popcorn demonstrates that ingenuity, cleverness, imagination, and artistry are definitely alive and thriving in the Kardashian idea laboratory.

I wonder if this type of innovation is going to resolve some of the problems which appear to beset daily life in April 2025. I doubt it unless one needs some fortification delivered via popcorn.

Without being too creative or innovative in my thinking, is AI/ML emulating Khloé Kardashian’s protein popcorn? We have a flawed but useful service: Web search. That functionality has been degrading for many reasons. To make it possible to find information germane to a particular topic, innovators have jumped on one horse and started riding it to the future. But the horse is getting tired. In fact, after a couple of years of riding around the barn, the innovations in large language models seem to be getting tired, slowing down, and in some cases limping along.

The big announcements from Google, Microsoft, and OpenAI focus on the number of users each has. I think the Google said it had 1.5 billion users of its smart software. Can Google “prove” it? Probably but is that number verifiable? Sure, just like the amount of protein in the Khloud dust sprinkled on the aforementioned popcorn. OpenAI’s ChatGPT on April 26, 2025, output a smarmy message about a system issue. The new service Venice was similarly uncooperative, unable in fact to locate information about a particular Telegram topic related to its discontinuing its Bridge service. Poor Perplexity was very wordy and very confident in its explanation of why it could not locate an item of information. That was hardly a confidence builder.

Here’s my hypothesis: AI/ML, LLMs, and the rest of the smart software jargon have embraced Ms. Kardashian’s protein popcorn approach to doing something new, fresh, creative, and exciting. Imagine AI/ML solutions having more value than an “entire Jack Links Beef Stick.” Next up, smart protein popcorn.

Innovative indeed.

Stephen E Arnold, April 27, 2025

Google Is Just Like Santa with Free Goodies: Get “High” Grades, of Course

April 18, 2025

No AI, just the dinobaby himself.

Google wants to be [a] viewed as the smartest quantumly supreme outfit in the world and [b] like Santa. The “smart” part is part of the company’s culture. The CLEVER approach worked in Web search. Now the company faces what might charitably be called headwinds. There are those pesky legal hassles in the US and some gaining strength in other countries. Also, the competitive world of smart software continues to bedevil the very company that “invented” the transformer. Google gave away some technology, and now everyone from the update champs in Redmond, Washington, to Sam AI-Man is blowing smoke about Google’s systems and methods.

What a state of affairs!

The fix is to give away access to Google’s most advanced smart software to college students. How Santa-like. “Google Is Gifting a Year of Gemini Advanced to Every College Student in the US” reports:

Google has announced today that it’s giving all US college students free access to Gemini Advanced, and not just for a month or two—the offer is good for a full year of service. With Gemini Advanced, you get access to the more capable Pro models, as well as unlimited use of the Deep Research tool based on it. Subscribers also get a smattering of other AI tools, like the Veo 2 video generator, NotebookLM, and Gemini Live. The offer is for the Google One AI Premium plan, so it includes more than premium AI models, like Gemini features in Google Drive and 2TB of Drive storage.

The approach is not new. LexisNexis was one of the first online services to make online legal research available to law school students. It worked. Lawyers are among the savviest of the work fast, bill more professionals. When did LexisNexis move this forward? I recall speaking to a LexisNexis professional named Don Wilson in 1980, and he was eager to tell me about this “new” approach.

I asked Mr. Wilson (who as I recall was a big wheel at LexisNexis then), “That’s a bit like drug dealers giving the curious a ‘taste’?”

He smiled and said, “Exactly.”

In the last 45 years, lawyers have embraced new technology with a passion. I am not going to go through the litany of search, analysis, summarization, and other tools that heralded the success of smart software for the legal folks. I recall the early days of LegalTech when the most common question was, “How?” My few conversations with the professionals laboring in the jungle of law, rules, and regulations have shifted to “which system” and “how much.”

The marketing professionals at Google have “invented” their own approach to hook college students on smart software. My instinct is that Google does not know much about Don Wilson’s big idea. (As an aside, I remember one of Mr. Wilson’s technical colleagues sometimes sported a silver jumpsuit which anticipated some of the fashion choices of Googlers by half a century.)

The write up says:

Google’s intention is to give students an entire school year of Gemini Advanced from now through finals next year. At the end of the term, you can bet Google will try to convert students to paying subscribers.

I am not sure I agree with this. If the program gets traction, Sam AI-Man and others will be standing by with special offers, deals, and free samples. The chemical structure of certain substances is similar to today’s many variants of smart software. Hey, whatever works, right? Whatever is free, right?

Several observations:

- Google’s originality is quantumly supreme

- Some people at the Google dress like Mr. Wilson’s technical wizard, jumpsuit and all

- The competition is going to do their own version of this “original” marketing idea; for example, didn’t Bing offer to pay people to use that outstanding Web search-and-retrieval system?

Net net: Hey, want a taste? It won’t hurt anything. Try it. You will be mentally sharper. You will be more informed. You will have more time to watch YouTube. Trust the Google.

Stephen E Arnold, April 18, 2025