Microsoft: That Old Time Religion Which Sort of Works

November 15, 2024

Having a favorite OS can be akin to being in a technology cult or following a popular religion. Apple people are experienced enthusiasts, Linux users are the odd ones because Linux has a secret language and handshakes, while Microsoft is vanilla with diehard followers. Microsoft apparently loves for its users and employees to adopt this mantra and feed into it, says Edward Zitron of Where’s Your Ed At? in the article, “The Cult Of Microsoft.”

Zitron reviewed hundreds of Microsoft’s internal documents and spoke with the company’s employees about its culture. He learned that Microsoft subscribes to “The Growth Mindset” and that it determines how far someone will go within the hallowed Redmond halls. There are two types of mindset: the growth mindset, in which you can learn and change to continue progressing, and the fixed mindset, in which you believe everything is immutable.

Satya Nadella even wrote a bible of sorts called Hit Refresh that discusses The Growth Mindset. Zitron purports that Nadella wants to set himself up as a messianic figure and used his position to claim a place at the top of the bestseller list. How? He “urged” his Microsoft employees to discuss Hit Refresh with as many people as possible. The communication methods he had his associates use were like a pyramid scheme, aka a multi-level marketing ploy.

Microsoft is as fervent about following The Growth Mindset as women used to be about selling Mary Kay and Avon products. The problem, Zitron reports, is that it has little to do with actual improvement. The Growth Mindset can’t be replicated without the presence of the original creator.

“In other words, the evidence that supports the efficacy of mindset theory is unreliable, and there’s no proof that this actually improves educational outcomes. To quote Wenner Moyer:

‘MacNamara and her colleagues found in their analysis that when study authors had a financial incentive to report positive effects — because, say, they had written books on the topic or got speaker fees for talks that promoted growth mindset — those studies were more than two and half times as likely to report significant effects compared with studies in which authors had no financial incentives.’

Turning to another view: Wenner Moyer’s piece is a balanced rundown of the chaotic world of mindset theory, counterbalanced with a few studies where there were positive outcomes, and focuses heavily on one of the biggest problems in the field — the fact that most of the research is meta-analyses of other people’s data…”

Microsoft has employees write biannual self-performance reviews called Connects. Everyone hates them, but if the employees want raises and to keep their jobs, they have to fill out those forms. What’s even more demeaning is that Copilot is being used to write the Connects. Copilot is throwing out random metrics and achievements that have no basis in fact.

Is the approach similar to a virtual pyramid scheme? Are employees taught or hired to externalize their success and internalize their failures? If anything, the Big Book of MSFT provides grounding in the Redmond way.

Mr. Nadella strikes me as having adopted the principles and mantra of a cult. Will the EU and other regulatory authorities bow before the truth or act out their heresies?

Whitney Grace, November 15, 2024

Is Telegram Inspiring Microsoft?

November 6, 2024

You’d think the tech industry would be creative and original, but it’s exactly like others: everyone copies each other. The Verge runs down how Microsoft is “inspired” by Telegram in: “Microsoft Teams Is Getting Threads And Combined Chats And Channels.” Microsoft plans to bring threads and combine separate chats and channels in the Teams communications app. These changes won’t happen until 2025. They are similar to how Telegram already operates.

Microsoft is updating its UI, because of negative feedback from users. The changes will make Microsoft Teams easier to use and more organized:

“This new UI fixes one of the big reasons Microsoft Teams sucks for messaging, so you no longer have to flick between separate sections to catch up on messages from groups of people or channels. You’ll be able to configure this new section to keep chats and channels separate or enable custom sections where you can group conversations and projects together.”

Teams will include more updates, including a favorites section to pin chats and channels, view customizations such as previews, single lists, and time stamps, and highlighting of conversations that mention users. Microsoft Teams is actively listening to its end users and making changes to improve their experience. That’s a really good business MO, because many tech companies don’t do that.

It raises the question, however, of whether Microsoft is copying Telegram’s threaded conversations. Probably. But who is going to complain?

Whitney Grace, November 6, 2024

Hey, US Government, Listen Up. Now!

November 5, 2024

This post is the work of a dinobaby. If there is art, accept the reality of our using smart art generators. We view it as a form of amusement.

Microsoft on the Issues published “AI for Startups.” The write up is authored by a dream team of individuals deeply concerned about the welfare of their stakeholders, themselves, and their corporate interests. The sensitivity is on display. Who wrote the 1,400 word essay? Setting aside the lawyers, PR people, and advisors, the authors are:

- Satya Nadella, Chairman and CEO, Microsoft

- Brad Smith, Vice-Chair and President, Microsoft

- Marc Andreessen, Cofounder and General Partner, Andreessen Horowitz

- Ben Horowitz, Cofounder and General Partner, Andreessen Horowitz

Let me highlight a couple of passages from the essay (polemic?) which I found interesting.

In the era of trustbusters, some of the captains of industry had firm ideas about the place government professionals should occupy. Look at the railroads. Look at cyber security. Look at the folks living under expressway overpasses. Tumultuous times? That’s on the money. Thanks, MidJourney. A good enough illustration.

Here’s the first snippet:

Artificial intelligence is the most consequential innovation we have seen in a generation, with the transformative power to address society’s most complex problems and create a whole new economy—much like what we saw with the advent of the printing press, electricity, and the internet.

This is a bold statement of the thesis for these intellectual captains of the smart software revolution. I am curious about how one gets from hallucinating software to “the transformative power to address society’s most complex problems and create a whole new economy.” Furthermore, is smart software like printing, electricity, and the Internet? A fact or two might be appropriate. Heck, I would be happy with a nifty Excel chart of some supporting data. But why? This is the first sentence, so back off, you ignorant dinobaby.

The second snippet is:

Ensuring that companies large and small have a seat at the table will better serve the public and will accelerate American innovation. We offer the following policy ideas for AI startups so they can thrive, collaborate, and compete.

Ah, companies large and small and a seat at the table, just possibly down the hall from where the real meetings take place behind closed doors. And the hosts of the real meeting? Big companies like us. As the essay says, “that only a Big Tech company with our scope and size can afford, creating a platform that is affordable and easily accessible to everyone, including startups and small firms.”

The policy “opportunity” for AI startups includes many glittering generalities. The one I like is “help people thrive in an AI-enabled world.” Does that mean universal basic income as smart software “enhances” jobs with McKinsey-like efficiency? Hey, it worked for opioids. It will work for AI.

And what’s a policy statement without a variation on “May you live in interesting times”? The Microsoft a2z twist is, “We obviously live in a tumultuous time.” That’s why the US Department of Justice, the European Union, and a few other Luddites who don’t grok certain behaviors are interested in the big firms which can do smart software right.

Translation: Get out of our way and leave us alone.

Stephen E Arnold, November 5, 2024

Windows Fruit Loop Code, Oops. Boot Loop Code.

October 8, 2024

Windows Update Produces Boot Loops. Again.

Some Windows 11 users are vigilant about staying on top of the latest updates. Recently, such users paid for their diligence with infinite reboots, freezes, and/or the dreaded blue screen of death. Digital Trends warns, “Whatever You Do, Don’t Install the Windows 11 September Update.” Writer Judy Sanhz reports:

“The bug here can cause what’s known as a ‘boot loop.’ This is an issue that Windows versions have had for decades, where the PC will boot and restart endlessly with no way for users to interact, forcing a hard shutdown by holding the power button. Boot loops can be incredibly hard to diagnose and even more complicated to fix, so the fact that we know the latest Windows 11 update can trigger the problem already solves half the battle. The Automatic Repair tool is a built-in feature on your PC that automatically detects and fixes any issues that prevent your computer from booting correctly. However, recent Windows updates, including the September update, have introduced problems such as freezing the task manager and others in the Edge browser. If you’re experiencing these issues, our handy PC troubleshooting guide can help.”

So for many the update hobbled the means to fix it. Wonderful. It may be worthwhile to bookmark that troubleshooting guide. On multiple devices, if possible. Because this is not the first time Microsoft has unleashed this particular aggravation on its users. In fact, the last instance was just this past August. The company has since issued a rollback fix, but one wonders: Why ship a problematic update in the first place? Was it not tested? And is it just us, or does this sound eerily similar to July’s CrowdStrike outage?

(Does the fruit loop experience come with sour grapes?)

Cynthia Murrell, October 8, 2024

Microsoft Security: A World First

September 30, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/09/green-dino_thumb_thumb_thumb_thumb_t2_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

After the somewhat critical comments of the chief information security officer for the US, Microsoft said it would do better security. “Secure Future Initiative” is a 25-page document which contains some interesting comments. Let’s look at a handful.

Some bad actors just go where the pickings are the easiest. Thanks, MSFT Copilot. Good enough.

On page 2 I noted the record beating Microsoft has completed:

Our engineering teams quickly dedicated the equivalent of 34,000 full-time engineers to address the highest priority security tasks—the largest cybersecurity engineering project in history.

Microsoft is a large software company. It has large security issues. Therefore, the company has undertaken the “largest cybersecurity engineering project in history.” That’s great for the Guinness Book of World Records. The question is, “Why?” The answer, it seems to me, is, “Microsoft did ‘good enough’ security.” As the US government’s report stated, “Nope. Not good enough.” Hence, a big and expensive series of changes. Have the changes been tested or have unexpected security issues been introduced to the sprawl of Microsoft software? Another question from this dinobaby: “Can a big company doing good enough security implement fixes to remediate ‘the highest priority security tasks’?” Companies have difficulty changing certain work practices. Can “good enough” methods do the job?

On page 3:

Security added as a core priority for all employees, measured against all performance reviews. Microsoft’s senior leadership team’s compensation is now tied to security performance

Compensation is linked to security as a “core priority.” I am not sure what making something a “core priority” means, particularly when the organization has implemented security systems and methods which have been found wanting. When the US government gives a bad report card, one forms an impression of a fairly deep hole which needs to be filled with functional, reliable bits. Adding a “core priority” does not correlate with security software from cloud to desktop.

On page 5:

To enhance governance, we have established a new Cybersecurity Governance Council…

The creation of a council, the addition of security responsibilities for some executives, and the hiring of a few others mean to me:

- Meetings and delays

- Adding duties may translate to other issues

- How much will these remediating processes cost?

Microsoft may be too big to change its culture in a timely manner. The time required for a council to enhance governance means fixing security problems may take time. Even with additional time and “the equivalent of 34,000 full-time engineers,” the effort may be a project management task of more than modest proportions.

On page 7:

Secure by design

Quite a subhead. How can Microsoft’s sweep of legacy and new products be made secure by design when these products have been shown to be insecure?

On page 10:

Our strategy for delivering enduring compliance with the standard is to identify how we will Start Right, Stay Right, and Get Right for each standard, which are then driven programmatically through dashboard driven reviews.

The alliteration is notable. However, what is “right”? What happens when fixing up existing issues and adhering to a “standard” reveals that the “standard” has changed? The complexity of management and the process of getting something “right” is like an example from a Santa Fe Institute complexity book. The reality of addressing known security issues and conforming to standards which may change is interesting to contemplate. Words are great but remediating what’s wrong in a dynamic and very complicated series of dependent services is likely to be a challenge. Bad actors will quickly probe for new issues. Generally speaking, bad actors find faults and exploit them. Thus, Microsoft will find itself in a troublesome mode: Permanent reactions to previously unknown and new security issues.

On page 11, the security manifesto launches into “pillars.” I think the idea is that good security is built upon strong foundations. But when remediating “as is” code as well as legacy code, how long will the design, engineering, and construction of the pillars take? Months, years, decades, or multiple decades? The US CISO report card may not apply to certain time scales; for instance, big government contracts. Pillars are ideas.

Let’s look at one:

The monitor and detect threats pillar focuses on ensuring that all assets within Microsoft production infrastructure and services are emitting security logs in a standardized format that are accessible from a centralized data system for both effective threat hunting/investigation and monitoring purposes. This pillar also emphasizes the development of robust detection capabilities and processes to rapidly identify and respond to any anomalous access, behavior, and configuration.

The reality of today’s world is that security issues can arise from insiders. Outside threats seem to be identified each week. However, different cyber security firms identify and analyze different security issues. No one cyber security company is delivering 100 percent foolproof threat identification. “Logs” are great; however, Microsoft used to charge for making a logging function available to a customer. Now more logs. The problem is that logs help identify a breach; that is, a previously unknown vulnerability is exploited or an old vulnerability makes its way into a Microsoft system by a user action. How can a company which has a poor report card issued by the US government become the firm with a threat detection system which is the equivalent of products now available from established vendors? The recent CrowdStrike misstep illustrates that the Microsoft culture created the opportunity for the procedural mistake someone made at CrowdStrike. The words are nice, but I am not that confident in Microsoft’s ability to build this pillar. Microsoft may have to punt and buy several competitive systems and deploy them like mercenaries to protect the unmotivated Roman citizens in a century.

I think reading the “Secure Future Initiative” is a useful exercise. Manifestos can add juice to a mission. However, can the troops deliver a victory over the bad actors who swarm to Microsoft systems and services because good enough is like a fried chicken leg to a colony of ants?

Stephen E Arnold, September 30, 2024

Google Rear Ends Microsoft on an EU Information Highway

September 25, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/09/green-dino_thumb_thumb_thumb_thumb_t2_thumb_thumb-1.gif) This essay is the work of a dumb dinobaby. No smart software required.

A couple of high-technology dinosaurs with big teeth and even bigger wallets are squabbling in a rather clever way. If the dispute escalates, some of the smaller vehicles on the EU’s Information Superhighway are going to be affected by a remarkable collision. The orange newspaper published “Google Files Brussels Complaint against Microsoft Cloud Business.” On the surface, the story explains that “Google accuses Microsoft of locking customers into its Azure services, preventing them from easily switching to alternatives.”

Two very large and easily provoked dinosaurs are engaged in a contest in a court of law. Which will prevail, or will both end up with broken arms? Thanks, MSFT Copilot. I think you are the prettier dinosaur.

To put some bite into the allegation, Google aka Googzilla has:

filed an antitrust complaint in Brussels against Microsoft, alleging its Big Tech rival engages in unfair cloud computing practices that has led to a reduction in choice and an increase in prices… Google said Microsoft is “exploiting” its customers’ reliance on products such as its Windows software by imposing “steep penalties” on using rival cloud providers.

From my vantage point this looks like a rear ender; that is, Google — itself under considerable scrutiny by assorted governmental entities — has smacked into Microsoft, a veteran of EU regulatory penalties. Google explained to the monopoly officer that Microsoft was using discriminatory practices to prevent Google, AWS, and Alibaba from closing cloud computing deals.

In a conversation with some of my research team, several observations surfaced from what I would describe as a jaded group. Let me share several of these:

- Locking up business is precisely the “game” for US high-technology dinosaurs with big teeth and some China-affiliated outfits too. I believe the jargon for this business tactic is “lock in.” IBM allegedly found the play helpful when mainframes were the next big thing. Just try and move some government agencies or large financial institutions from their Big Iron to Chromebooks and see how the suggestion is greeted.

- Google has called attention to the alleged illegal actions of Microsoft, bringing the Softies into the EU litigation gladiatorial arena.

- Information provided by Google may illustrate the alleged business practices so that when compared to Google’s approach, Googzilla looks like the ideal golfing partner.

- Any question that US outfits like Google and Microsoft are just mom-and-pop businesses is definitively resolved.

My personal opinion is that Google wants to make certain that Microsoft is dragged into what will be expensive, slow, and probably business trajectory altering legal processes. Perhaps Satya and Sundar will testify as their mercenaries explain that both companies are not monopolies, not hindering competition, and love whales, small start ups, ethical behavior, and the rule of law.

Stephen E Arnold, September 25, 2024

Open Source Dox Chaos: An Opportunity for AI

September 24, 2024

It is a problem as old as the concept of open source itself. ZDNet laments, “Linux and Open-Source Documentation Is a Mess: Here’s the Solution.” We won’t leave you in suspense. Writer Steven Vaughan-Nichols’ solution is the obvious one—pay people to write and organize good documentation. Less obvious is who will foot the bill. Generous donors? Governments? Corporations with their own agendas? That question is left unanswered.

But there is no doubt. Open-source documentation, when it exists at all, is almost universally bad. Vaughan-Nichols recounts:

“When I was a wet-behind-the-ears Unix user and programmer, the go-to response to any tech question was RTFM, which stands for ‘Read the F… Fine Manual.’ Unfortunately, this hasn’t changed for the Linux and open-source software generations. It’s high time we addressed this issue and brought about positive change. The manuals and almost all the documentation are often outdated, sometimes nearly impossible to read, and sometimes, they don’t even exist.”

Not only are the manuals that have been cobbled together outdated and hard to read, they are often so disorganized it is hard to find what one is looking for. Even when it is there. Somewhere. The post emphasizes:

“It doesn’t help any that kernel documentation consists of ‘thousands of individual documents’ written in isolation rather than a coherent body of documentation. While efforts have been made to organize documents into books for specific readers, the overall documentation still lacks a unified structure. Steve Rostedt, a Google software engineer and Linux kernel developer, would agree. At last year’s Linux Plumbers conference, he said, ‘when he runs into bugs, he can’t find documents describing how things work.’ If someone as senior as Rostedt has trouble, how much luck do you think a novice programmer will have trying to find an answer to a difficult question?”

This problem is no secret in the open-source community. Many feel so strongly about it they spend hours of unpaid time working to address it. Until they just cannot take it anymore. It is easy to get burned out when one is barely making a dent and no one appreciates the effort. At least, not enough to pay for it.

Here at Beyond Search we have a question: Why can’t Microsoft’s vaunted Copilot tackle this information problem? Maybe Copilot cannot do the job?

Cynthia Murrell, September 24, 2024

Microsoft Explains Who Is at Fault If Copilot Smart Software Does Dumb Things

September 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Those Windows Central experts have delivered a doozy of a write up. “Microsoft Says OpenAI’s ChatGPT Isn’t Better than Copilot; You Just Aren’t Using It Right, But Copilot Academy Is Here to Help” explains:

Avid AI users often boast about ChatGPT’s advanced user experience and capabilities compared to Microsoft’s Copilot AI offering, although both chatbots are based on OpenAI’s technology. Earlier this year, a report disclosed that the top complaint about Copilot AI at Microsoft is that “it doesn’t seem to work as well as ChatGPT.”

I think I understand. Microsoft uses OpenAI, other smart software, and home brew code to deliver Copilot in apps, the browser, and Azure services. However, users have reported that Copilot doesn’t work as well as ChatGPT. That’s interesting. Hallucination-capable software processed by the Microsoft engineering legions is allegedly inferior to ChatGPT.

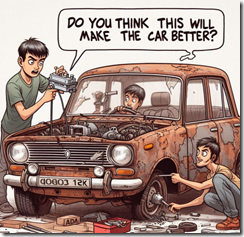

Enthusiastic young car owners replace individual parts. But the old car remains an old, rusty vehicle. Thanks, MSFT Copilot. Good enough. No, I don’t want to attend a class to learn how to use you.

Who is responsible? The answer certainly surprised me. Here’s what the Windows Central wizards offer:

A Microsoft employee indicated that the quality of Copilot’s response depends on how you present your prompt or query. At the time, the tech giant leveraged curated videos to help users improve their prompt engineering skills. And now, Microsoft is scaling things a notch higher with Copilot Academy. As you might have guessed, Copilot Academy is a program designed to help businesses learn the best practices when interacting and leveraging the tool’s capabilities.

I think this means that the user is at fault, not Microsoft’s refactored version of OpenAI’s smart software. The fix is for the user to learn how to write prompts. Microsoft is not responsible. But OpenAI’s implementation of ChatGPT is perceived as better. Furthermore, training to use ChatGPT is left to third parties. I hope I am close to the pin on this summary. OpenAI just puts Strawberries in front of hungry users and lets them gobble up ChatGPT output. Microsoft fixes up ChatGPT and users are allegedly not happy. Therefore, Microsoft puts the burden on the user to learn how to interact with the Microsoft version of ChatGPT.

I thought smart software was intended to make work easier and more efficient. Why do I have to go to school to learn Copilot when I can just pound text or a chunk of data into ChatGPT, click a button, and get an output? Not even a Palantir boot camp will lure me to the service. Sorry, pal.

My hypothesis is that Microsoft is a couple of steps away from creating something designed for regular users. In its effort to “improve” ChatGPT, the experience of using Copilot makes the user’s life more miserable. I think Microsoft’s own engineering practices act like a stuck brake on an old Lada. The vehicle has problems, so installing a new master cylinder does not improve the automobile.

Crazy thinking: That’s what the write up suggests to me.

Stephen E Arnold, September 23, 2024

Equal Opportunity Insecurity: Microsoft Mac Apps

August 28, 2024

Isn’t it great that Mac users can use Microsoft Office software on their devices these days? Maybe not. Apple Insider warns, “Security Flaws in Microsoft Mac Apps Could Let Attackers Spy on Users.” The vulnerabilities were reported by threat intelligence firm Cisco Talos. Writer Andrew Orr tells us:

“Talos claims to have found eight vulnerabilities in Microsoft apps for macOS, including Word, Outlook, Excel, OneNote, and Teams. These vulnerabilities allow attackers to inject malicious code into the apps, exploiting permissions and entitlements granted by the user. For instance, attackers could access the microphone or camera, record audio or video, and steal sensitive information without the user’s knowledge. The library injection technique inserts malicious code into a legitimate process, allowing the attacker to operate as the compromised app.”

Microsoft has responded with its characteristic good-enough approach to security. We learn:

“Microsoft has acknowledged vulnerabilities found by Cisco Talos but considers them low risk. Some apps, like Microsoft Teams, OneNote, and the Teams helper apps, have been modified to remove the this entitlement, reducing vulnerability. However, other apps, such as Microsoft Word, Excel, Outlook, and PowerPoint, still use this entitlement, making them susceptible to attacks. Microsoft has reportedly ‘declined to fix the issues,’ because of the company’s apps ‘need to allow loading of unsigned libraries to support plugins.’”

Well alright then. Leaving the vulnerability up for Outlook is especially concerning since, as Orr points out, attackers could use it to send phishing or other unauthorized emails. There is only so much users can do in the face of corporate indifference. The write-up advises us to keep up with app updates to ensure we get the latest security patches. That is good general advice, but it only works if appropriate patches are actually issued.

Cynthia Murrell, August 28, 2024

Copilot and Hackers: Security Issues Noted

August 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The online publication Cybernews ran a story I found interesting. Its title suggests something about Black Hat USA 2024 attendees I have not considered. Here’s the headline:

Black Hat USA 2024: Microsoft’s Copilot Is Freaking Some Researchers Out

Wow. Hackers (black, gray, white, and multi-hued) are “freaking out.” As defined by the estimable Urban Dictionary, “freaking” means:

Obscene dancing which simulates sex by the grinding the of the genitalia with suggestive sounds/movements. often done to pop or hip hop or rap music

No kidding? At Black Hat USA 2024?

Thanks, Microsoft Copilot. Freak out! Oh, your dance moves are good enough.

The article reports:

Despite Microsoft’s claims, cybersecurity researcher Michael Bargury demonstrated how Copilot Studio, which allows companies to build their own AI assistant, can be easily abused to exfiltrate sensitive enterprise data. We also met with Bargury during the Black Hat conference to learn more. “Microsoft is trying, but if we are honest here, we don’t know how to build secure AI applications,” he said. His view is that Microsoft will fix vulnerabilities and bugs as they arise, letting companies using their products do so at their own risk.

Wait. I thought Microsoft had tied cash to security work. I thought security was Job #1 at the company which recently accused Delta Airlines of using outdated technology and failing its customers. Is that the Microsoft that Mr. Bargury is suggesting has zero clue how to make smart software secure?

With MSFT Copilot turning up in places that surprise me, perhaps the Microsoft great AI push is creating more problems. The SolarWinds glitch was exciting for some, but if Mr. Bargury is correct, cyber security life will be more and more interesting.

Stephen E Arnold, August 12, 2024