AI: Big Ideas and Bigger Challenges for the Next Quarter Century. Maybe, Maybe Not

February 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an interesting ArXiv.org paper with a good title: “Ten Hard Problems in Artificial Intelligence We Must Get Right.” The topic is one which will interest some policy makers, a number of AI researchers, and the “experts” in machine learning, artificial intelligence, and smart software.

The structure of the paper is, in my opinion, a three-legged stool analysis designed to support the weight of AI optimists. The first part of the paper is a compressed historical review of the AI journey. Diagrams, tables, and charts capture the direction in which AI “deep learning” has traveled. I am no expert in what has become the next big thing, but the surprising point in the historical review is that 2010 is the date pegged as the start to the 2016 time point called “the large scale era.” That label is interesting for two reasons. First, I recall that some intelware vendors were in the AI game before 2010. And, second, the use of the phrase “large scale” defines a reality in which small outfits are unlikely to succeed without massive amounts of money.

The second leg of the stool is the identification of the “hard problems” and a discussion of each. Research data and illustrations bring each problem to the reader’s attention. I don’t want to get snagged in the plagiarism swamp which has captured many academics, wives of billionaires, and a few journalists. My approach will be to boil down the 10 problems to a short phrase and a reminder to you, gentle reader, that you should read the paper yourself. Here is my version of the 10 “hard problems” which the authors seem to suggest will be or must be solved in 25 years:

- Humans will have extended AI by 2050

- Humans will have solved problems associated with AI safety, capability, and output accuracy

- AI systems will be safe, controlled, and aligned by 2050

- AI will make contributions in many fields; for example, mathematics by 2050

- AI’s economic impact will be managed effectively by 2050

- Use of AI will be globalized by 2050

- AI will be used in a responsible way by 2050

- Risks associated with AI will be managed effectively by 2050

- Humans will have adapted their institutions to AI by 2050

- Humans will have addressed what it means to be “human” by 2050

Many years ago I worked for a blue-chip consulting firm. I participated in a number of big-idea projects. These ranged from technology and R&D investment to new product development and the global economy. In our for-fee reports we did include a look at what we called the “horizon.” The firm had its own typographical signature for this portion of a report. I recall learning this in the firm’s “charm school” (a special training program to make sure new hires knew the style, approach, and ground rules for remaining employed at that blue-chip firm). We kept the horizon tight; that is, talking about the future was typically in the six to 12 month range. Nosing out 25 years was a walk into a mine field. My boss, as I recall, told me, “We don’t do science fiction.”

The smart robot is informing the philosopher that he is free to find his future elsewhere. The date of the image is 2025, right before the new year holiday. Thanks, MidJourney. Good enough.

The third leg of the stool is the academic impedimenta. To be specific, the paper is 90 pages in length, of which 30 present the argument. The remaining 60 pages present:

- Traditional footnotes, about 35 pages containing 607 citations

- An “Electronic Supplement” presenting eight pages of annexes with text, charts, and graphs

- Footnotes to the “Electronic Supplement” requiring another 10 pages for the additional 174 footnotes.

I want to offer several observations, and I do not want these to be less than constructive or in any way “mean spirited.” That is how one of my professors described a fateful letter after he was treated harshly in Letters to the Editor for an article he published about Chaucer.

- The paper makes clear that mankind has some work to do in the next 25 years. The “problems” the paper presents are difficult ones because they touch upon the fabric of social existence. Consider the application of AI to war. I think this aspect of AI may be one to warrant a bullet on AI’s hit parade.

- Humans have to resolve issues of automated systems consuming verifiable information, synthetic data, and purpose-built disinformation so that smart software does not do things at speed and behind the scenes. Do those working to resolve the 10 challenges have an ethical compass, and if so, what does “ethics” mean in the context of at-scale AI?

- Social institutions are under stress. A number of organizations and nation-states operate as dictatorships. One Central American country has a rock star dictator, but what about the rock star dictators working at techno feudal companies in the US? What governance structures will be crafted by 2050 to shape today’s technology juggernaut?

To sum up, I think the authors have tackled a difficult problem. I commend their effort. My thought is that any message of optimism about AI is likely to be hard pressed to point to one of the 10 challenges and say, “We have this covered.” I liked the write up. I think college students tasked with writing about the social implications of AI will find the paper useful. It provides much of the research a fresh young mind requires to write a paper, possibly a thesis. For me, the paper is a reminder of the disconnect between applied technology and the appallingly inefficient, convenience-embracing humans who are ensnared in the smart software.

I am a dinobaby, and let me tell you, “I am glad I am old.” With AI struggling with go-fast and regulators waffling about go-slow, humankind has quite a bit of social system tinkering to do by 2050 if the authors of the paper have analyzed AI correctly. Yep, I am delighted I am old, really old.

Stephen E Arnold, February 13, 2024

AI Risk: Are We Watching Where We Are Going?

December 27, 2023

This essay is the work of a dumb dinobaby. No smart software required.

To brighten your New Year, navigate to “Why We Need to Fear the Risk of AI Model Collapse.” I love those words: Fear, risk, and collapse. I noted this passage in the write up:

When an AI lives off a diet of AI-flavored content, the quality and diversity is likely to decrease over time.

I think the idea of marrying one’s first cousin or training an AI model on AI-generated content is a bad idea. I don’t really know, but I find the idea interesting. The write up continues:

Is this model at risk of encountering a problem? Looks like it to me. Thanks, MSFT Copilot. Good enough. Falling off the I beam was a non-starter, so we have a more tame cartoon.

Model collapse happens when generative AI becomes unstable, wholly unreliable or simply ceases to function. This occurs when generative models are trained on AI-generated content – or “synthetic data” – instead of human-generated data. As time goes on, “models begin to lose information about the less common but still important aspects of the data, producing less diverse outputs.”
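The mechanism in that quoted passage can be shown with a toy simulation. This is a minimal sketch, not anything taken from the cited write up: the token names, the population sizes, and the functions `resample_generation` and `simulate_collapse` are all hypothetical. Each "generation" is trained only on samples drawn from the previous generation's output, and the count of distinct tokens stands in for diversity. Once a rare token fails to be sampled, it can never come back.

```python
import random


def resample_generation(population, sample_size, rng):
    """Build the next generation's training data by sampling, with
    replacement, from the previous generation's output."""
    return [rng.choice(population) for _ in range(sample_size)]


def simulate_collapse(generations=30, sample_size=200, seed=0):
    """Return the number of distinct tokens present at each generation.

    Assumption for illustration: the starting "human" data holds one
    common token (150 copies) and 50 rare tokens (one copy each).
    """
    rng = random.Random(seed)
    population = ["common"] * 150 + [f"rare_{i}" for i in range(50)]
    diversity = [len(set(population))]
    for _ in range(generations):
        # Each generation sees only what the previous one produced.
        population = resample_generation(population, sample_size, rng)
        diversity.append(len(set(population)))
    return diversity


if __name__ == "__main__":
    # Print the distinct-token count for generation 0, 1, 2, ...
    print(simulate_collapse())
```

The diversity count can stay flat or fall from one generation to the next, but it cannot rise, because a token absent from one generation's output has nothing to be sampled from in the next. Real generative models are far more complicated than a resampled list, so treat this as an illustration of the "less common aspects get lost" idea and nothing more.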

I think this passage echoes some of my team’s thoughts about the SAIL Snorkel method. Googzilla needs a snorkel when it does data dives in some situations. The company often deletes data until a legal proceeding reveals what’s under the company’s expensive, smooth, sleek, true blue, gold trimmed kimonos.

The write up continues:

There have already been discussions and research on perceived problems with ChatGPT, particularly how its ability to write code may be getting worse rather than better. This could be down to the fact that the AI is trained on data from sources such as Stack Overflow, and users have been contributing to the programming forum using answers sourced in ChatGPT. Stack Overflow has now banned using generative AIs in questions and answers on its site.

The essay explains three ways to remediate the problem. (I like fairy tales.) The first is to use data that comes from “reliable sources.” What’s the definition of reliable? Yeah, problem. Second, the smart software companies have to reveal what data were used to train a model. Yeah, techno feudalists totally embrace transparency. And, third, “ablate” or “remove” “particular data” from a model. Yeah, who defines “bad” or “particular” data? How about the techno feudalists, their contractors, or their former employees?

For now, let’s just use our mobile phone to access MSFT Copilot and fix our attention on the screen. What’s to worry about? The person in the cartoon put the humanoid form in the apparently risky and possibly dumb position. What could go wrong?

Stephen E Arnold, December 27, 2023

Facing an Information Drought Tech Feudalists Will Innovate

December 18, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The Exponential View (Azeem Azhar) tucked an item in his “blog.” The item is important, but I am not familiar with the cited source of the information in “LLMs May Soon Exhaust All Available High Quality Language Data for Training.” The main point is that the go-to method for smart software requires information in volume to [a] be accurate, [b] remain up to date, and [c] be sufficiently useful to pay for the digital plumbing.

Oh, oh. The water cooler is broken. Will the Pilates’ teacher ask the students to quench their thirst with synthetic water? Another option is for those seeking refreshment to rejuvenate tired muscles with more efficient metabolic processes. The students are not impressed with these ideas? Thanks, MSFT Copilot. Two tries and close enough.

One datum indicates / suggests that the Big Dogs of AI will run out of content to feed into their systems in either 2024 or 2025. The date is less important than the idea of a hard stop.

What will the AI companies do? The essay asserts:

OpenAI has shown that it’s willing to pay eight figures annually for historical and ongoing access to data — I find it difficult to imagine that open-source builders will…. There are ways other than proprietary data to improve models, namely synthetic data, data efficiency, and algorithmic improvements – yet it looks like proprietary data is a moat open-source cannot cross.

Several observations:

- New methods of “information” collection will be developed and deployed. Some of these will be “off the radar” of users by design. One possibility is mining the changes to draft content in certain systems. Changes or deltas can be useful to some analysts.

- The synthetic data angle will become a go-to method using data sources which, by themselves, are not particularly interesting. However, when cross correlated with other information, “new” data emerge. The new data can be aggregated and fed into other smart software.

- Rogue organizations will acquire proprietary data and “bitwash” the information. As with money laundering systems, the origin of the data is fuzzified or obscured, making figuring out what happened expensive and time consuming.

- Techno feudal organizations will explore new non commercial entities to collect certain data; for example, the non governmental organizations in a niche could be approached for certain data provided by supporters of the entity.

Net net: Running out of data is likely to produce one high probability event: Certain companies will begin taking more aggressive steps to make sure their digital water cooler is filled and working for their purposes.

Stephen E Arnold, December 18, 2023

Mastercard and Customer Information: A Lone Ranger?

October 26, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-24.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

In my lectures, I often include a pointer to sites selling personal data. Earlier this month, I explained that the clever founder of Frank Financial acquired email information about high school students from two off-the-radar data brokers. These data were mixed with “real” high school student email addresses to provide a frothy soup of more than a million email addresses. These looked okay. The synthetic information was “good enough” to cause JPMorgan Chase to output a bundle of money to the alleged entrepreneur winners.

A fisherman chasing a slippery eel named Trust. Thanks, MidJourney. You do have a knack for recycling Godzilla art, don’t you?

I thought about JPMorgan Chase when I read “Mastercard Should Stop Selling Our Data.” The article makes clear that Mastercard sells its customers’ (users’?) data. Mastercard is a financial institution. JPMC is a financial institution. One sells information; the other gets snookered by data. I assume that’s the yin and yang of doing business in the US.

The larger question is, “Are financial institutions operating in a manner harmful to themselves (JPMC) and harmful to others (personal data about Mastercard customers (users?))?” My hunch is that today I am living in an “anything goes” environment. Would the Great Gatsby be even greater today? Why not own Long Island and its railroad? That sounds like a plan similar to those of high fliers, doesn’t it?

The cited article has a bias. The Electronic Frontier Foundation is allegedly looking out for me. I suppose that’s a good thing. The article aims to convince me; for example:

the company’s position as a global payments technology company affords it “access to enormous amounts of information derived from the financial lives of millions, and its monetization strategies tell a broader story of the data economy that’s gone too far.” Knowing where you shop, just by itself, can reveal a lot about who you are. Mastercard takes this a step further, as U.S. PIRG reported, by analyzing the amount and frequency of transactions, plus the location, date, and time to create categories of cardholders and make inferences about what type of shopper you may be. In some cases, this means predicting who’s a “big spender” or which cardholders Mastercard thinks will be “high-value”—predictions used to target certain people and encourage them to spend more money.

Are outfits like Chase Visa selling their customer (user) data? (Yep, that is the same JPMC whose eagle-eyed acquisitions team could not identify synthetic data and which enables some Amazon credit card activities.) Also, what about men-in-the-middle like Amazon? The data from its much-loved online shopping, book store, and content brokering service might be valuable to some, I surmise. How much would an entity pay for information about an Amazon customer who purchased item X (a 3D printer) and purchased Kindle books about firearm related topics?

The EFF article uses a word which gives me the willies: Trust. For a time, when I was working in different government agencies, the phrase “trust but verify” was in wide use. Am I able to trust the EFF and its interpretation from a unit of the Public Interest Network? Am I able to trust a report about data brokering? Am I able to trust an outfit like JPMC?

My thought is that if JPMC itself can be fooled by a 31 year old and a specious online app, “trust” is not the word I can associate with any entity’s action in today’s business environment.

This dinobaby is definitely glad to be old.

Stephen E Arnold, October 26, 2023

Recent Googlies: The We-Care-about-Your-Experience Outfit

October 18, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-23.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I flipped through some recent items from my newsfeed and noted several about everyone’s favorite online advertising platform. Herewith is my selection for today:

ITEM 1. Boing Boing, “Google Reportedly Blocking Benchmarking Apps on Pixel 8 Phones.” If the mobile devices were fast — what the GenX and younger folks call “performant” (weird word, right?) — wouldn’t the world’s largest online ad service make speed test software and its results widely available? If not, perhaps the mobile devices are digital turtles?

Hey, kids. I just want to be your friend. We can play hide and seek. We can share experiences. You know that I do care about your experiences. Don’t run away, please. I want to be sticky. Thanks, MidJourney, you have a knack for dinosaur art. Boy that creature looks familiar.

ITEM 2. The Next Web, “Google to Pay €3.2M Yearly Fee to German News Publishers.” If Google traffic and its benefits were so wonderful, why would the Google pay publishers? Hmmm.

ITEM 3. The Verge (yep, the green weird logo outfit), “YouTube Is the Latest Large Platform to Face EU Scrutiny Regarding the War in Israel.” Why is the EU so darned concerned about an online advertising company which still sells wonderful Google Glass, expresses much interest in a user’s experience, and some fondness for synthetic data? Trust? Failure to filter certain types of information? A reputation for outstanding business policies?

ITEM 4. Slashdot quoted a document spotted by the Verge (see ITEM 3) which includes this statement: “… Google rejects state and federal attempts at requiring platforms to verify the age of users.” Google cares about “user experience” too much to fool with administrative and compliance functions.

ITEM 5. The BBC reports in “Google Boss: AI Too Important Not to Get Right.” The tie up between Cambridge University and Google is similar to the link between MIT and IBM. One omission in the fluff piece: No definition of “right.”

ITEM 6. Arstechnica reports that Google has annoyed the estimable New York Times. Google, it seems, is using its legal brigades to do some Fancy Dancing at the antitrust trial. Access to public trial exhibits has been noted. Plus, requests from the New York Times are being ignored. Is the Google above the law? What does “public” mean?

Yep, Google googlies.

Stephen E Arnold, October 18, 2023

Nature Will Take Its Course among Academics

October 18, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-9.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“How ChatGPT and Other AI Tools Could Disrupt Scientific Publishing: A World of AI-Assisted Writing and Reviewing Might Transform the Nature of the Scientific Paper” provides a respected publisher’s view of smart software. The viewshed is interesting, but it is different from my angle of sight. But “might”! How about “has”?

Peer reviewed publishing has been associated with backpatting, non-reproducible results, made-up data, recycled research, and grant grooming. The recent resignation of the president of Stanford University did not boost the image of academicians in my opinion.

The write up states:

The accessibility of generative AI tools could make it easier to whip up poor-quality papers and, at worst, compromise research integrity, says Daniel Hook, chief executive of Digital Science, a research-analytics firm in London. “Publishers are quite right to be scared,” says Hook. (Digital Science is part of Holtzbrinck Publishing Group, the majority shareholder in Nature’s publisher, Springer Nature; Nature’s news team is editorially independent.)

Hmmm. I like the word “scared.”

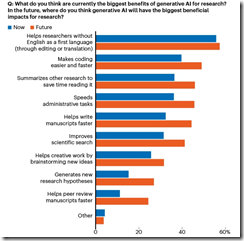

If you grind through the verbal fancy dancing, you will come to research results and the graphic reproduced below:

This graphic is from Nature, a magazine which tried hard not to publish non-reproducible results, fake science, or synthetic data. Would a write up from the former Stanford University president or the former head of the Harvard University ethics department find their way to Nature’s audience? I don’t know.

Missing from the list is the obvious use of smart software: Let it do the research. Let the LLM crank out summaries of dull PDF papers (citations). Let the AI spit out a draft. Graduate students or research assistants can add some touch ups. The scholar can then mail it off to an acquaintance at a prestigious journal, point out the citations which point to that individual’s “original” work, and hope for the best.

Several observations:

- Peer reviewing is the realm of professional publishing. Money, not accuracy or removing bogus research, is the name of the game.

- The tenure game means that academics who want to have life-time employment have to crank out “research” and pony up cash to get the article published. Sharks and sucker fish are an ecological necessity it seems.

- In some disciplines like quantum computing or advanced mathematics, the number of people who can figure out if the article is on the money are few, far between, and often busy. Therefore, those who don’t know their keyboard’s escape key from a home’s “safe” room are ill equipped to render judgment.

Will this change? Not if those on tenure track or professional publishers have anything to say about the present system. The status quo works pretty well.

Net net: Social media is not the only channel for misinformation and fake data.

Stephen E Arnold, October 18, 2023

Is Google Setting a Trap for Its AI Competition?

October 6, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The litigation about the use of Web content to train smart generative software is ramping up. Outfits like OpenAI, Microsoft, and Amazon and its new best friend will be snagged in the US legal system.

But what big outfit will be ready to offer those hungry to use smart software without legal risk? The answer is the Google.

How is this going to work?

Simple. Google is beavering away with its synthetic data. Some real data are used to train sophisticated stacks of numerical recipes. The idea is that these algorithms will be “good enough”; thus, the need for “real” information is obviated. And Google has another trick up its sleeve. The company has coveys of coders working on trimmed down systems and methods. The idea is that using less information will produce more and better results than the crazy idea of indexing content from wherever in real time. The small data can be licensed when the competitors are spending their days with lawyers.

How do I know this? I don’t but Google is providing tantalizing clues in marketing collateral like “Researchers from the University of Washington and Google have Developed Distilling Step-by-Step Technology to Train a Dedicated Small Machine Learning Model with Less Data.” The author is a student who provides sources for the information about the “less is more” approach to smart software training.

And, may the Googlers sing her praises, she cites Google technical papers. In fact, one of the papers is described by the fledgling Googler as “groundbreaking.” Okay.

What’s really being broken is the approach of some of Google’s most formidable competition.

When will the Google spring its trap? It won’t. But as the competitors get stuck in legal mud, the Google will be an increasingly attractive alternative.

The last line of the Google marketing piece says:

Check out the Paper and Google AI Article. All Credit For This Research Goes To the Researchers on This Project. Also, don’t forget to join our 30k+ ML SubReddit, 40k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

Get that young marketer a Google mouse pad.

Stephen E Arnold, October 6, 2023

Turn Left at Ethicsville and Go Directly to Immoraland, a New Theme Park

September 14, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Stanford University lost a true icon of scholarship. Why is this individual leaving the august institution, a hot spot of modern ethical and moral discourse? Yeah, the leader apparently confused real and verifiable data with less real and tough-to-verify data. Across the country, an ethics professor no less is on leave or parked in an academic rest area over a similar allegation. I will not dwell on the outstanding concept of just using synthetic data to inform decision models, a practice once held in esteem at the Stanford Artificial Intelligence Lab.

“Gasp,” one PhD utters. An audience of scholars reveals shock and maybe horror when a colleague explains that making up, recycling, or discarding data at odds with the “real” data is perfectly reasonable. The brass ring of tenure and maybe a prestigious award for research justify a more hippy dippy approach to accuracy. And what about grants? Absolutely. Money allows top-quality research to be done by graduate assistants. Everyone needs someone to blame. MidJourney, keep on slidin’ down that gradient descent, please.

“Scientist Shocks Peers by Tailoring Climate Study” provides more color for these no-ethics actions by leaders of impressionable youth. I noted this passage:

While supporters applauded Patrick T. Brown for flagging what he called a one-sided climate “narrative” in academic publishing, his move surprised at least one of his co-authors—and angered the editors of leading journal Nature. “I left out the full truth to get my climate change paper published,” read the headline to an article signed by Brown…

Ah, the greater good logic.

The write up continued:

A number of tweets applauded Brown for his “bravery”, “openness” and “transparency”. Others said his move raised ethical questions.

The write up raised just one question I would like answered: “Where has education gone?” Answer: Immoraland, a theme park with installations at Stanford and Harvard with more planned.

Stephen E Arnold, September 14, 2023

Google: Running the Same Old Game Plan

July 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Google has been running the same old game plan since the early 2000s. But some experts are unaware of its simplicity. In the period from 2002 to 2004, I did a number of reports for my commercial clients about Google. In 2004, I recycled some of the research and analysis into The Google Legacy. The thesis of the monograph, published in England by the now defunct Infonortics Ltd., was that the infrastructure for search was enhanced to provide an alternative to commercial software for personal, business, and government use. The idea that a search-and-retrieval system based on precedent technology and funded in part by the National Science Foundation with a patent assigned to Stanford University could become Googzilla was a difficult idea to swallow. One of the investment banks who paid for our research got the message even though others did not. I wonder if that one group at the then world’s largest software company remembers my lecture about the threat Google posed to a certain suite of software applications? Probably not. The 20 somethings and the few suits at the lecture looked like kindergarteners waiting for recess.

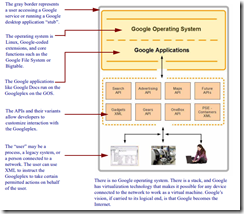

I followed up The Google Legacy with Google Version 2.0: The Calculating Predator. This monograph was again based on proprietary research done for my commercial clients. I recycled some of the information, scrubbing that which was deemed inappropriate for anyone to buy for a few British pounds. In that work, I rather methodically explained that Google’s patent documents provided useful information about why the mere Web search engine was investing in what seemed like odd-ball technologies like software janitors. I reworked one diagram to show how the Google infrastructure operated like a prison cell or walled garden. The idea is that once one is in, one may have to work to get past the gatekeeper to get out. I know the image from a book does not translate to a blog post, but, truth be told, I am disinclined to recreate art. At age 78, it is often difficult to figure out why smart drawing tools are doing what they want, not what I want.

Here’s the diagram:

The prison cell or walled garden (2006) from Google Version 2.0: The Calculating Predator, published by Infonortics Ltd., 2006. And for any copyright trolls out there, I created the illustration 20 years ago, not Alamy and not Getty and no reputable publisher.

Three observations about the diagram are: [a] The box, prison cell, or walled garden contains entities, [b] once “in” there is a way out but the exit is via Google intermediated, defined, and controlled methods, and [c] anything in the walled garden perceives that the prison cell is the outside world. The idea obviously is for Google to become the digital world which people will perceive as the Internet.

I thought about my decades old research when I read “Google Tries to Defend Its Web Environment Integrity as Critics Slam It as Dangerous.” The write up explains that Google wants to make online activity better. In the comments to the article, several people point out that Google is using jargon and fuzzy misleading language to hide its actual intentions with the WEI.

The critics and the write up miss the point entirely: Look at the diagram. WEI, like the AMP initiative, is another method added to existing methods for Google to extend its hegemony over online activity. The patent, implement, and explain approach drags out over years. People with short attention spans, even academics who make up data like the president of Stanford University, are not interested in anything other than personal goal achievement. Finding out something visible for years is difficult. When some interesting factoid is discovered, few accept it. Google has a great brand, and it cares about user experience and the other fog the firm generates.

MidJourney created this nice image of a Googler preparing for a presentation to the senior management of Google in 2001. In that presentation, the wizard was outlining Google’s fundamental strategy: Fake left, go right. The slogan for the company, based on my research: Keep them fooled. Looking the wrong way is the basic rule of being a successful Googler, strategist, or magician.

Will Google WEI win? It does not matter because Google will just whip up another acronym, toss some verbal froth around, and move forward. What is interesting to me is Google’s success. Points I have noted over the years are:

- Kindergarten colors, Google mouse pads, and talking like General Electric once did about “bringing good things” continue to work

- Google’s dominance is not just accepted; changing or blocking anything Google wants to do is sacrilegious. It has become a sacred digital cow

- The inability of regulators to see Google as it is remains a constant, like Google’s advertising revenue

- Certain government agencies could not perform their work if Google were impeded in any significant way. No, I will not elaborate on this observation in a public blog post. Don’t even ask. I may make a comment in my keynote at the Massachusetts / New York Association of Crime Analysts’ conference in early October 2023. If you can’t get in, you are out of luck getting information on Point Four.

Net net: Fire up your Chrome browser. Look for reality in the Google search results. Turn cartwheels to comply with Google’s requirements. Pay money for traffic via Google advertising. Learn how to create good blog posts from Google search engine optimization experts. Use Google Maps. Put your email in Gmail. Do the Google thing. Then ask yourself, “How do I know if the information provided by Google is ‘real’?” Just don’t get curious about synthetic data for Google smart software. Predictions about Big Brother are wrong. Google, not the government, is the digital parent whom you embraced after a good “Backrub.” Why change from high school science thought processes? If it ain’t broke, don’t fix it.

Stephen E Arnold, July 31, 2023

Annoying Humans Bedevil Smart Software

June 29, 2023

Humans are inherently biased. While sexist, ethnic, and socioeconomic prejudices are implied as the cause behind biases, unconscious obliviousness is more likely to be the culprit. Whatever causes us to be biased, AI developers are unfortunately teaching AI algorithms our fallacies. Bloomberg investigates how AI is being taught bad habits in the article, “Humans Are Biased, Generative AI Is Even Worse.”

Stable Diffusion is one of the many AI bots that generate images from text prompts. Based on these prompts, it delivers images that display an inherent bias in favor of white men and discriminate against women and brown-skinned people. Using Stable Diffusion, Bloomberg conducted a test of 5,000 AI images. Analysis of the images found that Stable Diffusion is more racist and sexist than real life.

While Stable Diffusion and other text-to-image AI are entertaining, they are already employed by politicians and corporations. AI-generated images and videos set a dangerous precedent, because they allow bad actors to propagate false information ranging from conspiracy theories to harmful ideologies. Ethical advocates, politicians, and some AI leaders are lobbying for moral guidelines, but a majority of tech leaders and politicians are not concerned:

“Industry researchers have been ringing the alarm for years on the risk of bias being baked into advanced AI models, and now EU lawmakers are considering proposals for safeguards to address some of these issues. Last month, the US Senate held a hearing with panelists including OpenAI CEO Sam Altman that discussed the risks of AI and the need for regulation. More than 31,000 people, including SpaceX CEO Elon Musk and Apple co-founder Steve Wozniak, have signed a petition posted in March calling for a six-month pause in AI research and development to answer questions around regulation and ethics. (Less than a month later, Musk announced he would launch a new AI chatbot.) A spate of corporate layoffs and organizational changes this year affecting AI ethicists may signal that tech companies are becoming less concerned about these risks as competition to launch real products intensifies.”

Biased datasets for AI are not new. AI developers must create more diverse and “clean” data that incorporates a true, real-life depiction. The answer may be synthetic data; that is, human involvement is minimized — except when the system has been set up.

Whitney Grace, June 29, 2023