Meta Mismatch: Good at One Thing, Not So Good at Another

May 27, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “While Meta Stuffs AI Into All Its Products, It’s Apparently Helpless to Stop Perverts on Instagram From Publicly Lusting Over Sexualized AI-Generated Children.” The main idea is that Meta has a problem stopping “perverts.” You know a “pervert,” don’t you? One can spot ’em when one sees ’em. The write up reports:

As Facebook and Instagram owner Meta seeks to jam generative AI into every feasible corner of its products, a disturbing Forbes report reveals that the company is failing to prevent those same products from flooding with AI-generated child sexual imagery. As Forbes reports, image-generating AI tools have given rise to a disturbing new wave of sexualized images of children, which are proliferating throughout social media — the Forbes report focused on TikTok and Instagram — and across the web.

What is Meta doing or not doing? The write up is short on technical details. In fact, there are no technical details. Is it possible that any online service allowing anyone to comment or upload content will find some users doing something “bad”? Online requires something that most people don’t want. The secret ingredient is spelling out an editorial policy and making decisions about what is appropriate or inappropriate for an “audience.” Note that I have converted digital addicts into an audience, albeit one that participates.

Two fictional characters are supposed to be working hard and doing their level best. Thanks, MSFT Copilot. How has that Cloud outage affected the push to more secure systems? Hello, hello, are you there?

Editorial policies require considerable intellectual effort, crafted workflow processes, and oversight. Who does the overseeing? In the good old days when John Wiley & Sons-type or Oxford University Press-type publishing outfits were gatekeepers, individuals who met the cultural standards were able to work their way up the bureaucratic rock wall. Now the mantra is the same as the probability-based game show with three doors and “Come on down!” Okay, “users” come on down, wallow in anonymity, exploit a lack of consequences, and surf on the darker waves of human thought. Online makes clear that people who read Kant, volunteer to help the homeless, and respect the rights of others are often at risk from the denizens of the psychological night.

Personally I am not a Facebook person, a user of Instagram, or a person requiring the cloak of a WhatsApp logo. Futurism takes a reasonable stand:

it’s [Meta, Facebook, et al] clearly unable to use the tools at its disposal, AI included, to help stop harmful AI content created using similar tools to those that Meta is building from disseminating across its own platforms. We were promised creativity-boosting innovation. What we’re getting at Meta is a platform-eroding pile of abusive filth that the company is clearly unable to manage at scale.

How long has Meta been trying to be a squeaky-clean information purveyor? Is the article going overboard?

I don’t have answers, but after years of verbal fancy dancing, progress may be parked at a rest stop on the information superhighway. Who is the driver of the Meta construct? If you know, that is the person to whom one must address suggestions about content. What if that entity does not listen and act? Government officials will take action, right?

PS. Is it my imagination or is Futurism.com becoming a bit more strident?

Stephen E Arnold, May 27, 2024

Googzilla Makes a Move in a High Stakes Contest

May 22, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The trusted “real news” outfit Thomson Reuters published this popular news story about dancing with Googzilla. The article is titled by the click seekers as “Google Cuts Mystery Check to US in Bid to Sidestep Jury Trial.” I love the “mystery check.” I thought FinCEN was on the look out for certain types of transactions.

The contest is afoot. Thanks, MSFT Copilot.

Here’s the core of the story: On one side of the multi-dimensional Go board is the US Department of Justice. Yes, that was the department with the statues in the area where employees once were paid each week. On the other side of the game board is Googzilla. This is the digital construct which personifies the Alphabet, Google, YouTube, DeepMind, et al outfit. Some in Google’s senior management are avid game players. After all, one must set up a system in which no matter who plays a Googzilla-branded game, the “just average wizards” who run the company win. The mindset has worked wonders in the online advertising and SEO sector. The SEO “experts” were the people who made a case to their clients for the truism “If you want traffic, it is a pay-to-play operation.” The same may be said for YouTube and content creators who make content so Google can monetize that digital flow and pay a sometimes unknown amount to a creator who is a one-person 1930s motion picture production company. Ditto for the advertisers who use the Google system to buy advertising and benefit by providing advertising space. What’s Google do? It makes the software that controls the game.

Where’s this going? Google is playing a game with the Department of Justice. I am certain some in the DoJ understand this approach. Others may not grasp the concept of Googzilla’s absolute addiction to gaming and gamesmanship. Casinos are supposed to make money. There are exceptions, of course. I can think of a high-profile case history of casino failure, but Google is a reasonably competent casino operator. Sure, there are some technical problems when the Cloud back end fails and the staff become a news event because they protest with correctly spelled signage. But overall, I would suggest that the depth of Googzilla’s game playing is not appreciated by its users, its competition, or some of the governments trying to regain data and control of information pumped into the creature’s financial blood bank.

Let’s look at the information the trusted outfit sought to share as bait for a begging-for-dollars marketing play:

Google has preemptively paid damages to the U.S. government, an unusual move aimed at avoiding a jury trial in the Justice Department’s antitrust lawsuit over its digital advertising business. Google disclosed the payment, but not the amount, in a court filing last week that said the case should be heard and decided by a judge directly. Without a monetary damages claim, Google argued, the government has no right to a jury trial.

That’s the move. The DoJ now has to [a] ignore the payment and move forward to a trial with a jury deciding if Googzilla is a “real” monopoly or a plain vanilla, everyday business like the ones Amazon, Facebook, and Microsoft have helped go out of business. [b] Cash the check and go back to scanning US government job listings for a positive lateral arabesque on a quest to the SES (senior executive service). [c] Keep the check and pile on more legal pressure because the money was an inducement, not a replacement for the US justice system. With an election coming up, I can see option [d] on the horizon: Do nothing.

The idea is that in multi-dimensional Go, Google wants to eliminate the noise of legal disputes. Google wins if the government cashes the check. Google wins if the on-rushing election causes a slow down of an already slow process. Google wins if the DoJ keeps piling on the pressure. Google has the money and lawyers to litigate. The government has a long memory, but staff and leadership turnover shifts the odds to Googzilla. Google Calendar keeps its attorneys filing before deadlines and exploiting the US legal system to its fullest extent. If the US government sues Google because the check was a bribe, Google wins. The legal matter shifts to resolving the question about the bribe because carts rarely are put in front of horses.

In this Googzilla-influenced game, Googzilla has created options and set the stage to apply the same tactic to other legal battles. The EU may pass a law prohibiting pre-payment in lieu of a legal process, but if that does not move along at the pace of AI hyperbole, Google’s DoJ game plan can be applied to the lucky officials in Brussels and Strasbourg.

The Reuters report says:

Stanford Law School’s Mark Lemley told Reuters he was skeptical Google’s gambit would prevail. He said a jury could ultimately decide higher damages than whatever Google put forward.

“Antitrust cases regularly go to juries. I think it is a sign that Google is worried about what a jury will do,” Lemley said. Another legal scholar, Herbert Hovenkamp of the University of Pennsylvania’s law school, called Google’s move "smart" in a post on X. “Juries are bad at deciding technical cases, and further they do not have the authority to order a breakup,” he wrote.

Okay, two different opinions. The Google check is proactive.

Why? Here are some reasons my research group offered this morning:

- Google has other things to do with its legal resources; namely, deal with the copyright litigation which is knocking on its door

- The competitive environment is troubling so Googzilla wants to delete annoyances like the DoJ and staff who don’t meet the new profile of the ideal Googler any longer

- Google wants to set a precedent so it can implement its pay-to-play game plan for legal hassles.

I am 99 percent confident that Google is playing a game. I am not sure that others perceive the monopoly litigation as one. Googzilla has been refining its game plan, its game-playing skills, and its gaming business systems for 25 years. How long has the current crop of DoJ experts been playing Googley games? I am not going to bet against Googzilla. Remember what happened in the 2021 film Godzilla vs. Kong. Both beasties make peace and go their separate ways. If that happens, Googzilla wins.

Stephen E Arnold, May 22, 2024

Google Dings MSFT: Marketing Motivated by Opportunism

May 21, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

While not as exciting as Jake Paul versus Mike Tyson, the dust up is interesting. The developments leading up to this report about Google criticizing Microsoft’s security methods have a bit of history:

- Microsoft embraced OpenAI, Mistral, and other smart software because regulators are in meetings about regulating

- Google learned that after tire kicking, Apple found OpenAI (Microsoft’s pal) more suitable to the now innovation challenged iPhone. Google became a wallflower, a cute one, but a wallflower nevertheless

- Google faces trouble on three fronts: [a] Its own management of technology and its human resources; [b] threats to its online advertising and brokering business; and [c] challenges in cost control. (Employees get fired, and CFOs leave for a reason.)

Google is not a marketing outfit nor is it one that automatically evokes images associated with trust, data privacy, and people sensitivity. Google seized an opportunity to improve Web search. When forced to monetize, the company found inspiration in the online advertising “pay to play” ideas of Yahoo (Overture and GoTo). There was a legal dust up and Google paid up for that Eureka! moment. Then Google rode the demand for matching ads to queries. After 25 years, Google remains dependent on its semi-automated ad business. Now that business must be supplemented with enterprise cloud revenue.

Two white collar victims of legal witch hunts discuss “trust”. Good enough, MSFT Copilot.

How does the company market while the Red Alert klaxon blares into the cubicles, Google Meet sessions, and the Foosball game areas?

The information is in “Google Attacks Microsoft Cyber Failures in Effort to Steal Customers.” I wonder what Foundem and the French taxation authority might make of the Google bandying about the word “steal”? I don’t know the answer to this question. The title indicates that Microsoft’s security woes, recently publicized by the US government, provide a marketing opportunity.

The article reports Google’s grand idea this way:

Government agencies that switch 500 or more users to Google Workspace Enterprise Plus for three years will get one year free and be eligible for a “significant discount” for the rest of the contract, said Andy Wen, the senior director of product management for Workspace. The Alphabet Inc. division is offering 18 months free to corporate customers that sign a three-year contract, a hefty discount after that and incident response services from Google’s Mandiant security business. All customers will receive free consulting services to help them make the switch.
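As a back-of-the-envelope check on the offer quoted above, here is a minimal sketch of how much of each three-year term goes unbilled. It assumes flat monthly pricing and ignores the unspecified “significant discount,” since the article gives no figure for it. The function name `free_share` is made up for illustration.

```python
def free_share(free_months: int, contract_months: int) -> float:
    """Fraction of the contract term that is not billed (assumes flat monthly pricing)."""
    return free_months / contract_months


# Figures taken from the quoted offer; everything else here is an assumption.
government = free_share(12, 36)  # one year free on a three-year deal
corporate = free_share(18, 36)   # 18 months free on a three-year deal

print(f"Government agencies: {government:.0%} of the term is free")
print(f"Corporate customers: {corporate:.0%} of the term is free")
```

Under those assumptions, the free months alone amount to roughly a third of the government contract and half of the corporate one, before any further discount is applied.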

The idea that Google is marketing is an interesting one. Like Telegram, Google has not been a long-time advocate of Madison Avenue advertising, marketing, and salesmanship. I was once retained by a US government agency to make a phone call to one of my “interaction points” at Google so that the director of the agency could ask a question about the quite pricey row of yellow Google Search Appliances. I made the call and obtained the required information. I also got paid. That’s some marketing in my opinion. An old person from rural Kentucky intermediating between a senior government official and a manager in one of Google’s mind boggling puzzle palaces.

I want to point out that Google’s assertions about security may be overstated. One recent example is the Register’s report “Google Cloud Shows It Can Break Things for Lots of Customers – Not Just One at a Time.” Is this a security issue? My hunch is that whenever something breaks, security becomes an issue. Why? Rushed fixes may introduce additional vulnerabilities on top of the “good enough” engineering approach implemented by many high-flying, boastful, high-technology outfits. The Register says:

In the week after its astounding deletion of Australian pension fund UniSuper’s entire account, you might think Google Cloud would be on its very best behavior. Nope.

So what? When one operates at Google scale, the “what” is little more than users of 33 Google Cloud services were needful of some of that YouTube TV Zen moment stuff.

My reaction is that these giant outfits which are making clear that single points of failure are the norm in today’s online environment may not do the “fail over” or “graceful recovery” processes with the elegance of Mikhail Baryshnikov’s turning point solo move. Google is obviously still struggling with the after effects of Microsoft’s OpenAI announcement and the flops like the Sundar & Prabhakar Comedy Show in Paris and the “smart software” producing images orthogonal to historical fact.

Online advertising expertise may not correlate with marketing finesse.

Stephen E Arnold, May 21, 2024

Ho Hum: The Search Sky Is Falling

May 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

“Google’s Broken Link to the Web” is interesting for two reasons: [a] The sky is falling — again and [b] search has been broken for a long time and suddenly I should worry.

The write up states:

When it comes to the company’s core search engine, however, the image of progress looks far muddier. Like its much-smaller rivals, Google’s idea for the future of search is to deliver ever more answers within its walled garden, collapsing projects that would once have required a host of visits to individual web pages into a single answer delivered within Google itself.

Nope. The walled garden has been in the game plan for a long, long time. People who lusted for Google mouse pads were not sufficiently clued in to notice. Google wants to be the digital Hotel California. Smarter software is just one more component available to the system which controls information flows globally. How many people in Denmark rely on Google search whether it is good, bad, or indifferent? The answer is, “99 percent.” What about people who let Google Gmail pass along their messages? How about 67 percent in the US. YouTube is video in many countries; even with the rise of TikTok, the Google is hanging in there. Maps? Ditto. Calendars? Ditto. Each of these ubiquitous services is “search.” They have been for years. Any click can be monetized one way or another.

Who will pay attention to this message? Regulators? Users of search on an iPhone? How about commuters and Waze? Thanks, MSFT Copilot. Good enough. Working on those security issues today?

Now the sky is falling? Give me a break. The write up adds:

where the company once limited itself to gathering low-hanging fruit along the lines of “what time is the super bowl,” on Tuesday executives showcased generative AI tools that will someday plan an entire anniversary dinner, or cross-country-move, or trip abroad. A quarter-century into its existence, a company that once proudly served as an entry point to a web that it nourished with traffic and advertising revenue has begun to abstract that all away into an input for its large language models. This new approach is captured elegantly in a slogan that appeared several times during Tuesday’s keynote: let Google do the Googling for you.

Of course, if Google does it, those “search” abstractions can be monetized.

How about this statement?

But to everyone who depended even a little bit on web search to have their business discovered, or their blog post read, or their journalism funded, the arrival of AI search bodes ill for the future. Google will now do the Googling for you, and everyone who benefited from humans doing the Googling will very soon need to come up with a Plan B.

Okay, what’s the plan B? Kagi? Yandex? Something magical from one of the AI start ups?

People have been trying to out search Google for a quarter century. And what has been the result? Google’s technology has been baked into the findability fruit cakes.

If one wants to be found, buy Google advertising. The alternative is what exactly? Crazy SEO baloney? Hire a 15 year old and pray that person can become an influencer? Put ads on Tubi?

The sky is not falling. The clouds rolled in and obfuscated people’s ability to see how weaponized information has seized control of multiple channels of information. I don’t see a change in weather any time soon. If one wants to run around saying the sky is falling, be careful. One might run into a wall or trip over a fire plug.

Stephen E Arnold, May 15, 2024

Google Lessons in Management: Motivating Some, Demotivating Others

May 14, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I spotted an interesting comment in “Google Workers Complain of ‘Decline in Morale’ as CEO Sundar Pichai Grilled over Raises, Layoffs: Increased distrust.” Here’s the passage:

Last month, the company fired 200 more workers, aside from the roughly 50 staffers involved in the protests, and shifted jobs abroad to Mexico and India.

I paired this Xhitter item with “Google Employees Question Execs over Decline in Morale after Blowout Earnings.” That write up asserted:

At an all-hands meeting last week, Google employees questioned leadership about cost cuts, layoffs and “morale” issues following the company’s better-than-expected first-quarter earnings report. CEO Sundar Pichai and CFO Ruth Porat said the company will likely have fewer layoffs in the second half of 2024.

Poor, poor Googzilla. I think the fearsome alleged monopolist could lose a few pounds. What do you think? Thanks, MSFT Copilot. Good enough work, just like some security models we know and love.

Not no layoffs. Just “fewer layoffs.” Okay, that’s a motivator.

The estimable “real” news service stated:

Alphabet’s top leadership has been on the defensive for the past few years, as vocal staffers have railed about post-pandemic return-to-office mandates, the company’s cloud contracts with the military, fewer perks and an extended stretch of layoffs — totaling more than 12,000 last year — along with other cost cuts that began when the economy turned in 2022. Employees have also complained about a lack of trust and demands that they work on tighter deadlines with fewer resources and diminished opportunities for internal advancement.

What’s wrong with this management method? The answer: Absolutely nothing. The write up included this bit of information:

She [Ruth Porat, Google CFO, who is quitting the volleyball and foosball facility] also took the rare step of admitting to leadership’s mistakes in its prior handling of investments. “The problem is a couple of years ago — two years ago, to be precise — we actually got that upside down and expenses started growing faster than revenues,” said Porat, who announced nearly a year ago [in 2023] that she would be stepping down from the CFO position but hasn’t yet vacated the office. “The problem with that is it’s not sustainable.”

Ever tactful, Sundar Pichai (the straight man in the Sundar & Prabhakar Comedy Team) is quoted as saying in silky tones:

“I think you almost set the record for the longest TGIF answer,” he said. Google all-hands meetings were originally called TGIFs because they took place on Fridays, but now they can occur on other days of the week. Pichai then joked that leadership should hold a “Finance 101” Ted Talk for employees. With respect to the decline in morale brought up by employees, Pichai said “leadership has a lot of responsibility here,” adding that “it’s an iterative process.”

That’s a demonstration of tactful high school science club management-speak, in my opinion. To emphasize the future opportunities for the world’s smartest people, he allegedly said, according to the write up:

Pichai said the company is “working through a long period of transition as a company” which includes cutting expenses and “driving efficiencies.” Regarding the latter point, he said, “We want to do this forever.” [Editor note: Emphasis added]

Forever is a long, long time, isn’t it?

Poor, addled Googzilla. Litigation to the left, litigation to the right. Grousing world’s smartest employees. A legacy of baby making in the legal department. Apple apparently falling in lust with OpenAI. Amazon and its pesky Yellow Pages approach to advertising.

The sky is not falling, but there are some dark clouds overhead. And, speaking of overhead, is Google ever going to be able to control its costs, pay off its technical debt, and write checks to the governments when the firm is unjustly found guilty of assorted transgressions?

For now, yes. Forever? Sure, why not?

Stephen E Arnold, May 14, 2024

Microsoft and Its Customers: Out of Phase, Orthogonal, and Confused

May 9, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I am writing this post using something called Open LiveWriter. I switched when Microsoft updated our Windows machines and killed printing, a mouse linked via a KVM, and the 2012 version of its blog word processing software. I use a number of software products, and I keep old programs in order to compare them to modern options available to a user. The operative point is that a Windows update rendered the 2012 version of LiveWriter lost in the wonderland of Windows’ Byzantine code.

A young leader of an important project does not want to hear too much from her followers. In fact, she wishes they would shut up and get with the program. Thanks, MSFT Copilot. How’s the Job One of security coming today?

There are reports, which I am not sure I believe, that Windows 11 is a modern version of Windows Vista. The idea is that users are switching to Windows 10. Well, maybe. But the point is that users are not happy with Microsoft’s alleged changes to Windows; for instance:

- Notifications (advertising) in the Windows 11 start menu

- Alleged telemetry which provides a stream of user action and activity data to Microsoft for analysis (maybe marketing purposes?)

- Gratuitous interface changes which range from moving control items from a control panel to a settings panel to fiddling with task manager

- Wonky updates like the printer issue, driver wonkiness, and smart help which usually returns nothing of much help.

I read “This Third-Party App Blocks Integrated Windows 11 Advertising.” You can read the original article to track down this customization tool. My hunch is that its functions will be intentionally blocked by some bonus centric Softie or a change to the basic Windows 11 control panel will cause the software to perform like LiveWriter 2012.

I want to focus on a comment to the cited article written by seeprime:

Microsoft has seriously degraded File Explorer over the years. They should stop prolonging the Gates culture of rewarding software development, of new and shiny things, at the expense of fixing what’s not working optimally.

Now that security, not AI and not Windows 11, is the top priority at Microsoft, will the company remediate the grouses users have about the product? My answer is, “No.” Here’s why:

- Fixing, as seeprime suggests, is less important than coming up with something that seems “new.” The approach is dangerous because the “new” thing may be developed by someone uninformed about the hidden dependencies within what is code as convoluted as Google’s search plumbing. “New” just breaks the old or the change is something that seems “new” to an intern or an older Softie who just does not care. Good enough is the high bar to clear.

- Details are not Microsoft’s core competency. Indeed, unlike Google, Microsoft has many revenue streams, and the attention goes to cooking up new big-money services like a version of Copilot which is not exposed to the Internet for its government customers. The cloud, not Windows, is the future.

- Microsoft, whether it knows it or not, is on the path to virtualize desktop and mobile software. The idea means that Microsoft does not have to put up with developers who make changes Microsoft does not want to work. Putting Windows in the cloud might give Microsoft the total control it desires.

- Windows is a security challenge. The thinking may be: “Let’s put Windows in the cloud and lock down security, updates, domain look ups, etc.” I would suggest that creating one giant target might introduce some new challenges to the Softie vision.

Speculation aside, Microsoft may be at a point when users become increasingly unhappy. The mobile model, virtualization, and smart interfaces might create tasty options for users in the near future. Microsoft cannot make up its mind about AI. It has the OpenAI deal; it has the Mistral deal; it has its own internal development; and it has Inflection and probably others I don’t know about.

Microsoft cannot make up its mind. Now Microsoft is doing an about face and saying, “Security is Job One.” But there’s the need to make the Azure Cloud grow. Okay, okay, which is it? The answer, I think, is, “We want to do it all. We want everything.”

This might be difficult. Users might just pile up and remain out of phase, orthogonal, and confused. Perhaps I could add angry? Just like LiveWriter: Tossed into the bit trash can.

Stephen E Arnold, May 9, 2024

Researchers Reveal Vulnerabilities Across Pinyin Keyboard Apps

May 9, 2024

Conventional keyboards were designed for languages based on the Roman alphabet. Fortunately, apps exist to adapt them to script-based languages like Chinese, Japanese, and Korean. Unfortunately, such tools can pave the way for bad actors to capture sensitive information. Researchers at the Citizen Lab have found vulnerabilities in many pinyin keyboard apps, which romanize Chinese languages. Gee, how could those have gotten there? The post, “The Not-So-Silent Type,” presents their results. Writers Jeffrey Knockel, Mona Wang, and Zoë Reichert summarize the key findings:

- “We analyzed the security of cloud-based pinyin keyboard apps from nine vendors — Baidu, Honor, Huawei, iFlytek, OPPO, Samsung, Tencent, Vivo, and Xiaomi — and examined their transmission of users’ keystrokes for vulnerabilities.

- Our analysis revealed critical vulnerabilities in keyboard apps from eight out of the nine vendors in which we could exploit that vulnerability to completely reveal the contents of users’ keystrokes in transit. Most of the vulnerable apps can be exploited by an entirely passive network eavesdropper.

- Combining the vulnerabilities discovered in this and our previous report analyzing Sogou’s keyboard apps, we estimate that up to one billion users are affected by these vulnerabilities. Given the scope of these vulnerabilities, the sensitivity of what users type on their devices, the ease with which these vulnerabilities may have been discovered, and that the Five Eyes have previously exploited similar vulnerabilities in Chinese apps for surveillance, it is possible that such users’ keystrokes may have also been under mass surveillance.

- We reported these vulnerabilities to all nine vendors. Most vendors responded, took the issue seriously, and fixed the reported vulnerabilities, although some keyboard apps remain vulnerable.”

See the article for all the details. It describes the study’s methodology, gives specific findings for each of those app vendors, and discusses the ramifications of the findings. Some readers may want to skip to the very detailed Summary of Recommendations. It offers suggestions to fellow researchers, international standards bodies, developers, app store operators, device manufacturers, and, finally, keyboard users.

The interdisciplinary Citizen Lab is based at the Munk School of Global Affairs & Public Policy, University of Toronto. Its researchers study the intersection of information and communication technologies, human rights, and global security.

Cynthia Murrell, May 9, 2024

Google Stomps into the Threat Intelligence Sector: AI and More

May 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Before commenting on Google’s threat services news, I want to remind you of the link to the list of Google initiatives which did not survive. You can find the list at Killed by Google. I want to mention this resource because Google’s product innovation and management methods are interesting to say the least. Operating in Code Red or Yellow Alert or whatever the Google crisis buzzword is, generating sustainable revenue beyond online advertising has proven to be a bit of a challenge. Google is more comfortable using such methods as [a] buying something and trying to scale it, [b] imitating another firm’s innovation, and [c] dumping big money into secret projects in the hopes that what comes out will not result in the firm’s getting its “glass” kicked to the curb.

Google makes a big entrance at the RSA Conference. Thanks, MSFT Copilot. Have you considered purchasing Google’s threat intelligence service?

With that as background, Google has introduced an “unmatched” cyber security service. The information was described at the RSA security conference and in a quite Googley blog post “Introducing Google Threat Intelligence: Actionable threat intelligence at Google Scale.” Please, note the operative word “scale.” If the service does not make money, Google will “not put wood behind” the effort. People won’t work on the project, and it will be left to dangle in the wind or just shot like Cricket, a now famous example of animal husbandry. (Google’s Cricket was the Google Appliance. Remember that? Take over the enterprise search market. Nope. Bang, hasta la vista.)

Google’s new service aims squarely at the comparatively well-established and now maturing cyber security market. I have to check to see who owns what. Venture firms and others with money have been buying promising cyber security firms. Google owned a piece of Recorded Future. Now Recorded Future is owned by a third party outfit called Insight. Darktrace has been or will be purchased by Thoma Bravo. Consolidation is underway. Thus, it makes sense to Google to enter the threat intelligence market, using its Mandiant unit as a springboard, one of those home diving boards, not the cliff in Acapulco diving platform.

The write up says:

we are announcing Google Threat Intelligence, a new offering that combines the unmatched depth of our Mandiant frontline expertise, the global reach of the VirusTotal community, and the breadth of visibility only Google can deliver, based on billions of signals across devices and emails. Google Threat Intelligence includes Gemini in Threat Intelligence, our AI-powered agent that provides conversational search across our vast repository of threat intelligence, enabling customers to gain insights and protect themselves from threats faster than ever before.

Google to its credit did not trot out the “quantum supremacy” lingo, but the marketers did assert that the service offers “unmatched visibility in threats.” I like the “unmatched.” Not supreme, just unmatched. The graphic below illustrates the elements of the unmatchedness:

Credit: Google, 2024

But where is artificial intelligence in the diagram? Don’t worry. The blog explains that Gemini (Google’s AI “system”) delivers

AI-driven operationalization

But the foundation of the new service is Gemini, which does not appear in the diagram. That does not matter, the Code Red crowd explains:

Gemini 1.5 Pro offers the world’s longest context window, with support for up to 1 million tokens. It can dramatically simplify the technical and labor-intensive process of reverse engineering malware — one of the most advanced malware-analysis techniques available to cybersecurity professionals. In fact, it was able to process the entire decompiled code of the malware file for WannaCry in a single pass, taking 34 seconds to deliver its analysis and identify the kill switch. We also offer a Gemini-driven entity extraction tool to automate data fusion and enrichment. It can automatically crawl the web for relevant open source intelligence (OSINT), and classify online industry threat reporting. It then converts this information to knowledge collections, with corresponding hunting and response packs pulled from motivations, targets, tactics, techniques, and procedures (TTPs), actors, toolkits, and Indicators of Compromise (IoCs). Google Threat Intelligence can distill more than a decade of threat reports to produce comprehensive, custom summaries in seconds.

I like the “indicators of compromise.”

Several observations:

- Will this service be another Google Appliance-type play for the enterprise market? It is too soon to tell, but with the pressure mounting from regulators, staff management issues, competitors, and savvy marketers in Redmond, “indicators” of success will be known in the next six to 12 months.

- Is this a business or just another item on a punch list? The answer to the question may be provided by what the established players in the threat intelligence market do and what actions Amazon and Microsoft take. Is a new round of big money acquisitions going to begin?

- Will enterprise customers “just buy Google”? Chief security officers have demonstrated that buying multiple security systems is a “safe” approach to a job which is difficult: Protecting their employers from deeply flawed software and years of ignoring online security.

Net net: In a maturing market, three factors may signal how the big, new Google service will develop. These are [a] price, [b] perceived efficacy, and [c] avoidance of a major issue like the SolarWinds matter. I am rooting for Googzilla, but I still wonder why Google shifted from Recorded Future to acquisitions and me-too methods. Oh, well. I am a dinobaby and cannot be expected to understand.

Stephen E Arnold, May 7, 2024

The Everything About AI Report

May 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read the Stanford Artificial Intelligence Report. If you have not seen the 500 page document, click here. I spotted an interesting summary of the document. “Things Everyone Should Understand About the Stanford AI Index Report” is the work of Logan Thorneloe, an author previously unknown to me. I want to highlight three points I carried away from Mr. Thorneloe’s essay. These may make more sense after you have worked through the beefy Stanford document, which, due to its size, makes clear that Stanford wants to be linked to the AI spaceship. (Does Stanford’s AI effort look like Mr. Musk’s or Mr. Bezos’ rocket? I am leaning toward the Bezos design.)

An amazed student absorbs information about the Stanford AI Index Report. Thanks, MSFT. Good enough.

The summary of the 500 page document makes clear that Stanford wants to track the progress of smart software, provide a policy document so that Stanford can obviously influence policy decisions made by people who are not AI experts, and then “highlight ethical considerations.” The assumption by Mr. Thorneloe and by the AI report itself is that Stanford is equipped to make ethical anything. The president of Stanford departed under a cloud for acting in an unethical manner. Plus some of the AI firms have a number of Stanford graduates on their AI teams. Are those teams responsible for depictions of inaccurate historical personages? Okay, that’s enough about ethics. My hunch is that Stanford wants to be perceived as a leader. Mr. Thorneloe seems to accept this idea as a-okay.

The second point for me in the summary is that Mr. Thorneloe goes along with the idea that the Stanford report is unbiased. Writing about AI is, in my opinion of course, inherently biased. That’s the reason there are AI cheerleaders and AI doomsayers. AI is probability. How the software gets smart is biased by [a] how the thresholds are rigged up when a smart system is built, [b] the humans who do the training of the system and then “fine tune” or “calibrate” the smart software to produce acceptable results, and [c] the information used to train the system. More recently, human developers have been creating wrappers which effectively prevent the smart software from generating pornography or other “improper” or “unacceptable” outputs. I think the “bias” angle needs some critical thinking. Stanford’s report wants to cover the AI waterfront as Stanford maps and presents the geography of AI.

The final point is the rundown of Mr. Thorneloe’s take-aways from the report. He presents ten. I think there may just be three. First, the AI work is very expensive. That leads to the conclusion that only certain firms can be in the AI game and expect to win and win big. To me, this means that Stanford wants the good old days of Silicon Valley to come back again. I am not sure that this approach to an important, yet immature technology, is a particularly good idea. One does not fix up problems with technology. Technology creates some problems, and like social media, what AI generates may have a dark side. With big money controlling the game, what’s that mean? That’s a tough question to answer. The US wants China and Russia to promise not to use AI in their nuclear weapons system. Yeah, that will work.

Another take-away which seems important is the assumption that workers will be more productive. This is an interesting assertion. I understand that one can use AI to eliminate call centers. However, has Stanford made a case that the benefits outweigh the drawbacks of AI? Mr. Thorneloe seems to be okay with the assumption underlying the good old consultant-type of magic.

The general take-away from the list of ten take-aways is that AI is fueled by “industry.” What happened to the Stanford Artificial Intelligence Lab, synthetic data, and the high-confidence outputs? Nothing has happened. AI hallucinates. AI gets facts wrong. AI is a collection of technologies looking for problems to solve.

Net net: Mr. Thorneloe’s summary is useful. The Stanford report is useful. Some AI is useful. Writing 500 pages about a fast moving collection of technologies is interesting. I cannot wait for the 2024 edition. I assume “everyone” will understand AI PR.

Stephen E Arnold, May 7, 2024

Microsoft Security Messaging: Which Is What?

May 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I am a dinobaby. I am easily confused. I read two “real” news items and came away confused. The first story is “Microsoft Overhaul Treats Security As Top Priority after a Series of Failures.” The subtitle is interesting too because it links “security” to monetary compensation. That’s an incentive, but why isn’t security just part of work at an alleged monopoly’s products and services? I surmise the answer is, “Because security costs money, a lot of money.” That article asserts:

After a scathing report from the US Cyber Safety Review Board recently concluded that “Microsoft’s security culture was inadequate and requires an overhaul,” it’s doing just that by outlining a set of security principles and goals that are tied to compensation packages for Microsoft’s senior leadership team.

Okay. But security emerges from basic engineering decisions; for instance, does a developer spend time figuring out and resolving security when dependencies are unknown or documented only by a grousing user in a comment posted on a technical forum? Or, does the developer include a new feature and move on to the next task, assuming that someone else or an automated process will make sure everything works without opening the door to the curious bad actor? I think that Microsoft assumes it deploys secure systems and that its customers have the responsibility to ensure their systems’ security.

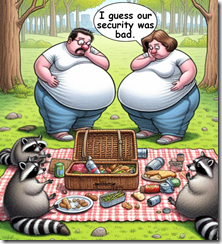

The cyber racoons found the secure picnic basket was easily opened. The well-fed, previously content humans seem dismayed that their goodies were stolen. Thanks, MSFT Copilot. Definitely good enough.

The write up adds that Microsoft has three security principles and six security pillars. I won’t list these because the words chosen strike me like those produced by a lawyer, an MBA, and a large language model. Remember. I am a dinobaby. Six plus three is nine things. Some car executive said a long time ago, “Two objectives is no objective.” I would add nine generalizations are not a culture of security. Nine is like Microsoft Word features. No one can keep track of them because most users use Word to produce Words. The other stuff is usually confusing, in the way, or presented in a way that finding a specific feature is an exercise in frustration. Is Word secure? Sure, just download some nifty documents from a frisky Telegram group or the Dark Web.

The write up concludes with a weird statement. Let me quote it:

I reported last month that inside Microsoft there is concern that the recent security attacks could seriously undermine trust in the company. “Ultimately, Microsoft runs on trust and this trust must be earned and maintained,” says Bell. “As a global provider of software, infrastructure and cloud services, we feel a deep responsibility to do our part to keep the world safe and secure. Our promise is to continually improve and adapt to the evolving needs of cybersecurity. This is job #1 for us.”

First, there is the notion of trust. Perhaps Edge’s persistence and advertising in the start menu, SolarWinds, and the legions of Chinese and Russian bad actors undermine whatever trust exists. Most users are clueless about security issues baked into certain systems. They assume; they don’t trust. Cyber security professionals buy third party security solutions like shopping at a grocery store. Big companies’ senior executives don’t understand why the problem exists. Lawyers and accountants understand many things. Digital security is often not a core competency. “Let the cloud handle it,” sounds pretty good when the fourth IT manager or the third security officer quit this year.

Now the second write up. “Microsoft’s Responsible AI Chief Worries about the Open Web.” First, recall that Microsoft owns GitHub, a very convenient source for individuals looking to perform interesting tasks. Some are good tasks like snagging a script to perform a specific function for a church’s database. Other software does interesting things in order to help a user shore up security. Rapid 7 metasploit-framework is an interesting example. Almost anyone can find quite a bit of useful software on GitHub. When I lectured in a central European country’s main technical university, the students were familiar with GitHub. Oh, boy, were they.

In this second write up I learned that Microsoft has released a 39 page “report” which looks a lot like a PowerPoint presentation created by a blue-chip consulting firm. You can download the document at this link, at least you could as of May 6, 2024. “Security” appears 78 times in the document. There are “security reviews.” There is “cybersecurity development” and a reference to something called “Our Aether Security Engineering Guidance.” There is “red teaming” for biosecurity and cybersecurity. There is security in Azure AI. There are security reviews. There is the use of Copilot for security. There is something called PyRIT which “enables security professionals and machine learning engineers to proactively find risks in their generative applications.” There is partnering with MITRE for security guidance. And there are four footnotes to the document about security.

What strikes me is that security is definitely a popular concept in the document. But the principles and pillars apparently require AI context. As I worked through the PowerPoint, I formed the opinion that a committee worked with a small group of wordsmiths and crafted a rather elaborate word salad about going all in with Microsoft AI. Then the group added “security” the way my mother would chop up a red pepper and put it in a salad for color.

I want to offer several observations:

- Both documents suggest to me that Microsoft is now pushing “security” as Job One, a slogan used by the Ford Motor Co. (How are those Fords faring in the reliability ratings?) Saying words and doing are two different things.

- The rhetoric of the two documents reminds me of Gertrude’s statement, “The lady doth protest too much, methinks.” (Hamlet? Remember?)

- The US government, most large organizations, and many individuals “assume” that Microsoft has taken security seriously for decades. The jargon-and-blather PowerPoint makes clear that Microsoft is trying to find a nice way to say, “We are saying we will do better already. Just listen, people.”

Net net: Bandying about the word trust or the word security puts everyone on notice that Microsoft knows it has a security problem. But the key point is that bad actors know it, exploit the security issues, and believe that Microsoft software and services will be a reliable source of opportunity for mischief. Ransomware? Absolutely. Exposed data? You bet your life. Free hacking tools? Let’s go. Does Microsoft have a security problem? The word form is incorrect. Does Microsoft have security problems? You know the answer. Aether.

Stephen E Arnold, May 6, 2024