Google versus Microsoft: Whose Marketing Is Wonkier?

April 17, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I want to do what used to be called a comparison. I read Microsoft’s posts on April 12, 2023. (I don’t know for certain because LinkedIn does not provide explicit date and time information. Who really cares about indexing anymore?) The first post shown in the screenshot is from the Big Dog himself at Microsoftland. The information is one more announcement about the company’s use of OpenAI’s technology in another Microsoftland product. I want to shout, “Enough already,” but my opinion is not in sync with Microsoft’s full-scale assault on Microsoft users. It is now a combination of effective hyperbole and services designed to “add value.” The post below Mr. Nadella’s is from another Softie. The main point is that Microsoft is doing smart things for providers and payors. My view is that Microsoft is doing this AI thing for money, but again my view is orthogonal to that of the company, which cannot make some of its software print on office printers.

Source: LinkedIn 2023 at shorturl.at/egnpz. Note: The LinkedIn url is a long worm thing. I do not know if the short url will render. If not, give Microsoft’s search function a whirl.

Key takeaways: Microsoft owns a communications channel. Microsoft posts razzmatazz verbiage about smart software. Microsoft controls the message. Want more? Just click the big plus and Microsoft will direct more information directly at you, maybe on your Windows 11 start menu.

Now navigate to “Sundar Pichai’s Response to the Delayed Launch of Bard Is Brilliant and Reminds Us Why Google Is Still Great.” I want to cry for joy because the Google has not lost the marketing battle with Microsoft. I want to shout, “Google is number one.” I want to wave Googley color pom poms and jump up and down. Join me. “Google is number one.”

The write up strikes me as a remarkable example of lip flapping and arm waving; to wit:

Google secures its competitive advantage not necessarily by being the fastest to act, but by staying the course on why it exists and what it stands for. Innovation and product disruption is baked into its existence. From its operating models to its people strategy, everything gets painted with a stroke of ingenuity, curiosity, and creativity. While other companies may have been first to market with new technologies or products, Google’s focus on innovation and improving upon existing solutions has allowed it to surpass competitors and become the market leader in many areas.

The statements in this snippet are remarkable for several reasons:

- Google itself announced Code Red, a crisis. Google itself called Mom and Dad (Messrs. Brin and Page) to return to the Mountain View mothership to help figure out what to do after Microsoft’s Davos AI blizzard. Google itself has asked every employee to work on smart software. Now Google is being cautious. Is that why Googler Jeff Dean has invested in a ChatGPT competitor?

- Google is killing off products. The online magazine with the weird logo published “The Google Graveyard” in 2019. On April 12, 2023, Google killed off something called Currents. Believe it or not, the product was meant to replace Google Plus. Yeah, Google really put wood behind the hit for a social media home run.

- The phrase “ingenuity, curiosity, and creativity” does not strike me as the way to sum up how Google operates. I think in terms of “poaching and paying for the GoTo, Overture, Yahoo online advertising inspiration,” perfecting the swinging door so all parties to an ad deal pay Google, and speaking like a wandering holy figure when answering questions before a legal body.

Key takeaways: Google relies on a PR firm or a Ford F-150 Lightning carrying Google mouse pads to get a magazine to publish an article which presents a reality not reflected in the quite specific statements and actions of the Google.

Bottom-line: Microsoft bought a channel. Google did not. Google may want to consider implementing the “me too” approach and buy an Inc.-type publication. I am now going to be increasingly skeptical of the information presented by Inc. Magazine. I already know to be deeply suspicious of LinkedIn.

Stephen E Arnold, April 17, 2023

Big Wizards Discover What Some Autonomy Users Knew 30 Years Ago. Remarkable, Is It Not?

April 14, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

What happens if one assembles a corpus, feeds it into a smart software system, and turns it on after some tuning and optimizing for search or a related process like indexing? After a few months, the precision and recall of the system degrades. What’s the fix? Easy. Assemble a corpus. Feed it into the smart software system. Turn it on after some tuning and optimizing. The approach works and would keep the Autonomy neuro linguistic programming system working quite well.

Not only was Autonomy ahead of the information retrieval game in the late 1990s, I have made the case that its approach was one of the enablers for the smart software in use today at outfits like BAE Systems.

There were a couple of drawbacks with the Autonomy approach. The principal one was the expensive and time-intensive job of assembling a training corpus. The narrower the domain, the easier this was. The broader the domain (for instance, general business information), the more resource-intensive the work became.

The second drawback was that as new content was fed into the black box, the internals recalibrated to accommodate new words and phrases. Because the initial training set did not contain these words and phrases, the precision and recall from the point of view of the user would degrade. From the engineering point of view, the Autonomy system was behaving in a known, predictable manner. The drawback was that users did not understand what I call “drift,” and the licensees’ accountants did not want to pay for the periodic and time-consuming retraining.
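To make “drift” a little more concrete, here is a minimal sketch of one signal a licensee could watch: the share of words in newly ingested documents that never appeared in the training corpus. This is not how Autonomy measured anything; the tokenizer, the function names, and the 5 percent retraining threshold are hypothetical choices for illustration.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Hypothetical tokenizer: lowercase runs of letters, digits, and hyphens.
    return re.findall(r"[a-z0-9-]+", text.lower())


def build_vocabulary(training_docs: list[str]) -> set[str]:
    # The words the system "knows" are the ones it saw at training time.
    vocab: set[str] = set()
    for doc in training_docs:
        vocab.update(tokenize(doc))
    return vocab


def oov_rate(vocab: set[str], new_docs: list[str]) -> float:
    # Share of tokens in a new batch that never appeared in the training corpus.
    counts = Counter(tok for doc in new_docs for tok in tokenize(doc))
    total = sum(counts.values())
    if total == 0:
        return 0.0
    unseen = sum(n for tok, n in counts.items() if tok not in vocab)
    return unseen / total


def needs_retraining(vocab: set[str], new_docs: list[str], threshold: float = 0.05) -> bool:
    # Hypothetical policy: retrain once more than 5% of incoming tokens are unfamiliar.
    return oov_rate(vocab, new_docs) > threshold


if __name__ == "__main__":
    vocab = build_vocabulary([
        "Quarterly revenue rose on strong mainframe sales",
        "The board approved the merger",
    ])
    batch = ["Cloud revenue rose as the board approved generative AI spending"]
    print(oov_rate(vocab, batch))          # 0.5: cloud, as, generative, ai, spending are new
    print(needs_retraining(vocab, batch))  # True
```

Out-of-vocabulary rate is only one crude proxy. Drift can also show up when familiar words shift meaning, which a vocabulary check like this cannot see.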

What’s changed since the late 1990s? First, there are methods — not entirely satisfactory from my point of view — like the Snorkel-type approach. A system is trained once and then it uses methods that do retraining without expensive subject matter experts and massive time investments. The second method is the use of ChatGPT-type approaches which get trained on large volumes of content, not the comparatively small training sets feasible decades ago.
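The Snorkel-type idea can be illustrated with a toy sketch. Instead of paying subject matter experts to label every document, one writes small “labeling functions” that vote for a label or abstain, and the votes become training labels. Snorkel itself fits a probabilistic label model that weighs the functions against one another; the plain majority vote below is a deliberate simplification, and the labels and keyword rules are hypothetical.

```python
import re
from collections import Counter
from typing import Callable, Optional

ABSTAIN = None
BUSINESS, SPORTS = "business", "sports"


# Hypothetical labeling functions: cheap heuristics that vote for a label or abstain.
def lf_money_words(doc: str) -> Optional[str]:
    words = ("revenue", "earnings", "merger")
    return BUSINESS if any(w in doc.lower() for w in words) else ABSTAIN


def lf_score_words(doc: str) -> Optional[str]:
    words = ("touchdown", "inning", "goal")
    return SPORTS if any(w in doc.lower() for w in words) else ABSTAIN


def lf_ticker_symbol(doc: str) -> Optional[str]:
    # Crude rule: two to five capital letters in parentheses look like a stock ticker.
    return BUSINESS if re.search(r"\([A-Z]{2,5}\)", doc) else ABSTAIN


LABELING_FUNCTIONS: list[Callable[[str], Optional[str]]] = [
    lf_money_words, lf_score_words, lf_ticker_symbol,
]


def weak_label(doc: str) -> Optional[str]:
    # Majority vote over non-abstaining functions; Snorkel would fit a label model here instead.
    votes = Counter(v for lf in LABELING_FUNCTIONS if (v := lf(doc)) is not ABSTAIN)
    if not votes:
        return None
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: leave the document unlabeled rather than guess
    return ranked[0][0]


if __name__ == "__main__":
    for doc in ["Acme (ACME) reported record revenue",
                "A late touchdown sealed the game",
                "Earnings fell after the goal line stand"]:
        print(doc, "->", weak_label(doc))
```

Documents that get no votes or a tie stay unlabeled, a hint that the rules themselves still require someone who knows the domain.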

Are there “drift” issues with today’s whiz bang methods?

Yep. For supporting evidence, navigate to “91% of ML Models Degrade in Time.” The write up from big brains at “MIT, Harvard, The University of Monterrey, and other top institutions” learned about model degradation. On one hand, that’s good news. A bit of accuracy about magic software is helpful. On the other hand, the failure of big brain institutions to note the problem and then look into it is troubling. I am not going to discuss why experts don’t know what high profile advanced systems actually do. I have done that elsewhere in my monographs and articles.

I found this “explanatory diagram” in the write up interesting:

What was the authors’ conclusion other than not knowing what was common knowledge among Autonomy-type system users in the 1990s?

You need to retrain the model! You need to embrace low cost Snorkel-type methods for building training data! You have to know what subject matter experts know even though SMEs are an endangered species!
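Advice to “retrain the model” assumes someone is watching the numbers. A minimal sketch, assuming a sample of queries gets human relevance judgments each period: compute precision and recall per period and flag the periods where either dips below a floor. The 0.8 floor, the period labels, and the data layout are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Judgments:
    retrieved: set[str]  # document IDs the system returned for the sampled queries
    relevant: set[str]   # document IDs a human reviewer judged relevant


def precision(j: Judgments) -> float:
    # Of what the system returned, how much was relevant?
    return len(j.retrieved & j.relevant) / len(j.retrieved) if j.retrieved else 0.0


def recall(j: Judgments) -> float:
    # Of what was relevant, how much did the system return?
    return len(j.retrieved & j.relevant) / len(j.relevant) if j.relevant else 0.0


def periods_needing_retraining(history: dict[str, Judgments], floor: float = 0.8) -> list[str]:
    # Flag every period in which precision or recall fell below the (hypothetical) floor.
    return [period for period, j in history.items()
            if precision(j) < floor or recall(j) < floor]


if __name__ == "__main__":
    history = {
        "2023-01": Judgments({"a", "b", "c", "d", "e"}, {"a", "b", "c", "d", "e"}),
        "2023-02": Judgments({"a", "b", "c", "d", "e"}, {"a", "b", "c", "d", "f"}),
        "2023-03": Judgments({"a", "b", "x", "y"}, {"a", "b", "c", "d"}),
    }
    print(periods_needing_retraining(history))  # ['2023-03']
```

The catch is the `relevant` set: somebody has to produce those judgments every period, which is the expensive human step the cheaper methods try to avoid.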

I am glad I am old and heading into what Dylan Thomas called “that good night.” Why? The “drift” is just one obvious characteristic. There are other, more sinister issues just around the corner.

Stephen E Arnold, April 14, 2023

Hybrid Search: A Gentle Way of Saying “One Size Fits All” Search Like the Google Provides Is Not Going to Work for Some

March 9, 2023

“On Hybrid Search” is a content marketing-type report. That’s okay. I found the information useful. What causes me to highlight this post by Qdrant is that one implicit message is: Google’s approach to search is lousy because it is aiming at the lowest common denominator of retrieval while preserving its relevance-eroding online ad matching business.

The guts of the write up walk through old school and sort of new school approaches to matching processed content with a query. Keep in mind that most of the technology mentioned in the write up is “old” in the sense that it’s been around for a half decade or more. The “new” technology is about ready to hop on a bike with training wheels and head to the swimming pool. (Yes, there is some risk there, I suggest.)

But here’s the key statement in the report for me:

Each search scenario requires a specialized tool to achieve the best results possible. Still, combining multiple tools with minimal overhead is possible to improve the search precision even further. Introducing vector search into an existing search stack doesn’t need to be a revolution but just one small step at a time. You’ll never cover all the possible queries with a list of synonyms, so a full-text search may not find all the relevant documents. There are also some cases in which your users use different terminology than the one you have in your database.

Here’s the statement about which I am not feeling warm fuzzies:

Those problems are easily solvable with neural vector embeddings, and combining both approaches with an additional reranking step is possible. So you don’t need to resign from your well-known full-text search mechanism but extend it with vector search to support the queries you haven’t foreseen.
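One common way to “combine both approaches” is to run a keyword ranking and a vector ranking separately and merge the two lists with reciprocal rank fusion (RRF). The Qdrant post talks about a reranking step; RRF is swapped in here as a simple, widely used fusion method and is not necessarily what Qdrant recommends. The tiny corpus, the hand-made three-number “embeddings,” and the constant k = 60 are assumptions for illustration; a real stack would use something like BM25 and a neural embedding model.

```python
import math

# A tiny hypothetical corpus and hand-made 3-dimensional "embeddings"
# standing in for the output of a neural embedding model.
DOCS = {
    "d1": "how to repair a car engine",
    "d2": "automobile maintenance schedule",
    "d3": "engine oil for lawn mowers",
}
EMBEDDINGS = {
    "d1": [0.9, 0.1, 0.0],
    "d2": [0.8, 0.2, 0.1],
    "d3": [0.1, 0.9, 0.2],
}


def keyword_rank(query: str) -> list[str]:
    # Crude full-text stand-in: rank by number of shared terms, drop non-matches.
    terms = set(query.lower().split())
    scores = {d: len(terms & set(text.split())) for d, text in DOCS.items()}
    return [d for d in sorted(scores, key=lambda d: -scores[d]) if scores[d] > 0]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def vector_rank(query_vec: list[float]) -> list[str]:
    return sorted(EMBEDDINGS, key=lambda d: -cosine(query_vec, EMBEDDINGS[d]))


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    # Each ranked list adds 1 / (k + rank) to every document it contains.
    fused: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            fused[doc] = fused.get(doc, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda d: -fused[d])


if __name__ == "__main__":
    query, query_vec = "car repair", [0.85, 0.15, 0.05]  # query_vec is also made up
    print(keyword_rank(query))  # ['d1']: full text alone misses "automobile maintenance"
    print(reciprocal_rank_fusion([keyword_rank(query), vector_rank(query_vec)]))
    # ['d1', 'd2', 'd3']
```

Note that the fused list also drags in the lawn mower document. Deciding where to cut the list off is exactly the kind of tuning the “easily solvable” language glosses over.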

Observations:

- No problems in search, when humans are seeking information, are “easily solvable” with shotgun marriages.

- Finding information is no longer enough: The information or data displayed have to be [a] correct, accurate, or at least reproducible; [b] free of injected poisoned information (yep, the burden falls on the indexing engine or engines, not the user who, by definition, does not know an answer or what is needed to answer a query); and [c] available in “real time,” a need which creates additional computational cost that is often difficult to justify.

- Basic finding and retrieval is morphing into projected outcomes or implications from the indexed data. Available technology for search and retrieval is not tuned for this requirement.

Stephen E Arnold, March 9, 2023

Another Xoogler, Another Repetitive, Sad, Dispiriting Story

March 2, 2023

I will keep this brief. I read “The Maze Is in the Mouse.” The essay is a Xoogler’s lament. The main point is that Google has four issues. The write up identifies these from a first-person point of view:

The way I see it, Google has four core cultural problems. They are all the natural consequences of having a money-printing machine called “Ads” that has kept growing relentlessly every year, hiding all other sins. (1) no mission, (2) no urgency, (3) delusions of exceptionalism, (4) mismanagement.

I agree that “ads” are a big part of the Google challenge. I am not sure about the “mouse” or the “maze.”

Googzilla emerged from an incredible sequence of actions. Taken as a group, these actions made Google the poster child for what smart Silicon Valley brainiacs could accomplish. From the git-go, Google emerged from the Backrub service. Useful research like the CLEVER method was kicking around at some conferences as a breakthrough for determining relevance. The competition was busy trying to become “portals” because the Web indexing thing was expensive and presented what seemed to be an infinite series of programming hoops. Google had zero ways to make money. As I recall, the mom and dad of Googzilla tried to sell the company to those who would listen; for example, the super brainiacs at Yahoo. Then the aha moment. GoTo.com had caused a stir in the Web indexing community by selling traffic. GoTo.com became Overture.com. Yahoo.com (run by super brainiacs, remember) bought Overture. But Yahoo did not have the will, the machinery, or the guts to go big. Yahoo went home. Google went big.

In my opinion, these points make Google the interesting outfit it is:

- The company was seemingly not above receiving inspiration from the GoTo.com, Overture.com, and ultimately Yahoo.com “pay to play” model. Some people don’t know that Google was built on appropriated innovation and paid money and shares to make Yahoo’s legal eagles fly away. For me, Google embodied intellectual “flexibility” and an ethical compass sensitive to expediency. I may be wrong, but the Google does not strike me as being infused with the higher spirits of truth, justice, and the American way, as Superman is. Google’s innovation boils down to borrowing. That’s okay. I borrow, but I try to footnote, not wait until the legal eagles gnaw at my liver.

- Google management, in my experience, was clueless about the broader context of its blend of search and advertising. I don’t think it was a failure of brainiac thinking. The brainiacs did not have a context into which to fit their actions. Larry Page argued with me in 1999 about the value of truncation. He said, “No truncation needed at Google.” Baloney. Google truncates. Google informed a US government agency that Google would not conform to the specifications of the Statement of Work for a major US government search project. A failure to meet the criteria of the Statement of Work made Google ineligible to win that project. What did Google do? Google explained to the government team that the Statement of Work did not apply to Google technology. Well, Statements of Work and procurement work one way. Google did not like that way, so Google complained. Zero context. What Google should have done is address each requirement in a positive manner and turn in the bid. Nope. Operating independently of procurement rules, Google just wanted to make up the rules. Period. That’s the way it is now, and that’s the way Google has operated for nearly 25 years.

- Google is not mismanaged from Google’s point of view. Google is just right by definition. The management problems were inherent and obvious from the beginning. Let me give one example: Vendors struggled with the Google accounting system 20 or more years ago. Google blamed the Oracle database. Why? The senior management did not know what they did not know, and they lacked the mental characteristic of understanding that fact. Because Googlers were assumed to be brainiacs (certified by the dorky Google intelligence test), Googlers believed they could solve any problem. Wrong. Google has made and continues to make decisions like a high school science club planning an experiment. Nice group, just not athletes, cheerleaders, class officers, or non-nerd advisors. What do you get? You get crazy decisions like dumping Dr. Timnit Gebru and creating the Stochastic Parrot conference, as well as letting Microsoft make Bing and Clippy on steroids look like a big deal.

Net net: Ads are important. But Google is Google because of its original and fundamental mental approach to problems: We know better. One thing is sure in my mind: Google does not know itself any better now than it did when it emerged from the Backrub “borrowed” computers and grousing about using too much Stanford bandwidth. Advertising is a symptom of a deeper malady, a mental issue in my opinion.

Stephen E Arnold, March 2, 2023

A Challenge for Intelware: Outputs Based on Baloney

February 23, 2023

I read a thought-troubling write up “Chat GPT: Writing Could Be on the Wall for Telling Human and AI Apart.” The main idea is:

historians will struggle to tell which texts were written by humans and which by artificial intelligence unless a “digital watermark” is added to all computer-generated material…

I noted this passage:

Last month researchers at the University of Maryland in the US said it was possible to “embed signals into generated text that are invisible to humans but algorithmically detectable” by identifying certain patterns of word fragments.

Great idea except:

- The US smart software is not the only code a bad actor could use. Germany’s wizards are moving forward with Aleph Alpha

- There is an assumption that “old” digital information will be available. Digital ephemera applies to everything from information on government Web sites which get minimal traffic to cost cutting at Web indexing outfits which see “old” data as a drain on profits, not a boon to historians

- Digital watermarks are likely to be like “bulletproof” hosting and advanced cyber security systems: The bullets get through and the cyber security systems are insecure.
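For all the objections above, the Maryland idea is easy to sketch. What follows is a toy version of the “green list” approach under simplifying assumptions: whole words instead of model tokens, a SHA-256 hash of each (previous word, candidate) pair to decide which words are “green,” and a fake generator that always picks a green word when it can. The real scheme nudges a language model’s token probabilities rather than forcing choices; the vocabulary and parameters here are hypothetical. A detector that knows the hashing rule counts green transitions and computes a z-score against chance.

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(1000)]  # hypothetical vocabulary of placeholder words
GAMMA = 0.5  # expected share of "green" words at each step


def is_green(prev_word: str, word: str) -> bool:
    # Hashing the (previous word, candidate) pair gives a pseudorandom, reproducible
    # green/red split that shifts with context, roughly half green when GAMMA = 0.5.
    digest = hashlib.sha256(f"{prev_word}|{word}".encode()).digest()
    return digest[0] < 256 * GAMMA


def generate(n: int, watermark: bool, seed: int = 0) -> list[str]:
    # Toy "model": pick among 20 random candidates; the watermarked version prefers green ones.
    rng = random.Random(seed)
    words = [rng.choice(VOCAB)]
    while len(words) < n:
        candidates = rng.sample(VOCAB, 20)
        if watermark:
            green = [w for w in candidates if is_green(words[-1], w)]
            candidates = green or candidates  # fall back if no candidate is green
        words.append(rng.choice(candidates))
    return words


def z_score(words: list[str]) -> float:
    # Compare the observed count of green transitions with what chance would produce.
    t = len(words) - 1
    green = sum(is_green(a, b) for a, b in zip(words, words[1:]))
    return (green - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


if __name__ == "__main__":
    print(round(z_score(generate(200, watermark=True)), 1))   # large and positive
    print(round(z_score(generate(200, watermark=False)), 1))  # usually within a few units of zero
```

The sketch also shows why the bullets above matter: text produced by a model that does not follow the same hashing rule, or text that has been paraphrased, simply does not carry the pattern the detector counts.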

What about intelware for law enforcement and intelligence professionals, crime analysts, and as-yet-unreplaced paralegals trying to make sense of available information? GIGO: Garbage in, garbage out.

Stephen E Arnold, February 23, 2023

A Different View of Smart Software with a Killer Cost Graph

February 22, 2023

I read “The AI Crowd is Mad.” I don’t agree. I think the “in” word is hallucinatory. Several write ups have described the activities of Google and Microsoft as an “arms race.” I am not sure about that characterization either.

The write up includes a statement with which I agree; to wit:

… when listening to podcasters discussing the technology’s potential, a stereotypical assessment is that these models already have a pretty good accuracy, but that with (1) more training, (2) web-browsing support and (3) the capabilities to reference sources, their accuracy problem can be fixed entirely.

In my 50-plus-year career in online information and systems, some problems keep getting kicked down the road. New technology appears and stubs its toe on one of those cans. Rusted cans can slice the careless sprinter on the Information Superhighway and kill the speedy wizard via the tough-to-see Clostridium tetani bacterium. The surface problem is one thing; the problem which chugs unseen below the surface may be a different beastie. Search and retrieval is one of those “problems” which has been difficult to solve. Just ask someone who frittered away beaucoup bucks improving search. Please, don’t confuse monetization with effective precision and recall.

The write up also includes this statement which resonated with me:

if we can’t trust the model’s outcomes, and we paste-in a to-be-summarized text that we haven’t read, then how can we possibly trust the summary without reading the to-be-summarized text?

Trust comes up frequently when discussing smart software. In fact, the Sundar and Prabhakar script often includes the word “trust.” My response has been and will be “Google = trust? Sure.” I am not willing to trust Microsoft’s Sydney or whatever it is calling itself today. After one update, we could not print. Yep, skill in marketing is not reliable software.

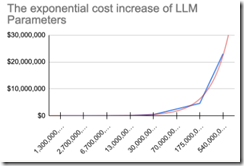

But the highlight of the write up is this chart. For the purpose of this blog post, let’s assume the numbers are close enough for horseshoes:

Source: https://proofinprogress.com/posts/2023-02-01/the-ai-crowd-is-mad.html

What the data suggest to me is that training and retraining models is expensive. Google figured this out. The company wants to train using synthetic data. I suppose it will be better than the content generated by organizations purposely pumping misinformation into the public text pool. Many companies have discovered that models, not just queries, can be engineered to deliver results which the super software wizards did not think about too much. (Remember dying from that cut toe on the Information Superhighway?)

The cited essay includes another wonderful question. Here it is:

But why aren’t Siri and Watson getting smarter?

May I suggest some reasons, based on our dabbling with AI-infused machine indexing of business information in 1981:

- Language is slippery, more slippery than an eel in Vedius Pollio’s eel pond. Thus, subject matter experts have to fiddle to make sure the words in content and the words in a query sort of overlap or overlap enough for the searcher to locate the needed information.

- Narrow domains such as scientific, technical, and medical text are easier to index with software. Broad domains like general content are more difficult for the software. A static model and the new content “drift” apart. This is okay as long as the two are steered together. But who has the time, money, or inclination to admit that software intelligence and human intelligence are not yet the same except in PowerPoint pitch decks and academic papers with mostly non-reproducible results? And who wants narrow domains? Go broad and big or go home.

- The basic math and procedures may be old. Autonomy’s Neuro Linguistic Programming method was crafted by a stats-mad guy in the 18th century. What needs to be fiddled with are [a] sequences of procedures, [b] thresholds for a decision point, [c] software add ons that work around problems that no one knew existed until some smarty pants posts a flub on Twitter, among other issues.
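Point [b] can be made concrete with the kind of old math the list mentions. Autonomy’s technology was widely described as resting on Bayesian inference, so the “stats-mad guy in the 18th century” is presumably Thomas Bayes. Below is a minimal multinomial naive Bayes sketch, not Autonomy’s implementation: it assigns an index term to a document only when the posterior probability clears a threshold. The training snippets, the index terms, and the 0.9 threshold are hypothetical.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    def __init__(self, labeled: list[tuple[str, str]]):
        # labeled: (text, index term) pairs used to estimate word counts per term
        self.word_counts: dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        for text, label in labeled:
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokens(text))
        self.vocab = {w for c in self.word_counts.values() for w in c}

    def posterior(self, text: str) -> dict[str, float]:
        # Bayes' rule in log space with add-one (Laplace) smoothing, then normalized.
        total_docs = sum(self.doc_counts.values())
        logp: dict[str, float] = {}
        for label, counts in self.word_counts.items():
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[label] / total_docs)
            for w in tokens(text):
                score += math.log((counts[w] + 1) / denom)
            logp[label] = score
        top = max(logp.values())
        unnorm = {k: math.exp(v - top) for k, v in logp.items()}
        z = sum(unnorm.values())
        return {k: v / z for k, v in unnorm.items()}


def assign_term(model: NaiveBayes, text: str, label: str, threshold: float = 0.9) -> bool:
    # The decision point: index the term only if the posterior clears the threshold.
    return model.posterior(text).get(label, 0.0) >= threshold


if __name__ == "__main__":
    model = NaiveBayes([
        ("crude oil prices rose as opec cut output", "energy"),
        ("refinery output and oil futures climbed", "energy"),
        ("the central bank raised interest rates", "finance"),
        ("bond yields rose after the rate decision", "finance"),
    ])
    print(assign_term(model, "oil output", "energy"))      # False: posterior is just under 0.9
    print(assign_term(model, "oil output cut", "energy"))  # True: posterior is about 0.94
```

With these toy numbers, nudging the threshold from 0.9 to 0.85 changes what gets indexed without touching the 18th-century math, which is the sort of fiddling the list describes.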

Net net: We are in the midst of a marketing war. The AI part of the dust up is significant, but with the application of flawed smart software to the generation of content which may be incorrect, another challenge awaits: The Edsel and New Coke of artificial intelligence.

Stephen E Arnold, February 22, 2023

Summarize for a Living: Should You Consider a New Career?

February 13, 2023

In the pre-historic age of commercial databases, many people earned money by reading and summarizing articles, documents, monographs, and consultant reports. In order to prepare and fact check a 130-word summary of an article in the Harvard Business Review in 1980, the cost to the database publisher worked out to something like $17 to $25 per summary for what I would call general business information. (If you want more information about this number, write benkent2020@yahoo.com, and maybe you will get more color on the number.) Flash forward to the present: the cost for a human to summarize an article in the Harvard Business Review has increased. That’s why it is necessary to pay to access and print an abstract from a commercial online service. Even with yesterday’s technology, the costs are a killer. Now you know why software that eliminates the human, the editorial review, the fact checking, and the editorial policies which define what is indexed, why, and what terms are assigned, is a joke to many of those in the search and retrieval game.

I mention this because if you are in the A&I business (abstracting and indexing), you may want to take a look at HunKimForks ChatGPT Arxiv Extension. The idea is that ChatGPT can generate an abstract which is certainly less fraught with cost and management hassles than running one of the commercial database content generation systems dependent on humans, some with degrees in chemistry, law, or medicine.

Are the summaries any good? For the last 40 years abstracts and summaries have been, in my opinion, degrading. Fact checking is out the window along with editorial policies, style guidelines, and considered discussion of index terms, classification codes, time handling and signifying, among other useful knowledge attributes.

Three observations:

- Commercial database publishers may want to check out this early-days open source contribution

- Those engaged in abstracting, writing summaries of books, and generating distillations of turgid government documents (yep, blue chip consulting firms, I am thinking of you) may want to think about their future

- Say “hello” to increasingly inaccurate outputs from smart software. Recursion and liquid algorithms are not into factual stuff.

Stephen E Arnold, February 13, 2023

Newton and Shoulders of Giants? Baloney. Is It Everyday Theft?

January 31, 2023

Here I am in rural Kentucky. I have been thinking about the failure of education. I recall learning from Ms. Blackburn, my high school algebra teacher, this statement by Sir Isaac Newton, the apple and calculus guy:

If I have seen further, it is by standing on the shoulders of giants.

Did Sir Isaac actually say this? I don’t know, and I don’t care too much. It is the gist of the sentence that matters. Why? I just finished reading — and this is the actual article title — “CNET’s AI Journalist Appears to Have Committed Extensive Plagiarism. CNET’s AI-Written Articles Aren’t Just Riddled with Errors. They Also Appear to Be Substantially Plagiarized.”

How is any self-respecting, super buzzy smart software supposed to know anything without ingesting, indexing, vectorizing, and any other math magic the developers have baked into the system? Did Brunelleschi wake up one day and do the Eureka! thing? Maybe he stood on line and entered the Pantheon and looked up? Maybe he found a wasp’s nest and cut it in half and looked at what the feisty insects did to build a home? Obviously intellectual theft. Just because the dome still stands, when it falls, he is an untrustworthy architect engineer. Argument nailed.

The write up focuses on other ideas; namely, being incorrect and stealing content. Okay, those are interesting and possibly valid points. The write up states:

All told, a pattern quickly emerges. Essentially, CNET‘s AI seems to approach a topic by examining similar articles that have already been published and ripping sentences out of them. As it goes, it makes adjustments — sometimes minor, sometimes major — to the original sentence’s syntax, word choice, and structure. Sometimes it mashes two sentences together, or breaks one apart, or assembles chunks into new Frankensentences. Then it seems to repeat the process until it’s cooked up an entire article.
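The pattern described in that passage (lift a sentence, tweak a word or two, move on) is the sort of thing a simple overlap check can surface. A minimal sketch, not the method Futurism used: break each sentence into word trigrams (“shingles”) and compare them with Jaccard similarity. A lightly edited sentence keeps most of its trigrams; an independently written one keeps few. The example sentences are made up, and the 0.3 flag threshold is an assumption.

```python
import re


def shingles(sentence: str, n: int = 3) -> set[tuple[str, ...]]:
    # Overlapping word n-grams; lightly edited copies share most of them.
    words = re.findall(r"[a-z0-9']+", sentence.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    # Size of the overlap divided by the size of the union.
    return len(a & b) / len(a | b) if a | b else 0.0


def flag_suspect_pairs(candidate: list[str], sources: list[str], threshold: float = 0.3):
    # Return (candidate sentence, source sentence, similarity) for pairs above the threshold.
    hits = []
    for c in candidate:
        cs = shingles(c)
        for s in sources:
            score = jaccard(cs, shingles(s))
            if score >= threshold:
                hits.append((c, s, round(score, 2)))
    return hits


if __name__ == "__main__":
    source = ["A certificate of deposit is a savings account that holds a fixed "
              "amount of money for a fixed period of time"]
    candidate = [
        "A certificate of deposit is a savings account that holds a set "
        "amount of money for a fixed period of time",
        "Savings rates at online banks climbed again this month",
    ]
    for hit in flag_suspect_pairs(candidate, source):
        print(hit[2], "|", hit[0])  # only the lightly reworded sentence is flagged (0.73)
```

Heavier rewording or “Frankensentences” stitched from several sources lower the score, so a fixed threshold like this one catches the lazy cases and misses the clever ones.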

For a short (very, very brief) time I taught freshman English at a big time university. What the Futurism article describes is how I interpreted the work process of my students. Those entitled and enquiring minds just wanted to crank out an essay that would meet my requirements and hopefully get an A or a 10, which was a signal that Bryce or Helen was a very good student. Then go to a local hangout and talk about Heidegger? Nope, mostly about the opposite sex, music, and getting their hands on a copy of Dr. Oehling’s test from last semester for European History 104. Substitute the topics you talked about to make my statement more “accurate,” please.

I loved the final paragraphs of the Futurism article. Not only is a competitor tossed over the argument’s wall, but the Google and its outstanding relevance finds itself a target. Imagine. Google. Criticized. The article’s final statements are interesting; to wit:

As The Verge reported in a fascinating deep dive last week, the company’s primary strategy is to post massive quantities of content, carefully engineered to rank highly in Google, and loaded with lucrative affiliate links. For Red Ventures, The Verge found, those priorities have transformed the once-venerable CNET into an “AI-powered SEO money machine.” That might work well for Red Ventures’ bottom line, but the specter of that model oozing outward into the rest of the publishing industry should probably alarm anybody concerned with quality journalism or — especially if you’re a CNET reader these days — trustworthy information.

Do you like the word trustworthy? I do. Does Sir Isaac fit into this future-leaning analysis? Nope, he’s still pre-occupied with proving that the evil Gottfried Wilhelm Leibniz was tipped off about tiny rectangles and the methods thereof. Perhaps Futurism can blame smart software?

Stephen E Arnold, January 31, 2023

Billable Hours: The Practice of Time Fantasy

January 16, 2023

I am not sure how I ended up at a nuclear company in Washington, DC in the 1970s. I was stumbling along in a PhD program, fiddling around indexing poems for professors, and writing essays no one other than some PhD teaching the class would ever read. (Hey, come to think of it, that’s the position I am in today. I write essays, and no one reads them. Progress? I hope not. I love mediocrity, and I am living in the Golden Age of meh and good enough.)

I recall arriving and learning from the VP of Human Resources that I had to keep track of my time. Hello? I worked on my own schedule, and I never paid attention to time. Wait. I did. I knew when the university’s computer center would be least populated by people struggling with IBM punch cards and green bar paper.

Now I had to record, according to Nancy Apple (I think that was her name): [a] The project number, [b] the task code, and [c] the number of minutes I worked on that project’s task. I pointed out that I would be shuttling around from government office to government office and then back to the Rockville administrative center and laboratory.

She explained that travel time had a code. I would have a project number, a task code for sitting in traffic on the Beltway, and a watch. Fill in the blanks.

As you might imagine, part of the learning curve for me was keeping track of time. I sort of did this, but as I became more and more engaged in the work about which I cannot speak, I filled in the time sheets every week. Okay, okay. I would fill in the time sheets when someone in Accounting called me and said, “I need your time sheets. We have to bill the client tomorrow. I want the time sheets now.”

As I muddled through my professional career, I understood how people worked and created time fantasy sheets. The idea was to hit the billable hour target without getting an auditor to camp out in my office. I thought of my learnings when I read “A Woman Who Claimed She Was Wrongly Dismissed Was Ordered to Repay Her Former Employer about $2,000 for Misrepresenting Her Working Hours.”

The write up, which may or may not be written by a human, states:

Besse [the time fantasy enthusiast] met with her former employer on March 29 last year. In a video recording of the meeting shared with the tribunal, she said: “Clearly, I’ve plugged time to files that I didn’t touch and that wasn’t right or appropriate in any way or fashion, and I recognize that and so for that I’m really sorry.” Judge Megan Stewart concluded that TimeCamp [the employee monitoring software watching the time fantasist] “likely accurately recorded” Besse’s work activities. She ordered Besse to compensate her former employer for a 50-hour discrepancy between her timesheets and TimeCamp’s records. In total, Besse was ordered to pay Reach a total of C$2,603 ($1,949) to compensate for wages and other payments, as well as C$153 ($115) in costs.

But the key passage for me was this one:

In her judgment, Stewart wrote: “Given that trust and honesty are essential to an employment relationship, particularly in a remote-work environment where direct supervision is absent, I find Miss Besse’s misconduct led to an irreparable breakdown in her employment relationship with Reach and that dismissal was proportionate in the circumstances.”

Far be it from me to raise questions, but I do have one: “Do lawyers engage in time fantasy billing?”

Of course not, “trust and honesty are essential.”

That’s good to know. Now what about PR and SEO billings? What about consulting firm billings?

If the claw back angle worked for this employer-employee set up, 2023 will be thrilling for lawyers, who obviously will not engage in time fantasy billing. Trust and honesty, right?

Stephen E Arnold, January 16, 2023

Thinking about Google in 2023: Hopefully Not Like Stuff Does

January 5, 2023

I have not been thinking about Google per se. I do think about [a] its management methods (Hello, Dr. Timnit Gebru), [b] its attempt to solve death, [c] the Googlers’ love of American basketball March Madness, [d] assorted semi-quiet settlements for alleged line-crossing activities, [e] the fish bowl culture with some species of fish decidedly further up the Great Chain of Googley Creatures, [f] the efforts to control costs using methods that are mostly invisible like possibly indexing less, embracing the snorkel view of the fish bowl, and abandoning the quaint notions of precision and recall, and [g] efforts to craft remarkable explanations for why the firm’s quantum supremacy and smart software have been on the receiving end of ChatGPT supersonic fly-bys.

The Stuff article “What to Expect from Google in 2023” takes a different approach; specifically, the article highlights a folding Pixel phone (er, hasn’t this been accomplished already?), gaming Chromebooks (er, what about the Stadia money pit, service termination, and refunds?), a Pixel tablet (er, another one-trick limping pony in the mobile device race?), a Pixel watch (er, Apple are you prepared?), Android refresh (er, how about that Android fragmentation?), and a reference to Google’s penchant for killing services. Do you remember Dodgeball or Waze?

My concern with this type of Google in 2023 article is that it misses the major challenges Google faces. I am not sure Google is aware of the challenges it faces. Life in a fish bowl is good until it isn’t. Not even a snappier snorkel will help. And if the water is fouled, what will the rank ordered fish do?

I know. I know. Solve death.

Stephen E Arnold, January 6, 2023