How Do You Know You Have Been Fired? 700 Words about People Skills

January 26, 2023

I read “Some Google Employees Didn’t Realize They Were Laid Off Until Their Badges Wouldn’t Let Them into the Office.” The write up reports in the manner of a person learning something quite surprising:

One laid-off Google employee, a software engineer who requested anonymity to speak freely, told Insider that he witnessed one of his co-workers repeatedly try to scan his employee badge to get into Google’s Chelsea, New York office, only for the card reader to turn red and deny him entry.

Yep. Code Red. Badge denied light Red. Google management Red Faced? Nah. Just marketing and a few others. No big deal.

How is Googzilla supposed to fire people? Get one of the crack People People to talk face-to-face with a Google wizard? Ain’t happening, kiddo. Perhaps a chill video call to which the newly unemployed super brains can connect and watch a video explaining that you are now officially non-essential. The good news, of course, is that one can say, “I am a Xoogler. I will start a venture fund. Or, I will invent the next great app powered by ChatGPT. Or, Mom, I will be moving in next week. I’ve been fired.”

Let’s go back in time. How about the mid 1970s, when the US government urged buildings housing work deemed sensitive to implement better security systems? At the time, many buildings used a person sitting behind a big desk with a bunch of paper. One would state one’s name and the person one wanted to visit face-to-face. I told you we were going back in time. The person at the desk would use a telephone handset connected to a big console and call the extension of the person whom one wanted to meet. Then that person would send someone down to escort the outsider to a suitable meeting room. (Don’t ask about the measures in place in the meeting room, please.)

An employee would show an official badge, typically connected to an item of clothing or hanging from a lanyard. The person behind the desk would smile in recognition, push a button, and a gate would open. The person with the badge would walk to the elevators and ride to the appropriate floor. There are variations, of course.

But the main idea is that this electronic smart security was not in place. When a person was to be fired, that person would typically be in a cube or a manager’s office. The blow was delivered in person, sometimes with a bloodhound’s sad look or a bit of a smile that suggested the manager delivering the death blow was having fun.

Then the revolution. The building in which I worked toward the end of the 1970s got the electric key card thing. The day after that system was installed, my boss who ran the standalone unit of a blue chip consulting firm decided to fire people by disabling the person’s key card. Believe it or not, the Big Boss, the head of what was then called Human Resources, and I drove from the underground parking garage to the No Parking zone in front of the building and watched as people found their key card had been disabled.

My recollection is that because the firm had RIFed a couple of hundred people earlier in the week, we could observe the former blue chippers’ reactions. It was interesting. The most amazing thing is that the head of Human Resources put in place a procedure to terminate people via a phone call, allow them to return to the building and enter with a security escort to retrieve pictures of the wives, girl friends, animals, boats, or swimming trophy. Then the person could put the personal effects in a banker’s box and the escort would get the person out of the building. The escort then collected the dead key card.

That’s humane. What’s interesting is that Google’s management team ignored the insight our Human Resources person had: Find a way to minimize the craziness outside of the building. Avoid creating a news event on a busy street in Washington, DC. Figure out a procedure that eliminates, “Can you send me the picture of my dog Freddy?” to a person still working at the blue chip outfit.

But Google. Nope. Now it’s headline time and public exposure of the firm’s management excellence.

Stephen E Arnold, January 26, 2023

Social Media Scam-A-Rama

January 26, 2023

The Internet is a virtual playground for scam artists. While it is horrible that bad actors can get away with their crimes, the depth and creativity they bring to chasing “easy money” are also impressive. Fortune shares the soap opera-worthy saga in “Social Media Influencers Are Charged With Feeding Followers ‘A Steady Diet Of Misinformation’ In A Pump And Dump Stock Scheme That Netted $100 Million.”

The US Justice Department and the Securities and Exchange Commission (SEC) busted eight purported social media influencers who specialized in stock market trading advice. From 2020 to April 2022, they tricked their amateur investor audience of over 1.5 million Twitter users into investing funds in a “pump-and-dump” scheme. The scheme worked as follows:

“Seven of the social-media influencers promoted themselves as successful traders on Twitter and in Discord chat rooms and encouraged their followers to buy certain stocks, the SEC said. When prices or volumes of the promoted stocks would rise, the influencers ‘regularly sold their shares without ever having disclosed their plans to dump the securities while they were promoting them,’ the agency said. ‘The defendants used social media to amass a large following of novice investors and then took advantage of their followers by repeatedly feeding them a steady diet of misinformation,’ said the SEC’s Joseph Sansone, chief of the SEC Enforcement Division’s Market Abuse Unit.”

The ring’s eighth member hosted a podcast that promoted the co-conspirators as experts. The entire group posted about their luxury lifestyles to fool their audiences further about their stock market expertise.

All of the bad actors could face a max penalty of ten to twenty-five years in prison for fraud and/or unlawful monetary transactions. The SEC is cracking down on cryptocurrency schemes given the large number of celebrities who are hired to promote schemes. The celebrities claim to be innocent, because they were paid to promote a product and were not aware of the scam.

However, how innocent are these people when they use their status to make more money off their fans? They should follow Shaq’s example and research the products they are associated with before accepting a check…unless they are paid in cryptocurrency. That would be poetic justice!

Whitney Grace, January 26, 2023

Killing Wickr

January 26, 2023

Encrypted messaging services are popular for privacy-concerned users as well as freedom fighters in authoritarian countries. Tech companies consider these messaging services to be a wise investment, so Amazon purchased Wickr in 2021. Wickr is an end-to-end encrypted messaging app and it was made available for AWS users. Gizmodo explains that Wickr will soon be nonexistent in the article, “Amazon Plans To Close Up Wickr’s User-Centric Encrypted Messaging App.”

Amazon no longer wants to be part of the encrypted messaging services, because it got too saturated like the ugly Christmas sweater market. Amazon is killing the Wickr Me app, limiting use to business and public sectors through AWS Wickr and Wickr Enterprise. New registrations end on December 31 and the app will be obsolete by the end of 2023.

Wickr was worth $60 million when Amazon purchased it. Amazon, however, lost $1 trillion in stock value in November 2022, becoming the first company in history to claim that “honor.” Amazon is laying off employees and working through company buyouts. Changing Wickr’s target market could recoup some of the losses:

“But AWS apparently wants Wickr to focus on its business and government customers much more than its regular users. Among those public entities using Wickr is U.S. Customs and Border Protection. That contract was reportedly worth around $900,000 when first reported in September last year. Sure, the CBP wants encrypted communications, but Wickr can delete all messages sent via the app, which is an increasingly dangerous proposition for open government advocates.”

Wickr, like other encryption services, does not have a clean record. It has been used for illegal drug sales and other illicit items via the Dark Web. At one time, Wickr might have been a source of useful metadata. Not now. Odd.

Whitney Grace, January 26, 2023

Googzilla Squeezed: Will the Beastie Wriggle Free? Can Parents Help Google Wiggle Out?

January 25, 2023

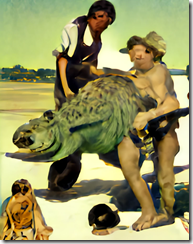

How easy was it for our prehistoric predecessors to capture a maturing reptile? I am thinking of Googzilla. (That’s my way of conceptualizing the Alphabet Google DeepMind outfit.)

This capturing the dangerous dinosaur shows one regulator and one ChatGPT dev in the style of Norman Rockwell (who may be spinning in his grave). The art was output by the smart software in use at Craiyon.com. I love those wonky spellings and the weird video ads and the image obscuring Next and Stay buttons. Is this the type of software the Google fears? I believe so.

On one side of the creature is the pesky ChatGPT PR tsunami. Google’s management team had to call Google’s parents to come to the garage. The whiz kids find themselves in a marketing battle. Imagine, a technology that Facebook dismisses as not a big deal, needs help. So the parents come back home from their vacations and social life to help out Sundar and Prabhakar. I wonder if the parents are asking, “What now?” and “Do you think these whiz kids want us to move in with them?” Forbes, the capitalist tool with annoying pop ups, tells one side of the story in “How ChatGPT Suddenly Became Google’s Code Red, Prompting Return of Page and Brin.”

On the other side of Googzilla is a weak looking government regulator. The Wall Street Journal (January 25, 2023) published “US Sues to Split Google’s Ad Empire.” (Paywall alert!) The main idea is that after a couple of decades of “Google is free, great, and gives away nice tchotchkes,” US Federal and state officials want the Google to morph into a tame lizard.

Several observations:

- I find it amusing that Google had to call its parents for help. There’s nothing like a really tough, decisive set of whiz kids

- The Google has some inner strengths, including lawyers, lobbyists, and friends who really like Google mouse pads, LED pins, and T shirts

- Users of ChatGPT may find that as poor as Google’s search results are, the burden of figuring out an “answer” falls on the user. If the user cooks up an incorrect answer, the Google is just presenting links or it used to. When the user accepts a ChatGPT output as ready to use, some unforeseen consequences may ensue; for example, getting called out for presenting incorrect or stupid information, getting sued for copyright violations, or assuming everyone is using ChatGPT so go with the flow

Net net: Capturing and getting the vet to neuter the beastie may be difficult. Even more interesting is the impact of ChatGPT on allegedly calm, mature, and seasoned managers. Yep, Code Red. “Hey, sorry to bother you. But we need your help. Right now.”

Stephen E Arnold, January 25, 2023

Fixing Social Media: Sure Enough

January 25, 2023

It is not that social media platforms set out to do harm, exactly. They just regularly prioritize profits above the wellbeing of society. Brookings’ Tech Stream hopes to help mitigate one such ill in, “How Social Media Platforms Can Reduce Polarization.” The advice is just a bit late, though, by about 15 years. If we had known then what we know now, perhaps we could have kept tech companies from getting addicted to stirring the pot in the first place.

Nevertheless, journalists Christian Staal Bruun Overgaard and Samuel Woolley do a good job describing the dangers of today’s high polarization, how we got here, and what might be done about it. See the article for that discussion complete with many informative links. Regarding where to go from here, the authors note that (perhaps ironically) social media platforms are in a good position to help reverse the trend, should they choose to do so. They tell us:

“Our review of the scientific literature on how to bridge societal divides points to two key ideas for how to reduce polarization. First, decades of research show that when people interact with someone from their social ‘outgroup,’ they often come to view that outgroup in a more favorable light. Significantly, individuals do not need to take part in these interactions themselves. Exposure to accounts of outgroup contact in the media, from news articles to online videos, can also have an impact. Both positive intergroup contact and stories about such contact have been shown to dampen prejudice toward various minority groups.

The second key finding of our review concerns how people perceive the problem of polarization. Even as polarization has increased in recent years, survey research has consistently shown that many Americans think the nation is more divided than it truly is. Meanwhile, Democrats and Republicans think they dislike each other more than they actually do. These misconceptions can, ironically, drive the two sides further apart. Any effort to reduce polarization thus also needs to correct perceptions about how bad polarization really is. For social media platforms, the literature on bridging societal divides has important implications.”

The piece discusses five specific recommendations for platforms: surface more positive interparty contact, prioritize content that’s popular among disparate user groups, correct misconceptions, design better user interfaces, and collaborate with researchers. Will social media companies take the researchers’ advice to actively promote civil discourse over knee-jerk negativity? Only if accountability legislation and PR headaches can ever outweigh the profit motive.

The UK has a different idea: Send the executives of US social media companies to prison.

Cynthia Murrell, January 25, 2023

Japan Does Not Want a Bad Apple on Its Tax Rolls

January 25, 2023

Everyone is falling over themselves about a low-cost Mac Mini. A few Japanese government officials, however, are not.

An accountant once gave me some advice: never anger the IRS. A governmental accounting agency that arms its employees with guns is worrisome. It is even more terrifying to anger a foreign government accounting agency. The Japanese equivalent of the IRS smacked Apple with the force of a tsunami in fees and tax penalties. Channel News Asia reported: “Apple Japan Hit With $98 Million In Back Taxes-Nikkei.”

The Japanese branch of Apple is being charged with $98 million (13 billion yen) for bulk sales of Apple products sold to tourists. The product sales, mostly consisting of iPhones, were wrongly exempted from consumption tax. The error surfaced when a foreigner was caught purchasing large amounts of handsets in one shopping trip. If a foreigner visits Japan for less than six months, they are exempt from the ten percent consumption tax unless the products are intended for resale. Because the foreign shopper purchased so many handsets at once, it is believed they were cheating the Japanese tax system.

The Japanese counterpart to the IRS brought this to Apple Japan’s attention and the company handled it in the most Japanese way possible: quiet acceptance. Apple will pay the large tax bill:

“Apple Japan is believed to have filed an amended tax return, according to Nikkei. In response to a Reuters’ request for comment, the company only said in an emailed message that tax-exempt purchases were currently unavailable at its stores. The Tokyo Regional Taxation Bureau declined to comment.”

Apple America responded that the company invested over $100 billion in the Japanese supply network in the past five years.

Japan is a country dedicated to advancing technology and, despite its declining population, it possesses one of the most robust economies in Asia. Apple does not want to lose that business, so paying $98 million is a small price for continuing to do business in Japan.

Whitney Grace, January 25, 2023

Responding to the PR Buzz about ChatGPT: A Tale of Two Techies

January 24, 2023

One has to be impressed with the PR hype about ChatGPT. One can find tip sheets for queries (yes, queries matter… a lot), ideas for new start ups, and Sillycon Valley pundits yammering on podcasts. At an Information Industry Association meeting in Boston, Massachusetts, a person whom I think was called Marvin or Martin Wein-something made an impassioned statement about the importance of artificial intelligence. I recall his saying, “It is happening. Now.”

Marvin or Martin made that statement, which still sticks in my mind, in 1982 or so. That works out to 40 years ago.

What strikes me this morning is the difference between the response of Facebook and Google. This is a Tale of Two Techies.

In the case of Google, it is Red Alert time. The fear is palpable among the senior managers. How do I know? I read “Google Founders Return As ChatGPT Threatens Search Business.” I could trot out some parallels between Google management’s fear and the royals threatened by riff raff. Make no mistake. The Googlers have quantum supremacy and the DeepMind protein and game playing machine. I recall reading or being told that Google has more than 20 applications that will be available… soon. (Wasn’t that type of announcement once called vaporware?) The key point is that the Googlers are frightened, and like Disney, have had to call the team of Brin and Page to revivify the thinking about the threat to the search business. I want to remind you that the search business was inspired by Yahoo’s Overture approach. Google settled some litigation and the rest is history. Google became an alleged monopoly and Yahoo devolved into a spammy email service.

And what about Facebook? I noted this article: “ChatGPT Is Not Particularly Innovative and Nothing Revolutionary, Says Meta’s Chief AI Scientist.” The write up explains that Meta’s stance with regard to the vibe machine ChatGPT is “meh.” I think Meta or the Zuckbook does care, but Meta has a number of other issues to which the proud firm must respond. Smart software that seems to be a Swiss Army knife of solutions is “nothing revolutionary.” Okay.

Let’s imagine we are in college in one of those miserable required courses in literature. Our assignment is to analyze the confection called the Tale of Two Techies. What’s the metaphorical pivot for this soap opera?

Here’s my take:

- Meta is either too embarrassed, too confused, or too overwhelmed with on-going legal hassles to worry too much about ChatGPT. Putting on the “meh” is good enough. The company seems to be saying, “We don’t care too much… at least in public.”

- Google is running around with its hair on fire. The senior management team’s calling on the dynamic duo to save the day is indicative of the mental short circuits the company exhibits.

Net net: Good, bad, or indifferent, ChatGPT makes clear the lack of what one might call big time management thinking. Is this new? Sadly, no.

Stephen E Arnold, January 24, 2023

Why Governments and Others Outsource… Almost Everything

January 24, 2023

I read a very good essay called “Questions for a New Technology.” The core of the write up is a list of eight questions. Most of these are problems for full-time employees. Let me give you one example:

Are we clear on what new costs we are taking on with the new technology? (monitoring, training, cognitive load, etc)

The challenge strikes me as the phrase “new technology.” By definition, most people in an organization will not know the details of the new technology. If a couple of people do, these individuals have to get the others up to speed. The other problem is that it is quite difficult for humans to look at a “new technology” and know about the knock on or downstream effects. A good example is the craziness of Facebook’s dating objective and how the system evolved into a mechanism for social revolution. What in-house group of workers can tackle problems like that once the method leaves the dorm room?

The other questions probe similarly difficult tasks.

But my point is that most governments do not rely on their full time employees to solve problems. Years ago I gave a lecture at Cebit about search. One person in the audience pointed out that in that individual’s EU agency, third parties were hired to analyze and help implement a solution. The same behavior popped up in Sweden, the US, and Canada and several other countries in which I worked prior to my retirement in 2013.

Three points:

- Full time employees recognize the impossibility of tackling fundamental questions and don’t really try

- The consultants retained to answer the questions or help answer the questions are not equipped to answer the questions either; they bill the client

- Fundamental questions are dodged by management methods like “let’s push decisions down” or “we decide in an organic manner.”

Doing homework and making informed decisions is hard. Learning, evaluating risks, and implementing in a thoughtful manner are uncomfortable for many people. The result is the dysfunction evident in airlines, government agencies, hospitals, education, and many other disciplines. Scientific research is often non reproducible. Is that a good thing? Yes, if one lacks expertise and does not want to accept responsibility.

Stephen E Arnold, January 25, 2023

OpenAI Working on Proprietary Watermark for Its AI-Generated Text

January 24, 2023

Even before OpenAI made its text generator GPT-3 available to the public, folks were concerned the tool was too good at mimicking the human-written word. For example, what is to keep students from handing their assignments off to an algorithm? (Nothing, as it turns out.) How would one know? Now OpenAI has come up with a solution—of sorts. Analytics India Magazine reports, “Generated by Human or AI: OpenAI to Watermark its Content.” Writer Pritam Bordoloi describes how the watermark would work:

“‘We want it to be much harder to take a GPT output and pass it off as if it came from a human,’ [OpenAI’s Scott Aaronson] revealed while presenting a lecture at the University of Texas at Austin. ‘For GPT, every input and output is a string of tokens, which could be words but also punctuation marks, parts of words, or more—there are about 100,000 tokens in total. At its core, GPT is constantly generating a probability distribution over the next token to generate, conditional on the string of previous tokens,’ he said in a blog post documenting his lecture. So, whenever an AI is generating text, the tool that Aaronson is working on would embed an ‘unnoticeable secret signal’ which would indicate the origin of the text. ‘We actually have a working prototype of the watermarking scheme, built by OpenAI engineer Hendrik Kirchner.’ While you and I might still be scratching our heads about whether the content is written by an AI or a human, OpenAI—who will have access to a cryptographic key—would be able to uncover a watermark, Aaronson revealed.”

Great! OpenAI will be able to tell the difference. But … how does that help the rest of us? If the company just gifted the watermarking key to the public, bad actors would find a way around it. Besides, as Bordoloi notes, that would also nix OpenAI’s chance to make a profit off it. Maybe it will sell it as a service to certain qualified users? That would be an impressive example of creating a problem and selling the solution—a classic business model. Was this part of the firm’s plan all along? Plus, the killer question, “Will it work?”
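For the curious, here is a rough idea of how a keyed “secret signal” could ride along with next-token generation. This is a toy sketch only. The vocabulary, the `toy_model` stand-in, the `keyed_score` function, the four-token context window, and the selection rule (pick the token that maximizes ln(r)/p, where p is the model’s probability and r is a key-derived pseudorandom number) are all assumptions made for illustration. The quoted article does not spell out OpenAI’s actual method, and this is not it.

```python
import hashlib
import hmac
import math
import random

# Toy vocabulary (assumption); the article says a real model has about 100,000 tokens.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "under", "mat", "rug"]
CONTEXT = 4  # previous tokens fed to the keyed function (arbitrary choice)


def toy_model(prev_tokens):
    """Hypothetical stand-in for GPT: a probability for each VOCAB token,
    conditional on the string of previous tokens."""
    rng = random.Random(" ".join(prev_tokens))
    weights = [rng.random() + 0.05 for _ in VOCAB]
    total = sum(weights)
    return [w / total for w in weights]


def keyed_score(secret, prev_tokens, token):
    """Pseudorandom number strictly between 0 and 1, fixed by the secret key,
    the recent context, and the candidate token."""
    msg = "|".join(prev_tokens[-CONTEXT:] + [token]).encode()
    digest = hmac.new(secret, msg, hashlib.sha256).digest()
    return (int.from_bytes(digest[:6], "big") + 0.5) / 2**48


def generate(length, secret=None, seed=0):
    """Without a secret, sample from the model as usual. With a secret,
    pick the token maximizing ln(r) / p, which still favors likely tokens
    but lets the key steer the choice in a way outsiders cannot see."""
    rng = random.Random(seed)
    tokens = []
    for _ in range(length):
        probs = toy_model(tokens)
        if secret is None:
            token = rng.choices(VOCAB, weights=probs)[0]
        else:
            token = max(
                zip(VOCAB, probs),
                key=lambda tp: math.log(keyed_score(secret, tokens, tp[0])) / tp[1],
            )[0]
        tokens.append(token)
    return tokens


def detect(secret, tokens):
    """Average of -ln(1 - r) over the text. Ordinary text should hover
    near 1.0; text generated with this key should score noticeably higher."""
    total = 0.0
    for i, token in enumerate(tokens):
        total += -math.log(1.0 - keyed_score(secret, tokens[:i], token))
    return total / len(tokens)


secret = b"hypothetical-secret-key"
marked = generate(200, secret=secret)
plain = generate(200)
print("watermarked, right key:", round(detect(secret, marked), 2))
print("watermarked, wrong key:", round(detect(b"wrong-key", marked), 2))
print("ordinary text:         ", round(detect(secret, plain), 2))
```

The point of the sketch is the asymmetry the write up worries about: whoever holds `secret` can score a passage, and everyone else sees text that looks like any other output. A short or heavily edited passage would give the detector much less to work with.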

Cynthia Murrell, January 24, 2023

Quote to Note: AI and the Need to Do

January 23, 2023

The source is a wizard from Stanford University. The quote to note appears in “Stanford Faculty Weigh In on ChatGPT’s Shake-Up in Education.”

“We need the use of this technology to be ethical, equitable, and accountable.”

Several questions come to mind:

- Is the Stanford Artificial Intelligence Lab into “ethical, equitable, and accountable”?

- Is the Stanford business school into “ethical, equitable, and accountable”?

- Are the Stanford computer science units into “ethical, equitable, and accountable”?

Nice sentiment for a sentiment analysis program. Disconnected from reality? From my perspective, absolutely.

Stephen E Arnold, January 23, 2023