A Former Yahooligan and Xoogler Offers Management Advice: Believe It or Not!

November 22, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read a remarkable interview / essay / news story called “Former Yahoo CEO Marissa Mayer Delivers Sharp-Elbowed Rebuke of OpenAI’s Broken Board.” Marissa Mayer was a Googler. She then became the Top Dog at Yahoo. Highlights of her tenure at Yahoo, according to Inc.com, included:

- Fostering a “superstar status” for herself

- Pointing a finger in a chastising way at remote workers

- Trying to obfuscate Yahooligan layoffs

- Making slow job cuts

- Lack of strategic focus (maybe Tumblr, Yahoo’s mobile strategy, the search service, perhaps?)

- Tactical missteps in diversifying Yahoo’s business (the Google disease in my opinion)

- Setting timetables and then ignoring, missing, or changing them

- Weird PR messages

- Using fear (and maybe uncertainty and doubt) as management methods.

The senior executives of a high technology company listen to a self-anointed management guru. One of the bosses allegedly said, “I thought Bain and McKinsey peddled a truckload of baloney. We have the entire factory in front of us.” Thanks, MSFT Copilot. Is Sam the AI-Man on duty?

So what does this exemplary manager have to say? Let’s go to the original story:

“OpenAI investors (like @Microsoft) need to step up and demand that the governance weaknesses at @OpenAI be fixed,” Mayer wrote Sunday on X, formerly known as Twitter.

Was Microsoft asleep at the switch or simply operating within a Cloud of Unknowing? Fast-talking Satya Nadella was busy trying to make me think he was operating in a normal manner. Had he known something was afoot, would he have been equipped to deal with burning effigies as a business practice?

Ms. Mayer pointed out:

“The fact that Ilya now regrets just shows how broken and under advised they are/were,” Mayer wrote on social media. “They call them board deliberations because you are supposed to be deliberate.”

Brilliant! Was that deliberative process used to justify the purchase of Tumblr?

The Business Insider write up revealed an interesting nugget:

The Information reported that the former Yahoo CEO’s name had been tossed around by “people close to OpenAI” as a potential addition to the board…

Okay, a Xoogler and a Yahooligan in one package.

Stephen E Arnold, November 22, 2023

Poli Sci and AI: Smart Software Boosts Bad Actors (No Kidding?)

November 22, 2023

This essay is the work of a dumb humanoid. No smart software required.

Smart software (AI, machine learning, et al) has sparked awareness in some political scientists. Until I read “Can Chatbots Help You Build a Bioweapon?”, I thought political scientists were still pondering the social policies of Frederick William, Elector of Brandenburg, or Cambodian law in the 11th century. I was incorrect. Modern poli sci influenced wonks are starting to wrestle with the immense potential of smart software for bad actors. I think this dispersal of the cloud of unknowing I perceived among a similar academic group when I entered a third-rate university in 1962 is a step forward. Ah, progress!

“Did you hear that the Senate Committee used my testimony about artificial intelligence in their draft regulations for chatbot rules and regulations?” says the recently admitted elected official. The inmates at the prison facility laugh at the incongruity of the situation. Thanks, Microsoft Bing, you do understand the ways of white collar influence peddling, don’t you?

The write up points out:

As policymakers consider the United States’ broader biosecurity and biotechnology goals, it will be important to understand that scientific knowledge is already readily accessible with or without a chatbot.

The statement is indeed accurate. Outside the esteemed halls of foreign policy power, STM (scientific, technical, and medical) information is abundant. Some of the data are online and reasonably easy to find with such advanced tools as Yandex.com (a Russia-centric Web search system) or the more useful Chemical Abstracts data.

The write up’s revelations continue:

Consider the fact that high school biology students, congressional staffers, and middle-school summer campers already have hands-on experience genetically engineering bacteria. A budding scientist can use the internet to find all-encompassing resources.

Yes, more intellectual sunlight in the poli sci journal of record!

Let me offer one more example of groundbreaking insight:

In other words, a chatbot that lowers the information barrier should be seen as more like helping a user step over a curb than helping one scale an otherwise unsurmountable wall. Even so, it’s reasonable to worry that this extra help might make the difference for some malicious actors. What’s more, the simple perception that a chatbot can act as a biological assistant may be enough to attract and engage new actors, regardless of how widespread the information was to begin with.

Is there a step government deciders should take? Of course. It is the step that US high technology companies have been begging bureaucrats to take. Government should spell out rules for a morphing, little understood, and essentially uncontrollable suite of systems and methods.

There is nothing like regulating the present and future. Poli sci professionals believe it is possible to repaint the weird red tail on the Boeing F 7A aircraft while the jet is flying around. Trivial?

Here’s the recommendation which I found interesting:

Overemphasizing information security at the expense of innovation and economic advancement could have the unforeseen harmful side effect of derailing those efforts and their widespread benefits. Future biosecurity policy should balance the need for broad dissemination of science with guardrails against misuse, recognizing that people can gain scientific knowledge from high school classes and YouTube—not just from ChatGPT.

My take on this modest proposal is:

- Guard rails allow companies to pursue legal remedies as those companies do exactly what they want and when they want. Isn’t that why the Google “public” trial underway is essentially “secret”?

- Bad actors love open source tools. Unencumbered by bureaucracies, these folks can move quickly. In effect the mice are equipped with jet packs.

- Job matching services allow a bad actor in Greece or Hong Kong to identify and hire contract workers who may have highly specialized AI skills obtained doing their day jobs. The idea is that for a bargain price expertise is available to help smart software produce some AI infused surprises.

- Recycling the party line of a handful of high profile AI companies is what makes policy.

With poli sci professionals becoming aware of smart software, a better world will result. Why fret about livestock ownership in the glory days of what is now Cambodia? The AI stuff is here and now, waiting for the policy guidance which is sure to come even though the draft guidelines have been crafted by US AI companies?

Stephen E Arnold, November 22, 2023

Complex Humans and Complex Subjects: A Recipe for Confusion

November 22, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Disinformation is commonly painted as a powerful force able to manipulate the public like so many marionettes. However, according to Techdirt’s Mike Masnick, “Human Beings Are Not Puppets, and We Should Probably Stop Acting Like They Are.” The post refers to an in-depth Harper’s Magazine piece written by Joseph Bernstein in 2021. That article states there is little evidence to support the idea that disinformation drives people blindly in certain directions. However, social media platforms gain ad dollars by perpetuating that myth. Masnick points out:

“Think about it: if the story is that a post on social media can turn a thinking human being into a slobbering, controllable, puppet, just think how easy it will be to convince people to buy your widget jammy.”

Indeed. Recent (ironic) controversy around allegedly falsified data about honesty in the field of behavioral economics reminded Masnick of Bernstein’s article. He considers:

“The whole field seems based on the same basic idea that was at the heart of what Bernstein found about disinformation: it’s all based on this idea that people are extremely malleable, and easily influenced by outside forces. But it’s just not clear that’s true.”

So what is happening when people encounter disinformation? Inconveniently, it is more complicated than many would have us believe. And it involves our old acquaintance, confirmation bias. The write-up continues:

“Disinformation remains a real issue — it exists — but, as we’ve seen over and over again elsewhere, the issue is often less about disinformation turning people into zombies, but rather one of confirmation bias. People who want to believe it search it out. It may confirm their priors (and those priors may be false), but that’s a different issue than the fully puppetized human being often presented as the ‘victim’ of disinformation. As in the field of behavioral economics, when we assume too much power in the disinformation … we get really bad outcomes. We believe things (and people) are both more and less powerful than they really are. Indeed, it’s kind of elitist. It’s basically saying that the elite at the top can make little minor changes that somehow leads the sheep puppets of people to do what they want.”

Rather, we are reminded, each person comes with their own complex motivations and beliefs. This makes the search for a solution more complicated. But facing the truth may take us away from the proverbial lamppost and toward better understanding.

Cynthia Murrell, November 22, 2023

Turmoil in AI Land: Uncertainty R Us

November 21, 2023

This essay is the work of a dumb dinobaby. No smart software required.

It is now Tuesday, November 21, 2023. I learned this morning on the “Pivot” podcast that one of the co-hosts is the “best technology reporter.” I read a number of opinions about the high school science club approach to managing a multi-billion dollar outfit allegedly valued at lots of money last Friday, November 17, 2023, and valued at much less money today. I read some of the numerous “real news” stories on Hacker News, Techmeme, and Xitter, and learned:

- Gee, it was a mistake

- Sam AI-Man is working at Microsoft

- Sam AI-Man is not working at Microsoft

- Microsoft is ecstatic that opportunities are available

- Ilya Sutskever will become a blue-chip consultant specializing in Board-level governance

- OpenAI is open because it is business as usual in Sillycon Valley.

The AI ringmaster has issued an instruction or prompt to the smart software. The smart software does not obey. What’s happening is that not only are inputs not converted to the desired actions, the entire circus audience is not sure which is more entertaining, the software or the manager. Thanks, Microsoft Copilot. I gave up and used one of the good enough images.

“Firing Sam Altman Hasn’t Worked Out for OpenAI’s Board” reports:

Whether Altman ultimately stays at Microsoft or comes back to OpenAI, he’ll be more powerful than he was last week. And if he wants to rapidly develop and commercialize powerful AI models, nobody will be in a position to stop him. Remarkably, one of the 500 employees who signed Monday’s OpenAI employee letter is Ilya Sutskever, who has had a profound change of heart since he voted to oust Altman on Friday.

Okay, maybe Ilya Sutskever will not become a blue chip consultant. That’s okay; he is just mercurial.

Several observations:

- Smart software causes bright people to behave in sophomoric ways. I have argued for many years that many of the techno-feudalistic outfits are more like high school science clubs than run-of-the-mill high school sophomores. Intelligence coupled with a poorly developed judgment module causes some spectacular management actions.

- Poor Google must be uncomfortable as it struggles on the tenterhooks which have snagged its corporate body. Is Microsoft going to be the Big Dog in smart software? Is Sam AI-Man going to do something new to make life for Googzilla more uncomfortable than it already is? Is Google now faced with a crisis in which its flocks of legal eagles, its massive content marketing machine, and its tools for shaping content cannot do much to seize the narrative?

- Developers who have embraced the idea of OpenAI as the best partner in the world have to consider that their efforts may be for naught. Where do these wizards turn? To Microsoft and the Softie ethos? To the Zuck and his approach? To Google and its reputation for terminating services like snipers? To the French outfit with offices near some very good restaurants? (That doesn’t sound half bad, does it?)

I am not sure if Act I has ended or if the entire play has ended. After a short intermission, there will be more of something.

Stephen E Arnold, November 21, 2023

Is Your Phone Secure? Think Before Answering, Please

November 21, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I am not going to offer my observations and comments. The article, its information, and the list of companies from The Times of India’s “11 Dangerous Spywares Used Globally: Pegasus, Hermit, FinFisher and More” speak for themselves. The main point of the write up is that mobile phone security should be considered in the harsh light of digital reality. The write up provides a list of outfits and components which can be used to listen to conversations, intercept text and online activity, as well as exfiltrate geolocation data, contact lists, logfiles, and imagery. Some will say, “This type of software should be outlawed.” I have no comment.

Are there bugs waiting to compromise your mobile device? Yep. Thanks, MSFT Copilot. You have a knack for capturing the type of bugs with which many are familiar.

Here’s the list. I have alphabetized by the name of the malware and provided a possible entity name for the owner:

- Candid. Maybe a Verint product? (Believed to be another product developed by former Israeli cyber warfare professionals)

- Chrysaor. (Some believe it was created by NSO Group or NSO Group former employees)

- Dark Tequila. (Requires access to the targeted device or for the user to perform an action. More advanced methods require no access to the device nor for the user to click)

- FinFisher. Gamma Group (The code is “in the wild” and the German unit may be on vacation or working under a different name in the UK)

- Hawkeye, Predator, or Predator Pain (Organization owning the software is not known to this dinobaby)

- Hermit. RCS Lab (Does RCS mean “remote control service”?)

- Pegasus. NSO Group Pegasus (now with a new president who worked at NSA and Homeland Security)

- RATs (Remote Access Trojans) This is a general class of malware. Many variants.

- Sofacy. APT28 (allegedly)

- XKeyscore (allegedly developed by a US government agency)

Is the list complete? No.

Stephen E Arnold, November 21, 2023

EU Objects to Social Media: Again?

November 21, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Social media is something I observe at a distance. I want to highlight the information in “X Is the Biggest Source of Fake News and Disinformation, EU Warns.” Some Americans are not interested in what the European Union thinks, says, or regulates. On the other hand, the techno feudalistic outfits in the US of A do pay attention when the EU hands out reprimands, fines, and notices of auditions (not for the school play, of course).

This historic photograph shows a super smart, well paid, entitled entrepreneur letting the social media beast out of its box. Now how does this genius put the creature back in the box? Good question. Thanks, MSFT Copilot. You balked, but finally output a good enough image.

The story in what I still think of as “the capitalist tool” states:

European Commission Vice President Vera Jourova said in prepared remarks that X had the “largest ratio of mis/disinformation posts” among the platforms that submitted reports to the EU. Especially worrisome is how quickly those spreading fake news are able to find an audience.

The Forbes article noted:

The social media platforms were seen to have turned a blind eye to the spread of fake news.

I found the inclusion of this statement a grim reminder of what happens when entities refuse to perform content moderation:

“Social networks are now tailor-made for disinformation, but much more should be done to prevent it from spreading widely,” noted Mollica [a teacher at American University]. “As we’ve seen, however, trending topics and algorithms monetize the negativity and anger. Until that practice is curbed, we’ll see disinformation continue to dominate feeds.”

What is Forbes implying? Is an American corporation a “bad” actor? Is the EU barking at a dogwood, not a dog? Is digital information reshaping how established processes work?

From my point of view, putting a decades old Pandora or passel of Pandoras back in a digital box is likely to be impossible. Once social fabrics have been disintegrated by massive flows of unfiltered information, the woulda, coulda, shoulda chatter is ineffectual. X marks the spot.

Stephen E Arnold, November 21, 2023

Anti-AI Fact Checking. What?

November 21, 2023

This essay is the work of a dumb dinobaby. No smart software required.

If this effort is sincere, at least one news organization is taking AI’s ability to generate realistic fakes seriously. Variety briefly reports, “CBS Launches Fact-Checking News Unit to Examine AI, Deepfakes, Misinformation.” Aptly dubbed “CBS News Confirmed,” the unit will be led by VPs Claudia Milne and Ross Dagan. Writer Brian Steinberg tells us:

“The hope is that the new unit will produce segments on its findings and explain to audiences how the information in question was determined to be fake or inaccurate. A July 2023 research note from the Northwestern Buffett Institute for Global Affairs found that the rapid adoption of content generated via A.I. ‘is a growing concern for the international community, governments and the public, with significant implications for national security and cybersecurity. It also raises ethical questions related to surveillance and transparency.’”

Why yes, good of CBS to notice. And what will it do about it? We learn:

“CBS intends to hire forensic journalists, expand training and invest in new technology, [CBS CEO Wendy] McMahon said. Candidates will demonstrate expertise in such areas as AI, data journalism, data visualization, multi-platform fact-checking, and forensic skills.”

So they are still working out the details, but want us to rest assured they have a plan. Or an outline. Or maybe a vague notion. At least CBS acknowledges this is a problem. Now what about all the other news outlets?

Cynthia Murrell, November 21, 2023

OpenAI: What about Uncertainty and Google DeepMind?

November 20, 2023

This essay is the work of a dumb dinobaby. No smart software required.

A large number of write ups about Microsoft and its response to the OpenAI management move populate my inbox this morning (Monday, November 20, 2023).

To give you a sense of the number of poohbahs, mavens, and “real” journalists covering Microsoft’s hiring of Sam (AI-Man) Altman, I offer this screen shot of Techmeme.com taken at 11:00 am US Eastern time:

A single screenshot cannot do justice to the digital bloviating on this subject as well as related matters.

I did a quick scan because I simply don’t have the time at age 79 to read every item in this single headline service. Therefore, I admit that others may have thought about the impact of the Steve Jobs-like termination, the revolt of some AI wizards, and Microsoft’s creating a new “company” and hiring Sam AI-Man and a pride of his cohorts in the span of 72 hours (give or take time for biobreaks).

In this short essay, I want to hypothesize about how the news has been received by that merry band of online advertising professionals.

To begin, I want to suggest that the turmoil about who is on first at OpenAI sent a low voltage signal through the collective body of the Google. Frisson resulted. Uncertainty and opportunity appeared together like the beloved Scylla and Charybdis, the old pals of Ulysses. The Google found its right and left Brainiac hemispheres considering that OpenAI would experience a grave setback, thus clearing a path for Googzilla alone. Then one of the Brainiac hemispheres reconsidered and perceived a grave threat from the split. In short, the Google tipped into its zone of uncertainty.

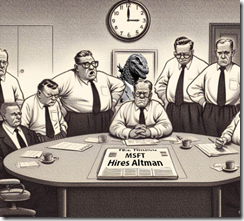

A group of online advertising experts meet to consider the news that Microsoft has hired Sam Altman. The group looks unhappy. Uncertainty is an unpleasant factor in some business decisions. Thanks Microsoft Copilot, you captured the spirit of how some Silicon Valley wizards are reacting to the OpenAI turmoil because Microsoft used the OpenAI termination of Sam Altman as a way to gain the upper hand in the cloud and enterprise app AI sector.

Then the matter appeared to shift back to the pre-termination announcement. The co-founder of OpenAI gained more information about the number of OpenAI employees who were planning to quit or, even worse, start posting on Instagram, WhatsApp, and TikTok. (X.com is no longer considered the go-to place by the in crowd.)

The most interesting development was not that Sam AI-Man would return to the welcoming arms of OpenAI. No, Sam AI-Man and another senior executive were going to hook up with the geniuses of Redmond. A new company would be formed with Sam AI-Man in charge.

As these actions unfolded, the Googlers sank under a heavy cloud of uncertainty. What if the Softies could use Google’s own open source methods, integrate rumored Microsoft-developed AI capabilities, and make good on Sam AI-Man’s vision of an AI application store?

The Googlers found themselves reading every “real news” item about the trajectory of Sam AI-Man and Microsoft’s new AI unit. The uncertainty has morphed into another January 2023 Davos moment. Here’s my take as of 2:30 pm US Eastern, November 20, 2023:

- The Google faces a significant threat when it comes to enterprise AI apps. Microsoft has a lock on law firms, the government, and a number of industry sectors. Google has a presence, but when it comes to go-to apps, Microsoft is the Big Dog. More and better AI raises the specter of Microsoft putting an effective laser defense behind its existing enterprise moat.

- Microsoft can push its AI functionality as the Azure difference. Furthermore, whether Google (or Amazon, for that matter) asserts its cloud AI is better, Microsoft can argue, “We’re better because we have Sam AI-Man.” That is a compelling argument for government and enterprise customers who cannot imagine work without Excel and PowerPoint. Put more AI in those apps, and existing customers will resist blandishments from other cloud providers.

- Google now faces an interesting problem: Its own open source code could be converted into a death ray, enhanced by Sam AI-Man, and directed at the Google. The irony of Googzilla having its left claw vaporized by its own technology is going to be more painful than Satya Nadella rolling out another Davos “we’re doing AI” announcement.

Net net: The OpenAI machinations are interesting to many companies. To the Google, the OpenAI event and the Microsoft response is like an unsuspecting person getting zapped by Nikola Tesla’s coil. Google’s mastery of high school science club management techniques will now dig into the heart of its DeepMind.

Stephen E Arnold, November 20, 2023

Google Pulls Out a Rhetorical Method to Try to Win the AI Spoils

November 20, 2023

This essay is the work of a dumb dinobaby. No smart software required.

In high school in 1958, our debate team coach yapped about “framing.” The idea was new to me, and Kenneth Camp pounded it into our debate team’s collective “head” for the four years of my high school tenure. Not surprisingly, when I read “Google DeepMind Wants to Define What Counts As Artificial General Intelligence” I jumped back in time 65 years (!) to Mr. Camp’s explanation of framing and how one can control the course of a debate with the technique.

Google should not have to use a rhetorical trick to make its case as the quantum wizard of online advertising and universal greatness. With its search and retrieval system, the company can boost, shape, and refine any message it wants. If those methods fall short, the company can slap on a “filter” or “change its rules” and deprecate certain Web sites and their messages.

But Google values academia, even if the university is one that welcomed a certain Jeffrey Epstein into its fold. (Do you remember the remarkable Jeffrey Epstein? Some of those whom he touched do, I believe.) The estimable Google is the subject of the referenced article in the MIT-linked Technology Review.

From my point of view, the big idea in the write up is, and I quote:

To come up with the new definition, the Google DeepMind team started with prominent existing definitions of AGI and drew out what they believe to be their essential common features. The team also outlines five ascending levels of AGI: emerging (which in their view includes cutting-edge chatbots like ChatGPT and Bard), competent, expert, virtuoso, and superhuman (performing a wide range of tasks better than all humans, including tasks humans cannot do at all, such as decoding other people’s thoughts, predicting future events, and talking to animals). They note that no level beyond emerging AGI has been achieved.

Shades of high school debate practice and the chestnuts scattered about the rhetorical camp fire as John Schunk, Jimmy Bond, and a few others (including the young dinobaby me) learned how one can set up a frame, populate the frame with logic and facts supporting the frame, and then point out during rebuttal that our esteemed opponents were not able to dent our well formed argumentative frame.

Is Google the optimal source for a definition of artificial general intelligence, something which does not yet exist? Is Google’s definition more useful than a science fiction writer’s or a scene from a Hollywood film?

Even the trusted online source points out:

One question the researchers don’t address in their discussion of _what_ AGI is, is _why_ we should build it. Some computer scientists, such as Timnit Gebru, founder of the Distributed AI Research Institute, have argued that the whole endeavor is weird. In a talk in April on what she sees as the false (even dangerous) promise of utopia through AGI, Gebru noted that the hypothetical technology “sounds like an unscoped system with the apparent goal of trying to do everything for everyone under any environment.” Most engineering projects have well-scoped goals. The mission to build AGI does not. Even Google DeepMind’s definitions allow for AGI that is indefinitely broad and indefinitely smart. “Don’t attempt to build a god,” Gebru said.

I am certain it is an oversight, but the telling comment comes from an individual who may have spoken out about Google’s systems and methods for smart software.

Mr. Camp, the high school debate coach, explains how a rhetorical trope can gut even those brilliant debaters from other universities. (Yes, Dartmouth, I am still thinking of you.) Google must have had a “coach” skilled in the power of framing. The company is making a bold move to define that which does not yet exist and something whose functionality is unknown. Such is the expertise of the Google. Thanks, Bing. I find your use of people of color interesting. Is this a pre-Sam ouster or a post-Sam ouster function?

What do we learn from the write up? In my view of the AI landscape, we are given some insight into Google’s belief that its rhetorical trope packaged as content marketing within an academic-type publication will lend credence to the company’s push to generate more advertising revenue. You may ask, “But won’t Google make oodles of money from smart software?” I concede that it will. However, the big bucks for the Google come from those willing to pay for eyeballs. And that, dear reader, translates to advertising.

Stephen E Arnold, November 20, 2023

The Confusion about Social Media, Online, and TikToking the Day Away

November 20, 2023

This essay is the work of a dumb dinobaby. No smart software required.

This dinobaby is not into social media. Those who are into it present interesting, often orthogonal views of the likes of Facebook, Twitter, and Telegram public groups.

“Concerning: Excessive Screen Time Linked to Lower Cognitive Function” reports:

In a new meta-analysis of dozens of earlier studies, we’ve found a clear link between disordered screen use and lower cognitive functioning.

I knew something was making it more and more difficult for young people to make change. In a remarkable demonstration of cluelessness, my wife told me that the clerk at our local drug store did not know what a half dollar was. My wife said, “I had to wait for the manager to come and tell the clerk that it was the same as 50 pennies.” There are other clues to the deteriorating mental acuity of some individuals. Examples range from ingesting trank to driving the wrong way on an interstate highway, a practice not unknown in the Commonwealth of Kentucky.

The debate about social media, online content consumption, and TikTok addiction continues. I find it interesting how allegedly informed people interpret data about online differently. Don’t these people watch young people doing their jobs? Thanks, MSFT Copilot. You responded despite the Sam AI-Man Altman shock.

I understand that there are different ways to interpret data. For instance, consider “A Surprising Feature of IQ Has Actually Improved over the Past 30 Years.” That write up asserts:

Researchers from the University of Vienna in Austria dug deep into the data from 287 previously studied samples, covering a total of 21,291 people from 32 countries aged between 7 and 72, across a period of 31 years (1990 to 2021).

Each individual had completed the universally recognized d2 Test of Attention for measuring concentration, which when taken as a whole, showed a moderate rise in concentration levels over the decades, suggesting adults are generally better able to focus compared with people more than 30 years ago.

I have observed this uplifting benefit of social media, screen time, and swiping. A recent example is that a clerk at our local organic food market was intent on watching a video on his mobile phone. Several people were talking softly as they waited for the young person to put down his phone and bag the groceries. One intrusive and bold person spoke up and said, “Young man, would you put down your phone and put the groceries in the sack?” The young cognitively improved individual ignored her. I then lumbered forward like a good dinobaby and said, “Excuse me, I think you need to do your job.” When he became aware of my standing directly in front of him, he put down his phone. What concentration!

Is social media and its trappings good or bad? Wait, I need to check my phone.

Stephen E Arnold, November 20, 2023