Gotcha, Googzilla: Bing Channels GoTo, Overture, and Yahoo with Smart Software

April 5, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I read “That Was Fast! Microsoft Slips Ads into AI-Powered Bing Chat.” Not exactly a surprise? No, nope. Microsoft now understands that selling access to eyeballs to those who want to put a message in front of them generates money. Google is the poster child of Madison Avenue on steroids.

The write up says:

We are also exploring additional capabilities for publishers including our more than 7,500 Microsoft Start partner brands. We recently met with some of our partners to begin exploring ideas and to get feedback on how we can continue to distribute content in a way that is meaningful in traffic and revenue for our partners.

Just 7,500? Why not more? Do you think Microsoft will follow the Google playbook, just enhanced with the catnip of smart software? If you respond, “yes,” you are on the monetization supersonic jet. Buckle up.

Here are my predictions based on what little I know about Google’s “legacy”:

- Money talks; therefore, the ad filtering system will be compromised by those with the access needed to get ads into the “system”. (Do you believe that software and human filtering systems are perfect? I have a bridge to sell you.)

- The content will be warped by ads. This is the gravity principle: Get too close to big money and the good intentions get sucked into the advertisers’ universe. Maybe it is roses and Pepsi Cola in the black hole, but I know it will not contain good intentions with mustard.

- The notion of a balanced output, objectivity, or content selected by a smart algorithm will be fiddled. How do I know? I would point to the importance of payoffs in 1950s rock and roll radio and the advertising business. How about a week on a yacht? Okay, I will send details. No strings, of course.

- And guard rails? Yep, keep the content that makes advertisers, particularly big advertisers, happy. Block or suppress the content that makes advertisers, particularly big advertisers, unhappy.

Do I have other predictions? Oh, yes. Why not formulate your own ideas after reading “BingBang: AAD Misconfiguration Led to Bing.com Results Manipulation and Account Takeover.” Bingo!

Net net: Microsoft has an opportunity to become the new Google. What could go wrong?

Stephen E Arnold, April 5, 2023

Thomson Reuters, Where Is Your Large Language Model?

April 3, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I have to give the lovable Bloomberg a pat on the back. Not only did the company explain its large language model for finance, the end notes to the research paper are fascinating. One cited document has 124 authors. Why am I mentioning the end notes? The essay is 65 pages in length, and the notes consume 25 pages. Even more interesting is that the “research” apparently involved nVidia and everyone’s favorite online bookstore, Amazon and its Web services. No Google. No Microsoft. No Facebook. Just Bloomberg and the tenure-track researcher’s best friend: The end notes.

The article with a big end … note presents this title: “BloombergGPT: A Large Language Model for Finance.” I would have titled the document with its chunky equations “A Big Headache for Thomson Reuters,” but I know most people are not “into” the terminal rivalry, the analytics rivalry, Thomson Reuters’ Fancy Dancing with Palantir Technologies, or the “friendly” competition in which the two firms have engaged for decades.

Smart software score appears to be: Bloomberg 1, Thomson Reuters zippo. (Am I incorrect? Of course, but this beefy effort, the mind-boggling end notes, and the presence of Johns Hopkins make it clear that Thomson Reuters has some marketing to do.) What Microsoft Bing has done to the Google may be exactly what Bloomberg wants to do to Thomson Reuters: Make money on the next big thing and marginalize a competitor. Bloomberg obviously wants more than the ageing terminal business and the fame achieved on free TV’s Bloomberg TV channels.

What is the Bloomberg LLM or large language model? Here’s what the paper asserts. Please, keep in mind that essays stuffed with mathy stuff and researchy data are often non-reproducible. Heck, even the president of Stanford University took shortcuts. Plus more than half of the research results my team has tried to reproduce end up in Nowheresville, which is not far from my home in rural Kentucky:

we present BloombergGPT, a 50 billion parameter language model that is trained on a wide range of financial data. We construct a 363 billion token dataset based on Bloomberg’s extensive data sources, perhaps the largest domain-specific dataset yet, augmented with 345 billion tokens from general purpose datasets. We validate BloombergGPT on standard LLM benchmarks, open financial benchmarks, and a suite of internal benchmarks that most accurately reflect our intended usage. Our mixed dataset training leads to a model that outperforms existing models on financial tasks by significant margins without sacrificing performance on general LLM benchmarks.
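For readers who like to see the numbers, here is a back-of-the-envelope sketch in Python. It uses only the two dataset sizes in the quotation and assumes they are simply added together. It says nothing about how many tokens the model actually processed during training, which the quoted abstract does not state.

```python
# Dataset sizes as stated in the BloombergGPT abstract, in billions of tokens.
financial_tokens = 363  # Bloomberg's domain-specific financial dataset
general_tokens = 345    # general-purpose datasets

# Assumption: the training corpus is just the sum of the two datasets.
total_tokens = financial_tokens + general_tokens
financial_share = financial_tokens / total_tokens

print(f"Total corpus: {total_tokens} billion tokens")
print(f"Financial share: {financial_share:.1%}")
```

Run as written, it reports a 708 billion token corpus with a financial share of about 51.3 percent. Roughly half of the “lots of data” is Bloomberg’s own.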

My interpretation of this quotation is:

- Lots of data

- Big model

- Informed financial decisions

“Informed financial decisions” means to me that a crazed broker will give this Bloomberg thing a whirl in the hope of getting a huge bonus, a corner office which is never visited, and fame at the New York Athletic Club.

Will this happen? Who knows.

What I do know is that Thomson Reuters’ executives in London, New York, and Toronto are doing some humanoid-centric deep thinking about Bloomberg. And that may be what Bloomberg really wants, because Bloomberg may be ahead. Imagine that: Bloomberg ahead of the “trust” outfit.

Stephen E Arnold, April 3, 2023

The Scramblers of Mountain View: The Google AI Team

April 3, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I don’t know about you, but if I were a Googler (which I am not), I would pay attention to Google wizard and former Alta Vista wizard Jeff Dean. This individual was, I have heard, involved in the dust-up about Timnit Gebru’s stochastic parrot paper. (I love that metaphor. A parrot.) Dr. Dean has allegedly invested in the smart search outfit Perplexity. I found this interesting because it sends a faint signal from the bowels of Googzilla. Bet hedging? Admission that Google’s AI is lacking? A need for Dr. Dean to prepare to find his future elsewhere?

Why am I mentioning a Googler betting cash on one of the many Silicon Valley type outfits chasing the ChatGPT pot of gold? I read “Google Bard Is Switching to a More Capable Language Model, CEO Confirms.” The write up explains:

Bard will soon be moving from its current LaMDA-based model to larger-scale PaLM datasets in the coming days… When asked how he felt about responses to Bard’s release, Pichai commented: “We clearly have more capable models. Pretty soon, maybe as this goes live, we will be upgrading Bard to some of our more capable PaLM models, so which will bring more capabilities, be it in reasoning, coding.”

That’s a hoot. I want to highlight another statement: “Pichai claims not to be worried about how fast Google’s AI develops compared to its competitors.” That’s a great line for the Sundar and Prabhakar Comedy Show. Isn’t Google in Code Red mode? Why? Not to worry. Isn’t Google losing the PR and marketing battle to the Devils from Redmond? Why? Not to worry. Hasn’t Google summoned Messrs. Brin and Page to the den of Googzilla to help out with AI? Why? Not to worry.

Then a Googler invests in Perplexity. Right. Soon. Moving. More capable.

Net net: Dr. Dean’s investment may be more significant than the Code Red silliness.

Stephen E Arnold, April 3, 2023

Laws, Rules, Regulations for Semantic AI (No, I Do Not Know What Semantic AI Means)

March 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I am not going to dispute the wisdom and insight in the Microsoft essay “Consider the Future of This Decidedly Semantic AI.” The author is Xoogler Sam Schillace, CVP or corporate vice president and now a bigly wizard at the world’s pre-eminent secure software firm. However, I am not sure to what the “this” refers. Let’s assume that it is the Bing thing and not the Google thing although some plumbing may be influenced by Googzilla’s open source contributions to “this.” How would you like to disambiguate that statement, Mr. Bing?

The essay sets forth some guidelines or bright, white lines in the lingo of the New Age search and retrieval fun house. The “Laws” number nine. I want to note some interesting word choices. The reason for my focus on these terms is that, taken as a group, they reveal more than I first thought.

Here are the terms I circled in True Blue (a Microsoft color selected for the blue screen of death):

- Intent. Rules 1 and 3. At first glance, this is the user’s intent. However, what if the intent is hard wired into a certain direction in the workflow of the smart software? Intent in separate parts of a model can and will have a significant impact on how the model arrives at certain decisions. Isn’t that a thumb on the scale?

- Leverage. Rule 2. Okay, some type of Archimedes’ truism about moving the world I think. Upon rereading the sentence in which the word is used, I think it means that old-school baloney like precision and recall are not going to move anything. The “this” world has no use for delivering on point information using outmoded methods like string matching or Boolean statements. Plus, the old-school methods are too expensive, slow, and dorky.

- Right. Rule 3. Don’t you love it when an expert explains that a “right” way to solve a problem exists? Why, then, did I have to suffer through calculus classes in which expressions had to be solved in different ways to get the “right” answer? Yeah, who is in charge here? Isn’t it wonderful to be a sophomore in high school again?

- Brittle. Rule 4. Yep, peanut brittle or an old-school light bulb. Easily broken, cut fingers, and maybe blinded? Avoid brittleness by “not hard coding anything.” Is that why Microsoft software is so darned stable? How about those email vulnerabilities in the new smart Outlook?

- Lack. Rule 5. Am I correct in interpreting the use of the word “lack” as a blanket statement that the “this” is just not very good? I do love the reference to GIGO; that is, garbage in, garbage out. What if that garbage is generated by Bard, the digital phantasm of ethical behavior?

- Uncertainty. Rule 6. Hello, welcome to the wonderful world of statistical Fancy Dancing. Is that “answer” right? Sure, if it matches the “intent” of the developer and the smart software helping that individual. I love it when smart software is recursive and learns from errors, at least known errors.

- Protocol. Rule 7. According to the smart search system You.com, a protocol is:

In computer networking, a protocol refers to a set of rules and guidelines that define a standard way of communicating data over a network. It specifies the format and sequence of messages that are exchanged between the different devices on the network, as well as the actions that are taken when errors occur or when certain events happen.

Yep, more rules and a standard, something universal. I think I see the starred item on Microsoft’s agenda: The operating system for smart software in business, the government, and education.

- Hard. Rule 8. Yes, Microsoft is doing intense, difficult work. The task is to live up to the marketing unleashed at the World Economic Forum. Whew. Time for a break.

- Pareidolia. Rule 9. The word means, roughly, that some people see things that aren’t there. Hello, Blake Lemoine, please. Oh, he’s on a date with a smart avatar. Okay, please, tell him I called. Also, some people may see a certain human quality in the actions of their French bulldog.

If we step back and view these words in the context of the Microsoft view of semantic AI, can we see an unintentional glimpse into the inner workings of the company’s smart software? I think so. Do you see a shadowy figure eager to dominate while saying, “Ah, shucks, we’re working hard at an uncertain task. Our intent is to leverage what we can to make money.” I do.

Stephen E Arnold, March 31, 2023

Telegraph Says to Google: Duh Duh Duh Dweeb Dweeb Dweeb Duh Duh Duh

March 29, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid. (The anigif is from https://gifer.com/en/Vea.)


That pattern of three short taps, three long taps, and three more short taps strikes me as “Duh duh duh Dweeb dweeb dweeb duh duh duh.” But I did not get my scout badge in Morse code, so what do I know about real Titanic-type messages? SOS, SOS, SOS! I think that today the tones mean “Save Our Search.”
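For the non-scouts in the audience, three short, three long, three short is the International Morse Code distress signal. Here is a minimal Python sketch. The two-letter lookup table is deliberately tiny (an illustration, not a full Morse encoder), and the function names are made up for this example.

```python
# A deliberately tiny slice of International Morse Code: only the
# letters needed for the distress call.
MORSE = {"S": "...", "O": "---"}


def encode(word: str) -> str:
    """Encode a word letter by letter, with a space between letters."""
    return " ".join(MORSE[letter] for letter in word.upper())


def encode_prosign(word: str) -> str:
    """Encode a word as one unbroken signal, with no gaps between letters."""
    return "".join(MORSE[letter] for letter in word.upper())


print(encode("SOS"))          # ... --- ...
print(encode_prosign("SOS"))  # ...---...
```

Operators actually send SOS as one run of dots and dashes without the usual pauses between letters, which is why the sketch includes the run-together version. Either way: short, short, short; long, long, long; short, short, short.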

I can decode the Telegraph newspaper article “Google’s Code Red Crisis Grows As ChatGPT Races Ahead.” I am reasonably certain the esteemed “real news” outfit believes that the Google, the destroyer of newspaper advertising revenue, is thrashing around in Lake Tahoe-scale snowdrifts. If I recall the teachings of my high school biology teacher in 1962, Googzillas do not thrive in cold climates. I suppose I could ask Bing.com or You.com, but I am thinking, why bother?

The article states:

The company has been left scrambling to react to the surprise success of ChatGPT, which launched to the public last November. Google executives have labeled it a “code red” problem and co-founders Sergei Brin and Larry Page have emerged from semi-retirement to hold meetings with top AI execs to thrash out a response. ChatGPT presents an existential threat to Google’s core business.

The existential trope is a bit of a stretch, but the main point is clear. The Google is struggling in terms of real news’s perception of the beast. Reality does not intrude on some media tropes. Call the Google a dinosaur with enough clicks, and the perceived truth smudges the reality of Google’s chokehold on online advertising… for now.

The article adds:

Speaking to The Telegraph, Krawczyk [a senior director at Google] said: “There is a separate effort for how generative models will look in search; that is not what you see here. “It [smart software] is a very early stage of this technology and we really want to make sure right now we are focused on delivering the right amount of quality.”

Yes, quality. Those Google search results are fascinating because they are usually wide of the user’s query. How wide? Wide enough to chew through the advertising backlog. The idea of precision and recall, time stamps on citations, and the elimination of the totally useless Boolean operators really delivers what Google considers quality: Revenue. The right amount of quality means hitting the revenue targets needed to float the boat.

Google’s smart software Bard-edition has not yet reached its Orkut or Dodgeball moment. Will it? At this time, I think it is important to keep in mind that if one wants to generate clicks, one must buy Google advertising. Until smart software proves that it can mint money, “real news” outfits may want to find a way to tell the Emperor of Ads, “You know. You look really great in that puffy coat. Isn’t it the same one the Pope was showing off the other day.”

There are those annoying SOS tones again: Duh Duh Duh Dweeb Dweeb Dweeb Duh Duh Duh. Are Sundar and Prabhakar transmitting again?

Stephen E Arnold, March 29, 2023

Microsoft: You Cannot Learn from Our Outputs

March 28, 2023

![Vea[4] Vea[4]](http://arnoldit.com/wordpress/wp-content/uploads/2023/03/Vea4_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I read “Microsoft Reportedly Doesn’t Want Other AI Chatbots to Use Its Bing Search Data.” I don’t know much about smart software or smart anything for that matter. The main point of the article is that Microsoft Bing’s outputs must not be used to train other smart software. For me, that’s like a teacher saying to me in the fifth grade, “Don’t copy from the people in class who get D’s and F’s.” Don’t worry. I knew from whom to copy, right, Linda Mae?

The article reports:

…Microsoft has told them [two unnamed smart software outfits] if they use its Bing API to power their own chatbots, they may cancel their contracts and pull their Bing search support.

Some search engines give users the impression that their services are doing primary Web crawling. Does this sound familiar, DuckDuckGo and Neeva? As these search vendors scramble to come up with a solution to their hunger for actual cash money (Does this sound familiar, Kagi?), the vendors want to surf on Microsoft’s index. Why not? Microsoft prior to ChatGPT did not have what I would call a Google-scale index. Sure, I could find information about that icon of family togetherness Alex Murdaugh, but less popular subjects were often a bit shallow.

I find it interesting that a company which sucks in content generated by humans like moi, the dinobaby, does so without asking, paying, or even thinking about me keyboarding in rural Kentucky. Now that same outstanding company wants to prevent others from using the Microsoft derivative system for a non-authorized use.

I love it when Silicon Valley think reaches the logical conclusions of MBA think seasoned with paranoia.

Stephen E Arnold, March 28, 2023

The image is from https://gifer.com/en/Vea.

More Impressive Than Winning at Go: WolframAlpha and ChatGPT

March 27, 2023

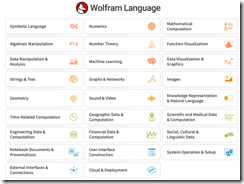

For a rocket scientist, Stephen Wolfram and his team are reasonably practical. I want to call your attention to this article on Dr. Wolfram’s Web site: “ChatGPT Gets Its Wolfram Superpowers.” In the essay, Dr. Wolfram explains how ChatGPT can be augmented with the computational system behind WolframAlpha. The examples in his write up are interesting and instructive. What can the WolframAlpha ChatGPT plug-in do? Quite a bit.

I found this passage interesting:

ChatGPT + Wolfram can be thought of as the first truly large-scale statistical + symbolic “AI” system…. in ChatGPT + Wolfram we’re now able to leverage the whole stack: from the pure “statistical neural net” of ChatGPT, through the “computationally anchored” natural language understanding of Wolfram|Alpha, to the whole computational language and computational knowledge of Wolfram Language.

The WolframAlpha module works with these information needs:

The Wolfram Language module does some numerical cartwheels, and I was impressed. I am not sure how high school calculus teachers will respond to the WolframAlpha-ChatGPT mashup, however. Here’s a snapshot of what Wolfram Language can do at this time:

One helpful aspect of Dr. Wolfram’s essay is that he notes differences between an earlier version of ChatGPT and the one used for the mashup. Navigate to this link and sign up for the plug-in.

Stephen E Arnold, March 27, 2023

Smart Software: Reproducibility Is Not Part of the Game Plan, Thank You

March 24, 2023

Note: The essay below has been crafted by a real, still-alive dinobaby. No smart software required, thank you.

I love it when outfits suggest one thing and do another. I was tempted to write about some companies’ enthusiastic support for saving whales and their even more intense interest in blocking the ban on “forever chemicals.” But whales are one thing and smart software is another.

Specifically, the once-open OpenAI is allegedly embracing the proprietary and trade secret approach to technology. “OpenAI’s Policies Hinder Reproducible Research on Language Models” reports:

On Monday [March 20, 2023], OpenAI announced that it would discontinue support for Codex by Thursday. Hundreds of academic papers would no longer be reproducible: independent researchers would not be able to assess their validity and build on their results. And developers building applications using OpenAI’s models wouldn’t be able to ensure their applications continue working as expected.

The article elaborates on this main idea.

Several points:

- Reproducibility means that specific recipes have to be known and then tested. Who in Silicon Valley wants this “knowledge seeping” to take place when demand for the know-how is, as some may say, doing the hockey stick chart thing?

- Good intentions are secondary to money, power, and control. The person or persons who set thresholds, design filters, orchestrate what content and when is fed into a smart system, and similar useful things want their fingers on the buttons. Outsiders in academe or another outfit eager to pirate expertise? Nope.

- Reproducibility creates opportunities for those not in the leadership outfit to find themselves criticized for bias, manipulation, and propagandizing. Who wants that other than a public relations firm?

Net net: One cannot reproduce much flowing from today’s esteemed research outfits. Should I mention the president of Stanford University as the poster person for intellectual pogo stick hopping? Oh, I just did.

Stephen E Arnold, March 24, 2023

TikTok: Some Interesting Assertions

March 22, 2023

Note: This essay is the work of a real, still-living dinobaby. I am too dumb to use smart software.

I read the “testimony” posted by someone at the House of Representatives. No, the document did not include, “Congressman, thank you for the question. I don’t have the information at hand. I will send it to your office.” As a result, the explanation reflects hand crafting by numerous anonymous wordsmiths. Singapore. Children. Everything is Supercalifragilisticexpialidocious. The quip “NSA to go” is shorter and easier to say.

Therefore, I want to turn my attention to the newspaper in the form of a magazine. The Economist published “How TikTok Broke Social Media.” Great Economist stuff! When I worked at a blue chip consulting outfit in the 1970s, one had to have read the publication. I looked at help wanted ads and the tech section, usually a page or two. The rest of the content was MBA speak, and I was up to my ears in that blather from the numerous meetings through which I suffered.

With modest enthusiasm I worked my way through the analysis of social media. I circled several paragraphs and noticed one big thing: the phrase “broke social media.” Social media is, in my opinion, immune to breaking. The reason is that online services are what I call “ghost like.” Sure, there is one service, which may go away. Within a short span of time, like eight-year-olds playing amoeba soccer, another gains traction, picks up users, and evolves sticky services. Killing social media is like shooting ping pong balls into a Tesla-sized blob of Jell-O, an early form of the morphing Terminator robot. In short, the Jell-O keeps on quivering, sometimes for a long, long time, judging from my mother’s ability to make one Jell-O dessert and keep serving it for weeks. Then there was another one. Thus, the premise of the write up is wrong.

I do want to highlight one statement in the essay:

The social apps will not be the only losers in this new, trickier ad environment. “All advertising is about what the next-best alternative is,” says Brian Wieser of Madison and Wall, an advertising consultancy. Most advertisers allocate a budget to spend on ads on a particular platform, he says, and “the budget is the budget”, regardless of how far it goes. If social-media advertising becomes less effective across the board, it will be bad news not just for the platforms that sell those ads, but for the advertisers that buy them.

My view is shaped by more than 50 years in the online information business. New forms of messaging and monetization are enabled by technology. One example is a thought experiment: What will an advertiser pay to influence the output of a content generator infused with smart software? I have firsthand information that one company is selling AI-generated content specifically to influence what appears when a product is reviewed. The technique involves automation, a carousel of fake personas (sockpuppets to some), and carefully shaped inputs to the content generation system. Now is this advertising like a short video? Sure, because the output can be in the form of images or a short machine-generated video using machine-generated “real” people. Is this type of “advertising” going to morph and find its way into the next Discord or Telegram public user group?

My hunch is that this type of conscious manipulation and automation is what can be conceptualized as “spawn of the Google.”

Net net: Social media is not “broken.” Advertising will find a way… because money. Heinous psychological manipulation. Exploited by big companies. Absolutely.

Stephen E Arnold, March 22, 2023

Stanford: Llama Hallucinating at the Dollar Store

March 21, 2023

Editor’s Note: This essay is the work of a real, and still alive, dinobaby. No smart software involved with the exception of the addled llama.

What happens when folks at Stanford University use the output of OpenAI to create another generative system? First, a blog article appears; for example, “Stanford’s Alpaca Shows That OpenAI May Have a Problem.” Second, I am waiting for legal eagles to take flight. Some may already be aloft and circling.

A hallucinating llama which confused grazing on other wizards’ work with munching on mushrooms. The art was a creation of ScribbledDiffusion.com. The smart software suggests the llama is having a hallucination.

What’s happening?

The model trained from OWW or Other Wizards’ Work mostly works. The gotcha is that using OWW without any silly worrying about copyrights was cheap. According to the write up, the total (excluding wizards’ time) was $600.

The article pinpoints the issue:

Alignment researcher Eliezer Yudkowsky summarizes the problem this poses for companies like OpenAI: “If you allow any sufficiently wide-ranging access to your AI model, even by paid API, you’re giving away your business crown jewels to competitors that can then nearly-clone your model without all the hard work you did to build up your own fine-tuning dataset.” What can OpenAI do about that? Not much, says Yudkowsky: “If you successfully enforce a restriction against commercializing an imitation trained on your I/O – a legal prospect that’s never been tested, at this point – that means the competing checkpoints go up on BitTorrent.”

I love the rapid rise in smart software uptake and now the snappy shift to commoditization. The VCs counting on big smart software payoffs may want to think about why the llama in the illustration looks as if synapses are forming new, low cost connections. Low cost as in really cheap I think.

Stephen E Arnold, March 21, 2023