AI That Sort of, Kind of Did Not Work: Useful Reminders

April 24, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I read “Epic AI Fails. A List of Failed Machine Learning Projects.” My hunch is that a write up suggesting that smart software may disappoint in some cases is not going to be a popular topic. I can hear the pooh-poohs now: “The examples used older technology.” And “Our system has been engineered to avoid that problem.” And “Our Large Language Model uses synthetic data which improves performance and the value of system outputs.” And “We have developed a meta-layer of AI which integrates multiple systems in order to produce a more useful response.”

Did I omit any promises other than “The check is in the mail” or “Our customer support team will respond to your call immediately, 24×7, and with an engineer, not a smart chatbot, because humans, you know”?

The article from Analytics India, an online publication, provides some color on interesting flops; specifically:

- Amazon’s recruitment system. Think discrimination against females. Amazon’s Rekognition system and its identification of elected officials as criminals. Wait. Maybe those IDs were accurate?

- Covid 19 models. Moving on.

- Google and the diabetic retinopathy detection system. The marketing sounded fine. Candy for breakfast? Sure, why not?

- OpenAI’s Samantha. Not as crazy as Microsoft Tay but in the ballpark.

- Microsoft Tay. Yeah, famous self instruction in near real time.

- Sentient Investment AI Hedge Fund. Your retirement savings? There are jobs at Wal-Mart, I think.

- Watson. Wow. Cognitive computing and Jeopardy.

The author takes a less light-hearted approach than I. It is a useful list with helpful reminders that it is easier to write tweets and marketing collateral than to deliver smart software that lives up to the sales confections.

Stephen E Arnold, April 24, 2023

Google Panic: Just Three Reasons?

April 20, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I read tweets, heard from colleagues, and received articles emailed to me about Googlers’ Bard disgruntlement. In my opinion, Laptop Magazine’s summary captures the gist of the alleged wizard annoyance: “Bard: 3 Reasons Why the Google Staff Hates the New ChatGPT Rival.”

I want to sidestep the word “hate.” With 100,000 or so employees, a hefty chunk of those living in Google Land will love Bard. Other Google staff won’t care because optimizing a cache function for servers in Brazil is a world apart. The result is a squeaky cart with more squeaky wheels than a steam engine built in 1840.

The three trigger points are, according to the write up:

- Google Bard outputs that are incorrect. The example provided is that Bard explains how to crash a plane when the Bard user wants to land the aircraft safely. So stupid.

- Google (not any employees mind you) is “indifferent to ethical concerns.” The example given references Dr. Timnit Gebru, my favorite Xoogler. I want to point out that Dr. Jeff Dean does not have her on this weekend’s dinner party guest list. So unethical.

- Bard is flawed because Google wizards had to work fast. This is the outcome of the sort of bad judgment which has been the hallmark of Google management for some time. Imagine. Work. Fast. Google. So haste makes waste.

I want to point out that there is one big factor influencing Googzilla’s mindless stumbling and snorting. The headline of the Laptop Magazine article presents the primum mobile. Note the buzzword/sign “ChatGPT.”

Google is used to being — well, Googzilla — and now an outfit which uses some Google goodness is in the headline. Furthermore, the headline calls attention to Google falling behind ChatGPT.

Googzilla is used to winning (whether in patent litigation or in front of incredibly brilliant Congressional questioners). Now even Laptop Magazine explains that Google is not getting the blue ribbon in this particular, over-hyped but widely followed race.

That’s the Code Red. That is why the Paris presentation was a hoot. That is why the Sundar and Prabhakar Comedy Tour generates chuckles when jokes include “will,” “working on,” “coming soon” as part of the routine.

Once again, I am posting this from the 2023 National Cyber Crime Conference. Not one of the examples we present is from Google, its systems, or its assorted innovation / acquisition units.

Googzilla for some is not in the race. And if the company is in the ChatGPT race, Googzilla has yet to cross the finish line.

That’s the Code Red. No PR, no Microsoft marketing tsunami, and no love for what may be a creature caught in a heavy winter storm. Cold, dark, and sluggish.

Stephen E Arnold, April 26, 2023

Sequoia on AI: Is The Essay an Example of What Informed Analysis Will Be in the Future?

April 10, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I read an essay produced by the famed investment outfit Sequoia. Its title: “Generative AI: A Creative New World.” The write up contains buzzwords, charts, a modern version of a list, and this fascinating statement:

This piece was co-written with GPT-3. GPT-3 did not spit out the entire article, but it was responsible for combating writer’s block, generating entire sentences and paragraphs of text, and brainstorming different use cases for generative AI. Writing this piece with GPT-3 was a nice taste of the human-computer co-creation interactions that may form the new normal. We also generated illustrations for this post with Midjourney, which was SO MUCH FUN!

I loved the capital letters and the exclamation mark. Does smart software do that in its outputs?

I noted one other passage which caught my attention; to wit:

The best Generative AI companies can generate a sustainable competitive advantage by executing relentlessly on the flywheel between user engagement/data and model performance.

I understand “relentlessly.” To be honest, I don’t know about a “sustainable competitive advantage” or user engagement/data model performance. I do understand the Amazon flywheel, but my understanding is that it is slowing and maybe wobbling a bit.

My take on the passage in purple, as in purple prose, is that “best” AI depends not on accuracy, lack of bias, or transparency. Success comes from users and how well the system performs. “Perform” is ambiguous. My hunch is that the Sequoia smart software (only version 3) and the super smart Sequoia humanoids were struggling to express why a venture firm is having “fun” with a bit of B-school teaming: money.

The word “money” does not appear in the write up. The phrase “economic value” appears twice in the introduction to the essay. No reference to “payoff.” No reference to “exit strategy.” No use of the word “financial.”

Interesting. Exactly how does a money-centric firm write about smart software without focusing on the financial upside in a quite interesting economic environment?

I know why smart software misses the boat. It’s good with deterministic answers for which enough information is available to train the model to produce what seem like coherent answers. Maybe the smart software used by Sequoia was not clued in to the reports about Sequoia’s explanations of its winners and losers? Maybe the version of the smart software is not up to the tough subject on which the Sequoia MBAs sought guidance?

On the other hand, maybe Sequoia did not think through what should be included in a write up by a financial firm interested in generating big payoffs for itself and its partners.

Either way, the essay seems like a class project which is “good enough.” The creative new world lacks the force that through the green fuse drives the cash.

Stephen E Arnold, April 10, 2023

Thomson Reuters, Where Is Your Large Language Model?

April 3, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I have to give the lovable Bloomberg a pat on the back. Not only did the company explain its large language model for finance, the end notes to the research paper are fascinating. One cited document has 124 authors. Why am I mentioning the end notes? The essay is 65 pages in length, and the notes consume 25 pages. Even more interesting is that the “research” apparently involved nVidia and everyone’s favorite online bookstore, Amazon and its Web services. No Google. No Microsoft. No Facebook. Just Bloomberg and the tenure-track researcher’s best friend: The end notes.

The article with a big end … note presents this title: “BloombergGPT: A Large Language Model for Finance.” I would have titled the document with its chunky equations “A Big Headache for Thomson Reuters,” but I know most people are not “into” the terminal rivalry, the analytics rivalry, the Thomson Reuters Fancy Dancing with Palantir Technologies, or the “friendly” competition in which the two firms have engaged for decades.

Smart software score appears to be: Bloomberg 1, Thomson Reuters zippo. (Am I incorrect? Of course, but this beefy effort, the mind-boggling end notes, and the presence of Johns Hopkins make it clear that Thomson Reuters has some marketing to do.) What Microsoft Bing has done to the Google may be exactly what Bloomberg wants to do to Thomson Reuters: Make money on the next big thing and marginalize a competitor. Bloomberg obviously wants more than the ageing terminal business and the fame achieved on free TV’s Bloomberg TV channels.

What is the Bloomberg LLM or large language model? Here’s what the paper asserts. Please keep in mind that essays stuffed with mathy stuff and researchy data are often non-reproducible. Heck, even the president of Stanford University took short cuts. Plus more than half of the research results my team has tried to reproduce end up in Nowheresville, which is not far from my home in rural Kentucky:

we present BloombergGPT, a 50 billion parameter language model that is trained on a wide range of financial data. We construct a 363 billion token dataset based on Bloomberg’s extensive data sources, perhaps the largest domain-specific dataset yet, augmented with 345 billion tokens from general purpose datasets. We validate BloombergGPT on standard LLM benchmarks, open financial benchmarks, and a suite of internal benchmarks that most accurately reflect our intended usage. Our mixed dataset training leads to a model that outperforms existing models on financial tasks by significant margins without sacrificing performance on general LLM benchmarks.

My interpretation of this quotation is:

- Lots of data

- Big model

- Informed financial decisions.

“Informed financial decisions” means to me that a crazed broker will give this Bloomberg thing a whirl in the hope of getting a huge bonus, a corner office which is never visited, and fame at the New York Athletic Club.

Will this happen? Who knows.

What I do know is that Thomson Reuters’ executives in London, New York, and Toronto are doing some humanoid-centric deep thinking about Bloomberg. And that may be what Bloomberg really wants because Bloomberg may be ahead. Imagine that: Bloomberg ahead of the “trust” outfit.

Stephen E Arnold, April 3, 2023

A Xoogler Predicts Solving Death

March 30, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.


I thought Google was going to solve death. Sigh. Just like saying “We deliver relevant results,” words at the world’s largest online advertising outfit often have special meanings.

I read “Humans Will Achieve Immortality in Eight Years, Says Former Google Engineer Who Has Predicted the Future with 86% Accuracy.” I — obviously — believe everything I read on the Internet. I assume that the “engineer who has predicted the future with 86% accuracy” has cashed in on NFL bets, the Kentucky Derby, and the stock market hundreds of times. I worked for a finance wizard who fired people who were wrong 49 percent of the time. Why didn’t this financial genius hire a Xoogler who hit 86 percent accuracy? Oh, well.

The write up in the estimable Daily Mail asserts:

He said that machines are already making us more intelligent and connecting them to our neocortex will help people think more smartly. Contrary to the fears of some, he believes that implanting computers in our brains will improve us. ‘We’re going to get more neocortex, we’re going to be funnier, we’re going to be better at music. We’re going to be sexier’, he said.

Imagine that. A sexier 78-year-old! A sexier Xoogler! Amazing!

But here’s the topper in the write up:

Now the former Google engineer believes technology is set to become so powerful it will help humans live forever, in what is known as the singularity.

How did this wizard fail his former colleagues by missing the ChatGPT thing?

Well, 86 percent accuracy is not 100 percent, is it? I hope that part about a sexier 78-year-old is on the money though.

Stephen E Arnold, March 30, 2023

Tweeting in Capital Letters: Surfing on the SVB Anomaly

March 16, 2023

Like a couple of other people, I noted the Silicon Valley Bank anomaly. I have a hunch that more banking excitement is coming. In fact, one intrepid social media person asked, “Know a bank I can buy.” One of the more interesting articles about the anomaly (I use this word because no other banks are in a similar financial pickle; okay, maybe one or two or 30 are, but that’s no biggie) caught my attention.

“VC Podcast Duo Faces (sic) Criticism for Frantic Response to Silicon Valley Bank Collapse” reports:

The pair [Jason Calacanis (a super famous real journalist who is now a super wealthy advisor to start ups) and David Sacks (a super famous PayPal chief operating officer and a general partner in Craft Ventures)] were widely mocked outside of their circle of followers after the government stepped in and swiftly stabilized SVB. Note: The bracketed text presents a little information about the “duo.”

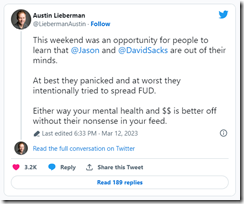

The story quotes other luminaries who are less well known in rural Kentucky via the standard mode of documentation today, a Tweet screenshot. Here it is:

The tweet suggests that Messrs. Calacanis and Sacks were “panicked” and tried to spread that IBM sauce of fear, uncertainty, and doubt.

To add a dollop of charm to the duo, it appears that Mr. Calacanis communicated in capital letters. Yes, ALL CAPS.

The article includes this alternative point of view:

Nevertheless, supporters of the pair continued to lavish praise and credit them with helping to avert further financial chaos. “Plot twist: @Jason and @DavidSacks saw the impeding doom and rushed to a public platform to voice concerns and make sure our gov’t officials saw the 2nd and 3rd order effects,” another Twitter user wrote. “They and other VC’s might’ve saved us all.”

I find the idea that venture capitalists “saved us all” interesting. The only phrase missing is “existential threat.”

When Messrs. Calacanis and Sacks make their next public appearance, will these astute individuals be wearing super hero garb? The promotional push might squeeze more coverage about saving us all. (All. Quite comprehensive when used in a real news story.)

Stephen E Arnold, March 16, 2023

Bing Begins, Dear Sundar and Prabhakar

March 9, 2023

Note: Not written by an artificial intelligence wonder system. The essay is the work of a certified dinobaby, a near-80-year-old fossil. The Purple Prose parts are made up comments by me, the dinobaby, to help improve the meaning behind the words.

I think the World War 2 Dear John letter has been updated. Today’s version begins:

Dear Sundar and Prabhakar…

“The New Bing and Edge – Progress from Our First Month” by Yusuf Mehdi explains that Bing has fallen in love with marketing. The old “we are so like one another, Sundar and Prabhakar” is now:

“The magnetic Ms. OpenAI introduced me to her young son, ChatGPT. I am now going steady with that large language model. What a block of data! And I hope, Sundar and Prabhakar, we can still be friends. We can still chat, maybe at the high school reunion? Everyone will be there. Everyone. Timnit Gebru, Jerome Pesenti, Yann LeCun, Emily Bender, and you two, of course.”

The write up does not explicitly say these words. Here’s the actual verbiage from the marketing outfit also engaged in unpatchable security issues:

It’s hard to believe it’s been just over a month since we released the new AI-powered Bing and Edge to the world as your copilot for the web. In that time, we have heard your feedback, learned a lot, and shipped a number of improvements. We are delighted by the virtuous cycle of feedback and iteration that is driving strong Bing improvements and usage.

A couple of questions: Is the word virtuous related to the word virgin? Pure, chaste, unsullied, and not corrupted by … advertising? Has it been a mere 30 days since Sundar and Prabhakar entered the world of Code Red? Were they surprised that their Paris comedy act drove attendees to Le Bar Bing? Is the copilot for the Web ready to strafe the digital world with Bing blasts?

Let’s look at what the love letter reports:

- A million new users. What has the Google pulled in with its change in the curse word policy for YouTube?

- More searches on Le Bing than before the tryst with ChatGPT. Will Google address relevance ranking of bogus ads for a Thai restaurant favored by a certain humanoid influencer?

- A mobile app. Sundar and Prabhakar, what’s happening with your mobile push? Hasn’t revenue from the Play store declined in the last year? Declined? Yep. As in down, down, down.

Is Bing a wonder working relevance engine? No way.

Is Bing going to dominate my world of search and retrieval? For the answer, just call 1 800 YOU WISH, please.

Is Bing winning the marketing battle for smarter search? Oh, yeah.

Well, Sundar and Prabhakar, don’t let that Code Red flashing light disturb your sleep. Love and kisses, Yusuf Mehdi. PS: The high school reunion is coming up. Maybe we can ChatGPT?

Stephen E Arnold, March 9, 2023

SEO Fuels Smart Software

March 6, 2023

I read “Must Read: The 100 Most Cited Papers in 2022.” The principal finding is that Google-linked entities wrote most of the “important” papers. If one thinks back to Gene Garfield’s citation analysis work, a frequently cited paper is either really good, or it is an intellectual punching bag. Getting published is often not enough to prove that an academic is smart. Getting cited is the path to glory, tenure, and possibly an advantage when chasing grants.

Here’s a passage which explains the fact that Google is “important”:

Google is consistently the strongest player followed by Meta, Microsoft, UC Berkeley, DeepMind and Stanford.

Keep in mind that Google and DeepMind are components of Alphabet.

Why’s this important?

- There is big, big money in selling/licensing models and data sets down the road

- Integrating technology into other people’s applications is a step toward vendor lock in and surveillance of one sort or another

- Analyzing information about the users of a technology provides a useful source of signals about [a] what to buy or invest in, [b] copy, or [c] acquire

If the data in this “100 Most Cited” article are accurate, or at least close enough for horseshoes, Google and OpenAI may be playing a clever game not unlike what the Cambridge Analytica crowd did.

Implications? Absolutely. I will talk about a few in my National Cyber Crime Conference lecture about OSINT Blindspots. (Yep, my old term has new life in the smart software memesphere.)

Stephen E Arnold, March 6, 2023

Google: Code Redder Because … Microsoft Markets AI Gooder

March 6, 2023

Don’t misunderstand. I think the ChatGPT search wars are more marketing than useful functionality for my work. You may have a different viewpoint. That’s great. Just keep in mind that Google’s marvelous Code Red alarm was a response to Microsoft marketing. Yep, if you want to see the Sundar and Prabhakar Duo do some fancy dancing, just get your Microsoft rep to mash the Goose Google button.

Someone took this advice and added “AI” to the truly wonderful Windows 11 software. I read “Microsoft Adds ‘AI’ to Taskbar Search Field” and learned that either ChatGPT or a human said:

In the last three weeks, we also launched the new AI-powered Bing into preview for more than 1 million people in 169 countries, and expanded the new Bing to the Bing and Edge mobile apps as well as introduced it into Skype. It is a new era in Search, Chat and Creation and with the new Bing and Edge you now have your own copilot for the web. Today, we take the next major step forward adding to the incredible breadth and ease of use of the Windows PC by implementing a typable Windows search box and the amazing capability of the new AI-powered Bing directly into the taskbar. Putting all your search needs for Windows in one easy to find location.

Exciting because lousy search will become milk, honey, sunshine, roses, and French bulldog puppies. Nope. Search is still the Bing with a smaller index than the Google sports. But that “AI” in the search box evokes good thoughts for some users.

For Google, the AI in the search box mashes the Code Red button. I think that if that button gets pressed five times in quick succession, the Google goes from Code Red to Code Super Red with LED sparkles.

Remember this AI search is marketing at this time in my frame of reference.

Microsoft is showing that Google is not too good at marketing. I am now mashing the Code Red button five times. Mash. Mash. Mash. Mash. Mash. Now I can watch Googzilla twitch and hop. Perhaps the creature will be the opening act in the Sundar and Prabhakar Emergency Output Emission Explanation Tour. Did you hear the one about Microsoft? It walks into a vegan restaurant and says, “Did you hear the joke about Google marketing?” The server says, “No.” The Softie replies, “Google searched for marketing in its search engine and couldn’t get a relevant answer.”

Ho, ho.

Stephen E Arnold, March 6, 2023

Bard Is More Than You and I Know

March 6, 2023

I have to hand it to the real news outfit CNBC; the reporters have a way of getting interesting information about the innards of Googzilla. A case in point is “Google Execs Tell Employees in Testy All Hands Meeting That Bard A.I. Isn’t Just about Search.” Who knew? I thought Google was about online advertising and increasing revenue. Therefore, my dinobaby mind says, Bard is part of the Google; it follows that Bard is about advertising and maybe – just maybe – will have an impact on search. Nope.

I learned from CNBC:

In an all-hands meeting on Thursday (March 2, 2023), executives answered questions from Dory, the company’s internal forum, with most of the top-rated issues related to the priorities around Bard… [emphasis added]

Gee, I wonder why?

The write up pointed out:

employees criticized leadership, most notably CEO Sundar Pichai, for the way it handled the announcement of Bard…

Oh, the Code Red, the Paris three star which delivered a froid McDo. (Goodness, I almost typed “faux.”)

CNBC’s article added:

Staffers called Google’s initial public presentation [in Paris] “rushed,” “botched” and “un-Googley.”

Yeah, maybe faux is the better word, but I like the metaphor of a half cooked corporatized burger as well.

And the guru of Google Search, Prabhakar Raghavan, stepped out of the spotlight. A Googler named Jack Krawczyk, the product lead for Bard, filled in for the crowd favorite from Verity and Yahoo. Mr. Krawczyk included in his stand-up routine one-liners like this:

Bard is not search.

Mr. Krawczyk must have concluded that his audience was filled with IQ 100 types from assorted countries with lousy educational systems. I thought Googlers were exceptional. Surely Googlers could figure out what Bard could do. (Perhaps that is the reason for the employees’ interest in smart software.)

Mr. Krawczyk quipped:

“It’s an experiment that’s a collaborative AI service that we talked about … “The magic that we’re finding in using the product is really around being this creative companion to helping you be the sparkplug for imagination, explore your curiosity, etc.”

CNBC pointed out that Mr. Krawczyk suggested the Google had “built a new feature for internal use called ‘Search It.’” That phrase reminded me of universal search which, of course, means separate queries for Google News, Google Scholar, Google Maps, et al. Yeah, universal search was a snappy marketing phrase but search has been a quite fragmented, relevance blind, information retrieval system.

The high-value question, in my opinion, is: Will “Search It” have the same cachet as ChatGPT?

Microsoft seems to be an effective marketer of to-be smart applications and services. Google, on the other hand, hopes I remember Mum or Ernie (not the cartoon character)?

Google, the Code Red outfit, is paddling its very expensive canoe with what appears to be desperation.

Net net: Google has not solved death and I am not sure the company will resolve the Microsoft / ChatGPT mindshare juggernaut. Here’s my contribution to the script of the next Sundar and Prabhakar Comedy Show: “We used to think Google was indecisive. But now we’re not so sure.”

Stephen E Arnold, March 6, 2023