Amazing Statement about Google

January 17, 2023

I am not into Twitter. I think that intelware and policeware vendors find the Twitter content interesting. A few of them may be annoyed that the Twitter application programming interface seems to have gone on a walkabout. One of the analyses of Twitter I noted this morning (January 15, 2023, 1035 am) is “Twitter’s Latest ‘Feature’ Is How You Know Elon Musk Is in Over His Head. It’s the Cautionary Tale Every Business Needs to Hear.”

I want to skip over the Twitter palpitations and focus on one sentence:

At least, with Google, the company is good enough at what it does that you can at least squint and sort of see that when it changes its algorithm, it does it to deliver a better experience to its users–people who search for answers on Google.

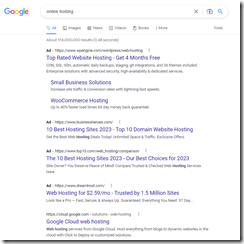

What about that “at least”? Also, what do you make of the “you can at least squint and sort of see that when it [Google] changes its algorithm”? Squint to see clearly. Into Google? Hmmm. I can squint all day at a result like this and not see anything except advertising and a plug for the Google Cloud for the query online hosting:

Helpful? Sure to Google, not to this user.

Now consider the favorite Google marketing chestnut, “a better experience.” Ads and a plug for Google do not deliver a better experience to me. Compare the results for the “online hosting” query to those from www.you.com:

Google is the first result, which suggests some voodoo in the search engine optimization area. The other results point to a free hosting service, a PC Magazine review article (which is often an interesting editorial method to talk about) and an outfit called Online Hosting Solution.

Which is better? Google’s ads and self promotion or the new You.com pointer to Google and some sort of relevant links?

Now let’s run the query “online hosting” on Yandex.com (not the Russian language version). Here’s what I get:

Note that the first link is to a particular vendor with no ad label slapped on the link. The other links are to listicle articles which present a group of hosting companies for the person running the query to consider.

Of the three services, which requires the “squint” test? I suppose one can squint at the Google result and conclude that it is just wonderful, just not for objective results. The You.com results are a random list of mostly relevant links. But that top hit pointing at Google Cloud makes me suspicious. Why Google? Why not Amazon AWS, Microsoft Azure, the fascinating Epik.com, or another vendor?

In this set of three, Yandex.com strikes me as delivering cleaner, more on point results. Your mileage may vary.

In my experience, systems which deliver answers are a quest. Most of the systems to which I have been exposed seem the digital equivalent of a ride with Don Quixote. The windmills of relevance remain at risk.

Stephen E Arnold, January 17, 2023

Semantic Search for arXiv Papers

January 12, 2023

An artificial intelligence research engineer named Tom Tumiel (InstaDeep) created a Web site called arXivxplorer.com.

According to his Twitter message (posted on January 7, 2023), the system is a “semantic search engine.” The service implements OpenAI’s embedding model. The idea is that this search method allows a user to “find the most relevant papers.” There is a stream of tweets at this link about the service. Mr. Tumiel states:

I’ve even discovered a few interesting papers I hadn’t seen before using traditional search tools like Google or arXiv’s own search function or even from the ML twitter hive mind… One can search for similar or “more like this” papers by “pasting the arXiv url directly” in the search box or “click the More Like This” button.

I ran several test queries, including this one: “Google Eigenvector.” The system surfaced generally useful papers, including one from January 2022. However, when I included the date 2023 in the search string, arXiv Xplorer did not return a null set. The system displayed hits which did not include the date.

Several quick observations:

- The system seems to be “time blind,” which is a common feature of modern search systems

- The system provides the abstract when one clicks on a link. The “view” button in the pop up displays the PDF

- Comparing result sets shows that adding search terms to the query reduces the result set size, a refreshing change from queries which display “infinite scrolling” of irrelevant documents.
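
The “time blind” behavior is what one would expect if hits are ranked only by how close a paper’s embedding sits to the query’s embedding. Here is a minimal sketch of that idea, with an optional year filter bolted on. I have not seen how arXiv Xplorer works internally; the three papers, their made-up three-number “embeddings,” and the function names `cosine` and `search` are my assumptions, used only for illustration.

```python
import math

# Hypothetical toy data: (title, year, embedding). Real embeddings come from a
# model and have hundreds of dimensions; these 3-d vectors are invented.
PAPERS = [
    ("Eigenvector centrality for link analysis", 2022, [0.9, 0.1, 0.0]),
    ("Ranking web pages with power iteration", 2023, [0.8, 0.2, 0.1]),
    ("Protein folding with transformers", 2023, [0.0, 0.1, 0.9]),
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, year=None, top_k=2):
    """Rank papers by similarity to the query vector.

    With year=None the ranking ignores dates entirely ("time blind").
    Passing a year applies a plain metadata filter before ranking.
    """
    candidates = [p for p in PAPERS if year is None or p[1] == year]
    ranked = sorted(candidates, key=lambda p: cosine(query_vec, p[2]), reverse=True)
    return [(title, yr) for title, yr, _ in ranked[:top_k]]


if __name__ == "__main__":
    query = [1.0, 0.0, 0.0]  # stand-in for an embedded "Google Eigenvector" query
    print(search(query))             # date ignored
    print(search(query, year=2023))  # date used as a filter, not as a query word
```

The point of the sketch: typing “2023” into the query string only nudges the vector. A date becomes a real constraint only when the system treats it as metadata and filters on it.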

For those interested in academic or research papers, will OpenAI become aware of the value of dates, limiting queries to endnotes, and displaying a relationship map among topics or authors in a manner similar to Maltego? By combining more search controls with the OpenAI content and query processing, the service might leapfrog the Lucene/Solr type methods. I think that would be a good thing.

Will the implementation of this system add to Google’s search anxiety? My hunch is that Google is not sure what causes the Google system to perturbate. It may well be that the twitching, the sudden changes in direction, and the coverage of OpenAI itself in blogs may be the equivalent of tremors, soft speaking, and managerial dizziness. Oh, my, that sounds serious.

Stephen E Arnold, January 12, 2023

Google Results Are Relevant… to Google and the Googley

January 3, 2023

We know that NoNeedforGPS will not be joining Prabhakar Raghavan (Google’s alleged head of search) and the many Googlers repurposed to deal with a threat, a real threat. That existential demon is ChatGPT. Dr. Raghavan (formerly of the estimable Verity which was absorbed into the even more estimable Autonomy which is a terra incognita unto itself) is getting quite a bit of Google guidance, help, support, and New Year cheer from those Googlers thrown into a Soviet style project to make that existential threat go away.

NoNeedforGPS questioned on Reddit.com the relevance of Google’s ad-supported sort of Web search engine. The plaintive cry in the post, an image which is essentially impossible to read, says:

Why does Google show results that have nothing to do with what is searched?

You silly goose, NoNeedforGPS. You fail to understand the purpose of Google search, and you obviously are not privy to discussions by search wizards who embrace a noble concept: It is better to return a result than a null result. A footnote to this brilliant insight is that a null result (that is, a search results page which says, “Sorry, no matches for your query”) makes it tough to match ads and convince the lucky advertiser on a blank page that a null result conveys information.

What? A null result conveys information! Are you crazy there in rural Kentucky with snow piled to a height of four French bulldogs standing atop one another?

No, I don’t think I am crazy, which is a negative word, according to some experts at Stanford University.

When I run a query like “Flokinet climate activist”, I really want to see a null result set. My hunch is that some folks in Eastern Europe want me to see an empty set as well.

Let me put the display of irrelevant “hits” in response to a query in context:

- With a result set — relevant or irrelevant is irrelevant — Google’s super duper ad matcher can do its magic. Once an ad is displayed (even in a list of irrelevant results to the user), some users click on the ads. In fact, some users cannot tell the difference between a relevant hit and an ad. Whatever the reason for the click, Google gets money.

- Many users who run a query don’t know what they are looking for. Here’s an example: A person searches Google for a Greek restaurant. Google knows that there is no Greek restaurant anywhere near the location of the Google user. Therefore, the system displays results for restaurants close to the user. Google may toss in ads for Greek groceries, sponges from Greece, or a Greek history museum near Dunedin, Florida. Google figures one of these “hits” might solve the user’s problem and result in a click that is related to an ad. Thus, there are no irrelevant results when viewed from Google’s UX (user experience) viewpoint via the crystal lenses of Ad Words, SEO partner teams, or a Googler who has his/her/its finger on the scale of Google objectivity.

- The quaint notions of precision and recall have been lost in the mists of time. My hunch is that those who remember that a user often enters a word or phrase in the hopes of getting relevant information related to that which was typed into the query processor are not interested in old fashioned lists of relevant content. The basic reason is that Google gave up on relevance around 2006, and the company has been pursuing money, high school science projects like solving death, and trying to manage the chaos resulting from a management approach best described as anti-suit and pro fun. The fact that Google sort of works is amazing to me.
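
For those who have let precision and recall slip into the mists, here is a minimal sketch of how the two numbers are computed for a single query. The document IDs and the function name `precision_recall` are hypothetical. Scoring an empty result set as 0.0 precision is a convention I am assuming; strictly, precision is undefined when nothing is returned.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one query.

    precision = relevant hits returned / everything returned
    recall    = relevant hits returned / everything that is relevant
    """
    retrieved_set = set(retrieved)
    relevant_set = set(relevant)
    hits = retrieved_set & relevant_set
    # Assumed convention: an empty denominator scores 0.0 rather than raising.
    precision = len(hits) / len(retrieved_set) if retrieved_set else 0.0
    recall = len(hits) / len(relevant_set) if relevant_set else 0.0
    return precision, recall


if __name__ == "__main__":
    # Four results shown, two of them relevant; three relevant documents exist.
    p, r = precision_recall(["d1", "d2", "d3", "d4"], ["d2", "d4", "d7"])
    print(f"precision={p:.2f} recall={r:.2f}")
```

Padding a results page with irrelevant hits so there is something to show next to the ads pushes precision down and does nothing for recall.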

The sad reality is that Google handles more than 90 percent of the online searches in North America. Years ago I learned that in Denmark, Google handles 100 percent of the online search traffic. Dr. Raghavan can lash hundreds of Googlers to the ChatGPT response meetings, but change may be difficult. Google believes that its approach to smart software is just better. Google has technology that is smarter, more adept at creating college admission essays, and blog posts like this one. Google can do biology, quantum computing, and write marketing copy while wearing a Snorkel and letting code do deep dives.

Net net: NoNeedforGPS does express a viewpoint which is causing people who think they are “expert searchers” to try out DuckDuckGo, You.com, and even the Russian service Yandex.com, among others. Thus, Google is scared. Those looking for information may find a system using ChatGPT returns results that are useful. Once users mired in irrelevant results realize that they have been operating in the dark, a new dawn may emerge. That’s Dr. Raghavan’s problem, and it may prove to be easier to impress those at a high school reunion than advertisers.

Stephen E Arnold, January 3, 2023

Southwest Crash: What Has Been Happening to Search for Years Revealed

January 2, 2023

What’s the connection between the failure of Southwest Airlines’ technology infrastructure and search? Most people, including assorted content processing experts, would answer the question this way:

None. Finding information and making reservations are totally unrelated.

Fair enough.

“The Shameful Open Secret Behind Southwest’s Failure” does not reference finding information as the issue. We learn:

This problem — relying on older or deficient software that needs updating — is known as incurring “technical debt,” meaning there is a gap between what the software needs to be and what it is. While aging code is a common cause of technical debt in older companies — such as with airlines which started automating early — it can also be found in newer systems, because software can be written in a rapid and shoddy way, rather than in a more resilient manner that makes it more dependable and easier to fix or expand.

I think this is a reasonable statement. I suppose a reader with my interest in search and retrieval can interpret the comments as applicable to looking up who owns some of the domains hosted on Megahost.com or some similar service provider. With a little thought, the comment can be stretched to cover the failure some organizations have experienced when trying to index content within their organizations so that employees can find a PowerPoint used by a young sales professional at a presentation at a trade show several weeks in the past.

My view point is that the Southwest failure provides a number of useful insights into the fragility of the software which operates out of sight and out of mind until that software fails.

Here’s my list of observations:

- Failure is often a real life version of the adage “the straw that broke the camel’s back”. The idea is that good enough software chugs along until it simply does not work.

- Modern software cannot be quickly, easily, or economically fixed. Many senior managers believe that software wrappers and patches can get the camel back up and working.

- Patched systems may have hidden technical or procedural issues. A system may be returned to service, but it may harbor hidden gotchas; for example, the sales professional’s PowerPoint. The file may not be in the “system” and, therefore, cannot be found. No problem until a lawyer comes knocking about a disconnect between an installed system and what the sales professional asserted. Findability is broken by procedures, lack of comprehensive data collection, or an error importing a file. Sharing blame is not popular in some circles.

What’s this mean?

My view is that many systems and software work as intended; that is, well enough. No user is aware of certain flaws or errors, particularly when these are shared. Everyone lives with the error, assuming the mistake is the way something is. In search, if one looks for data about Megahost.com and the data are not available, it is easy to say, “Nothing to learn. Move on.” A rounding error in Excel. Move on. An enterprise search system which cannot locate a document? Just move on or call the author and ask for a copy.

The Southwest meltdown is important. The failure of the system makes clear the state of mission critical software. The problem exists in other systems as well, including tax systems, command and control systems, health care systems, and word processors which cannot reliably number items in a list, among others.

An interesting and exciting 2023 may reveal other Southwest case examples.

Stephen E Arnold, January 2, 2023

ZincSearch: An Alternative to Elasticsearch

December 16, 2022

Recently launched ZincSearch is an Elasticsearch alternative worth looking into, despite the fact that several features are not yet fully formed. The nascent enterprise search engine promises lower complexity and lower costs. The About Us page describes its edge search and an experimental stateless server that can be scaled horizontally. The home page emphasizes:

“ZincSearch is built for Full Text Search: ZincSearch is a search engine that can be used for any kind of text data. It can be used for logs, metrics, events, and more. It allows you to do full text search among other things. e.g. Send server logs to ZincSearch for them or you can push your application data and provide full text search or you can build a search bar in your application using ZincSearch.

- Easy to Setup & Operate: ZincSearch provides the easiest way to get started with log capture, search and analysis. It has simple APIs to interact and integrates with leading log forwarders allowing you to get operational in minutes.

- Low resource requirements: It uses far less CPU and RAM compared to alternatives allowing for lower cost to run. Developers can even run it on their laptops without ever noticing its resource utilization. …

- Schemaless Indexes: No need to work hard to define schema ahead of time. ZincSearch automatically discovers schema so you can focus on search and analysis.

- Aggregations: Do faceted search and analyze your data.”
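
To make the “schemaless,” “full text search,” and “aggregations” claims concrete, here is a toy sketch of the three ideas working together on log-style records. It is not ZincSearch’s API or implementation; the records and the helper names `discover_fields`, `full_text`, and `facet` are hypothetical.

```python
from collections import Counter

# Hypothetical log records; no schema is declared ahead of time, and the
# third record carries an extra field the others lack.
RECORDS = [
    {"level": "error", "service": "api", "message": "timeout talking to db"},
    {"level": "info", "service": "api", "message": "request served"},
    {"level": "error", "service": "worker", "message": "db connection refused", "retries": 3},
]


def discover_fields(records):
    """Schemaless idea: learn field names and value types from the data."""
    fields = {}
    for record in records:
        for key, value in record.items():
            fields.setdefault(key, set()).add(type(value).__name__)
    return fields


def full_text(records, term):
    """Return records in which any field contains the term as a whole word."""
    term = term.lower()
    return [
        r for r in records
        if any(term in str(v).lower().split() for v in r.values())
    ]


def facet(records, field):
    """Aggregation idea: count the values of one field across the hits."""
    return Counter(r[field] for r in records if field in r)


if __name__ == "__main__":
    hits = full_text(RECORDS, "db")
    print(discover_fields(RECORDS))
    print(facet(hits, "service"))
```

A production engine would use an inverted index rather than scanning every record, but the division of labor is the same: discover fields, match text, then count facets over the hits.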

ZincSearch would not attract many conversions if it made migration difficult, so of course it is compatible with the Elasticsearch API. To a point, anyway—the application is still working on an Elasticsearch-compatible query API. ZincSearch can store data in S3 and MinIO, though that capacity is currently in an experimental phase. Sounds promising; we look forward to seeing how ZincSearch looks a year or so from now.

A blog post by ZincSearch creator Prabhat Sharma not only discusses his reasons for making his solution but also gives a useful summary of enterprise search in general. The startup is based in San Francisco.

Cynthia Murrell, December 16, 2022

Open Source Desktop Search Tool Recoll

December 13, 2022

Anyone searching for an alternative desktop search option might consider Recoll, an open source tool based on the Xapian search engine library. The latest version, 1.33.3, was released just recently. The landing page specifies:

“Recoll finds documents based on their contents as well as their file names.

- Versions are available for Linux, MS Windows and Mac OS X.

- It can search most document formats. You may need external applications for text extraction.

- It can reach any storage place: files, archive members, email attachments, transparently handling decompression.

- One click will open the document inside a native editor or display an even quicker text preview.

- A Web front-end with preview and download features can replace or supplement the GUI for remote use.

The software is free on Linux, open source, and licensed under the GPL. Detailed features and application requirements for supported document types.”

Recoll began as a tool to augment the search functionality of Linux’ desktop environment, a familiar pain point to users of that open source OS. Since it has expanded to Windows and Mac, users across the OS spectrum can try Recoll. Check it out, dear reader, if you crave a different desktop search solution.

Cynthia Murrell, December 13, 2022

Sonic: an Open Source Elasticsearch Alternative for Lighter Backends

November 10, 2022

When business messaging platform Crisp launched in 2015, it did so knowing its search functionality was lacking. Unfortunate, but at least the company never pretended otherwise. The team found Elasticsearch was not scalable for its needs, and the SQL database it tried proved ponderous. Finally, in 2019, the solution emerged. Cofounder Valerian Saliou laid out the specifics in his blog post, “Announcing Sonic: A Super-Light Alternative to Elasticsearch.” He wrote:

“This was enough to justify the need for a tailor-made search solution. The Sonic project was born.

What is Sonic? Sonic can be found on GitHub as Sonic, a Fast, lightweight & schema-less search backend. Quoting what Sonic is from the GitHub page of the project: ‘Sonic is a fast, lightweight and schema-less search backend. It ingests search texts and identifier tuples, that can then be queried against in microseconds time. Sonic can be used as a simple alternative to super-heavy and full-featured search backends such as Elasticsearch in some use-cases. Sonic is an identifier index, rather than a document index; when queried, it returns IDs that can then be used to refer to the matched documents in an external database.’ Sonic is built in Rust, which ensures performance and stability. You can host it on a server of yours, and connect your apps to it over a LAN via Sonic Channel, a specialized protocol. You’ll then be able to issue search queries and push new index data from your apps — whichever programming language you work with. Sonic was designed to be fast and lightweight on resources.”
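
The distinction between an identifier index and a document index can be sketched in a few lines. What follows is a toy illustration of the idea only. It is not Sonic’s code or the Sonic Channel protocol, and the class name `IdentifierIndex`, the `msg:` IDs, and the `DOCUMENTS` dictionary standing in for the external database are all hypothetical.

```python
from collections import defaultdict


class IdentifierIndex:
    """Toy identifier index: maps terms to object IDs, never stores the text."""

    def __init__(self):
        self._postings = defaultdict(set)

    def push(self, object_id, text):
        """Ingest a (text, identifier) pair; only the ID is retained per term."""
        for term in text.lower().split():
            self._postings[term].add(object_id)

    def query(self, terms):
        """Return sorted IDs of objects containing every query term."""
        words = terms.lower().split()
        if not words:
            return []
        ids = set.intersection(*(self._postings.get(w, set()) for w in words))
        return sorted(ids)


# Stand-in for the external database that actually holds the documents.
DOCUMENTS = {
    "msg:1": "Refund request for order",
    "msg:2": "Order shipped today",
}

if __name__ == "__main__":
    index = IdentifierIndex()
    for doc_id, body in DOCUMENTS.items():
        index.push(doc_id, body)
    for doc_id in index.query("refund order"):
        # The index answers with IDs; the application fetches the text itself.
        print(doc_id, "->", DOCUMENTS[doc_id])
```

Because the index keeps only terms and IDs, it stays small, and the application must make a second trip to its own database to show the matched text.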

Not only do Crisp users get the benefits of this tool, but it is also available as open-source software. A few features of note include auto complete and typo correction, compatibility with Unicode, and user friendly libraries. See the detailed write-up for the developers’ approach to Sonic, the benefits and limitations, and implementation notes.

Cynthia Murrell, November 10, 2022

Sepana: A Web 3 Search System

November 8, 2022

Decentralized search is the most recent trend my team and I have been watching. We noted “Decentralized Search Startup Sepana Raises $10 Million.” The write up reports:

Sepana seeks to make web3 content such as DAOs and NFTs more discoverable through its search tooling.

What’s the technical angle? The article points out:

One way it’s doing this is via a forthcoming web3 search API that aims to enable any decentralized application (dapp) to integrate with its search infrastructure. It claims that millions of search queries on blockchains and dapps like Lens and Mirror are powered by its tooling.

With search vendors working overtime to close deals and keep stakeholders from emulating Vlad the Impaler, some vendors are making deals with extremely interesting companies. Here’s a question for you: “What company is Elastic’s new best friend?” Elasticsearch has been a favorite of many companies. However, Amazon nosed into the Elastic space. Furthermore, Amazon appears to be interested in creating a walled garden protected by a moat around its search technologies.

One area for innovation is the notion of avoiding centralization. Unfortunately, online means that centralization becomes an emergent property. That’s one of my pesky Arnold’s Laws of Online. But why rain on the decentralized systems parade?

Sepana’s approach is interesting. You can get more information at https://sepana.io. Also you can check out Sepana’s social play at https://lens.sepana.io/.

Stephen E Arnold, November 8, 2022

Vectara: Another Run Along a Search Vector

November 4, 2022

Is this the enterprise search innovation we have been waiting for? A team of ex-Googlers has used what they learned about large language models (LLMs), natural language processing (NLP), and transformer techniques to launch a new startup. We learn about their approach in VentureBeat’s article, “Vectara’s AI-Based Neural Search-as-a-Service Challenges Keyword-Based Searches.” The platform combines LLMs, NLP, data integration pipelines, and vector techniques into a neural network. The approach can be used for various purposes, we learn, but the company is leading with search. Journalist Sean Michael Kerner writes:

“[Cofounder Amr] Awadallah explained that when a user issues a query, Vectara uses its neural network to convert that query from the language space, meaning the vocabulary and the grammar, into the vector space, which is numbers and math. Vectara indexes all the data that an organization wants to search in a vector database, which will find the vector that has closest proximity to a user query. Feeding the vector database is a large data pipeline that ingests different data types. For example, the data pipeline knows how to handle standard Word documents, as well as PDF files, and is able to understand the structure. The Vectara platform also provides results with an approach known as cross-attentional ranking that takes into account both the meaning of the query and the returned results to get even better results.”

We are reminded a transformer puts each word into context for studious algorithms, relating it to other words in the surrounding text. But what about things like chemical structures, engineering diagrams, embedded strings in images? It seems we must wait longer for a way to easily search for such non-linguistic, non-keyword items. Perhaps Vectara will find a way to deliver that someday, but next it plans to work on a recommendation engine and a tool to discover related topics. The startup, based in Silicon Valley, launched in 2020 under the “stealth” name Zir AI. Recent seed funding of $20 million has enabled the firm to put on its public face and put out this inaugural product. There is a free plan, but one must contact the company for any further pricing details.

Cynthia Murrell, November 4, 2022
