Missing Signals: Are the Tools or Analysts at Fault?

November 7, 2023

This essay is the work of a dumb humanoid. No smart software required.

This essay is the work of a dumb humanoid. No smart software required.

Returning from a trip to DC yesterday, I thought about “signals.” The pilot, a specialist in hit-the-runway-hard landings, used the word “signals” in his welcome-aboard speech. The word sparked thoughts about two examples of missing signals. The first is the troubling kinetic activity in the Middle East. The second is the US Army reservist who went on a shooting rampage.

The intelligence analyst says, “I have tools. I have data. I have real time information. I have so many signals. Now which ones are important, accurate, and actionable?” Our intrepid professional displays the reality of separating the signal from the noise. Scary, right? Time for a Starbucks visit.

I know zero about what software and tools, systems and informers, and analytics and smart software the intelligence operators in Israel relied upon. I know even less about what mechanisms were in place when Robert Card killed more than a dozen people.

The Center for Strategic and International Studies published “Experts React: Assessing the Israeli Intelligence and Potential Policy Failure.” The write up stated:

It is incredible that Hamas planned, procured, and financed the attacks of October 7, likely over the course of at least two years, without being detected by Israeli intelligence. The fact that it appears to have done so without U.S. detection is nothing short of astonishing. The attack was complex and expensive.

And one more passage:

The fact that Israeli intelligence, as well as the international intelligence community (specifically the Five Eyes intelligence-sharing network), missed millions of dollars’ worth of procurement, planning, and preparation activities by a known terrorist entity is extremely troubling.

Now let’s shift to the Lewiston, Maine, shooting. I had saved on my laptop “Six Missed Warning Signs Before the Maine Mass Shooting Explained.” The UK newspaper The Guardian reported:

The information about why, despite the glaring sequence of warning signs that should have prevented him from being able to possess a gun, he was still able to own over a dozen firearms, remains cloudy.

Those “signs” included punching a fellow officer in the US Army Reserve force, spending some time in a mental health facility, family members emitting “watch this fellow” statements, vibes about issues from his workplace, and the weapon activity.

On one hand, Israel had intelligence inputs from just about every imaginable high-value source, both people and software. On the other hand, in a small town, the only signal Mr. Card did not emit was buying a billboard with the message, “Do not invite Mr. Card to a church social.”

As the plane droned at 1973 speeds toward the flyover state of Kentucky, I jotted down several thoughts. Like it or not, here are these ruminations:

- Despite the baloney about identifying signals and determining which are important and which are not, existing systems and methods failed bigly. The proof? Dead people. Subsequent floundering.

- The mechanisms in place to deliver on point, significant information do not work. Perhaps it is the hustle bustle of everyday life? Perhaps it is that humans are not very good at figuring out what’s important and what’s unimportant. The proof? Dead people. Constant news releases about the next big thing in open source intelligence analysis. Get real. This stuff failed at the scale of SBF’s machinations.

- The uninformed pontifications of cyber security marketers and the bureaucratic chatter flowing from assorted government agencies add to the cloud of unknowing, even when the signals are as subtle as the foghorn on a cruise ship with a passenger overboard. Hello, hello, the basic analysis processes don’t work. A WeWork investor’s thought processes were more on point than the output of reporting systems in use in Maine and Israel.

After the aircraft did the thump-and-bump landing, I was able to walk away. That’s more than I can say for the victims of analysis, investigation, and information processing methods in use where moose roam free and where intelware is crafted and sold like canned beans at Trader Joe’s.

Less baloney and more awareness that talking about advanced information methods is a heck of a lot easier than delivering actual signal analysis.

Stephen E Arnold, November 7, 2023

test

The GOOG and MSFT Tried to Be Pals… But

October 30, 2023

This essay is the work of a dumb humanoid. No smart software required.

This essay is the work of a dumb humanoid. No smart software required.

Here is an interesting tangent to the DOJ’s case against Google. Yahoo Finance shares reporting from Bloomberg in, “Microsoft-Google Peace Deal Broke Down Over Search Competition.” The two companies pledged to stop fighting like cats and dogs in 2016. Sadly, the peace would last but three short years, testified Microsoft’s Jonathan Tinter.

In a spirit of cooperation and profits for all, Microsoft and Google-parent Alphabet tried to work together. For example, in 2020 they made a deal for Microsoft’s Surface Duo: a Google search widget would appear on its main screen (instead of MS Bing) in exchange for running on the Android operating system. The device’s default browser, MS Edge, would still default to Bing. Seemed like a win-win. Alas, the Duo turned out to be a resounding flop. That disappointment was not the largest source of friction, however. We learn:

“In March 2020, Microsoft formally complained to Google that its Search Ads 360, which lets marketers manage advertising campaigns across multiple search engines, wasn’t keeping up with new features and ad types in Bing. … Tinter said that in response to Microsoft’s escalation, Google officially complained about a problem with the terms of Microsoft’s cloud program that barred participation of the Google Drive products — rival productivity software for word processing, email and spreadsheets. In response to questions by the Justice Department, Tinter said Microsoft had informally agreed to pay for Google to make the changes to SA360. ‘It was half a negotiating strategy,’ Tinter said. Harrison ‘said, ‘This is too expensive.’ I said, ‘Great let me pay for it.’’ The two companies eventually negotiated a resolution about cloud, but couldn’t resolve the problems with the search advertising tool, he said. As a result, nothing was ever signed on either issue, Tinter said. ‘We ultimately walked away and did not reach an agreement,’ he said. Microsoft and Google also let their peace deal expire in 2021.”

Oh well, at least they tried to get along, we suppose. We just love dances between killer robots with money at stake.

Cynthia Murrell, October 30, 2023

Now the AI $64 Question: Where Are the Profits?

October 26, 2023

This essay is the work of a dumb humanoid. No smart software required.

This essay is the work of a dumb humanoid. No smart software required.

As happens with most over-hyped phenomena, AI is looking like a disappointment for investors. Gizmodo laments, “So Far, AI Is a Money Pit That Isn’t Paying Off.” Writer Lucas Ropek cites this report from the Wall Street Journal as he states tech companies are not, as of yet, profiting off AI as they had hoped. For example, Microsoft’s development automation tool GitHub Copilot lost an average of $20 a month for each $10-per-month user subscription. Even ChatGPT is seeing its user base decline while operating costs remain sky high. The write-up explains:

“The reasons why the AI business is struggling are diverse but one is quite well known: these platforms are notoriously expensive to operate. Content generators like ChatGPT and DALL-E burn through an enormous amount of computing power and companies are struggling to figure out how to reduce that footprint. At the same time, the infrastructure to run AI systems—like powerful, high-priced AI computer chips—can be quite expensive. The cloud capacity necessary to train algorithms and run AI systems, meanwhile, is also expanding at a frightening rate. All of this energy consumption also means that AI is about as environmentally unfriendly as you can get. To get around the fact that they’re hemorrhaging money, many tech platforms are experimenting with different strategies to cut down on costs and computing power while still delivering the kinds of services they’ve promised to customers. Still, it’s hard not to see this whole thing as a bit of a stumble for the tech industry. Not only is AI a solution in search of a problem, but it’s also swiftly becoming something of a problem in search of a solution.”

Ropek notes it would have been wise for companies to figure out how to turn a profit on AI before diving into the deep end. Perhaps, but leaping into the next big thing is a priority for tech firms lest they be left behind. After all, who could have predicted this result? Let’s ask Google Bard, OpenAI, or one of the numerous AI “players.” Perhaps even better would be deferring the question of costs until the AI factories go online.

Cynthia Murrell, October 26, 2023

xx

Google Giggles: Late October 2023 Edition

October 25, 2023

This essay is the work of a dumb humanoid. No smart software required.

This essay is the work of a dumb humanoid. No smart software required.

The Google Giggles is nothing more than items reported in the “real” news about the antics, foibles, and fancy dancing of the world’s most beloved online advertising system.

Googzilla gets a kick out of these antics. Thanks, MidJourney. You do nice but repetitive dinosaur illustrations.

Giggle 1: Liking sushi is not the same as sushi liking you. “The JFTC Opens an Investigation and Seeks Information from Third Parties Concerning the Suspected Violation of the Antimonopoly Act by Google LLC, Etc.” Now that’s a Googley headline from the government of Japan. Why? Many items are mentioned in the cited document; for example, mobile devices, the Google Play Store, and sharing of search advertising. Would our beloved Google exploit its position to its advantage? Japan wants to know more. Many people do because the public trial in the US is not exactly outputting public information in a comprehensive, unredacted way, is it?

Giggle 2: Just a minor change in the Internet. Google wants to protect content, respect privacy, and help out its users. Listen up, publishers, creators, and authors. “Google Chrome’s New IP Protection Will Hide Users’ IP Addresses” states:

As the traffic will be proxied through Google’s servers, it may make it difficult for security and fraud protection services to block DDoS attacks or detect invalid traffic. Furthermore, if one of Google’s proxy servers is compromised, the threat actor can see and manipulate the traffic going through it. To mitigate this, Google is considering requiring users of the feature to authenticate with the proxy, preventing proxies from linking web requests to particular accounts, and introducing rate-limiting to prevent DDoS attacks.

Hmmm. Can Google see the traffic, gather data, and make informed decisions? Would Google do that?

Giggle 3: A New Language. Google’s interpretation of privacy is very, very Googley. “When Is a Privacy Button Not a Privacy Button? When Google Runs It, Claims Lawsuit” explains via a quote from a legal document:

"Google had promised that by turning off this [saving a user’s activity] feature, users would stop Google from saving their web and app activity data, including their app-browsing histories," the fourth amended complaint [PDF] says. "Google’s promise was false."

When Google goes to court, Google seems to come out unscathed and able to continue its fine work. In this case, Google is simply creating its own language which I think could be called Googlegrok. One has to speak it to be truly Googley. Now what does “trust” mean?

Giggle 4: Inventing AI and Crawfishing from Responsibility. I read “AI Risk Must Be Treated As Seriously As Climate Crisis, Says Google DeepMind Chief.” What a hoot! The write up’s subtitle is amazing:

Demis Hassabis calls for greater regulation to quell existential fears over tech with above human levels of intelligence.

Does this Google posture suggest that the firm is not responsible for the problems it is creating and diffusing because “government” is not regulating a technology? Very clever. Perhaps a bit of self-control is more appropriate? But I am no longer Googley. The characteristic goes away with age and the end of checks.

Giggle 5: A Dark Cloud. Google reported strong financial results. With online ads in Google search and YouTube.com, how could the firm fail its faithful? “Google Cloud Misses Revenue Estimates — And It’s Your Fault, Wanting Smaller Bills” reports that not all is gold in the financial results. I noted this statement:

Another concerning outcome for the Google cloud was that its $266 million operating income number was down from $395 million in the previous quarter – when revenue was $370 million lower.

Does this mean that the Google Cloud is an issue? In my lingo, “issue” means, “Is it time for the Google to do some clever market adaptation?” Google once was good at clever. Now? Hmmm.

Are you giggling? I am.

Stephen E Arnold, October 25, 2023

The Google Experience: Personnel Management and Being Fair

October 23, 2023

This essay is the work of a dumb humanoid. No smart software required.

This essay is the work of a dumb humanoid. No smart software required.

The Google has been busy explaining to those who are not Googley that it is nothing more than a simple online search engine. Heck, anyone can use another Web search system with just a click. Google is just delivering a service and doing good.

I believe this because I believe everything a big high-technology outfit says about the Internet. But there is one facet of this company I find fascinating; namely, its brilliant management of people or humanoids of a particular stripe.

The Backstory

Google employees staged a walkout in 2018, demanding a safer and fairer workplace for women when information about sexual discrimination and pay discrepancies leaked. Google punished the walkout organizers and other employees, but they succeeded in ending the forced arbitration policy that required employees to settle disputes privately. Wired’s article digs into the details: “This Exec Is Forcing Google Into Its First Trial over Sexist Pay Discrimination.”

Google’s first pay discrimination case will be argued in New York. Google cloud unit executive Ulku Rowe alleges she was hired at a lower salary than her male co-workers. When she complained, she claims Google denied her promotions and demoted her. Rowe’s case exposed Google’s executive underbelly.

The case is also a direct result of the walkout:

“The costs and uncertainty of a trial combined with a fear of airing dirty laundry cause companies to settle most pay discrimination lawsuits, says Alex Colvin, dean of Cornell University’s School of Industrial and Labor Relations. Last year, the US government outlawed forced arbitration in sexual harassment and sexual assault cases, but half of US employers still mandate it for other disputes. Rowe would not be scheduled to have her day in court if the walkout had not forced Google to end the practice. “I think that’s a good illustration of why there’s still a push to extend that law to other kinds of cases, including other kinds of gender discrimination,” Colvin says.”

The Outcome

“Google Ordered to Pay $1 Million to Female Exec Who Sued over Gender Discrimination” reported:

A New York jury on Friday decided that Google did commit gender-based discrimination, and now owes Rowe a combined $1.15 million for punitive damages and the pain and suffering it caused. Rowe had 23 years of experience when she started at Google in 2017, and the lawsuit claims she was lowballed at hiring to place her at a level that paid significantly less than what men were being offered.

Observation

It appears that the Googley methods at the Google are neither understood nor appreciated by some people.

Whitney Grace, October 23, 2023

The Path to Success for AI Startups? Fancy Dancing? Pivots? Twisted Ankles?

October 17, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “AI-Enabled SaaS vs Moatless AI.” The buzzwordy title hides a somewhat grim prediction for startups in the AI game. Viggy Balagopalakrishnan (I love that name Viggy) explains that the best shot at success is:

…the only real way to build a strong moat is to build a fuller product. A company that is focused on just AI copywriting for marketing will always stand the risk of being competed away by a larger marketing tool, like a marketing cloud or a creative generation tool from a platform like Google/Meta. A company building an AI layer on top of a CRM or helpdesk tool is very likely to be mimicked by an incumbent SaaS company. The way to solve for this is by building a fuller product.

My interpretation of this comment is that small or focused AI solutions will find competing with big outfits difficult. Some may be acquired. A few may come up with a magic formula for money. But most will fail.

How does that moat work when an AI innovator’s construction is attacked by energy weapons discharged from massive death stars patrolling the commercial landscape? Thanks, MidJourney. Pretty complicated pointy things on the castle with a moat.

Viggy does not touch upon the failure of regulatory entities to slow the growth of companies that some allege are monopolies. One example is the Microsoft game play. Another is the somewhat accommodating investigation of the Google with its closed sessions and odd stance on certain documents.

There are other big outfits as well, and the main idea is that the ecosystem is not set up for most AI plays to survive with huge predators dominating the commercial jungle. That means clever scripts, trade secrets, and agility may not be sufficient to ensure survival.

What does Viggy think? Here’s an X-ray of his perception:

Given that the infrastructure and platform layers are getting reasonably commoditized, the most value driven from AI-fueled productivity is going to be captured by products at the application layer. Particularly in the enterprise products space, I do think a large amount of the value is going to be captured by incumbent SaaS companies, but I’m optimistic that new fuller products with an AI-forward feature set and consequently a meaningful moat will emerge.

How do moats work when Amazon-, Google-, Microsoft-, and Oracle-type outfits just add AI to their commercial products the way the owner of a Ford Bronco installs a lift kit and roof lights?

Productivity? If that means getting rid of humans, I agree. If, to Viggy, the term means smarter and more informed decision making? I am not sure. Moats don’t work in the 21st century. Land mines, surprise attacks, drones, and missiles seem to be more effective. Can small firms deal with the likes of Googzilla, the Bezos bulldozer, and legions of Softies? Maybe. Viggy is an optimist. I am a realist with a touch of radical empiricism, a tasty combo indeed.

Stephen E Arnold, October 17, 2023

Intelware: Some Advanced Technology Is Not So New

October 11, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “European Spyware Consortium Supplied Despots and Dictators.” The article in Spiegel International is a “report” about intelware vendors and a “can you believe this” write up. It identifies a number of companies, past and present, as well as individuals.

The hook is technology that facilitates exfiltration of data from mobile devices. Mobile phones are a fashion item and a must have for many people. It does not take much insight to conclude that data on these ubiquitous gizmos can provide potentially high value information. Even better, putting a software module on a mobile device of a person of interest can save time and expense. Modern intelligence gathering techniques are little more than using technology to minimize the need for humans sitting in automobiles or technicians planting listening devices in interesting locations. The other benefits of technology include real time or near real time data acquisition, geo-location data, access to the digital information about callers and email pals, and data available to the mobile’s ever improving cameras and microphones.

The write up points out:

One message, one link, one click. That’s all it takes to lose control of your digital life, unwittingly and in a matter of seconds.

The write up is story focused, probably because a podcast or a streaming video documentary was in the back of the mind of the writers and possibly Spiegel International itself. If you like write ups that have a slant, you will find the cited article interesting.

I want to mention several facets of the write up which get less attention from “real” journalists.

First, the story of the intelware dates back to the late 1970s. Obviously some of the technology has been around for decades, although refined over time. If this “shady” technology were a problem, why has it persisted, been refined, and pressed into service around the world by many countries? It is tempting to focus on a current activity because it makes a good story, but the context and longevity of some of the systems and methods are interesting to me. But 40 years?

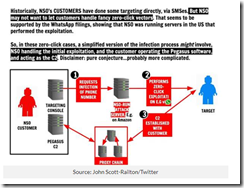

Second, from the late 1970s onward, the block diagrams I have seen presenting the main features of the Amesys system (i2e Technologies) and its direct descendants reveal remarkable robustness. In fact, were one to compare the block diagram for a system provided to a controversial government in North Africa with one of the NSO Group Pegasus block diagrams, one would see that the basics are retained. Why? A good engineering solution remains useful even though certain facets of the system are improved with modern technology. What does this mean? From my point of view, the clever individual or group eager to replicate this type of stealth intelware can do it, just with modern tools and today’s robust cloud environment. The cloud was not a “thing” in 1980, but today it is Teflon for intelware. This means quicker, faster, better, cheaper, and smarter with each iteration.

Source: IT News in Australia

Third, this particular type of intelware is available from specialized software companies worldwide. Want to buy a version from a developer in Spain? No problem. How about a Chinese variety? Cultivate your contacts in Hong Kong or Singapore and your wish will be granted. What about a version from a firm based in India? No problem, just hang out at a telecommunications conference in Mumbai.

Net net: Newer and even more stealthy intelware technologies are available today. Will these be described and stories about the use of them be written? Yep. Will I identify some of these firms? Sure, just attend one of my lectures for law enforcement and intelligence professionals. But the big question is never answered, “Why are these technologies demonstrating such remarkable magnetic appeal?” And a related question, “Why do governments permit these firms to operate?”

Come on, Spiegel International. Write about a more timely approach, not one that is decades old and documented in detail on publicly accessible sources. Oh, is location tracking enabled on your phone to obviate some of the value of Signal, Telegram, and Threema encrypted messaging apps?

PS. Now no clicks are needed. The technology can be deployed when a mobile number is known and connected to a network. There is an exception too. The requisite code can be pre-installed on one’s mobile device. Is that a story? Nah, that cannot be true. I agree.

Stephen E Arnold, October 11, 2023

Getty and Its Licensed Smart Software Art

September 26, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid. (Yep, the dinobaby is back from France. Thanks to those who made the trip professionally and personally enjoyable.)

The illustration shows a very, very happy image rights troll. The cloud of uncertainty from AI generated images has passed. Now the rights software bots, controlled by cheerful copyright trolls, can scour the Web for unauthorized image use. Forget the humanoids. The action will be from tireless AI generators and equally robust bots designed to charge a fee for the image created by zeros and ones. Yes!

A quite joyful copyright troll displays his killer moves. Thanks, MidJourney. The gradient descent continues, right into the legal eagles’ nests.

“Getty Made an AI Generator That Only Trained on Its Licensed Images” reports:

Generative AI by Getty Images (yes, it’s an unwieldy name) is trained only on the vast Getty Images library, including premium content, giving users full copyright indemnification. This means anyone using the tool and publishing the image it created commercially will be legally protected, promises Getty. Getty worked with Nvidia to use its Edify model, available on Nvidia’s generative AI model library Picasso.

This is exciting. Will the images include a tough-to-discern watermark? Will the images include a license plate, a social security number, or just a nifty string of harmless digits?

The article does reveal the money angle:

The company said any photos created with the tool will not be included in the Getty Images and iStock content libraries. Getty will pay creators if it uses their AI-generated image to train the current and future versions of the model. It will share revenues generated from the tool, “allocating both a pro rata share in respect of every file and a share based on traditional licensing revenue.”

Who will be happy? Getty, the trolls, or the designers who have a way to be more productive with a helping hand from the Getty robot? I think the world will be happier because monetization, smart software, and lawyers are a business model with legs… or claws.

Stephen E Arnold, September 26, 2023

Microsoft: Good Enough Just Is Not

September 18, 2023

Was it the Russian hackers? What about the special Chinese department of bad actors? Was it independent criminals eager to impose ransomware on hapless business customers?

No. No. And no.

The manager points his finger at the intern working the graveyard shift and says, “You did this. You are probably worse than those 1,000 Russian hackers orchestrated by the FSB to attack our beloved software. You are a loser.” The intern is embarrassed. Thanks, Mom MJ. You have the hands almost correct… after nine months or so. Gradient descent is your middle name.

“Microsoft Admits Slim Staff and Broken Automation Contributed to Azure Outage” presents an interesting interpretation of another Azure misstep. The report asserts:

Microsoft’s preliminary analysis of an incident that took out its Australia East cloud region last week – and which appears also to have caused trouble for Oracle – attributes the incident in part to insufficient staff numbers on site, slowing recovery efforts.

But not really. The report adds:

The software colossus has blamed the incident on “a utility power sag [that] tripped a subset of the cooling units offline in one datacenter, within one of the Availability Zones.”

Ah, ha. Is the finger of blame like a heat-seeking missile? By golly, it will find something like a hair dryer, fireworks at a wedding where such events are customary, or a passenger aircraft. A great high-tech manager will say, “Oops. Not our fault.”

The Register’s write up points out:

But the document [an official explanation of the misstep] also notes that Microsoft had just three of its own people on site on the night of the outage, and admits that was too few.

Yeah. Work from home? Vacay time? Managerial efficiency planning? Whatever.

My view of this unhappy event is:

- Poor managers making bad decisions

- A drive for efficiency instead of a drive toward excellence

- A Microsoft Bob moment.

More exciting Azure events in the future? Probably. More finger pointing? It is a management method, is it not?

Stephen E Arnold, September 18, 2023

Bankrupting a City: Big Software, Complexity, and Human Shortcomings Do the Trick

September 15, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have noticed failures in a number of systems. I have no empirical data, just anecdotal observations. In the last few weeks, I have noticed glitches in a local hospital’s computer systems. There have been some fascinating cruise ship problems. And the airlines are flying the flag for system ineptitudes. I would be remiss if I did not mention news reports about “near misses” at airports. A popular food chain has suffered six recalls in four or five weeks.

Most of these can be traced to software issues. Others are a hot mess combination of inexperienced staff and fouled up enterprise resource planning workflows. None of the issues were a result of smart software. To correct that oversight, let me mention the propensity of driverless automobiles to mis-identify emergency vehicles or to display some indifference to side street traffic at major intersections.

The information technology manager looks at the collapsing data center and asks, “Who is responsible for this issue?” No one answers. Those with any sense have adopted the van life, set up stalls to sell crafts at local art fairs, or accepted another job. Thanks, MidJourney. I guarantee your slide down the gradient descent is accelerating.

What’s up?

My personal view is that some people do not know how complex software works but depend on it despite that cloud of unknowing. Other people just trust the marketing people and buy what seems better, faster, and cheaper than an existing system which requires lots of money to keep chugging along.

Now we have an interesting case example that incorporates a number of management and technical issues. Birmingham, England is now bankrupt. The reason? The cost of a new system sucked up the cash. My hunch is that King Charles or some other kind soul will keep the city solvent. But the idea of a city going broke because it could not manage a software project is, in my opinion, illustrative of the future.

“Largest Local Government Body in Europe Goes Under amid Oracle Disaster” reports:

Birmingham City Council, the largest local authority in Europe, has declared itself in financial distress after troubled Oracle project costs ballooned from £20 million to around £100 million ($125.5 million).

An extra £80 million would make little difference to an Apple, Google, or Microsoft. To a city in the UK, the cost is a bit of a problem.

Several observations:

- Large project management expertise does not deliver functional solutions. How is that air traffic control or IRS system enhancement going?

- Vendors rely on marketing to close deals, and then expect engineers to just make the system work. If something is incomplete or not yet coded, the failure rate may be anticipated, right? Nope, what’s anticipated is a scope change and billing more money.

- Government agencies are not known for smooth, efficient technical capabilities. Agencies are good at statements of work which require many interesting and often impossible features. The procurement attorneys cannot spot these issues, but those folks ride herd on the legal lingo. Result? Slips betwixt cup and lip.

Are the names of the companies involved important? Nope. The same situation exists when any enterprise software vendor wins a contract based on a wild and wooly statement of work, managed by individuals who are not particularly adept at keeping complex technical work on time and on target, and when big organizations let vendors sell via PowerPoints and demonstrations, not engineering realities.

Net net: More of these types of cases will be coming down the pike.

Stephen E Arnold, September 15, 2023